If you’ve spent any time exploring large language models (LLMs) or natural language processing (NLP) tools, you’ve probably come across the concept of “tokens”.

Two of the most common types of tokens, which you will probably have heard of from various models such as ChatGPT and Gemini and Claude Sonnet, are input tokens and output tokens.

The former is simply the capacity of the input text to the LLM, and the latter is the capacity of the output text the LLM can generate.

These are not just numbers, but they make a huge difference in the applications of LLM and are considered a differentiator between LLMs.

But what exactly are tokens, and how do they translate into word count or text length?

In this post, we’ll walk through:

- A quick definition of tokens.

- How tokens map to words.

- Examples of different token counts, from 1,000 tokens to 2 million tokens.

- How these different sizes might be used in real-world scenarios.

— -

1. What Is a Token?

A token is essentially a chunk of text. In many NLP models:

- Commonly, each token can be roughly 0.75 words (or 3–4 characters, depending on the model and the text).

- Alternatively, you can approximate 1 token ≈ 0.75 words or 1 token ≈ 3–4 characters in English.

But keep in mind that the exact tokenization process depends on the specific tokenizer and the language. For example, short words like “I” could be one token, and punctuation marks can sometimes count as separate tokens. So the approximation of 1 token ≈ ¾ words is just that — an approximation.

— -

2. Mapping Tokens to Words

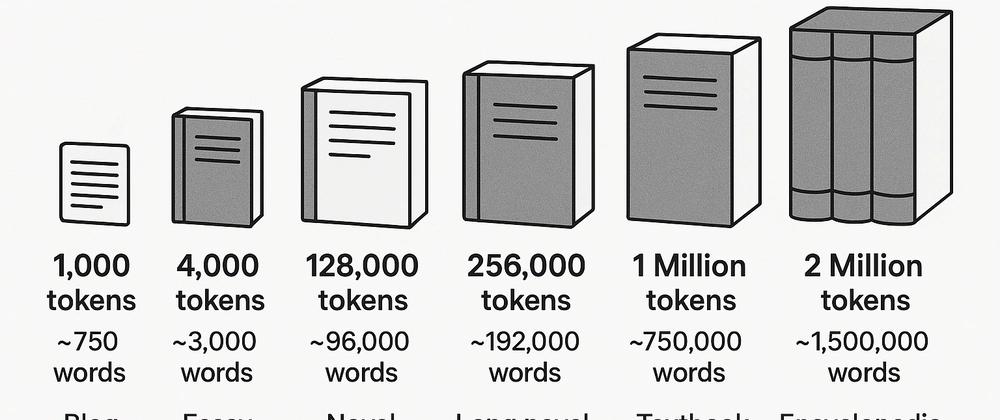

If we use the rough rule of thumb that 1 token is about 0.75 words, then:

- 1,000 tokens ≈ 750 words

- 4,000 tokens ≈ 3,000 words

- 128,000 tokens ≈ 96,000 words

- 256,000 tokens ≈ 192,000 words

- 200,000 tokens ≈ 150,000 words

- 1,000,000 tokens ≈ 750,000 words

- 2,000,000 tokens ≈ 1,500,000 words

These are very rough estimates. In practical terms, the actual word count could vary. If you have a high proportion of short words or special characters, you might need more tokens. Conversely, large words or continuous text might use fewer tokens than expected.

— -

3. Real-Life Examples of Text Length

To give more tangible examples, here’s how many tokens might appear in different text scenarios. (All numbers are approximations based on typical English usage.)

Example 1: 1,000 Tokens (~750 Words)

- Word Count: ~750 words

- Text Size: A blog article that’s roughly one to two pages long (depending on formatting).

- Possible Use Case: A concise opinion piece or a short newsletter article.

Example 2: 4,000 Tokens (~3,000 Words)

- Word Count: ~3,000 words

- Text Size: Equivalent to about 6–8 pages of single-spaced text.

- Possible Use Case: A more in-depth article, a short eBook chapter, or a research summary.

Example 3: 128,000 Tokens (~96,000 Words)

- Word Count: ~96,000 words

- Text Size: This is nearing the length of a full novel (typical novels range from 70,000 to 100,000 words).

- Possible Use Case: This could represent the rough length of a standard full-length fiction or non-fiction book.

Example 4: 256,000 Tokens (~192,000 Words)

- Word Count: ~192,000 words

- Text Size: About double the length of a typical novel; could be considered a large novel or an extensive reference work.

- Possible Use Case: A very comprehensive technical manual or a multi-part series compiled into one volume.

Example 5: 200,000 Tokens (~150,000 Words)

- Word Count: ~150,000 words

- Text Size: Still quite substantial, perhaps a large non-fiction book or a long multi-chapter textbook.

- Possible Use Case: A research compendium, or a very thorough reference text.

Example 6: 1 Million Tokens (~750,000 Words)

- Word Count: ~750,000 words

- Text Size: This is truly massive. For perspective, the entire “Harry Potter” series (7 books) totals around 1.08 million words.

- Possible Use Case: This could encompass several novel-length works combined, or a huge dataset of articles, documentation, or multi-volume textbooks.

Example 7: 2 Million Tokens (~1.5 Million Words)

- Word Count: ~1.5 million words

- Text Size: Extremely large, often spanning multiple lengthy novels or entire encyclopedias.

- Possible Use Case: This scale is where you’d store entire knowledge bases, entire library archives, or massive academic and legal documents.

— -

4. Why These Differences Matter

- Storage and Memory: Larger token counts require more storage, whether it’s on your local computer or in the cloud.

- Processing Time: For many LLM-based applications, the time to analyze or generate text increases with higher token counts.

- Cost: If you’re using a paid model or an API that charges by token usage, bigger token counts can become expensive quickly.

- Context Window Limits: Many language models have a maximum context window size (i.e., the maximum number of tokens you can use in a single prompt or conversation). Exceeding this limit often means you must chunk or summarize text.

— -

5. Putting It All Together

The main takeaway is that “token” is a building block of text in NLP. A token count is simply another way to measure how much text you’re dealing with and how much computational power and cost might be involved in processing it.

As you work with NLP models:

- Always keep an eye on how many tokens your input and output consume.

- Plan for scaling if you expect your projects to involve tens of thousands, hundreds of thousands, or even millions of tokens.

- Use chunking or summarization techniques if you run into context window limitations.

Top comments (0)