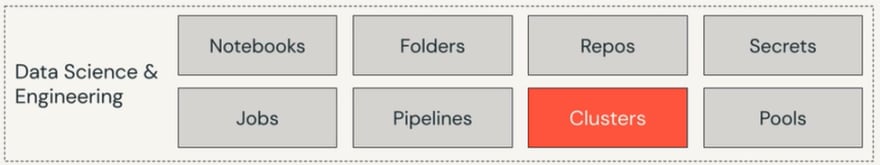

Let's talk about the Data Science & Engineering workspace, the classic Databricks environment for collaboration among Data Scientists, Data Engineers, and Data Analysts. It is the backbone of Databricks' machine learning environment and provides a place to access and manage all of your Databricks assets. You might be asking yourself what sorts of assets I'm talking about. The figure below shows some of the data assets, along with the assets of all three personas, in Databricks.

Sounds interesting, right? Let's go through them one by one in this blog, with a short description of each.

- Data assets, while accessible from the workspace, fall under Unity Catalog, i.e., the Databricks data governance solution.

- These assets include databases, tables, and views. Also under this umbrella are catalogs, the top-level containers that hold databases.

- Storage credentials and external locations, which manage the credentials and paths for accessing files in external cloud storage containers.

- Shares and recipients, which are special assets and principals for use with Delta Sharing, the open-source, high-performance, secure data sharing protocol developed by Databricks.
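To make the Unity Catalog hierarchy above a bit more concrete, here is a minimal sketch that only *builds* the Databricks SQL statements for a catalog, a schema (database), a table, an external location, a share, and a recipient. Every name, the bucket URL, and the storage credential are hypothetical placeholders, the credential is assumed to exist already, and `setup_statements` is an illustrative helper. In a notebook attached to a Unity Catalog-enabled cluster (and with the right privileges) you would pass each string to `spark.sql(...)`.

```python
from typing import List


def ident(name: str) -> str:
    """Backtick-quote one identifier; names containing backticks are rejected for simplicity."""
    if "`" in name:
        raise ValueError(f"unsupported identifier: {name!r}")
    return f"`{name}`"


def setup_statements(catalog: str, schema: str, table: str,
                     location: str, url: str, credential: str,
                     share: str, recipient: str) -> List[str]:
    """Return SQL for a minimal Unity Catalog + Delta Sharing setup (all names hypothetical)."""
    # Three-level namespace: catalog (top-level container) > schema (database) > table.
    fq_schema = f"{ident(catalog)}.{ident(schema)}"
    fq_table = f"{fq_schema}.{ident(table)}"
    return [
        f"CREATE CATALOG IF NOT EXISTS {ident(catalog)}",
        f"CREATE SCHEMA IF NOT EXISTS {fq_schema}",
        f"CREATE TABLE IF NOT EXISTS {fq_table} (order_id BIGINT, amount DOUBLE)",
        # External location: a cloud storage path governed by an existing storage credential.
        f"CREATE EXTERNAL LOCATION IF NOT EXISTS {ident(location)} "
        f"URL '{url}' WITH (STORAGE CREDENTIAL {ident(credential)})",
        # Delta Sharing: a share bundles tables, and a recipient is granted read access to it.
        f"CREATE SHARE IF NOT EXISTS {ident(share)}",
        f"ALTER SHARE {ident(share)} ADD TABLE {fq_table}",
        f"CREATE RECIPIENT IF NOT EXISTS {ident(recipient)}",
        f"GRANT SELECT ON SHARE {ident(share)} TO RECIPIENT {ident(recipient)}",
    ]


statements = setup_statements(
    catalog="sales", schema="reporting", table="orders",
    location="landing_zone", url="s3://example-bucket/landing",
    credential="example_credential", share="sales_share", recipient="partner_co",
)
for stmt in statements:
    print(stmt)  # in a Databricks notebook: spark.sql(stmt)
```

The statements are ordered so that each object exists before anything refers to it. For example, the table must exist before it can be added to the share.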

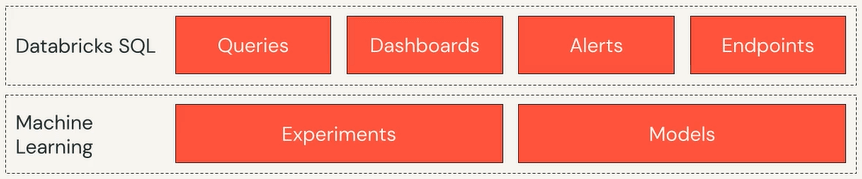

Assets such as queries, dashboards, alerts, and endpoints do not fall under the Data Science & Engineering workspace either. Instead, they are managed and accessed within Databricks SQL. Likewise, experiments and models are managed within Databricks Machine Learning.

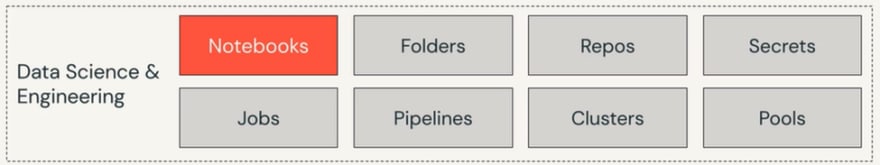

With that out of the way, let's look at the assets that actually live in the Data Science & Engineering workspace:

- Notebooks, arguably the centerpiece of the Data Science & Engineering workspace, provide a web-based interface to documents that contain a series of cells. These cells can contain:
  - Commands that operate on data in any of the languages supported by Databricks.
  - Visualizations that provide a visual interpretation of an output.
  - Narrative text that can be used to document the notebook.
  - Magic commands that let you perform higher-level operations, such as running other notebooks, invoking Databricks Utilities, and more.

  Notebooks are collaborative: they have a built-in history, and users can exchange notes. They can be run interactively or in batch mode as a job through Databricks Workflows.

- Folders provide a file-system-like construct within the workspace to organize workspace assets, such as notebooks.

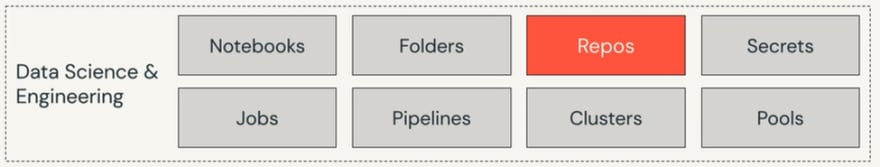
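Under the hood, a notebook really is a sequence of cells. As far as I know, when a Python notebook is exported as source, Databricks writes a `.py` file that starts with a `# Databricks notebook source` line, separates cells with `# COMMAND ----------`, and prefixes each line of a magic-command cell (such as `%md` or `%run`) with `# MAGIC`. The sketch below assumes that export format. It uses a hypothetical `split_cells` helper to split such a file back into cells and report which ones are magic commands.

```python
from typing import List, Tuple

HEADER = "# Databricks notebook source"
SEPARATOR = "# COMMAND ----------"
MAGIC = "# MAGIC"


def _is_magic(line: str) -> bool:
    return line == MAGIC or line.startswith(MAGIC + " ")


def _trim(lines: List[str]) -> List[str]:
    """Drop blank lines at the start and end of a cell, keeping inner ones."""
    start, end = 0, len(lines)
    while start < end and not lines[start].strip():
        start += 1
    while end > start and not lines[end - 1].strip():
        end -= 1
    return lines[start:end]


def split_cells(source: str) -> List[Tuple[str, str]]:
    """Split exported notebook source into (kind, text) pairs.

    kind is the magic command (e.g. "%md", "%run") for magic cells, else "python".
    """
    lines = source.splitlines()
    if lines and lines[0].strip() == HEADER:
        lines = lines[1:]
    raw_cells: List[List[str]] = [[]]
    for line in lines:
        if line.strip() == SEPARATOR:
            raw_cells.append([])  # a separator line starts a new cell
        else:
            raw_cells[-1].append(line)

    cells: List[Tuple[str, str]] = []
    for raw in raw_cells:
        body = _trim(raw)
        if not body:
            continue
        if _is_magic(body[0]):
            # Strip the "# MAGIC " marker to recover the cell's original text.
            text = [ln[len(MAGIC) + 1:] if _is_magic(ln) else ln for ln in body]
            words = text[0].split()
            cells.append((words[0] if words else "magic", "\n".join(text)))
        else:
            cells.append(("python", "\n".join(body)))
    return cells


example = """# Databricks notebook source
# MAGIC %md
# MAGIC # Sales report

# COMMAND ----------

df = spark.range(10)

# COMMAND ----------

# MAGIC %run ./shared/helpers
"""

for kind, text in split_cells(example):
    print(f"{kind:>7} | {text.splitlines()[0]}")
```

The example notebook has three cells: a Markdown cell (`%md`), a plain Python cell, and a `%run` cell that runs another notebook.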

- Notebooks provide a built-in history that can be very handy in many circumstances, but that functionality is limited and falls well short of what a fully fledged CI/CD environment requires. Repos provides the ability to sync notebooks and files with a remote Git repository. It supports pushing and pulling, managing branches, viewing differences, and reverting changes. Repos also provides an API for integration with other CI/CD tools.

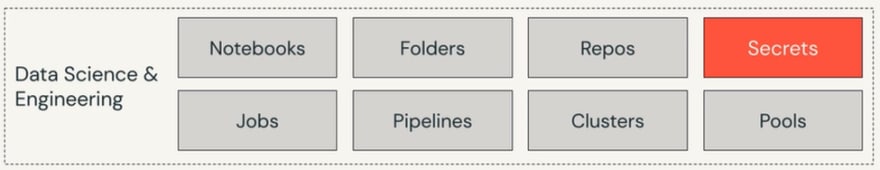
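The Repos API mentioned above is a REST API. This minimal sketch only *builds* two requests:

- One that links a GitHub repository into the workspace (`POST /api/2.0/repos`).
- One that switches the repo to a branch and updates it to that branch's latest commit (`PATCH /api/2.0/repos/{repo_id}`).

The workspace host, token, repository URL, workspace path, and repo ID are hypothetical placeholders. The helper functions are illustrative and not part of any Databricks SDK. `urllib.request.urlopen(req)` would actually send a request.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with your workspace URL and a personal access token.
HOST = "https://example-workspace.cloud.databricks.com"
TOKEN = "dapi-example-token"


def api_request(method: str, path: str, payload: dict) -> urllib.request.Request:
    """Build an authenticated JSON request against the workspace REST API (not sent)."""
    return urllib.request.Request(
        url=f"{HOST}/api/2.0{path}",
        data=json.dumps(payload).encode("utf-8"),
        method=method,
        headers={"Authorization": f"Bearer {TOKEN}",
                 "Content-Type": "application/json"},
    )


def create_repo(git_url: str, workspace_path: str) -> urllib.request.Request:
    # Link a remote GitHub repository into the workspace at workspace_path.
    return api_request("POST", "/repos",
                       {"url": git_url, "provider": "gitHub", "path": workspace_path})


def checkout_branch(repo_id: int, branch: str) -> urllib.request.Request:
    # Switch the repo to `branch` and update it to that branch's latest commit.
    return api_request("PATCH", f"/repos/{repo_id}", {"branch": branch})


clone = create_repo("https://github.com/example-org/etl-project.git",
                    "/Repos/someone@example.com/etl-project")
update = checkout_branch(123456, "main")
print(clone.get_method(), clone.full_url)
print(update.get_method(), update.full_url)
# To actually send one: urllib.request.urlopen(clone)
```

A CI/CD tool could issue the `PATCH` call after each merge so that the workspace copy always tracks the latest commit on `main`.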

- Databricks secrets support security best practices by providing secure key-value storage for sensitive information, allowing you to easily decouple such material from your code.

- Jobs let you run tasks automatically. A job can be simple, with a single task, or a large multitask workflow with complex dependencies. Job tasks can be implemented with notebooks, Delta Live Tables pipelines, or applications written in Python, Scala, or Java, and spark-submit style applications are also supported.

- Delta Live Tables is a framework that delivers a simplified approach for building reliable, maintainable, and testable data processing pipelines. The main unit of execution in Delta Live Tables is a pipeline, which is a directed acyclic graph (DAG) linking data sources to target datasets. Pipelines are implemented using notebooks.

- A cluster is a set of computation resources and configurations on which you run data engineering, data science, and data analytics workloads, such as production ETL pipelines, streaming analytics, ad-hoc analytics, and machine learning. These workloads can consist of a set of commands in a notebook or a workflow that runs jobs or pipelines.

- Pools reduce cluster start and auto-scaling times by maintaining a set of idle, ready-to-use virtual machine instances. When a cluster is attached to a pool, its nodes are created from the pool's idle instances, and new instances are created as needed.
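Here is what decoupling a secret from code looks like in practice. Inside a Databricks notebook, `dbutils.secrets.get(scope=..., key=...)` returns a stored value, so the value itself never appears in the source. In the sketch below:

- The scope name, key names, and JDBC URL are hypothetical.
- `dbutils` is passed in as a parameter. In a notebook it is predefined.
- A small stub class stands in for `dbutils` so the example runs outside Databricks.

```python
from typing import Any, Dict


def jdbc_options(dbutils: Any, scope: str, url: str) -> Dict[str, str]:
    """Build JDBC reader options, pulling credentials from a secret scope instead of code."""
    return {
        "url": url,
        "user": dbutils.secrets.get(scope=scope, key="db-user"),
        "password": dbutils.secrets.get(scope=scope, key="db-password"),
    }


class _StubSecrets:
    """Local stand-in for dbutils.secrets, only for running this sketch off-platform."""

    def __init__(self, store: Dict[tuple, str]) -> None:
        self._store = store

    def get(self, scope: str, key: str) -> str:
        return self._store[(scope, key)]


class StubDbutils:
    def __init__(self, store: Dict[tuple, str]) -> None:
        self.secrets = _StubSecrets(store)


fake = StubDbutils({("warehouse", "db-user"): "etl_user",
                    ("warehouse", "db-password"): "not-a-real-password"})
opts = jdbc_options(fake, "warehouse", "jdbc:postgresql://db.example.com:5432/sales")
# In a notebook you would call jdbc_options(dbutils, ...) and then, for example:
# spark.read.format("jdbc").options(**opts).option("dbtable", "orders").load()
print(sorted(opts))
```

Because the function only receives a scope name, the same code can point at different secret scopes in different environments without editing it.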
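A multitask job, as described in the Jobs bullet above, is defined declaratively. The sketch below writes a `tasks` list in the shape used by the Jobs API 2.1 (`POST /api/2.1/jobs/create`). It contains a Delta Live Tables pipeline task, a notebook task, a Python task, and a spark-submit task. It then groups the tasks into stages that could run in parallel once their `depends_on` predecessors finish.

Some caveats for this sketch:

- The job name, paths, pipeline ID, and JAR are hypothetical.
- Cluster settings (`existing_cluster_id`, `new_cluster`, or `job_cluster_key` per task) are omitted for brevity.
- `run_stages` is an illustrative helper, not how the Jobs scheduler is implemented.

```python
from typing import Dict, List, Set

job_spec = {
    "name": "nightly-sales",  # hypothetical job name
    "tasks": [
        {"task_key": "ingest",
         "pipeline_task": {"pipeline_id": "<dlt-pipeline-id>"}},
        {"task_key": "transform",
         "depends_on": [{"task_key": "ingest"}],
         "notebook_task": {"notebook_path": "/Repos/team/etl/transform"}},
        {"task_key": "score",
         "depends_on": [{"task_key": "transform"}],
         "spark_python_task": {"python_file": "dbfs:/jobs/score.py"}},
        {"task_key": "export",
         "depends_on": [{"task_key": "transform"}],
         "spark_submit_task": {"parameters": ["--class", "com.example.Export",
                                              "dbfs:/jars/export.jar"]}},
    ],
}


def run_stages(tasks: List[dict]) -> List[List[str]]:
    """Group task keys into stages; each stage depends only on earlier stages."""
    deps: Dict[str, Set[str]] = {
        t["task_key"]: {d["task_key"] for d in t.get("depends_on", [])} for t in tasks
    }
    unknown = set().union(*deps.values()) - set(deps)
    if unknown:
        raise ValueError(f"depends_on references unknown tasks: {sorted(unknown)}")
    stages: List[List[str]] = []
    done: Set[str] = set()
    while len(done) < len(deps):
        # A task is ready when all of its upstream tasks have completed.
        ready = sorted(k for k, up in deps.items() if k not in done and up <= done)
        if not ready:
            raise ValueError("dependency cycle detected")
        stages.append(ready)
        done.update(ready)
    return stages


print(run_stages(job_spec["tasks"]))
# [['ingest'], ['transform'], ['export', 'score']]
```

`score` and `export` both depend only on `transform`, so they land in the same stage and could run side by side.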
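The Delta Live Tables bullet above says a pipeline is a DAG. In a real Delta Live Tables notebook, you declare each dataset as a Python function decorated with `@dlt.table`, read upstream datasets with `dlt.read(...)`, and the framework works out the graph. The toy below does **not** use the `dlt` module. Its hypothetical `table(inputs=...)` decorator only records each dataset's declared inputs, and Python's standard `graphlib` then yields an order in which every dataset comes after its sources. The dataset names are made up.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List

# Toy registry: dataset name -> names of the datasets it reads from.
REGISTRY: Dict[str, List[str]] = {}


def table(inputs: List[str]) -> Callable[[Callable], Callable]:
    """Hypothetical stand-in for @dlt.table that records declared upstream datasets."""
    def register(fn: Callable) -> Callable:
        REGISTRY[fn.__name__] = list(inputs)
        return fn
    return register


@table(inputs=[])
def raw_orders():
    ...  # a real pipeline would ingest source files here


@table(inputs=["raw_orders"])
def clean_orders():
    ...  # e.g. filter and type-cast raw_orders


@table(inputs=["clean_orders"])
def daily_revenue():
    ...


@table(inputs=["clean_orders"])
def top_customers():
    ...


def update_order() -> List[str]:
    # TopologicalSorter expects {node: predecessors}; a CycleError means the graph is not a DAG.
    return list(TopologicalSorter(REGISTRY).static_order())


print(update_order())
```

The relative order of `daily_revenue` and `top_customers` is not fixed, because neither depends on the other. That independence is what lets a DAG-based engine update them in parallel.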
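Clusters and pools, as described in the last two bullets above, are both configured through JSON specs. The sketch below, using placeholder values, includes:

- A pool spec in the shape accepted by the Instance Pools API.
- An autoscaling cluster spec for the Clusters API.
- A hypothetical `attach_to_pool` helper that points the cluster at the pool via `instance_pool_id`. The pool then determines the node type, so `node_type_id` is dropped.
- `acquire_nodes`, a deliberately simplified toy model of the "use idle instances first, create new ones as needed" behavior. It is not Databricks' actual allocation logic.

The runtime version, node type, and pool ID are placeholders.

```python
from typing import Tuple

pool_spec = {
    "instance_pool_name": "etl-pool",
    "node_type_id": "<node-type-id>",      # placeholder, cloud-specific
    "min_idle_instances": 2,               # kept warm and ready to use
    "max_capacity": 10,                    # idle + in-use instances
    "idle_instance_autotermination_minutes": 15,
}

cluster_spec = {
    "cluster_name": "etl-cluster",
    "spark_version": "<databricks-runtime-version>",  # placeholder
    "node_type_id": "<node-type-id>",
    "autoscale": {"min_workers": 2, "max_workers": 8},
    "autotermination_minutes": 30,
}


def attach_to_pool(spec: dict, pool_id: str) -> dict:
    """Return a copy of a cluster spec that draws its nodes from an instance pool."""
    pooled = {k: v for k, v in spec.items() if k != "node_type_id"}
    pooled["instance_pool_id"] = pool_id
    return pooled


def acquire_nodes(idle: int, in_use: int, max_capacity: int, requested: int) -> Tuple[int, int]:
    """Toy model: split a node request into (taken from idle instances, newly launched)."""
    from_idle = min(idle, requested)
    headroom = max(max_capacity - in_use - idle, 0)  # instances the pool may still launch
    launched = min(requested - from_idle, headroom)
    return from_idle, launched


pooled_cluster = attach_to_pool(cluster_spec, "<instance-pool-id>")
print(sorted(pooled_cluster))
print(acquire_nodes(idle=2, in_use=0, max_capacity=10, requested=5))  # (2, 3)
```

In the toy run, two of the five requested nodes come straight from warm idle instances, which is where the faster start-up comes from. The remaining three still have to be launched.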

NOTE: References and images in this blog are taken from the Databricks site and courses.

That's it for this blog. I hope you liked it. Kindly do follow!
