Problem

Docker Hub introduced rate limits on image pulls on November 2, 2020, which caused a lot of headaches for anyone relying on it in their environment. The following limits were introduced:

- Anonymous Users: Limited to 100 pulls per 6 hours

- Authenticated Users: Limited to 200 pulls per 6 hours

Many of us migrated to private registries, quay.io, ghcr.io, registry.gitlab.com, and others. For some, it was enough to use an authentication token to pull from Docker Hub.

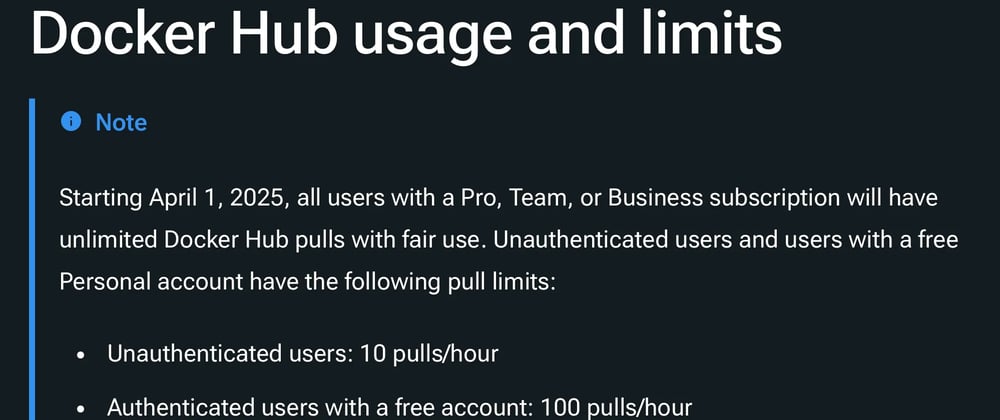

Starting April 1, 2025, the limits are even stricter:

- Personal (authenticated): Limited to 100 pulls per hour

- Unauthenticated Users: Limited to 10 pulls per IPv4 address or IPv6 /64 subnet per hour

Solution

Let’s see what AWS offers to solve the rate-limit problem, with a few extra perks along the way.

In November 2021, AWS introduced the pull-through cache feature for Amazon Elastic Container Registry (Amazon ECR), which gives us:

- caching of public and private upstream images (using an authentication token where needed) in your private ECR registry

- faster pulls from your private ECR to your AWS workloads (ECS, EKS, Lambda functions, etc.)

- lifecycle policies to keep only the required number of latest tags

- security scanning of images during pull

- a single place to update your token on rotation or expiration (e.g., GitLab doesn’t let you create tokens that expire more than one year out). Just imagine going through the credentials in every K8s cluster once a year to update tokens.

So, let’s dive into the implementation of this feature. To make it work, you need to create:

- a pull-through cache rule, where you define the prefix to use in your registry and the upstream registry to which all requests are forwarded

- AWS Secrets Manager credentials used to authenticate to the upstream registry

- a repository creation template for the pull-through cache, if you want a lifecycle policy, security scans, and some other features (optional at this stage)

Note that you don’t create the repository itself: it is created automatically on the first pull through the cache, using default settings or a template if you have assigned one.
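For reference, the first two pieces for a single Docker Hub rule could be sketched in Terraform like this, assuming the AWS provider is already configured for the target region. The resource labels and the user/token values are placeholders; the ecr-pullthroughcache/ name prefix and the username/accessToken keys are what ECR expects for upstream credentials.

```hcl
# Upstream credentials for Docker Hub. ECR only accepts secrets whose
# name starts with "ecr-pullthroughcache/".
resource "aws_secretsmanager_secret" "dockerhub" {
  name = "ecr-pullthroughcache/dockerhub"
}

resource "aws_secretsmanager_secret_version" "dockerhub" {
  secret_id = aws_secretsmanager_secret.dockerhub.id

  # Placeholder values: ECR expects the "username" and "accessToken" keys
  secret_string = jsonencode({
    username    = "user"
    accessToken = "token"
  })
}

# Pulls of <account>.dkr.ecr.<region>.amazonaws.com/dockerhub/... are
# forwarded to Docker Hub using the credentials above
resource "aws_ecr_pull_through_cache_rule" "dockerhub" {
  ecr_repository_prefix = "dockerhub"
  upstream_registry_url = "registry-1.docker.io"
  credential_arn        = aws_secretsmanager_secret.dockerhub.arn

  # Make sure the secret has a value before the rule is created
  depends_on = [aws_secretsmanager_secret_version.dockerhub]
}
```

Hardcoding the token is only for illustration; real credentials should come from an encrypted source rather than plain-text config.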

For example, with a rule that uses the prefix dockerhub in the us-east-1 region and forwards pull requests to registry-1.docker.io, I can pull the same image either directly or through ECR:

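```shell
# direct pull from Docker Hub
docker pull timberio/vector:0.45.0-alpine

# pull through ECR (authenticate Docker to your private registry first)
aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin 123456789012.dkr.ecr.us-east-1.amazonaws.com
docker pull 123456789012.dkr.ecr.us-east-1.amazonaws.com/dockerhub/timberio/vector:0.45.0-alpine
```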

The only changes are my registry address and the dockerhub prefix in front of the original image name. That is all you need to update in your manifests to pull images through ECR, which is pretty straightforward.
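If your manifests or Helm values are rendered by Terraform, the ECR reference can be derived instead of hardcoded. A sketch, assuming the AWS provider points at the region where the rule lives and reusing the dockerhub prefix from the example; the local names are illustrative. One detail the vector example doesn’t show: Docker Hub official images such as nginx live under the implicit library/ namespace, which has to be written out when pulling through the cache.

```hcl
data "aws_caller_identity" "current" {}
data "aws_region" "current" {}

locals {
  # Private registry host, e.g. 123456789012.dkr.ecr.us-east-1.amazonaws.com
  ecr_registry = "${data.aws_caller_identity.current.account_id}.dkr.ecr.${data.aws_region.current.name}.amazonaws.com"

  # Same image as the direct Docker Hub pull, routed through the "dockerhub" rule
  vector_image = "${local.ecr_registry}/dockerhub/timberio/vector:0.45.0-alpine"

  # Official images (pulled as plain "nginx" from Docker Hub) need the
  # implicit "library/" namespace when pulled through the cache
  nginx_image = "${local.ecr_registry}/dockerhub/library/nginx:1.27-alpine"
}
```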

Automation

Sounds easy, but why not automate it with Terraform (wrapped in Terragrunt)? I created a simple Terraform module that creates multiple pull-through cache rules from a basic YAML file with the following structure:

```yaml
dockerhub:
  registry: registry-1.docker.io
  username: user
  accessToken: token
gitlab:
  registry: registry.gitlab.com
  username: user
  accessToken: token
```

The module then creates two rules with the prefixes dockerhub and gitlab, Secrets Manager credentials holding the username and accessToken, and a basic lifecycle policy that keeps only the 3 latest images in the cache (it would make sense to extend the YAML with per-registry settings, but I haven’t had time for that yet).
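The lifecycle part could look roughly like the sketch below, assuming a registries variable shaped like the YAML above. The variable and resource names are hypothetical and not necessarily what the module uses. A repository creation template whose prefix matches a rule’s prefix and that applies to PULL_THROUGH_CACHE is used when ECR creates the cache repository on the first pull.

```hcl
# Shape of the decoded YAML: prefix => upstream registry and credentials
variable "registries" {
  type = map(object({
    registry    = string
    username    = string
    accessToken = string
  }))
}

# One template per prefix; it applies to repositories that ECR creates
# for the pull-through cache rule with the same prefix
resource "aws_ecr_repository_creation_template" "this" {
  for_each = var.registries

  prefix      = each.key
  applied_for = ["PULL_THROUGH_CACHE"]

  # Expire everything except the 3 most recent images in each cache repository
  lifecycle_policy = jsonencode({
    rules = [{
      rulePriority = 1
      description  = "Keep only the 3 latest images"
      selection = {
        tagStatus   = "any"
        countType   = "imageCountMoreThan"
        countNumber = 3
      }
      action = {
        type = "expire"
      }
    }]
  })
}
```

Templates only apply when a repository is created, so a cache repository that already exists keeps its current settings.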

Because I’m using Terragrunt, I can encrypt this file with SOPS and safely commit it to the repository, so my terragrunt.hcl looks like this:

```hcl
terraform {
  source = "${get_parent_terragrunt_dir()}/modules/ecr-pullthrough"
}

include "root" {
  path   = find_in_parent_folders("root.hcl")
  expose = true
}

locals {
  # Decrypt the SOPS-encrypted YAML and parse it into a map of registries
  registries = yamldecode(sops_decrypt_file("${get_terragrunt_dir()}/secrets.enc.yaml"))
}

inputs = {
  registries = local.registries
  tags       = merge(include.root.locals.default_tags)
}
```

You can find my module on GitHub: https://github.com/opsworks-co/ecr-pull-through

If you use the https://github.com/terraform-aws-modules/terraform-aws-eks module to create your cluster and rely on the built-in role it creates for the worker nodes, don’t forget to extend that role with a policy that allows ecr:BatchImportUpstreamImage. By default, the module attaches the managed policy arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryPullOnly, which doesn’t allow pulling through the cache.
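A sketch of that extension, assuming EKS managed node groups configured through the module’s iam_role_additional_policies input; the policy name and the node group key are placeholders, and the rest of the module configuration is omitted. Besides ecr:BatchImportUpstreamImage, AWS documents ecr:CreateRepository as needed when the cache repository doesn’t exist yet, so the sketch grants both.

```hcl
# Extra permissions for the worker node role so the first pull of an image
# can create the cache repository and import the upstream image
resource "aws_iam_policy" "ecr_pull_through_cache" {
  name        = "ecr-pull-through-cache"
  description = "Allow EKS nodes to populate ECR pull-through cache repositories"

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect = "Allow"
      Action = [
        "ecr:BatchImportUpstreamImage",
        "ecr:CreateRepository",
      ]
      # Can be narrowed to your cache prefixes, e.g. repository/dockerhub/*
      Resource = "*"
    }]
  })
}

module "eks" {
  source = "terraform-aws-modules/eks/aws"

  # ... cluster name, version, networking and other settings omitted ...

  eks_managed_node_groups = {
    default = {
      # Attached alongside the managed policies the module already adds
      iam_role_additional_policies = {
        ecr_pull_through_cache = aws_iam_policy.ecr_pull_through_cache.arn
      }
    }
  }
}
```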

I’m looking forward to your comments, suggestions, and improvements!

Follow me on Medium, connect on LinkedIn!
