In our last post, we enabled audit logs using parameter groups in Aurora Postgres.

Now that we are collecting the required Aurora logs in CloudWatch, we need to filter those logs and send them to S3 for archiving, analysis, and long-term storage.

Why is this useful?

We can set a short retention period on the CloudWatch log group and keep the audit logs long term in S3, which helps reduce cost. In other use cases, we could also send the logs to another external destination for auditing or analysis.
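As a rough sketch of the retention side, the Python snippet below sets a retention period on a log group through the CloudWatch Logs `PutRetentionPolicy` API. Using boto3 is an assumption (this post uses the console), and the set of allowed values is the one documented for that API at the time of writing.

```python
# Sketch: shorten CloudWatch Logs retention once logs are archived in S3.
# Assumption: a boto3 CloudWatch Logs client is passed in by the caller.

# Retention periods (in days) accepted by PutRetentionPolicy at the time of writing.
VALID_RETENTION_DAYS = {
    1, 3, 5, 7, 14, 30, 60, 90, 120, 150, 180, 365, 400, 545,
    731, 1096, 1827, 2192, 2557, 2922, 3288, 3653,
}


def set_log_retention(logs_client, log_group_name: str, days: int) -> None:
    """Apply a retention period to a log group, rejecting unsupported values early."""
    if days not in VALID_RETENTION_DAYS:
        raise ValueError(f"{days} is not a supported CloudWatch Logs retention period")
    logs_client.put_retention_policy(logGroupName=log_group_name, retentionInDays=days)
```

For example, `set_log_retention(boto3.client("logs"), "/aws/rds/cluster/<cluster-name>/postgresql", 30)` would keep 30 days of logs in CloudWatch while S3 holds the long-term copy. The cluster name is a placeholder. The client is passed in as a parameter so the function can be exercised without AWS credentials.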

At this point, I assume you already have your application logs in CloudWatch. In our case, we are collecting Aurora logs in CloudWatch as explained in the previous part of this series, although the steps below should work for any CloudWatch log group.

To send logs from CloudWatch to S3, we will create a subscription filter, which streams log data to a destination in near real time.

What is Subscription Filter in CloudWatch?

A CloudWatch subscription filter matches log events against a filter pattern and delivers the matching events to another AWS service. A log group can have more than one subscription filter, so the same logs can be delivered to multiple destinations.

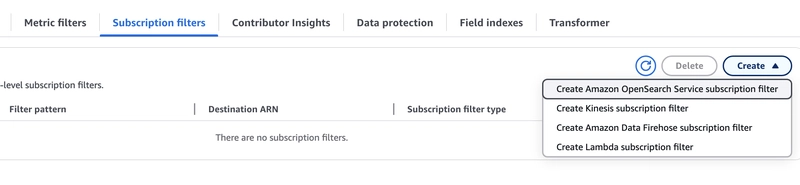

CloudWatch supports several destination services for a subscription filter:

- OpenSearch

- Kinesis

- Data Firehose

- Lambda

We will use Firehose given our log volume and cost, and because Firehose is comparatively easy to set up for our goal of streaming logs to S3.

Firehose can also transform records or convert their format before delivering them to S3.
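This walkthrough does not configure a transformation, but here is what one could look like. CloudWatch Logs delivers each record to Firehose as gzip-compressed JSON (with fields such as `messageType`, `logGroup`, and `logEvents`). A Firehose transformation Lambda must return every `recordId` with a `result` of `Ok`, `Dropped`, or `ProcessingFailed`, plus base64-encoded `data`. The hypothetical handler below is not part of this post's setup. It unpacks the CloudWatch payload into newline-delimited log lines and drops the `CONTROL_MESSAGE` records that CloudWatch sends to check the destination.

```python
import base64
import gzip
import json


def lambda_handler(event, context):
    """Sketch of a Firehose transformation Lambda for CloudWatch Logs records."""
    output = []
    for record in event["records"]:
        # Each record is base64-encoded, gzip-compressed CloudWatch Logs JSON.
        payload = json.loads(gzip.decompress(base64.b64decode(record["data"])))
        if payload.get("messageType") != "DATA_MESSAGE":
            # CONTROL_MESSAGE records carry no log events, so drop them.
            output.append({"recordId": record["recordId"], "result": "Dropped", "data": record["data"]})
            continue
        # Emit one log message per line so the S3 objects become plain text.
        lines = "".join(event_["message"] + "\n" for event_ in payload["logEvents"])
        output.append({
            "recordId": record["recordId"],
            "result": "Ok",
            "data": base64.b64encode(lines.encode("utf-8")).decode("ascii"),
        })
    return {"records": output}
```

Writing one message per line keeps the archived objects simple to read. The trade-off is that the log group and stream metadata is lost unless you add it to each line.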

We will follow these steps:

- Create S3 bucket

- Create Firehose Stream

- Create IAM role for Firehose

- Create CloudWatch subscription filter

- Validation

We follow this order because the Firehose stream needs an existing S3 bucket as its destination, and the CloudWatch subscription filter needs an existing Firehose stream.

Step 1: Create an S3 bucket

Creating an S3 bucket is straightforward: open the S3 console and create a bucket with the default settings.

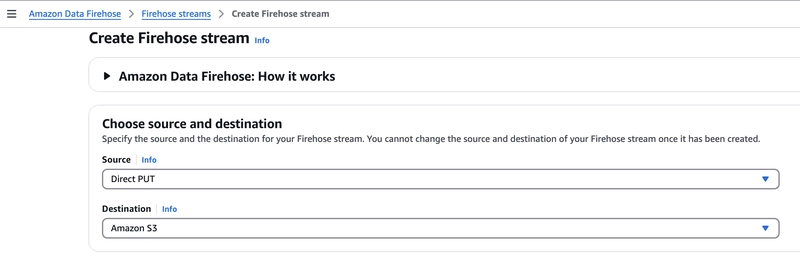
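If you prefer to script this step, a minimal boto3 equivalent might look like the following. It is a sketch that assumes configured AWS credentials. The bucket name and region are your own values.

```python
def create_archive_bucket(s3_client, bucket_name: str, region: str) -> None:
    """Create an S3 bucket with default settings in the given region (boto3 sketch)."""
    kwargs = {"Bucket": bucket_name}
    # us-east-1 is S3's default location; a LocationConstraint is only sent for other regions.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    s3_client.create_bucket(**kwargs)
```

For example, `create_archive_bucket(boto3.client("s3", region_name="eu-west-1"), "my-aurora-log-archive", "eu-west-1")`, where the bucket name is hypothetical.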

Step 2: Create a Firehose stream

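Choose Direct PUT as the source and Amazon S3 as the destination, then select the bucket from Step 1. You can keep the default option that lets Firehose create its IAM role automatically.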

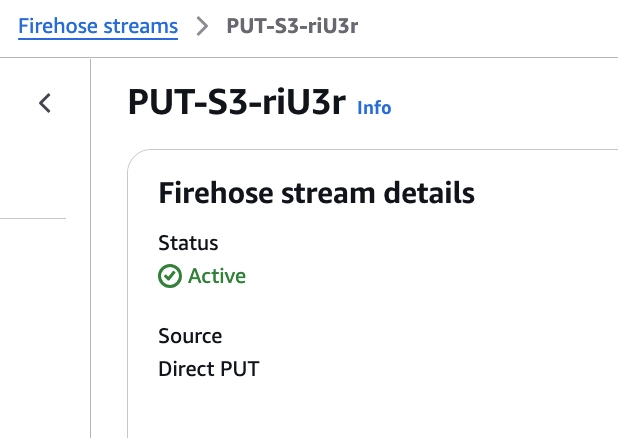

It can take a few minutes for the Firehose stream to be created. Once it is ready, it shows the Active status.

Note: We can't change the destination of a Firehose stream after creating it.
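A scripted version of this step could call the Firehose `CreateDeliveryStream` API and then poll `DescribeDeliveryStream` until the status is `ACTIVE`. This sketch assumes you already have an IAM role that Firehose can assume to write to the bucket (the console creates one for you). The buffering values are illustrative. The stream name, bucket ARN, and role ARN are placeholders.

```python
import time


def create_s3_delivery_stream(firehose_client, stream_name, bucket_arn, role_arn, poll_seconds=10):
    """Create a Direct PUT Firehose stream that delivers to S3, then wait until it is ACTIVE."""
    firehose_client.create_delivery_stream(
        DeliveryStreamName=stream_name,
        DeliveryStreamType="DirectPut",  # CloudWatch Logs subscription filters write via Direct PUT
        ExtendedS3DestinationConfiguration={
            "RoleARN": role_arn,  # role Firehose assumes to write into the bucket (assumed to exist)
            "BucketARN": bucket_arn,
            "BufferingHints": {"SizeInMBs": 5, "IntervalInSeconds": 300},  # illustrative values
            # CloudWatch Logs records already arrive gzip-compressed, so no extra compression here.
            "CompressionFormat": "UNCOMPRESSED",
        },
    )
    return wait_until_active(firehose_client, stream_name, poll_seconds)


def wait_until_active(firehose_client, stream_name, poll_seconds=10, max_attempts=60):
    """Poll the stream status; fail fast on *_FAILED states and give up after max_attempts."""
    for _ in range(max_attempts):
        desc = firehose_client.describe_delivery_stream(DeliveryStreamName=stream_name)
        status = desc["DeliveryStreamDescription"]["DeliveryStreamStatus"]
        if status == "ACTIVE":
            return status
        if status.endswith("FAILED"):
            raise RuntimeError(f"stream {stream_name} entered status {status}")
        time.sleep(poll_seconds)
    raise TimeoutError(f"stream {stream_name} did not become ACTIVE in time")
```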

Step 3: Create an IAM role that allows CloudWatch Logs to write to Firehose

First, create an IAM policy that allows writing to the Firehose stream:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowPutToFirehose",
      "Effect": "Allow",
      "Action": [
        "firehose:PutRecord",
        "firehose:PutRecordBatch"
      ],
      "Resource": "arn:aws:firehose:<region>:<account-id>:deliverystream/<your-firehose-name>"
    }
  ]
}
```
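To avoid hand-editing the placeholders, you can generate this policy from the region, account ID, and stream name. The following is a small Python sketch. The values used in the example call are hypothetical.

```python
def firehose_put_policy(region: str, account_id: str, stream_name: str) -> dict:
    """Build the IAM policy that lets the CloudWatch Logs role write to one Firehose stream."""
    stream_arn = f"arn:aws:firehose:{region}:{account_id}:deliverystream/{stream_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowPutToFirehose",
                "Effect": "Allow",
                "Action": ["firehose:PutRecord", "firehose:PutRecordBatch"],
                "Resource": stream_arn,  # scoped to a single stream, not "*"
            }
        ],
    }
```

For example, `json.dumps(firehose_put_policy("us-east-1", "123456789012", "aurora-logs"), indent=2)` produces a document you can paste into the IAM console.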

Then create an IAM role named LogsToFirehose, attach the policy above, and set its trust policy to:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "logs.<region>.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```

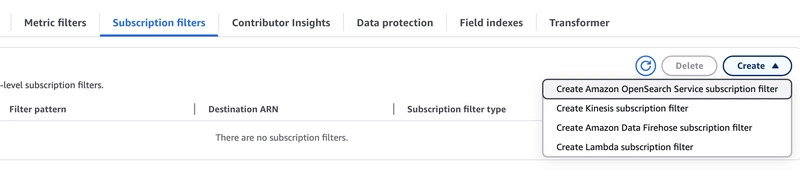
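You can also create the role with boto3. This sketch differs slightly from the console steps: it attaches the permissions as an inline policy with `put_role_policy` instead of a separately created managed policy. The role name `LogsToFirehose` comes from this post. The inline policy name is a hypothetical choice. You pass in the permissions policy as a dict, for example the JSON policy from this step with its placeholders filled in.

```python
import json


def logs_trust_policy(region: str) -> dict:
    """Trust policy letting the regional CloudWatch Logs service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": f"logs.{region}.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def create_logs_to_firehose_role(iam_client, region, permissions_policy, role_name="LogsToFirehose"):
    """Create the role CloudWatch Logs assumes and attach the Firehose permissions inline."""
    role = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(logs_trust_policy(region)),
    )
    iam_client.put_role_policy(
        RoleName=role_name,
        PolicyName="AllowPutToFirehose",  # hypothetical inline policy name
        PolicyDocument=json.dumps(permissions_policy),
    )
    # This ARN is what the subscription filter needs as its role.
    return role["Role"]["Arn"]
```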

Step 4: Create a CloudWatch subscription filter

Now, switch back to our log group in CloudWatch.

Click Create Amazon Data Firehose subscription filter.

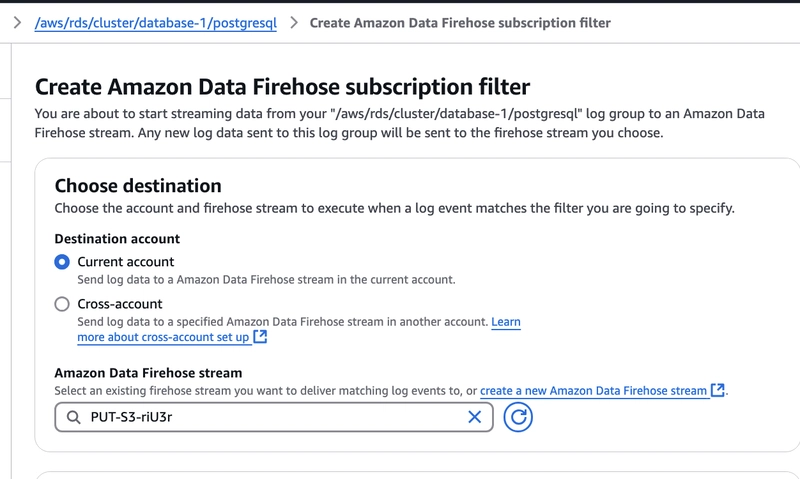

After entering a filter name, select the Firehose stream in the current account that we created in Step 2.

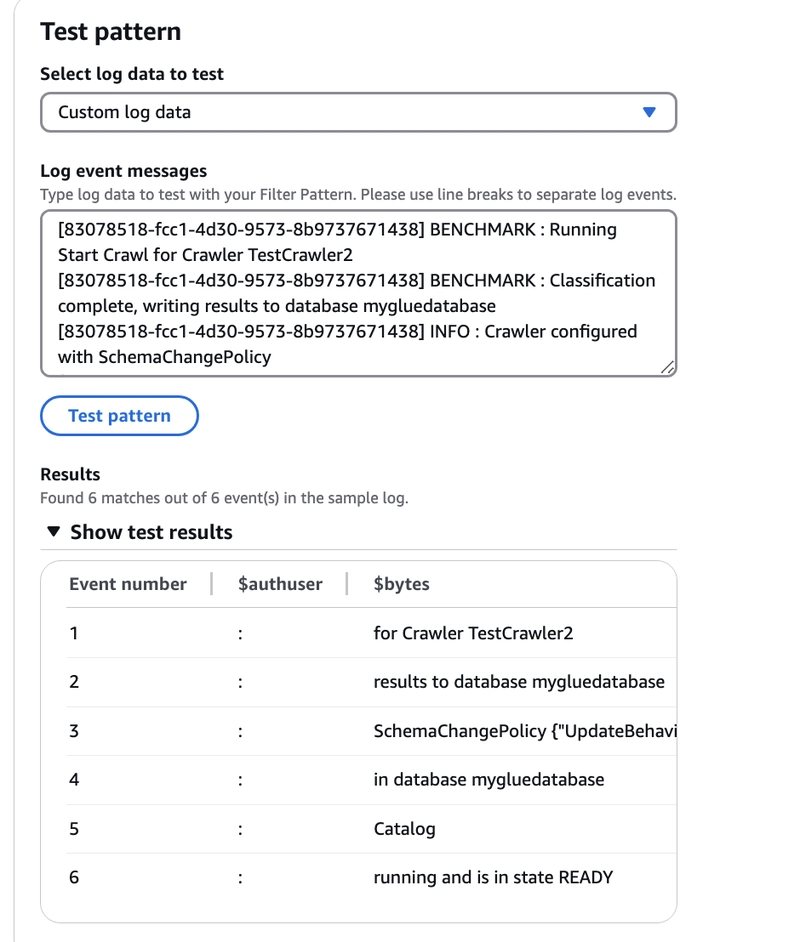

Optionally, we can add a filter pattern to filter the logs further before they are sent to Firehose, and add a prefix.

Next, select the IAM role we created in Step 3, which grants CloudWatch Logs permission to send log events to Firehose. Then click the Create subscription button.

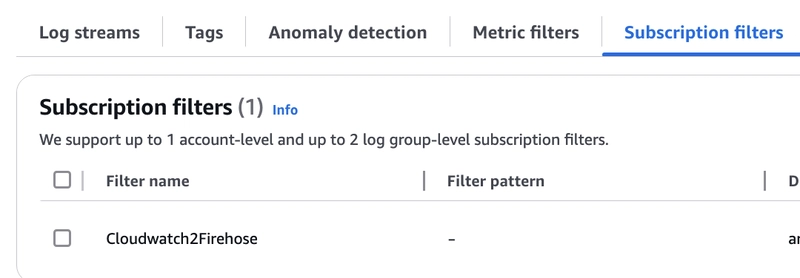

We should see the subscription filter added to our log group like this.
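The console steps in Step 4 correspond to the CloudWatch Logs `PutSubscriptionFilter` API. In this boto3 sketch, the default filter name is hypothetical. An empty `filterPattern` forwards every event in the log group.

```python
def subscribe_log_group_to_firehose(logs_client, log_group_name, stream_arn, role_arn,
                                    filter_name="aurora-logs-to-firehose", filter_pattern=""):
    """Stream a log group to a Firehose stream; an empty pattern forwards every event."""
    logs_client.put_subscription_filter(
        logGroupName=log_group_name,
        filterName=filter_name,  # hypothetical default name
        filterPattern=filter_pattern,
        destinationArn=stream_arn,  # arn:aws:firehose:<region>:<account-id>:deliverystream/<name>
        roleArn=role_arn,  # the LogsToFirehose role from Step 3
    )
```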

Step 5: Validate the logs

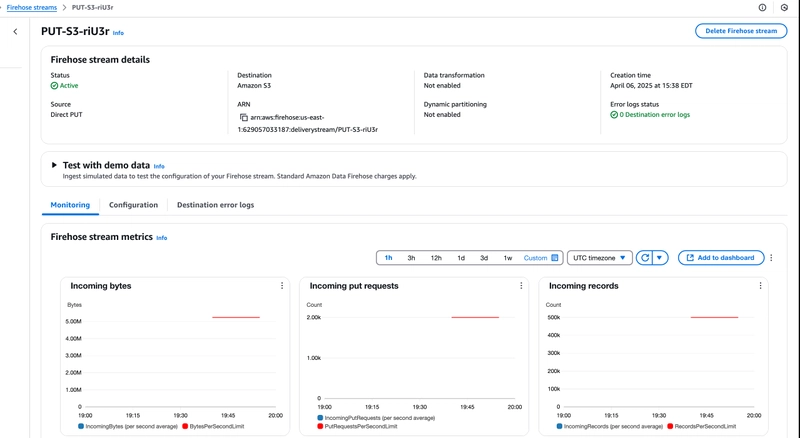

After creating the subscription filter, check the Firehose stream's monitoring metrics to see whether data is being received.

Our metrics show that logs are being collected.
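You can script the same check with CloudWatch `GetMetricStatistics` against the `AWS/Firehose` namespace. Firehose publishes metrics such as `IncomingRecords` and `DeliveryToS3.Records` per `DeliveryStreamName`. This sketch sums one metric over a recent window. The 300-second period and one-hour default window are arbitrary choices.

```python
from datetime import datetime, timedelta, timezone


def firehose_metric_sum(cw_client, stream_name, metric_name="IncomingRecords", hours=1):
    """Sum a Firehose metric for one stream over the last `hours` hours."""
    end = datetime.now(timezone.utc)
    response = cw_client.get_metric_statistics(
        Namespace="AWS/Firehose",
        MetricName=metric_name,
        Dimensions=[{"Name": "DeliveryStreamName", "Value": stream_name}],
        StartTime=end - timedelta(hours=hours),
        EndTime=end,
        Period=300,  # 5-minute buckets
        Statistics=["Sum"],
    )
    # No datapoints (sum of 0) usually means nothing has reached the stream yet.
    return sum(point["Sum"] for point in response["Datapoints"])
```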

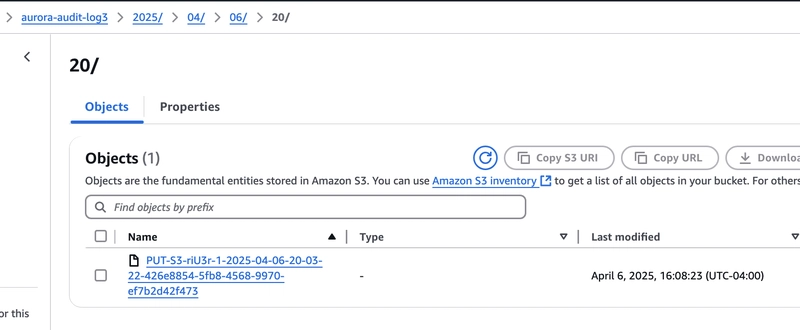

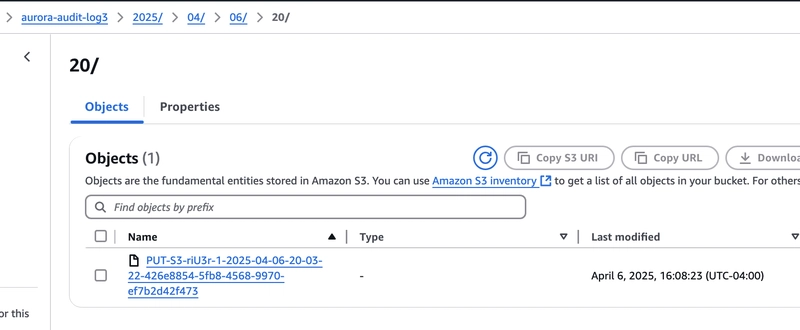

Finally, go to S3, our final destination, to confirm that the logs are arriving in the bucket.

We should see the logs organized in the bucket by year, month, and day (Firehose's default S3 prefix is YYYY/MM/dd/HH, in UTC).
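The archived objects are not plain text. Firehose writes the records as CloudWatch Logs sent them, so each object is a series of gzip-compressed JSON documents concatenated back to back. This assumes no transformation Lambda or decompression option is enabled on the stream. Below is a minimal Python sketch for reading them, assuming boto3 for the S3 calls. The bucket and prefix are your own values.

```python
import gzip
import json


def decode_firehose_object(body: bytes):
    """Return (log_group, timestamp, message) tuples from one Firehose-written S3 object."""
    # Each Firehose record is its own gzip member; gzip.decompress reads all members.
    text = gzip.decompress(body).decode("utf-8")
    decoder = json.JSONDecoder()
    events, pos = [], 0
    while pos < len(text):
        # Skip any whitespace between concatenated JSON documents.
        while pos < len(text) and text[pos].isspace():
            pos += 1
        if pos >= len(text):
            break
        payload, pos = decoder.raw_decode(text, pos)
        # CONTROL_MESSAGE documents carry no log events.
        if payload.get("messageType") == "DATA_MESSAGE":
            for event in payload["logEvents"]:
                events.append((payload["logGroup"], event["timestamp"], event["message"]))
    return events


def read_archived_events(s3_client, bucket, prefix):
    """Decode every object under a prefix such as '2024/05/01/' (boto3 S3 client assumed)."""
    events = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            body = s3_client.get_object(Bucket=bucket, Key=obj["Key"])["Body"].read()
            events.extend(decode_firehose_object(body))
    return events
```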
