Level 400

Building on the second part of this series, this post adds controls to the deployment so that it aligns with the NIST framework and AWS best practices. It applies the shift-left security principle through techniques such as unit tests and SAST for infrastructure code, and the shift-right security principle through CloudFormation Hooks.

Remember that the goal is to create a simple stack; for demo purposes, an S3 bucket. You will also find the test reports and improve the notification options using channels such as Slack. If you want, you can download the code and extend it, for example by sending findings to AWS Security Hub or integrating CodeGuru; other posts cover more demos and tips.

Hands On

Requirements

- cdk >= 2.43.0

- AWS CLI >= 2.7.0

- Python >= 3.10.4

- Pytest >= 7.1.3

- cdk-nag >= 2.18.44

- checkov >= 2.1.229

AWS Services

- AWS Cloud Development Kit (CDK): is an open-source software development framework to define your cloud application resources using familiar programming languages.

- AWS Identity and Access Management (IAM): Securely manage identities and access to AWS services and resources.

- AWS IAM Identity Center (Successor to AWS Single Sign-On): helps you securely create or connect your workforce identities and manage their access centrally across AWS accounts and applications.

- AWS CodeBuild: fully managed continuous integration service that compiles source code, runs tests, and produces software packages that are ready to deploy.

- AWS CodeCommit: secure, highly scalable, managed source control service that hosts private Git repositories.

- AWS CodePipeline: fully managed continuous delivery service that helps you automate your release pipelines for fast and reliable application and infrastructure updates.

- AWS Key Management Service (AWS KMS): lets you create, manage, and control cryptographic keys across your applications and more than 100 AWS services.

- AWS CloudFormation: speeds up cloud provisioning with infrastructure as code.

Solution Overview

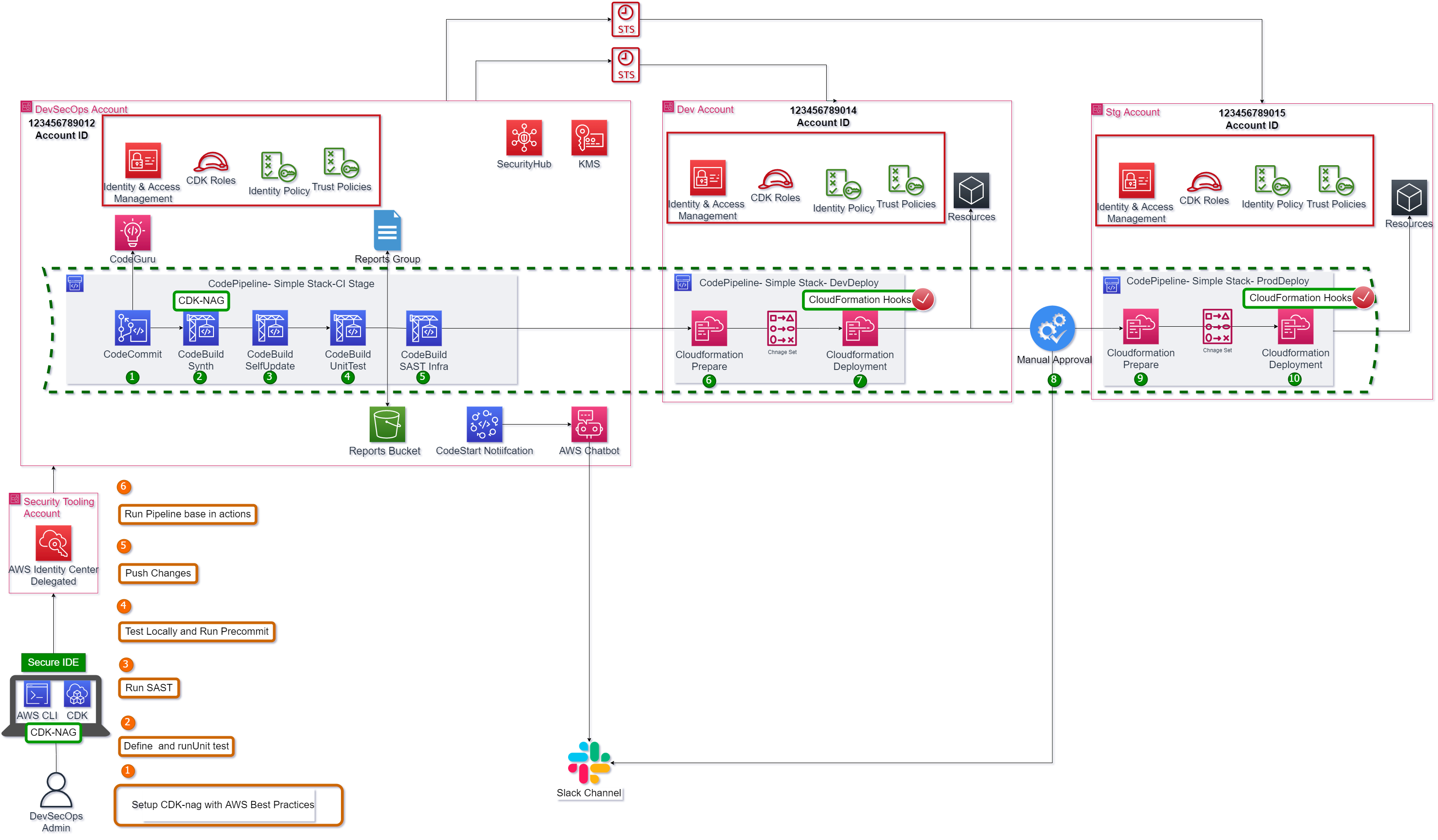

Figure 1. Solution Overview – Enhanced CDK Pipeline

Figure 1 shows the steps to accomplish this task. It depicts a cross-account pipeline built on AWS CodePipeline, AWS CodeCommit, AWS CodeBuild, and AWS CloudFormation as the main services, with other services such as Security Hub, CodeGuru, AWS Chatbot, and AWS CodeStar improving vulnerability management and the developer experience. In this solution the first step is to create a secure CDK project using cdk-nag and to define unit tests and a local SAST scan before pushing to the pipeline. Next, the pipeline runs the following steps:

1. Changes are detected and trigger the pipeline. For this demo, master is the default branch.

2. The CDK project is synthesized if it is aligned with AWS security best practices.

3. The pipeline runs the self-update action.

4. The unit tests run, and their report is published in a CodeBuild report group.

5. The SAST scan runs, and its report is published in a CodeBuild report group.

6. The CloudFormation stack is prepared for the developer environment.

7. The CloudFormation stack is deployed to the developer environment after a successful result from CloudFormation Hooks.

8. To move between environments, a manual approval step is added, and the notification is sent to a Slack channel.

9. The CloudFormation stack is prepared for the staging environment.

10. The CloudFormation stack is deployed to the staging environment after a successful result from CloudFormation Hooks.

Step by Step

Follow the steps to set up the CDK project shown in the second part of this series, or clone version 1.0 of the GitHub code if you want to add the code step by step.

Please visit GitHub to download the template.

velez94/cdkv2_pipeline_multienvironment

Repository for cdk pipelines for multiaccount environment

Let's begin with traditional steps.

Add unit tests

You can add unit tests for the infrastructure constructs, but what kind of test cases should you create?

When you use infrastructure as code with a high level of abstraction, with tools such as CDK or Pulumi, you enter the world of Infrastructure as Software: the infrastructure is treated as another application, and the software development life cycle must also be applied to its construction and operation. The first step is to use BDD or TDD for the components, but a small component may be a single piece of a big puzzle. You should build the tests around a stack or micro stack rather than around single components.

In this scenario the code shows a unit test that uses fine-grained assertions to verify that server-side encryption with AES256 is enabled for the S3 bucket.

First, create the test file tests/unit/test_simple_s3_stack.py:

    import aws_cdk as core
    import aws_cdk.assertions as assertions

    from ...src.stacks.simple_s3_stack import SimpleS3Stack
    from ...project_configs.helpers.project_configs import props


    def test_s3_bucket_created():
        app = core.App()
        # Build the stack from the first S3 definition, forced to the dev environment
        props["storage_resources"]["s3"][0]["environment"] = 'dev'
        stack = SimpleS3Stack(app, "SimpleS3Stack", props=props["storage_resources"]["s3"][0])
        template = assertions.Template.from_stack(stack)

        # Fine-grained assertion: the bucket must default to AES256 encryption
        template.has_resource_properties("AWS::S3::Bucket", {
            "BucketEncryption": {
                "ServerSideEncryptionConfiguration": [
                    {
                        "ServerSideEncryptionByDefault": {
                            "SSEAlgorithm": "AES256"
                        }
                    }
                ]
            }
        })

Of course, the CDK pipeline itself can be tested in the same way.
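
To make the idea of a fine-grained assertion more concrete, here is a minimal, stdlib-only sketch of partial matching against a template dictionary. It is not the CDK assertions implementation; the helper names is_subset and has_resource_properties, and the trimmed TEMPLATE, are assumptions for illustration only.

```python
from typing import Any


def is_subset(expected: Any, actual: Any) -> bool:
    """Return True when everything in `expected` is also present in `actual`."""
    if isinstance(expected, dict):
        return isinstance(actual, dict) and all(
            key in actual and is_subset(value, actual[key])
            for key, value in expected.items()
        )
    if isinstance(expected, list):
        # In this sketch, lists must have the same length and match item by item.
        return (
            isinstance(actual, list)
            and len(expected) == len(actual)
            and all(is_subset(e, a) for e, a in zip(expected, actual))
        )
    return expected == actual


def has_resource_properties(template: dict, resource_type: str, props: dict) -> bool:
    """True if any resource of `resource_type` contains `props` in its Properties."""
    return any(
        res.get("Type") == resource_type
        and is_subset(props, res.get("Properties", {}))
        for res in template.get("Resources", {}).values()
    )


def encryption_props(algorithm: str) -> dict:
    """Expected BucketEncryption fragment for a given SSE algorithm."""
    return {
        "BucketEncryption": {
            "ServerSideEncryptionConfiguration": [
                {"ServerSideEncryptionByDefault": {"SSEAlgorithm": algorithm}}
            ]
        }
    }


# Hypothetical, trimmed template resembling the synthesized bucket.
TEMPLATE = {
    "Resources": {
        "multienvdemo94A5B7DE": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                "BucketName": "multi-env-demo",
                **encryption_props("AES256"),
            },
        }
    }
}

if __name__ == "__main__":
    print(has_resource_properties(TEMPLATE, "AWS::S3::Bucket", encryption_props("AES256")))
```

The point of the partial match is that the test keeps passing when unrelated properties such as tags or the bucket name change, and fails only when the encryption setting itself changes.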

Now create the unit test step in the pipeline, in the file src/pipeline/cdk_pipeline_multienvironment_stack.py. A CodeBuild step runs the pytest command and exports the report in JUnit XML format to a report group named Pytest-{project_name}-Report.

    unit_test_step = pipelines.CodeBuildStep(
        "UnitTests",
        project_name="UnitTests",
        commands=[
            "pip install pytest",
            "pip install -r requirements.txt",
            "python3 -m pytest --junitxml=unit_test.xml"
        ],
        partial_build_spec=codebuild.BuildSpec.from_object(
            {
                "version": '0.2',
                "reports": {
                    f"Pytest-{props['project_name']}-Report": {
                        "files": [
                            "unit_test.xml"
                        ],
                        "file-format": "JUNITXML"
                    }
                }
            }
        )
    )

Finally, the step is added as a pre step for the deployment into the Dev environment.

    ...
    # Add Unit test pre step
    deploy_dev_stg.add_pre(unit_test_step)
    ...

Your code is ready to deploy, but first run the tests locally as follows:

    $ python3 -m pytest
    ======================== test session starts ========================
    platform linux -- Python 3.10.4, pytest-7.1.3, pluggy-1.0.0
    rootdir: /home/walej/projects/blogs/multienvironment_deployments_series/cdk_pipeline_multienvironment
    plugins: typeguard-2.13.3
    collected 2 items

    tests/unit/test_cdk_pipeline_multienvironment_stack.py .    [ 50%]
    tests/unit/test_simple_s3_stack.py .                        [100%]

    ======================== 2 passed in 4.44s =========================
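
The pipeline step exports the same results with --junitxml, and the report group is built from that file. As a rough sketch of what such a report carries, the snippet below summarizes a JUnit XML document with the standard library. The sample XML and the test name test_pipeline_created are made up for illustration; a real unit_test.xml from pytest has more attributes.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified JUnit XML; not copied from a real run.
SAMPLE_REPORT = """<testsuites>
  <testsuite name="pytest" errors="0" failures="1" skipped="0" tests="2">
    <testcase classname="tests.unit.test_simple_s3_stack" name="test_s3_bucket_created"/>
    <testcase classname="tests.unit.test_cdk_pipeline_multienvironment_stack" name="test_pipeline_created">
      <failure message="assertion failed">details</failure>
    </testcase>
  </testsuite>
</testsuites>"""


def summarize(xml_text: str) -> dict:
    """Count test cases and list the names of those with a failure or error."""
    root = ET.fromstring(xml_text)
    cases = list(root.iter("testcase"))
    failed = [
        case.get("name")
        for case in cases
        if case.find("failure") is not None or case.find("error") is not None
    ]
    return {"total": len(cases), "failed": failed}


if __name__ == "__main__":
    print(summarize(SAMPLE_REPORT))
```

Running python3 -m pytest --junitxml=unit_test.xml locally and passing the file content to summarize gives a quick look at what the report group will receive.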

Verify the changes:

    cdk diff -e CdkPipelineMultienvironmentStack --profile labxl-devsecops

Then commit and push the changes:

    git commit -am "add unit test step"
    git push

Figure 2 shows a failed test for this stage.

Figure 3 shows the report group that CodeBuild creates automatically.

Figure 3. Unit test Report Group

Add SAST test step

Now it's time to add a SAST step; Checkov is the tool for this task.

In the file src/pipeline/cdk_pipeline_multienvironment_stack.py, add the following code:

    # Create SAST Step for Infrastructure
    sast_test_step = pipelines.CodeBuildStep(
        "SASTTests",
        project_name="SASTTests",
        commands=[
            "pip install checkov",
            "ls -all",
            "checkov -d . -o junitxml --output-file . --soft-fail"
        ],
        partial_build_spec=codebuild.BuildSpec.from_object(
            {
                "version": '0.2',
                "reports": {
                    f"checkov-{props['project_name']}-Report": {
                        "files": [
                            "results_junitxml.xml"
                        ],
                        "file-format": "JUNITXML"
                    }
                }
            }
        ),
        input=pipeline.synth.primary_output
    )

Here the step is created together with the report group that publishes results_junitxml.xml. Note that the input for this step is the cdk synth output generated in the previous stage.
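
The unit test step and the SAST step pass almost the same dictionary to BuildSpec.from_object. One possible way to avoid repeating it is a small helper that builds the reports section. This is only a sketch: the function name report_group_spec is hypothetical, and the project name "multiDev" is assumed from the Project tag shown in the template.

```python
import json


def report_group_spec(tool: str, project_name: str, report_file: str) -> dict:
    """Build the partial buildspec dict that publishes one JUnit XML report."""
    return {
        "version": "0.2",
        "reports": {
            f"{tool}-{project_name}-Report": {
                "files": [report_file],
                "file-format": "JUNITXML",
            }
        },
    }


if __name__ == "__main__":
    # Same shape as the dictionaries used by the two pipeline steps.
    print(json.dumps(report_group_spec("checkov", "multiDev", "results_junitxml.xml"), indent=2))
    print(json.dumps(report_group_spec("Pytest", "multiDev", "unit_test.xml"), indent=2))
```

In the pipeline stack, the returned dictionary would be the argument of codebuild.BuildSpec.from_object(...) in each step.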

The next step is to add it to the deployment stage for the Dev environment.

    ...
    # Add SAST step
    deploy_dev_stg.add_pre(sast_test_step)
    ...

Your code is ready to deploy, but first run the scan locally as follows:

    checkov -d cdk.out/
    [ kubernetes framework ]: 100%|████████████████████|[12/12], Current File Scanned=assembly-CdkPipelineMultienvironmentStack-DeployDev/manifest.json
    [ cloudformation framework ]: 100%|████████████████████|[4/4], Current File Scanned=/assembly-CdkPipelineMultienvironmentStack-DeployDev/CdkPipelineMultienvironmentStackDeployDevSimpleS3StackD63BA93D.template.json
    [ secrets framework ]: 100%|████████████████████|[12/12], Current File Scanned=cdk.out/assembly-CdkPipelineMultienvironmentStack-DeployDev/manifest.json

           _               _
       ___| |__   ___  ___| | _______   __
      / __| '_ \ / _ \/ __| |/ / _ \ \ / /
     | (__| | | |  __/ (__|   < (_) \ V /
      \___|_| |_|\___|\___|_|\_\___/ \_/

    By bridgecrew.io | version: 2.1.229
    Update available 2.1.229 -> 2.1.286
    Run pip3 install -U checkov to update

    ...
    Check: CKV_AWS_54: "Ensure S3 bucket has block public policy enabled"
        FAILED for resource: AWS::S3::Bucket.multienvdemo94A5B7DE
        File: /SimpleS3Stack.template.json:3-39
        Guide: https://docs.bridgecrew.io/docs/bc_aws_s3_20

            3  | "multienvdemo94A5B7DE": {
            4  |   "Type": "AWS::S3::Bucket",
            5  |   "Properties": {
            6  |     "BucketEncryption": {
            7  |       "ServerSideEncryptionConfiguration": [
            8  |         {
            9  |           "ServerSideEncryptionByDefault": {
            10 |             "SSEAlgorithm": "AES256"
            11 |           }
            12 |         }
            13 |       ]
            14 |     },
            15 |     "BucketName": "multi-env-demo",
            16 |     "Tags": [
            17 |       {
            18 |         "Key": "Environment",
            19 |         "Value": "dev"
            20 |       },
            21 |       {
            22 |         "Key": "Owner",
            23 |         "Value": "DevSecOpsAdm"
            24 |       },
            25 |       {
            26 |         "Key": "Project",
            27 |         "Value": "multiDev"
            28 |       }
            29 |     ],
            30 |     "VersioningConfiguration": {
            31 |       "Status": "Enabled"
            32 |     }
            33 |   },
            34 |   "UpdateReplacePolicy": "Retain",
            35 |   "DeletionPolicy": "Retain",
            36 |   "Metadata": {
            37 |     "aws:cdk:path": "SimpleS3Stack/multi-env-demo/Resource"
            38 |   }
            39 | },
    ...

If you don't fix the findings, the pipeline breaks. If a finding is a false positive, or the requirement doesn't apply to your environment, you can add the --soft-fail flag to the checkov command so that the step passes, changing checkov -d . -o junitxml --output-file . to checkov -d . -o junitxml --output-file . --soft-fail
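
The effect of a soft-fail option on a pipeline step can be modeled in a few lines. The sketch below is not checkov's code; gate_exit_code and its parameters are hypothetical and only illustrate the trade-off: with soft-fail the step always passes, while skipping individual check IDs keeps the gate active for everything else.

```python
from typing import Iterable, List


def gate_exit_code(
    failed_checks: List[str],
    soft_fail: bool = False,
    skipped: Iterable[str] = (),
) -> int:
    """Return the exit code a scan step would report to the pipeline.

    failed_checks: IDs of checks that failed, for example "CKV_AWS_54".
    soft_fail:     when True, findings never break the build.
    skipped:       check IDs accepted as false positives or not applicable.
    """
    ignored = set(skipped)
    remaining = [check for check in failed_checks if check not in ignored]
    if remaining and not soft_fail:
        return 1  # non-zero exit code: CodeBuild marks the step as failed
    return 0


if __name__ == "__main__":
    findings = ["CKV_AWS_54"]
    print(gate_exit_code(findings))                        # gate is enforced
    print(gate_exit_code(findings, soft_fail=True))        # everything passes
    print(gate_exit_code(findings, skipped=["CKV_AWS_54"]))  # only this check is waived
```

Because soft-fail hides every finding, a narrower option is usually preferable once the demo stage is over; checkov documents a --skip-check option for waiving specific check IDs.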

Finally check the differences and push your changes.

$ cdk diff -e CdkPipelineMultienvironmentStack --profile labxl-devsecops

$ git commit -am "add SAST test step"

$ git push

Figure 4 and Figure 5 show the evidence: first the SAST step in the CodePipeline console, and second the report group created for CodeBuild.

Figure 5. Report Group SAST using checkov.

Enhance the practice

So far you have applied traditional practices, but what kind of tools can you use to improve this process?

The answer: cdk-nag and CloudFormation Hooks.

- cdk-nag: checks CDK applications or CloudFormation templates for best practices using a combination of available rule packs. Inspired by cfn_nag.

- CloudFormation Hooks: a hook contains code that is invoked immediately before CloudFormation creates, updates, or deletes specific resources. Hooks can inspect the resources that CloudFormation is about to provision. If hooks find any resources that don’t comply with your organizational guidelines, they are able to prevent CloudFormation from continuing the provisioning process. A hook includes a hook specification represented by a JSON schema and handlers that are invoked at each hook invocation point.

Characteristics of hooks include:

- Proactive validation – Reduces risk, operational overhead, and cost by identifying noncompliant resources before they're provisioned.

- Automatic enforcement – Provides enforcement in your AWS account to prevent noncompliant resources from being provisioned by CloudFormation.
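
The kind of decision a hook makes before a resource is provisioned can be sketched as a plain function. This is not the CloudFormation Hooks handler API; validate_bucket_before_create, its arguments, and its return value are hypothetical and only show the shape of a proactive check on the resource properties.

```python
from typing import Tuple

ALLOWED_ALGORITHMS = ("AES256", "aws:kms")


def validate_bucket_before_create(resource_type: str, properties: dict) -> Tuple[bool, str]:
    """Decide whether a resource may be provisioned.

    Returns (compliant, message). Only S3 buckets are inspected here;
    every other resource type passes through untouched.
    """
    if resource_type != "AWS::S3::Bucket":
        return True, "resource type not targeted by this check"

    rules = properties.get("BucketEncryption", {}).get(
        "ServerSideEncryptionConfiguration", []
    )
    algorithms = [
        rule.get("ServerSideEncryptionByDefault", {}).get("SSEAlgorithm")
        for rule in rules
    ]
    if any(algorithm in ALLOWED_ALGORITHMS for algorithm in algorithms):
        return True, "default encryption is configured"
    return False, "bucket has no default server-side encryption"


if __name__ == "__main__":
    encrypted = {
        "BucketEncryption": {
            "ServerSideEncryptionConfiguration": [
                {"ServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
            ]
        }
    }
    print(validate_bucket_before_create("AWS::S3::Bucket", encrypted))
    print(validate_bucket_before_create("AWS::S3::Bucket", {"BucketName": "multi-env-demo"}))
```

A real hook would return its result through the handler contract defined by the hook specification; the check itself stays this simple.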

Including cdk-nag in cdk project

First, the requirements must be updated in requirements.txt.

aws-cdk-lib==2.44.0

constructs>=10.0.0,<11.0.0

cdk-nag>=2.18.44

Now, install the requirements using pip install -r requirements.txt.

Second, add cdk-nag to the aspects of each stack so that it runs during the synth process. For example, for the stage that deploys the S3 bucket, edit the file src/pipeline/stages/deploy_app_stage.py:

    from constructs import Construct
    from aws_cdk import (
        Stage,
        # Import Aspects
        Aspects
    )
    # Add AWS Checks
    from cdk_nag import AwsSolutionsChecks

    from ...stacks.simple_s3_stack import SimpleS3Stack


    class PipelineStageDeployApp(Stage):

        def __init__(self, scope: Construct, id: str, props: dict = None, **kwargs):
            super().__init__(scope, id, **kwargs)

            stack = SimpleS3Stack(
                self,
                "SimpleS3Stack",
                props=props,
            )
            # Add aspects
            Aspects.of(stack).add(AwsSolutionsChecks(verbose=True))

Continue with the cdk synth command.

$ cdk synth

[Error at /CdkPipelineMultienvironmentStack/DeployDev/SimpleS3Stack/multi-env-demo/Resource] AwsSolutions-S1: The S3 Bucket has server access logs disabled. The bucket should have server access logging enabled to provide detailed records for the requests that are made to the bucket.

[Error at /CdkPipelineMultienvironmentStack/DeployDev/SimpleS3Stack/multi-env-demo/Resource] AwsSolutions-S2: The S3 Bucket does not have public access restricted and blocked. The bucket should have public access restricted and blocked to prevent unauthorized access.

[Error at /CdkPipelineMultienvironmentStack/DeployStg/SimpleS3Stack/multi-env-demo/Resource] AwsSolutions-S1: The S3 Bucket has server access logs disabled. The bucket should have server access logging enabled to provide detailed records for the requests that are made to the bucket.

[Error at /CdkPipelineMultienvironmentStack/DeployStg/SimpleS3Stack/multi-env-demo/Resource] AwsSolutions-S2: The S3 Bucket does not have public access restricted and blocked. The bucket should have public access restricted and blocked to prevent unauthorized access.

Found errors

Note that some findings are the same ones reported by the SAST scan with checkov, but here the check runs while the code is being synthesized, before the SAST step. The results are duplicated because the stage is used twice with different parameters.
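
When the same stage is instantiated for several environments, grouping the findings by rule makes the output easier to read. The following sketch parses lines in the format printed above with a regular expression; the pattern and the helper group_by_rule are assumptions based only on that output, and the messages are shortened in the sample.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List

# Matches lines such as: [Error at /Stack/Stage/Resource] AwsSolutions-S1: message
FINDING = re.compile(
    r"^\[(?P<level>\w+) at (?P<path>[^\]]+)\] (?P<rule>[\w-]+): (?P<message>.*)$"
)

# Sample lines taken from the synth output, with the messages shortened.
SYNTH_OUTPUT = [
    "[Error at /CdkPipelineMultienvironmentStack/DeployDev/SimpleS3Stack/multi-env-demo/Resource] AwsSolutions-S1: The S3 Bucket has server access logs disabled.",
    "[Error at /CdkPipelineMultienvironmentStack/DeployDev/SimpleS3Stack/multi-env-demo/Resource] AwsSolutions-S2: The S3 Bucket does not have public access restricted and blocked.",
    "[Error at /CdkPipelineMultienvironmentStack/DeployStg/SimpleS3Stack/multi-env-demo/Resource] AwsSolutions-S1: The S3 Bucket has server access logs disabled.",
    "[Error at /CdkPipelineMultienvironmentStack/DeployStg/SimpleS3Stack/multi-env-demo/Resource] AwsSolutions-S2: The S3 Bucket does not have public access restricted and blocked.",
    "Found errors",
]


def group_by_rule(lines: Iterable[str]) -> Dict[str, List[str]]:
    """Map each rule ID to the construct paths where it was reported."""
    grouped = defaultdict(list)
    for line in lines:
        match = FINDING.match(line.strip())
        if match:  # lines that are not findings, such as "Found errors", are ignored
            grouped[match["rule"]].append(match["path"])
    return dict(grouped)


if __name__ == "__main__":
    for rule, paths in sorted(group_by_rule(SYNTH_OUTPUT).items()):
        print(f"{rule}: {len(paths)} occurrence(s)")
```

Grouped this way, the four errors reduce to two rules to fix, each reported once for DeployDev and once for DeployStg.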

Let's correct these findings.

To address AwsSolutions-S2 (the S3 bucket does not have public access restricted and blocked), edit the stack construct in the file src/stacks/simple_s3_stack.py.

Just add block_public_access=s3.BlockPublicAccess.BLOCK_ALL:

    from aws_cdk import (
        Stack,
        aws_s3 as s3,
        CfnOutput,
        RemovalPolicy
    )
    from constructs import Construct


    class SimpleS3Stack(Stack):

        def __init__(self, scope: Construct, construct_id: str, props: dict = None, **kwargs) -> None:
            super().__init__(scope, construct_id, **kwargs)

            # The code that defines your stack goes here
            bucket = s3.Bucket(self, id=props["bucket_name"],
                               bucket_name=f'{props["bucket_name"]}-{props["environment"]}',
                               versioned=True if props["versioned"] == "enable" else None,
                               enforce_ssl=True,
                               encryption=s3.BucketEncryption.S3_MANAGED,
                               removal_policy=RemovalPolicy.DESTROY,
                               # Best practices
                               block_public_access=s3.BlockPublicAccess.BLOCK_ALL,
                               )
            # Define outputs
            CfnOutput(self, id="S3ARNOutput", value=bucket.bucket_arn, description="Bucket ARN")

For demo purposes, the rule AwsSolutions-S1 (the S3 bucket has server access logs disabled) is suppressed. To do this, the nag suppression is added to the stack in the file src/pipeline/stages/deploy_app_stage.py.

    from constructs import Construct
    from aws_cdk import (
        Stage,
        # Import Aspects
        Aspects
    )
    # Add AWS Checks
    from cdk_nag import AwsSolutionsChecks, NagSuppressions

    from ...stacks.simple_s3_stack import SimpleS3Stack


    class PipelineStageDeployApp(Stage):

        def __init__(self, scope: Construct, id: str, props: dict = None, **kwargs):
            super().__init__(scope, id, **kwargs)

            stack = SimpleS3Stack(
                self,
                "SimpleS3Stack",
                props=props,
            )
            # Add aspects
            Aspects.of(stack).add(AwsSolutionsChecks(verbose=True))
            # Add Suppression
            NagSuppressions.add_stack_suppressions(stack=stack, suppressions=[
                {"id": "AwsSolutions-S1", "reason": "Demo Purpose"}
            ])
            # set_tags(stack, tags=props["tags"])
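
Conceptually, a suppression removes findings for a given rule ID and records why. The sketch below models that behavior with plain dictionaries; it is not how cdk-nag is implemented, and apply_suppressions is a hypothetical helper. It rejects suppressions without a reason, since an unexplained suppression is hard to audit later.

```python
from typing import Dict, List


def apply_suppressions(
    findings: List[Dict[str, str]],
    suppressions: List[Dict[str, str]],
) -> List[Dict[str, str]]:
    """Return the findings whose rule ID has not been suppressed."""
    for suppression in suppressions:
        if not suppression.get("reason", "").strip():
            raise ValueError(f"suppression for {suppression.get('id')} needs a reason")
    suppressed_ids = {suppression["id"] for suppression in suppressions}
    return [finding for finding in findings if finding["rule"] not in suppressed_ids]


if __name__ == "__main__":
    findings = [
        {"rule": "AwsSolutions-S1", "path": "DeployDev/SimpleS3Stack"},
        {"rule": "AwsSolutions-S2", "path": "DeployDev/SimpleS3Stack"},
    ]
    suppressions = [{"id": "AwsSolutions-S1", "reason": "Demo Purpose"}]
    # Only AwsSolutions-S2 remains, which is the one fixed with BLOCK_ALL.
    print(apply_suppressions(findings, suppressions))
```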

Finally, run the cdk synth command again.

CloudFormation Hooks deserve greater depth; their use and capabilities will be demonstrated in detail in future posts.

Conclusions

- Use IAM Identity Center and a multi-account scheme for your deployments, aligned with the AWS SRA and the AWS Well-Architected Framework.

- Apply the DRY principle and enable your team with a minimal set of tools that abstract the best practices and increase the agility and velocity of delivery.

- Apply the shift-left security principle with common practices such as SAST and unit testing to validate stack properties and best practices; you can enforce these practices while the code is synthesized by using cdk-nag.

- Use new features such as CloudFormation Hooks for proactive validation and automatic enforcement as shift-right security practices.

- Use other services such as AWS Security Hub, AWS Chatbot, and Amazon CodeGuru to improve the user experience and vulnerability management.

- Expand the features progressively and don’t try to put everything together in the first step. A secure and robust pipeline requires time, practice, and continuous improvement.
