Introduction

As organizations scale their Kubernetes environments, managing multiple clusters across various regions becomes increasingly complex. Amazon Elastic Kubernetes Service (EKS) provides a managed Kubernetes service, but orchestrating workloads across multiple EKS clusters presents significant operational challenges. Enter Karmada (Kubernetes Armada) — an open-source CNCF project designed to solve multi-cluster management issues.

In this article, we'll explore how to combine Amazon EKS with Karmada to build a robust, scalable multi-cluster management solution that provides high availability, disaster recovery, and improved resource utilization across your Kubernetes estate.

Understanding Karmada

Karmada is a CNCF project that provides a unified control plane for managing multiple Kubernetes clusters. Its API server is compatible with the Kubernetes API, so existing resources such as Deployments and Services can be submitted unchanged with familiar tools like kubectl. Where and how those resources are distributed is described separately, through Karmada's own policy resources such as PropagationPolicy and OverridePolicy.

Key features of Karmada include:

- Centralized cluster management: Manage multiple clusters from a single control plane

- Native Kubernetes API compatibility: Use familiar Kubernetes APIs and tools

- Resource propagation: Automatically distribute resources across member clusters

- Failover and high availability: Support application failover between clusters

- Override policies: Define cluster-specific configurations while maintaining a single source of truth

Why Combine EKS with Karmada?

Amazon EKS simplifies running Kubernetes on AWS by eliminating the need to install and operate your own Kubernetes control plane. However, many organizations require workloads to run across multiple regions or even multiple cloud providers. Combining EKS with Karmada offers several advantages:

- Global High Availability: Deploy applications across multiple regions to ensure service continuity even if an entire region experiences an outage

- Regulatory Compliance: Meet data residency requirements by deploying specific workloads to specific regions

- Cost Optimization: Distribute workloads to the most cost-effective regions

- Scalability: Scale beyond the limits of a single Kubernetes cluster

- Simplified Management: Manage multiple clusters with consistent policies and configurations

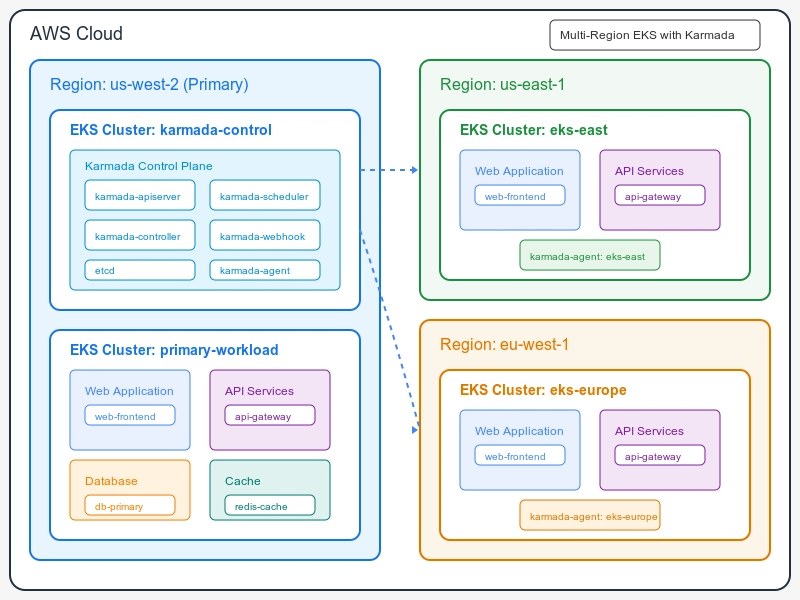

Implementation Architecture

Let's walk through a reference architecture for implementing Karmada with EKS:

- Karmada Control Plane: Deploy the Karmada control plane in a dedicated EKS cluster, preferably in your primary region

- Member Clusters: Set up multiple EKS clusters across different AWS regions

- Connectivity: Establish secure connectivity between clusters using AWS Transit Gateway or similar networking services

- Authentication: Configure IAM roles and service accounts for secure cluster access

- Monitoring: Implement a unified monitoring solution across all clusters

Step-by-Step Setup Guide

Prerequisites

- AWS CLI configured with appropriate permissions

- kubectl installed and configured

- Helm 3.x installed

- eksctl installed (used to create the clusters in step 1)

1. Create EKS Clusters

First, create EKS clusters in your desired regions. Here, we'll create three clusters: one to host the Karmada control plane and two member clusters:

# Create a cluster in us-west-2 (for Karmada control plane)

eksctl create cluster --name karmada-control --region us-west-2 --version 1.28 --nodegroup-name standard-workers --node-type m5.large --nodes 3

# Create a member cluster in us-east-1

eksctl create cluster --name eks-east --region us-east-1 --version 1.28 --nodegroup-name standard-workers --node-type m5.large --nodes 3

# Create another member cluster in eu-west-1

eksctl create cluster --name eks-europe --region eu-west-1 --version 1.28 --nodegroup-name standard-workers --node-type m5.large --nodes 3
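The three commands differ only in cluster name and region, so they can be driven from a small list. The following is a minimal sketch rather than part of the original walkthrough: the `DRY_RUN` switch and the `create_cluster_cmd` helper are illustrative names, and by default the script only prints the commands it would run.

```shell
#!/bin/sh
# Sketch: create the three clusters from a name/region list.
# DRY_RUN and create_cluster_cmd are illustrative names (assumptions).
set -eu

# Build the eksctl command line for one cluster (same flags as above).
create_cluster_cmd() {
  printf 'eksctl create cluster --name %s --region %s --version 1.28 --nodegroup-name standard-workers --node-type m5.large --nodes 3\n' "$1" "$2"
}

# One "name region" pair per line; the first entry hosts the Karmada control plane.
CLUSTERS='karmada-control us-west-2
eks-east us-east-1
eks-europe eu-west-1'

echo "$CLUSTERS" | while read -r name region; do
  cmd=$(create_cluster_cmd "$name" "$region")
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$cmd"            # default: only show what would run
  else
    $cmd < /dev/null       # DRY_RUN=0: create the cluster; keep stdin for the loop
  fi
done
```

Run it once as-is to review the generated commands, then again with `DRY_RUN=0` to create the clusters.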

2. Install Karmada Control Plane

Next, install the Karmada control plane on the first cluster:

# Update kubeconfig to point to the control plane cluster

aws eks update-kubeconfig --name karmada-control --region us-west-2

# Install Karmada using Helm

helm repo add karmada https://raw.githubusercontent.com/karmada-io/karmada/master/charts

helm repo update

helm install karmada karmada/karmada --create-namespace --namespace karmada-system
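The remaining steps address the Karmada API server rather than the host EKS cluster, so they need a kubeconfig for it. The sketch below assumes the chart's default behaviour of storing that kubeconfig in a secret named `karmada-kubeconfig` (key `kubeconfig`) in the `karmada-system` namespace; confirm the secret name against the chart README for your version. The output path, the `fetch_karmada_kubeconfig` helper, and the `DRY_RUN` switch are illustrative.

```shell
#!/bin/sh
set -eu

# Assumed output path; later commands would pass it via --kubeconfig.
OUT="${KARMADA_KUBECONFIG:-/tmp/karmada-apiserver.kubeconfig}"

# Read the base64-encoded kubeconfig from the secret and decode it.
# Assumption: secret "karmada-kubeconfig", key "kubeconfig" (chart defaults).
fetch_karmada_kubeconfig() {
  kubectl get secret karmada-kubeconfig --namespace karmada-system \
    -o jsonpath='{.data.kubeconfig}' | base64 -d
}

if [ "${DRY_RUN:-1}" = "1" ]; then
  echo "would write the Karmada kubeconfig to ${OUT}"
else
  fetch_karmada_kubeconfig > "$OUT"
  chmod 600 "$OUT"   # the file contains credentials
fi
```

The server address in the retrieved kubeconfig may be an in-cluster service name. If so, it is only reachable from inside the host cluster until the Karmada API server is exposed, for example through a port-forward or a load balancer service.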

3. Register Member Clusters

Now, register your EKS clusters with Karmada:

# Install karmadactl

curl -s https://raw.githubusercontent.com/karmada-io/karmada/master/hack/install-cli.sh | bash

# Get kubeconfig for member clusters

aws eks update-kubeconfig --name eks-east --region us-east-1 --kubeconfig=/tmp/eks-east.kubeconfig

aws eks update-kubeconfig --name eks-europe --region eu-west-1 --kubeconfig=/tmp/eks-europe.kubeconfig

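# Register clusters with Karmada

# --kubeconfig must point at the Karmada API server, not the host EKS cluster.

# /tmp/karmada-apiserver.kubeconfig is a placeholder for wherever you saved that kubeconfig.

karmadactl join eks-east --kubeconfig=/tmp/karmada-apiserver.kubeconfig --cluster-kubeconfig=/tmp/eks-east.kubeconfig

karmadactl join eks-europe --kubeconfig=/tmp/karmada-apiserver.kubeconfig --cluster-kubeconfig=/tmp/eks-europe.kubeconfig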

4. Verify Cluster Registration

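Check that your clusters are registered. Cluster is a Karmada resource, so query the Karmada API server rather than the host EKS cluster (the path below is a placeholder for your Karmada kubeconfig):

kubectl --kubeconfig=/tmp/karmada-apiserver.kubeconfig get clusters

Both member clusters should be listed with True in the READY column.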
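For scripted checks, the same listing can be filtered for clusters that are not yet ready. This is a minimal sketch, assuming the default table output has the cluster name in the first column and a `READY` column whose value is `True` for healthy clusters. `not_ready_clusters` is a hypothetical helper, and the sample rows are made up for illustration.

```shell
#!/bin/sh
# Sketch: list clusters whose READY column is not "True".
set -eu

# Reads `kubectl get clusters` table output on stdin and prints the names
# of clusters that are not ready. Assumes no column in a row is empty.
not_ready_clusters() {
  awk '
    NR == 1 {                       # header row: locate the READY column
      for (i = 1; i <= NF; i++) if ($i == "READY") col = i
      next
    }
    col && $col != "True" { print $1 }
  '
}

# Illustrative input (made-up rows). In practice, pipe the real command:
#   kubectl --kubeconfig=<karmada kubeconfig> get clusters | not_ready_clusters
sample='NAME         VERSION   MODE   READY   AGE
eks-east     v1.28.5   Push   True    5m
eks-europe   v1.28.5   Push   False   5m'

printf '%s\n' "$sample" | not_ready_clusters
```

An empty result means every listed cluster reported ready, which makes the helper easy to use as a gate before deploying workloads.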

Deploying Applications Across Clusters

With Karmada, you have multiple ways to deploy applications across clusters:

Using PropagationPolicy

The most common way is to use a PropagationPolicy:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.21
          ports:
            - containerPort: 80
---
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: nginx-propagation
  namespace: default
spec:
  resourceSelectors:
    - apiVersion: apps/v1
      kind: Deployment
      name: nginx-deployment
  placement:
    clusterAffinity:
      clusterNames:
        - eks-east
        - eks-europe

Apply this YAML to your Karmada control plane, and Karmada will propagate the nginx deployment to both the eks-east and eks-europe clusters.
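To confirm the propagation, the Deployment can be looked up directly in each member cluster. This is a minimal sketch, assuming the manifest above is saved as `nginx.yaml` (a hypothetical file name), that `KARMADA_KUBECONFIG` points at a kubeconfig for the Karmada API server (the default path is a placeholder), and that the member kubeconfigs from step 3 are still under `/tmp`. The `run` helper is illustrative. It prints the commands by default; set `DRY_RUN=0` to execute them.

```shell
#!/bin/sh
set -eu

# Placeholder path for the Karmada API server kubeconfig (assumption).
KARMADA_KUBECONFIG="${KARMADA_KUBECONFIG:-/tmp/karmada-apiserver.kubeconfig}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Submit the Deployment and PropagationPolicy to the Karmada control plane.
run kubectl --kubeconfig="$KARMADA_KUBECONFIG" apply -f nginx.yaml

# Check that the Deployment exists in each member cluster.
for member in eks-east eks-europe; do
  run kubectl --kubeconfig="/tmp/${member}.kubeconfig" \
    get deployment nginx-deployment --namespace default
done
```

Propagation is asynchronous, so the Deployment may take a few seconds to appear in the member clusters after the apply.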

Using OverridePolicy for Cluster-specific Configurations

You might want different configurations for different clusters. For instance, you might need more replicas in a region with higher traffic:

apiVersion: policy.karmada.io/v1alpha1
kind: OverridePolicy
metadata:
  name: nginx-override
  namespace: default
spec:
  resourceSelectors:
    - apiVersion: apps/v1
      kind: Deployment
      name: nginx-deployment
  overrideRules:
    - targetCluster:
        clusterNames:
          - eks-europe
      overriders:
        plaintext:
          - path: "/spec/replicas"
            operator: replace
            value: 5

This policy sets the replica count to 5 in the eks-europe cluster, while eks-east keeps the 2 replicas defined in the original Deployment.

Implementing Failover Between Regions

One of the key benefits of a multi-cluster setup is the ability to implement cross-region failover. Here's how to set up a simple failover mechanism using Karmada:

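apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: nginx-failover
  namespace: default
spec:
  resourceSelectors:
    - apiVersion: apps/v1
      kind: Deployment
      name: nginx-deployment
  placement:
    # Affinity groups are tried in order: eks-east first, eks-europe as fallback
    clusterAffinities:
      - affinityName: primary
        clusterNames:
          - eks-east
      - affinityName: backup
        clusterNames:
          - eks-europe
    clusterTolerations:
      - key: cluster.karmada.io/unreachable
        operator: Exists
        effect: NoExecute
        tolerationSeconds: 300

With this policy, your workloads initially run in eks-east, the first affinity group. If eks-east carries the unreachable taint for more than 300 seconds, Karmada evicts the workloads and reschedules them to eks-europe, the fallback group. Ordered affinity groups express the primary/backup relationship directly; a weighted split cannot, because static weights must be at least 1. The toleration uses the NoExecute effect because tolerationSeconds only applies to that effect.

Use this policy in place of nginx-propagation rather than alongside it, since a resource is bound to a single PropagationPolicy. Whether and when Karmada adds the taint and evicts workloads depends on the Karmada version and on the Failover feature gate, so check the failover documentation for the release you run.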

Monitoring Your Multi-cluster Setup

Monitoring a multi-cluster setup requires a unified approach. Consider implementing:

- Prometheus and Grafana: Deploy Prometheus for metrics collection and Grafana for visualization

- Karmada Dashboards: Use Karmada-specific Grafana dashboards to monitor propagation status

- AWS CloudWatch: Integrate with CloudWatch for EKS-specific metrics

- Distributed Tracing: Implement tools like Jaeger or AWS X-Ray for tracing requests across clusters

Cost Optimization Strategies

Running multiple EKS clusters can increase costs. Here are strategies to optimize expenses:

- Right-size your clusters: Use appropriate instance types and autoscaling

- Spot Instances: Configure node groups to use Spot instances for non-critical workloads

- Cluster Autoscaler: Implement the Kubernetes Cluster Autoscaler to adjust node count based on workload

- Fargate Profiles: Use AWS Fargate for serverless container execution when appropriate

- Workload Distribution: Use Karmada to place workloads in lower-cost regions when possible
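As one concrete case from the list above, a Spot-backed node group can be added to each existing member cluster with eksctl. This is a minimal sketch: the node group name `spot-workers`, the instance types, and the node counts are illustrative choices rather than recommendations, and the `run` helper only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
set -eu

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# "cluster region" pairs for the member clusters created earlier.
MEMBERS='eks-east us-east-1
eks-europe eu-west-1'

echo "$MEMBERS" | while read -r cluster region; do
  # Listing several instance types gives Spot more capacity pools to draw from.
  run eksctl create nodegroup \
    --cluster "$cluster" --region "$region" \
    --name spot-workers --spot \
    --instance-types m5.large,m5a.large \
    --nodes 2 --nodes-min 0 --nodes-max 6 < /dev/null
done
```

Keeping the original on-demand node group alongside the Spot group leaves a place for critical workloads to run when Spot capacity is reclaimed.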

Common Challenges and Solutions

Networking Complexity

Challenge: Establishing secure communication between clusters across regions.

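Solution: Use AWS Transit Gateway with inter-region peering to connect the VPCs in each region, and add a service mesh such as Istio or AWS App Mesh for service-to-service communication across clusters. AWS has announced end of support for App Mesh, so check its status before adopting it.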

Data Synchronization

Challenge: Keeping data synchronized across multiple regions.

Solution: Use region-specific persistent volumes for regional data, employ cross-region replication for shared data, or use managed services like Amazon Aurora Global Database.

Certificate Management

Challenge: Managing TLS certificates across clusters.

Solution: Implement cert-manager with a central certificate authority for in-cluster certificates, or use AWS Certificate Manager for certificates attached to AWS load balancers.

Conclusion

Combining Amazon EKS with Karmada provides a powerful solution for multi-cluster Kubernetes management. This approach enables global high availability, regulatory compliance, and cost optimization while simplifying operations through a unified control plane.

As organizations continue to expand their Kubernetes footprint, multi-cluster management becomes essential. By following the steps and best practices outlined in this article, you can build a robust, scalable Kubernetes platform that spans multiple regions and even multiple cloud providers.

Remember that multi-cluster management is a journey, not a destination. Start with a simple setup, gain operational experience, and gradually expand your multi-cluster capabilities as your organization's needs evolve.
