AWS recently announced support for PartiQL, a SQL-compatible query language, for working with data in Amazon DynamoDB.

When this launched, I was excited because it meant I could write my queries in a familiar SQL-like syntax rather than using the Document Client API.

In this article, I compare querying and inserting data into DynamoDB using PartiQL versus using the Document Client. I also touch on the performance and features of each approach. All code examples use the AWS JavaScript SDK with Node.js.

Quick introduction to the Document Client and PartiQL

PartiQL

Amazon DynamoDB supports PartiQL, an SQL-compatible query language to select, insert, update, and delete data in DynamoDB. PartiQL operations provide the same availability, latency, and performance as the other DynamoDB data operations. For the PartiQL specification and a tutorial on the core query language, see the PartiQL documentation.

Document Client

The DynamoDB Document Client simplifies working with the DynamoDB methods by abstracting away the notion of attribute values. Basically, you can pass JavaScript objects directly to DynamoDB methods, and the Document Client will map the JavaScript types to DynamoDB attribute types.

For example, the two snippets below get the same item from a DynamoDB table. They show how the params object differs between the Document Client and the direct DynamoDB method.

This example uses the DynamoDB documentClient get method.

const documentClient = new AWS.DynamoDB.DocumentClient();

const params = {

TableName: "Movies",

Key:{

"year": 2020,

"title" : "A movie"

}

};

documentClient.get(params, function(err, data) {

if (err) console.log(err);

else console.log(data);

});

And this example below is using the DynamoDB getItem method directly.

const dynamoDb = new AWS.DynamoDB();

const params = {

TableName: "Movies",

Key:{

"year":{

N: "2020"

},

"title" : {

S: "A movie"

}

}

};

dynamoDb.getItem(params, function(err, data) {

if (err) console.log(err);

else console.log(data);

});
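To make the difference between the two params objects concrete, here is a simplified sketch of the kind of mapping the Document Client does for you. The `toAttributeValue` and `toAttributeMap` helpers are hypothetical names written only for illustration and cover just a few types; this is not the SDK's implementation, and in real code the SDK's `AWS.DynamoDB.Converter.marshall` does this job.

```javascript
// Simplified illustration only: NOT the SDK's implementation.
// Converts a plain JS value into DynamoDB's attribute-value format.
function toAttributeValue(value) {
  if (value === null) return { NULL: true };
  if (typeof value === "string") return { S: value };
  if (typeof value === "number") return { N: String(value) }; // numbers are sent as strings
  if (typeof value === "boolean") return { BOOL: value };
  if (Array.isArray(value)) return { L: value.map(toAttributeValue) };
  if (typeof value === "object") {
    const map = {};
    for (const [key, inner] of Object.entries(value)) {
      map[key] = toAttributeValue(inner);
    }
    return { M: map };
  }
  throw new TypeError(`Unsupported type: ${typeof value}`);
}

// Convert every top-level attribute of an item or key object.
function toAttributeMap(item) {
  const result = {};
  for (const [name, value] of Object.entries(item)) {
    result[name] = toAttributeValue(value);
  }
  return result;
}

console.log(JSON.stringify(toAttributeMap({ year: 2020, title: "A movie" })));
// → {"year":{"N":"2020"},"title":{"S":"A movie"}}
```

The printed object has the same shape as the Key in the getItem example above.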

Getting started

To follow along with this article and try the examples on your machine, you can clone this Node.js GitHub repo and run the getting-started command, which will set up a DynamoDB table and upload sample data to that table. The table and sample data are very similar to the AWS docs example.

The getting-started command of my example repo will create a simple DynamoDb table called Movies that has a list of movies with the following attributes:

- Partition key: year (number)

- Sort key: title (string)

I created the table in the us-east-1 region, so if you are following along in a different region, you will need to update the region in the example code. At the time of writing this post, PartiQL is supported in 23 regions as per the announcement post.

If you've set everything up, you should see the following data in your Movies table preview in the console UI.

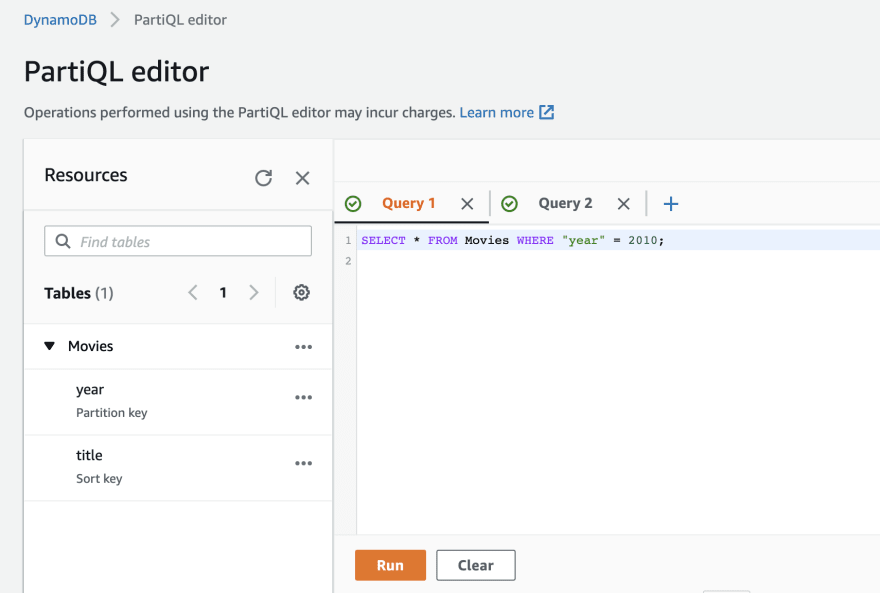

Querying the data using PartiQL Editor in the console

Before writing any code, let's test the data using the PartiQL editor in the console UI. You will need to use the new console UI preview to see this view.

We can start by writing a simple Select Query as shown below to query all movies from the year 2010.

SELECT * FROM Movies WHERE "year" = 2010;

The query above reads the Movies table and filters on the partition key "year". Since the partition key is a number, we can pass 2010 as a number without quotes.

Another example below is querying all the movies from 2010, where the movie title contains the word 'and'.

SELECT * FROM Movies WHERE "year" = 2010

and contains("title", 'and');

Important tips to keep in mind when writing PartiQL statements

- Use single quotes for strings.

- Use double-quotes for field names, variables, and reserved words.

- Don't forget to include a WHERE clause condition that specifies a partition key to avoid having your SELECT statement perform an expensive full table scan. For more information, you can refer to the Select Statement reference.

Side Tip

If you are worried about accidentally writing PartiQL queries that run full table scans, you can set an IAM policy for your user that denies full table scans. Once the policy is set, any query that would scan the whole table fails with an exception instead. Read more here.
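As a rough sketch of what such a policy can look like, the snippet below builds one as a plain JavaScript object and prints it as JSON. It denies the `dynamodb:PartiQLSelect` action whenever the `dynamodb:FullTableScan` condition key is true. The account ID in the ARN is a placeholder, and since a Deny statement grants nothing by itself, the sketch assumes the user already has an Allow for the table.

```javascript
// Placeholder ARN: replace the region, account ID, and table name with your own.
const tableArn = "arn:aws:dynamodb:us-east-1:123456789012:table/Movies";

// Deny PartiQL SELECT statements that would result in a full table scan.
const denyFullTableScans = {
  Version: "2012-10-17",
  Statement: [
    {
      Effect: "Deny",
      Action: ["dynamodb:PartiQLSelect"],
      Resource: [tableArn],
      Condition: {
        Bool: { "dynamodb:FullTableScan": ["true"] },
      },
    },
  ],
};

// Print the policy document so it can be pasted into the IAM console.
console.log(JSON.stringify(denyFullTableScans, null, 2));
```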

Using the Javascript SDK to run operations on the table

Now that we have the sample data uploaded in the table and quickly tested the PartiQL editor on the console, let's see how we can use the Javascript SDK to run operations on the table using both the Document Client and the PartiQL statements.

As mentioned above, you can view all the examples below in full, including the necessary try/catch blocks, in this GitHub repository. If you followed the setup steps, you can run the same examples on your machine using the node command. Refer to the repo README file.

Querying the table

Example query with the Document Client

Let's start with an example of how a query can be done using the Document Client.

//query-documentAPI.js

const documentClient = new AWS.DynamoDB.DocumentClient();

async function queryWithDocumentClient({year, title}) {

const params = {

TableName : "Movies",

ProjectionExpression:"#yr, title, info",

KeyConditionExpression: "#yr = :yyyy and title = :title",

ExpressionAttributeNames:{

"#yr": "year"

},

ExpressionAttributeValues: {

":yyyy": year,

":title": title

}

};

const results = await documentClient.query(params).promise();

// return the matching items so the caller can use them

return results.Items;

}

In the above example, we form the params object, which contains the following:

- ProjectionExpression specifies the attributes you want in the result. In this example, I selected all attributes.

- KeyConditionExpression specifies the condition we want our query to use. Notice that we've used an alias '#yr' for the key 'year' because 'year' is a reserved keyword in DynamoDB.

- ExpressionAttributeNames lets us map the alias '#yr' to the actual word 'year'.

- ExpressionAttributeValues allows us to put the values and map them to the alias keys we wrote in the KeyConditionExpression.

Example query with PartiQL

Let's now see how we can write the same query in PartiQL.

//query-partiQL.js

const dynamoDB = new AWS.DynamoDB();

async function queryWithPartiQL({year, title}) {

const statement = `SELECT * FROM Movies WHERE "year" = ${year} and "title" = '${title}' `

const results = await dynamoDB.executeStatement({Statement: statement}).promise();

// items come back in the DynamoDB attribute-value format

return results.Items;

}

In the above example, we form our SQL statement as a string and then use the executeStatement method to run it. This returns the item in the DynamoDB attribute-value format, so if we want to convert the first item in the results back to a JS object, we can use AWS.DynamoDB.Converter.unmarshall(results.Items[0]).
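Interpolating values straight into the statement text breaks as soon as a title contains a single quote. The executeStatement method also accepts a Parameters array that fills `?` placeholders, so a hedged alternative could look like the sketch below. The function names are mine, and the client is passed in as an argument (assumed to be an AWS.DynamoDB instance from SDK v2) so that the statement builder can be inspected without calling AWS.

```javascript
// Build the ExecuteStatement input with ? placeholders instead of
// interpolating values into the statement text.
function buildSelectInput({ year, title }) {
  return {
    Statement: 'SELECT * FROM Movies WHERE "year" = ? AND "title" = ?',
    // Parameters use the attribute-value format, in placeholder order.
    Parameters: [{ N: String(year) }, { S: title }],
  };
}

// `dynamoDB` is assumed to be an AWS.DynamoDB instance (SDK v2).
async function queryWithParameters(dynamoDB, key) {
  const results = await dynamoDB.executeStatement(buildSelectInput(key)).promise();
  return results.Items;
}

// The quote in the title needs no escaping because it never enters the statement.
console.log(buildSelectInput({ year: 1998, title: "A bug's life" }));
```

The returned items are still in attribute-value format, so AWS.DynamoDB.Converter.unmarshall applies here as well.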

Inserting an item to the table

Example insert item with the Document Client

Let's start with an example of how an insert can be done using the Document Client.

//insert-documentAPI.js

const documentClient = new AWS.DynamoDB.DocumentClient();

async function insertWithDocumentClient(movie) {

const params = {

TableName: "Movies",

Item: movie

};

await documentClient.put(params).promise();

};

const movieDetails = {

year: 2020,

title: "The Big New Movie",

info:{

plot: "Nothing happens at all.",

rating: 0

}

}

insertWithDocumentClient(movieDetails);

In this approach, inserting a single item with the Document Client is straightforward. We can pass the movie object as a JS object and construct our params object, which we pass to the put method.

You can also add additional keys to the params object such as ConditionExpression if you want to have your PutItem operation run only if a specific condition is met, e.g., attribute_not_exists.
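For instance, a put that refuses to overwrite an existing movie could be built as in the sketch below. `buildConditionalPut` is a hypothetical helper, and checking `attribute_not_exists` on the partition key is just one common way to express "only if the item is new".

```javascript
// Build params for a put that only succeeds when no item with the
// same primary key exists in the table yet.
function buildConditionalPut(movie) {
  return {
    TableName: "Movies",
    Item: movie,
    // "year" is a reserved word, so it is referenced through an alias.
    ConditionExpression: "attribute_not_exists(#yr)",
    ExpressionAttributeNames: { "#yr": "year" },
  };
}

const conditionalParams = buildConditionalPut({ year: 2020, title: "The Big New Movie" });
console.log(conditionalParams.ConditionExpression);
// Passing conditionalParams to documentClient.put(...).promise() would reject
// with a ConditionalCheckFailedException if the movie is already in the table.
```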

Example insert item with PartiQL

Moving on to the next example, let's insert the same item with PartiQL. I found this part quite interesting when I was coding this example. Initially, PartiQL kept throwing an error about a badly formed statement.

The main reason for this issue is that a PartiQL statement expects strings to be in single quotes, as mentioned earlier. However, when you serialize a regular JS object to a string, for example with JSON.stringify, the strings end up wrapped in double quotes instead.

To fix this issue, I wrote a simple custom JSON-style stringifier that uses single quotes instead. It also escapes any single quotes in the text by doubling them. For example, if the movie's title is A bug's life, it becomes A bug''s life, which is how single quotes are usually escaped in SQL.

The code for this stringifier is in the utils folder in my GitHub repo. It is a simple implementation made only to demonstrate PartiQL in this article, so it doesn't handle every case. In production, you might prefer a well-tested JS library that can safely serialize values into PartiQL-compatible statements.
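To give an idea of what such a stringifier involves, here is a minimal sketch. It is not the implementation from the repo: `toPartiQLLiteral` is a hypothetical name, and it assumes the values are only strings, finite numbers, booleans, null, arrays, and plain objects.

```javascript
// Hypothetical sketch, not the repo's implementation.
// Serializes a JS value as a PartiQL literal: strings in single quotes
// (with embedded single quotes doubled), objects as {'key': value} tuples.
function toPartiQLLiteral(value) {
  if (value === null) return "NULL";
  if (typeof value === "string") return `'${value.replace(/'/g, "''")}'`;
  if (typeof value === "number" || typeof value === "boolean") return String(value);
  if (Array.isArray(value)) return `[${value.map(toPartiQLLiteral).join(", ")}]`;
  if (typeof value === "object") {
    const fields = Object.entries(value).map(
      ([key, inner]) => `${toPartiQLLiteral(key)}: ${toPartiQLLiteral(inner)}`
    );
    return `{${fields.join(", ")}}`;
  }
  throw new TypeError(`Unsupported type: ${typeof value}`);
}

console.log(toPartiQLLiteral({ year: 1998, title: "A bug's life" }));
// → {'year': 1998, 'title': 'A bug''s life'}
```

The output can be dropped after VALUE in an INSERT statement. Values such as undefined are rejected rather than silently skipped, which seemed the safer choice for a sketch.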

//insert-partiQL.js

const { stringify } = require('../utils/stringify')

const dynamoDB = new AWS.DynamoDB();

async function insertWithPartiQL(movie) {

// stringify object to what PartiQL will accept

const item = stringify(movie);

await dynamoDB.executeStatement(

{

Statement: `INSERT INTO Movies VALUE ${item}`

}).promise();

};

const movieDetails = {

year: 2020,

title: "The Big New Movie",

info:{

plot: "Nothing happens at all.",

rating: 0

}

}

insertWithPartiQL(movieDetails);

Batch Insert into a table

In addition to inserting and querying data, let's quickly go over an example for batch insert operations.

Example batch insert with the Document Client

In the Document Client, batch insert can be done using the batchWrite method. This method delegates to the AWS.DynamoDB batchWriteItem method, but the advantage of the Document Client version is that we can use native JavaScript types instead of having to specify the DynamoDB attribute types for each field.

//batch-documentAPI.js

const documentClient = new AWS.DynamoDB.DocumentClient();

async function uploadBatchMoviesData(listOfMovies) {

const items = listOfMovies.map((movie)=> {

return {

PutRequest: {

Item: {

year: movie['year'],

title: movie['title'],

info: movie['info']

}

}

}

});

for (let i = 0; i < items.length; i += 25) {

const upperLimit = Math.min(i + 25, items.length);

const batch = items.slice(i, upperLimit);

const params = {

RequestItems: {

'Movies': batch

}

};

await documentClient.batchWrite(params).promise();

}

};

const listOfMovies = createFakeMovies(500);

uploadBatchMoviesData(listOfMovies);

In the above example, we first iterate over the list of movies to construct the PutRequest body for each one. We then iterate over this array of items and slice off 25 items for each batch request. This is because a single call to BatchWriteItem can have as many as 25 put or delete requests, and each item can be as large as 400 KB. Therefore, we batch 25 movies in each call until we have uploaded the whole list.

The Document Client BatchWrite method delegates to the DynamoDb BatchWriteItem, so there are some caveats which you need to consider:

- BatchWriteItem cannot update items (it replaces the whole existing item), so if you want to update each item individually, use the UpdateItem method.

- The response you get back from this method will contain an array of unprocessed items if some items failed. However, you will not get an individual response per item. Also, the whole batch write operation might fail even if only one of your items contains a wrong primary key attribute.

- You cannot specify conditions on each put request.

For more information on BatchWriteItem, you can refer to the docs.
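Because unprocessed items come back in the same shape as RequestItems, they can be sent again as they are. The sketch below shows one way to do that with a simple exponential backoff. The function name, attempt limit, and delay values are my own assumptions, and `documentClient` is assumed to be an AWS.DynamoDB.DocumentClient instance from SDK v2.

```javascript
// Sketch: resend whatever batchWrite reports as unprocessed.
// Returns the number of attempts it took to write everything.
async function batchWriteWithRetry(documentClient, requestItems, maxAttempts = 5, baseDelayMs = 100) {
  let pending = requestItems;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const response = await documentClient.batchWrite({ RequestItems: pending }).promise();
    const unprocessed = response.UnprocessedItems || {};
    if (Object.keys(unprocessed).length === 0) return attempt; // everything was written
    // UnprocessedItems has the same shape as RequestItems, so reuse it directly.
    pending = unprocessed;
    if (attempt < maxAttempts) {
      // Back off before retrying: baseDelayMs, then double it each time.
      await new Promise((resolve) => setTimeout(resolve, baseDelayMs * 2 ** (attempt - 1)));
    }
  }
  throw new Error(`Items still unprocessed after ${maxAttempts} attempts`);
}
```

In the batch loop above, this would replace the direct call, for example `await batchWriteWithRetry(documentClient, params.RequestItems)`.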

Example batch insert with PartiQL

PartiQL has some advantages over the Document Client in batch operations. We can write individual statements for inserts and updates and then use the batchExecuteStatement method to run them. The other advantage is that we get an individual response for each statement. However, one limitation is that the entire batch must consist of either read statements or write statements; you cannot mix both in one batch.

We also have the same limitation of 25 items in each batch request.

//batch-partiQL.js

const { stringify } = require('../utils/stringify')

const dynamoDB = new AWS.DynamoDB();

async function uploadBatchWithPartiQL(listOfMovies) {

const statements = listOfMovies.map((movie) => {

const item = stringify(movie);

return {"Statement": `INSERT INTO Movies VALUE ${item}`}

})

for (let i = 0; i < statements.length; i += 25) {

const upperLimit = Math.min(i + 25, statements.length);

const batch = statements.slice(i, upperLimit);

const response = await dynamoDB.batchExecuteStatement({

Statements: batch

}).promise();

}

}

const listOfMovies = createFakeMovies(500);

uploadBatchWithPartiQL(listOfMovies)

As with inserting a single item using PartiQL, we stringify each movie using our custom stringifier to ensure single quotes are used for strings in the object. We then build an INSERT statement for each movie, split the statements into batches of 25, and pass each batch to the batchExecuteStatement method.

Keep in mind that with both PartiQL and the Document Client, if the same batch (the batch of 25 items) contains two items with the same partition key and sort key, then that whole batch call will fail.

On the other hand, if the duplicate item is inserted in a separate batch, the two approaches differ. With PartiQL batchExecuteStatement, only the insert statement for that item fails, because the item already exists in the table (same partition key and sort key). This is not the case with the Document Client batchWrite, which replaces the whole item if it already exists.
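Since batchExecuteStatement returns one entry per statement, in the same order as the request, failed statements can be picked out after each call. The sketch below assumes that response shape; `collectFailures` is a hypothetical helper, and the sample response is hand-written for illustration rather than captured from a real call.

```javascript
// Sketch: pair each statement with its entry in Responses and collect failures.
// Responses[i] is assumed to correspond to Statements[i] and to carry an
// Error object ({ Code, Message }) when that statement failed.
function collectFailures(batch, response) {
  const failures = [];
  (response.Responses || []).forEach((entry, index) => {
    if (entry.Error) {
      failures.push({
        statement: batch[index].Statement,
        code: entry.Error.Code, // e.g. "DuplicateItem" when the key already exists
        message: entry.Error.Message,
      });
    }
  });
  return failures;
}

// Hand-written sample data, only to show the shape of the result.
const sampleBatch = [
  { Statement: "INSERT INTO Movies VALUE {'year': 2020, 'title': 'A'}" },
  { Statement: "INSERT INTO Movies VALUE {'year': 2020, 'title': 'B'}" },
];
const sampleResponse = {
  Responses: [
    { TableName: "Movies" },
    { TableName: "Movies", Error: { Code: "DuplicateItem", Message: "illustrative message" } },
  ],
};

console.log(collectFailures(sampleBatch, sampleResponse));
```

Only the second statement is reported, which mirrors how a duplicate insert fails on its own without affecting the rest of the batch.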

Performance Comparison

In short, there is no noticeable performance difference between the Document Client and PartiQL. Both are fast and take almost the same time for the same type of operation. If you run the examples on your machine, you can see the timing of each operation yourself; I've added console.log timing output to most files.

When I ran the same operation multiple times, the two approaches differed by no more than 100-300 ms per operation, and in some cases PartiQL ran at the same speed or faster.

One thing to note is that the batch write examples above run each batch sequentially, since we await each call inside a for-loop. However, suppose your table uses on-demand capacity, or you've provisioned your table with a higher write capacity. In that case, it is much faster to send the batches concurrently using Promise.all, as in the example code shown below.

Previously, both the Document Client and PartiQL would take 23 seconds to insert 500 items. However, using Promise.all, both methods would take less than 1.5 seconds (on my machine) to insert 500 items.

const dynamoDB = new AWS.DynamoDB();

async function uploadBatchWithPartiQL(listOfMovies) {

const statements = listOfMovies.map((movie) => {

const item = stringify(movie);

return {"Statement": `INSERT INTO Movies VALUE ${item}`}

})

let promises = []

for (let i = 0; i < statements.length; i += 25) {

const upperLimit = Math.min(i + 25, statements.length);

const batch = statements.slice(i, upperLimit);

promises.push(dynamoDB.batchExecuteStatement({

Statements: batch

}).promise());

}

// wait for all batch calls, which are already running concurrently

await Promise.all(promises);

}

const listOfMovies = createFakeMovies(500);

uploadBatchWithPartiQL(listOfMovies)

Conclusion and should you use PartiQL?

Whether to include PartiQL in your codebase comes down to what you are comfortable using. There is no performance penalty with either option. AWS provided PartiQL because AWS naturally likes to offer more than one way to communicate with DynamoDB. They also wanted to provide a tool with familiar semantics for accessing the data.

PartiQL seems to have a slight advantage in batch operations compared to batchWrite from the Document Client, particularly the ability to do batch updates. So if you work extensively with batch operations, it might be worth checking whether PartiQL improves your use case.

One question you might have is whether using PartiQL is a good idea with JavaScript. I went over the importance of differentiating between single quotes and double quotes, which can be tricky to manage in JS. To handle that in the examples above, I had to implement a custom stringifier to create correct PartiQL statements. My answer would be "it depends" on whether the appeal of writing your queries in a SQL-like format outweighs using the Document Client, which is also a great way to run operations on DynamoDB items.

At the time of writing, the AWS DynamoDB PartiQL documentation only lists examples written in Java and the AWS CLI. As this is a newly released feature, I'm sure AWS will add more support, and maybe the open-source community will add a useful utility to make JavaScript types compatible with PartiQL statements.

Further Resources

Do you like working with DynamoDb and want to learn more? Here are some resources I recommend.

- DynamoDb DocumentClient cheat sheet by Nader Dabit.

- The DynamoDb Book by Alex DeBrie.

- Useful tools to make working with DynamoDb easier:

- NoSQL Workbench for DynamoDB: A free client-side GUI application.

- Dynobase: Professional GUI Client for DynamoDB (paid).

I hope you enjoyed this article and learned a thing or two about DynamoDB. If you have any questions, let me know in the comments below or on Twitter.

Top comments (4)

How would this work with single table designs? Because "Where" maps to a table, in a single-table pattern, would the syntax be less useful?

Using single table patterns, where you can read an item collection of multiple related (joined) items in a single read. With PartiQL, you seem to lose this ability.

So it seems to me that PartiQL would be slower for more demanding cases. Perhaps it is best for smaller, simpler apps?

Thanks for your comment Michael.

Actually, I think PartiQL will work quite well for single table patterns, mainly because the WHERE clause maps to a partition key (whether it's the primary partition key or a global secondary index). I don't know how complex your queries normally are, but for example, if I have a table I can easily write a simple query as follows:

In fact, it is the multi-table design that is not easy to implement in a single query, because I don't think the PartiQL implementation in DynamoDB supports sub-queries at the moment. However, multi-table operations can be facilitated using DynamoDB transactions.

In terms of performance, PartiQL performs the same as the existing DynamoDB methods. There was a Twitch session (DynamoDB office hours by Rick Houlihan) around the 10th of December 2020, where Rick compared the performance of PartiQL and the DynamoDB API in a Java app running thousands of batch records, and the performance was the same.

I wanted to link to that Twitch session, but it seems it's currently archived. I already pinged Rick last week to see if they can put it back online.

This is helpful. My feedback: It looks nice. It's very nice that PartiQL is open source.

Thank you. Glad you liked it.