LINQ lets us query multiple entities from Azure Table Storage. However, a single query returns at most 1,000 entities, even with the LINQ Take operator (MS doc), so retrieving more than that requires extra code to follow continuation tokens.
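To make the 1,000-entity limit concrete, here is a minimal Python sketch of the paging loop you would otherwise have to write. It does not call Azure at all: `make_fake_table` is a hypothetical in-memory stand-in, and the integer token is a simplification of the real continuation token, which Table Storage returns as a next partition key and row key.

```python
from typing import Callable, Iterator, List, Optional, Tuple

PAGE_LIMIT = 1000  # Table Storage returns at most 1,000 entities per response

# Hypothetical callback: takes a continuation token (None for the first page)
# and returns (entities, next_token); next_token is None on the last page.
PageFetcher = Callable[[Optional[int]], Tuple[List[dict], Optional[int]]]


def fetch_all(fetch_page: PageFetcher) -> Iterator[dict]:
    """Yield every entity by following continuation tokens until none is left."""
    token: Optional[int] = None
    while True:
        entities, token = fetch_page(token)
        yield from entities
        if token is None:
            break


def make_fake_table(total: int) -> PageFetcher:
    """In-memory stand-in for a table holding `total` entities (illustration only)."""
    rows = [{"RowKey": str(i)} for i in range(total)]

    def fetch_page(token: Optional[int]) -> Tuple[List[dict], Optional[int]]:
        start = token or 0
        end = min(start + PAGE_LIMIT, total)
        next_token = end if end < total else None
        return rows[start:end], next_token

    return fetch_page


if __name__ == "__main__":
    entities = list(fetch_all(make_fake_table(2500)))
    print(len(entities))  # 2500, gathered over three pages
```

Ingesting the table into Data Explorer, as described in the rest of this post, avoids maintaining this kind of loop for analysis queries.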

This post shows how to ingest data from Table Storage into Azure Data Explorer via Azure Data Factory, so that you can query large numbers of entities with Kusto.

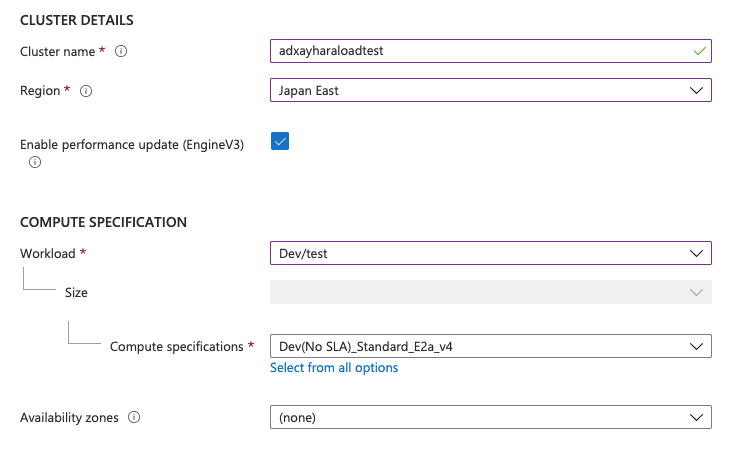

Step 1: Create Azure Data Explorer

- Create Azure Data Explorer

Here is an example setting:

- Go to the resource and click "Create database"

- Create Azure Data Explorer Database (e.g. Database name: loadtest)

- Click the database (e.g. "loadtest") and select "Query"

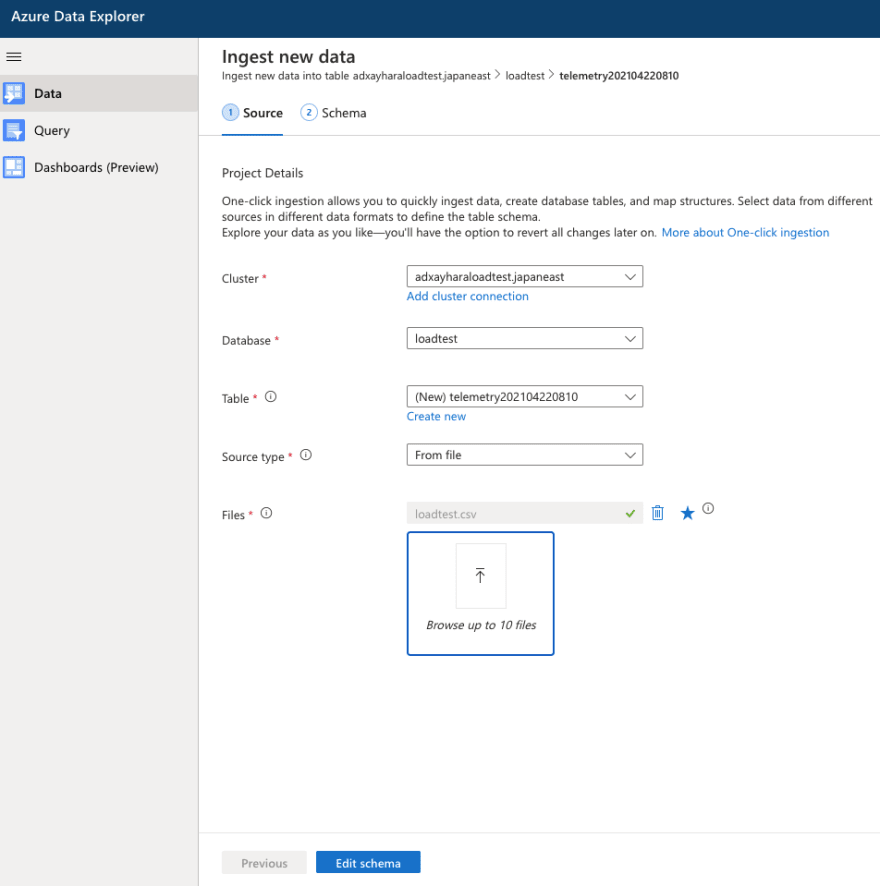

- Click "Ingest new data"

- Create table in the database and ingest data

Note: The table name should not include a dash (-)

- Click "Edit schema"

- Select "Ignore the first record"

- Make sure all data types are correct

- Click "Start ingestion"

Note: Copy the ingestion mapping name; you will need it when configuring the sink in Step 4-3
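If you would rather script the table creation than use the wizard, the note about table names can be turned into a small check. The sketch below is only an illustration: it validates a name and builds a Kusto `.create table` command as a string, which you would then paste into the "Query" pane. The table name `LoadTestResults` and the `DurationMs` column are hypothetical, and the name rule is deliberately stricter than Kusto itself so that no quoting is ever needed.

```python
import re

# Plain identifier: letters, digits and underscores, not starting with a digit.
# Assumption: stricter than Kusto requires, chosen so names never need quoting.
_SAFE_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def is_safe_table_name(name: str) -> bool:
    """Return True if the name can be used in queries without quoting."""
    return _SAFE_NAME.fullmatch(name) is not None


def create_table_command(table: str, columns: dict) -> str:
    """Build a `.create table` command from a {column name: Kusto type} mapping."""
    if not is_safe_table_name(table):
        raise ValueError(f"table name {table!r} contains unsupported characters")
    cols = ", ".join(f"{name}: {kusto_type}" for name, kusto_type in columns.items())
    return f".create table {table} ({cols})"


if __name__ == "__main__":
    # PartitionKey, RowKey and Timestamp exist on every Table Storage entity;
    # DurationMs is a made-up property for this example.
    schema = {
        "PartitionKey": "string",
        "RowKey": "string",
        "Timestamp": "datetime",
        "DurationMs": "long",
    }
    print(create_table_command("LoadTestResults", schema))
```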

Step 2: Create Azure Data Factory

- Create Azure Data Factory

Step 3: Prepare Azure Active Directory

- Go to Azure Active Directory

- Click "App registrations" and register an application

- Go to "Certificates & secrets" and add a client secret

Note: Don't forget to copy the secret

- Go to Azure Data Explorer and click "Permissions"

- Add the service principal just created
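The same permission can also be granted with a Kusto management command instead of the "Permissions" blade. The helper below is a rough sketch that only formats that command as a string; the application and tenant IDs are placeholders, and choosing the `ingestors` role is an assumption on my part (it should be enough for a copy sink, but use whichever role you picked in the portal).

```python
# Database-level roles accepted by the `.add database` command.
DATABASE_ROLES = {
    "admins", "ingestors", "monitors", "unrestrictedviewers", "users", "viewers",
}


def add_principal_command(database: str, role: str, app_id: str, tenant_id: str) -> str:
    """Format a command that adds an AAD application to a database role."""
    if role not in DATABASE_ROLES:
        raise ValueError(f"unknown database role: {role!r}")
    return f".add database {database} {role} ('aadapp={app_id};{tenant_id}')"


if __name__ == "__main__":
    # Placeholder IDs: replace with the Application (client) ID and tenant ID
    # of the app registered above.
    print(add_principal_command(
        "loadtest", "ingestors", "<application-client-id>", "<tenant-id>"
    ))
```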

Step 4: Set Azure Data Factory to copy data from Azure Table Storage

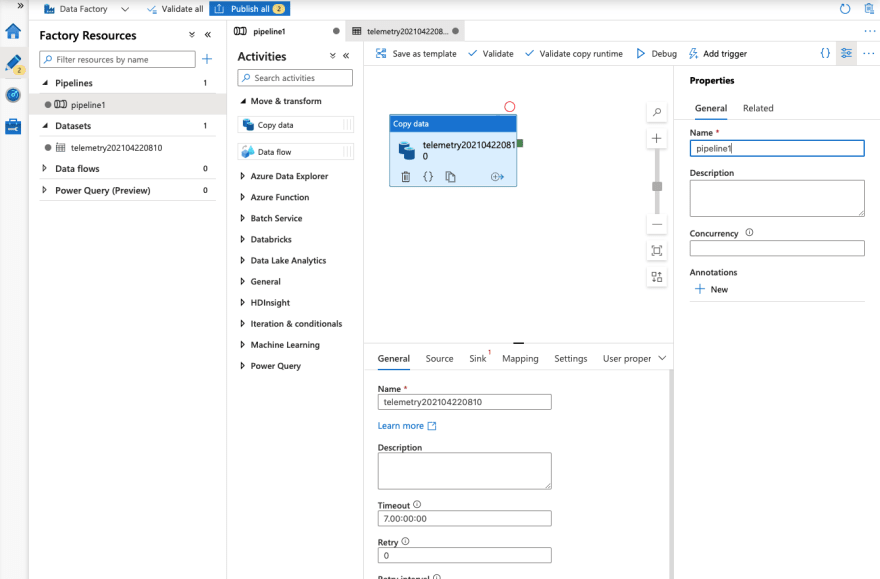

Step 4-1: Create a base pipeline on Azure Data Factory

- Create Data Factory (You can select "Configure Git later")

- Go to resource

- Click "Author & Monitor"

- Click "Author (pencil icon)"

- Click "Add new resource (plus icon)" and select "Pipeline"

- Click "Move & transform" and drag "Copy data" to the right pane

- Set General in the bottom pane

Step 4-2: Set input data (Source) from Table Storage

- Click "Add new resource (plus icon)" again and select "Dataset"

- Search "Azure Table Storage" and click "Continue"

- Click "New" in the bottom pane and set the linked service (Table Storage)

- Select the Table Storage account from your Azure subscription or enter it manually

- Click "Test connection"

- Click "Create"

- Select the table you want to copy from the drop-down list and update the dataset name in the right pane

- Go back to the pipeline settings and select the dataset in the "Source" section in the bottom pane

Step 4-3: Set output data (Sink) to Data Explorer

- Click "Add new resource (plus icon)" again and select "Dataset"

- Search "Azure Data Explorer" and click "Continue"

- Click "New" in the bottom pane and set the linked service (Data Explorer)

- Select the Data Explorer cluster from your Azure subscription or enter it manually

- Enter your service principal ID (the Application (client) ID of the registered app, e.g. "sp-adf-ayhara-loadtest") and the client secret you copied earlier

- Select Data Explorer database

- Click "Test connection"

- Click "Create"

- Select the destination table from the drop-down list and update the dataset name in the right pane

- Go back to the pipeline settings and select the dataset, table, and ingestion mapping name in the "Sink" section in the bottom pane

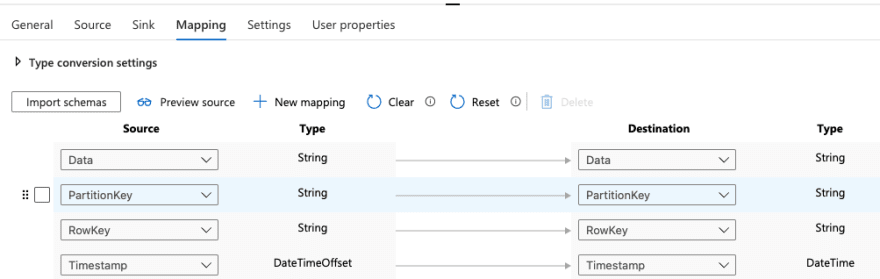
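For reference, the Data Explorer linked service configured above ends up as a JSON definition behind the UI. The following Python sketch builds a definition of that shape as a plain dict; the property names follow the Azure Data Explorer connector format as I understand it, while the linked service name and all angle-bracket values are placeholders rather than anything taken from this walkthrough.

```python
import json


def adx_linked_service(name, endpoint, database, tenant, sp_id, sp_key):
    """Build an Azure Data Explorer linked service definition as a plain dict."""
    return {
        "name": name,
        "properties": {
            "type": "AzureDataExplorer",
            "typeProperties": {
                "endpoint": endpoint,
                "database": database,
                "tenant": tenant,
                "servicePrincipalId": sp_id,
                # The client secret copied in Step 3
                "servicePrincipalKey": {"type": "SecureString", "value": sp_key},
            },
        },
    }


if __name__ == "__main__":
    definition = adx_linked_service(
        name="ls_adx_loadtest",  # hypothetical linked service name
        endpoint="https://<cluster>.<region>.kusto.windows.net",
        database="loadtest",
        tenant="<tenant-id>",
        sp_id="<application-client-id>",
        sp_key="<client-secret>",
    )
    print(json.dumps(definition, indent=2))
```

Comparing this output with the JSON shown by the "{ }" code view of your own linked service is a quick way to spot a missing tenant or database value.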

Step 4-4: Set mapping

- Click "Import schemas"

- Check that the mapping is correct
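Putting Steps 4-1 to 4-4 together, the Copy activity is stored in the pipeline as JSON that names a source, a sink, and the ingestion mapping. The sketch below builds an activity of that shape in Python so the relationship between the pieces is easier to see. The dataset names, activity name, and mapping name are hypothetical; `AzureTableSource` and `AzureDataExplorerSink` are the connector type names as I understand them, so treat the output as a guide to compare against your own pipeline rather than something to deploy as is.

```python
import json


def copy_activity(name, source_dataset, sink_dataset, mapping_name):
    """Build a Copy activity from Table Storage to Data Explorer as a plain dict."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "AzureTableSource"},
            "sink": {
                "type": "AzureDataExplorerSink",
                # The mapping name copied at the end of Step 1
                "ingestionMappingName": mapping_name,
            },
        },
    }


if __name__ == "__main__":
    activity = copy_activity(
        name="CopyTableToDataExplorer",       # hypothetical activity name
        source_dataset="ds_table_storage",    # hypothetical dataset names
        sink_dataset="ds_data_explorer",
        mapping_name="<ingestion-mapping-name>",
    )
    print(json.dumps(activity, indent=2))
```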

Step 5: Ingest data from Table Storage into Data Explorer database

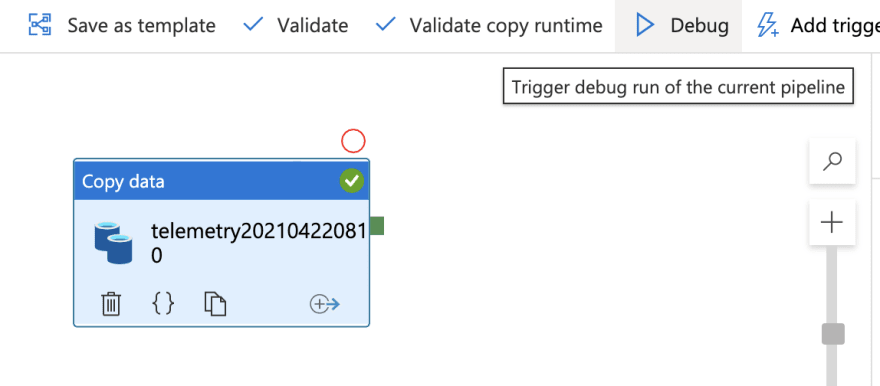

- Click "Debug"

- Click "Details (glasses icon)" if you want to see the progress

- Once the data has been copied successfully:

- Go to Data Explorer and run a query to check the ingested data

- Go back to Data Factory and click "Validate All"

- Click "Publish all" if there are no errors

Now, you're ready to query data ingested from Azure Table Storage with Kusto.

Next step - Query table data in Azure Data Explorer with Kusto to analyse load test results
