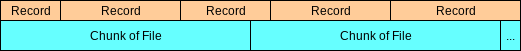

For a recent project, I needed to read data from a specific chunk of a file. The data was a sequence of serialized records, so I used a bufio Scanner for splitting. Scanners are great, but they obscure the exact number of bytes read. In working through the problem, I found a solution that worked quite nicely.

Essentially, the problem boiled down to this: I wanted to use bufio’s Scanner to cleanly split data records, but I also needed fine-grained control over exactly how many bytes were being read. Simply counting the bytes in each record is insufficient, because records may be split using variable-width delimiters (e.g. \n vs. \r\n).[1]
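To make the delimiter problem concrete, here is a minimal sketch. The `naiveCount` helper is hypothetical (it is not part of the original project); it assumes every line ends in a one-byte delimiter and adds 1 to each record's length.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// naiveCount (hypothetical helper) estimates the bytes consumed by assuming
// every line ends in a single-byte delimiter.
func naiveCount(input string) int {
	scanner := bufio.NewScanner(strings.NewReader(input)) // default split: ScanLines
	total := 0
	for scanner.Scan() {
		total += len(scanner.Bytes()) + 1 // assumes a one-byte "\n"
	}
	return total
}

func main() {
	input := "a\r\nb\n" // 5 bytes: one CRLF-terminated line, one LF-terminated line
	fmt.Println(len(input), naiveCount(input)) // 5 4
}
```

The estimate comes up one byte short because ScanLines strips the `\r` from the first record before the length is ever measured.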

My initial thought was to wrap the io.Reader that I was reading records from. The result looked something like this:

    type CountingReader struct {
        reader    io.Reader
        BytesRead int
    }

    func (r *CountingReader) Read(p []byte) (n int, err error) {
        n, err = r.reader.Read(p)
        r.BytesRead += n
        return n, err
    }

This method does work as advertised, in that it counts the number of bytes read from the reader. However, it doesn’t actually solve my problem: counting the number of bytes the Scanner has consumed as records. For example:

    func main() {
        buf := bytes.NewBuffer([]byte("foo bar baz"))
        reader := &CountingReader{reader: buf}
        scanner := bufio.NewScanner(reader)
        scanner.Split(bufio.ScanWords)
        scanner.Scan()
        fmt.Println(scanner.Text())   // "foo"
        fmt.Println(reader.BytesRead) // 11 :(
    }

(You can try this on the Go Playground)

The reason for this behavior, as I found out, is that bufio.Scanner maintains an internal buffer, which it fills in large chunks. My CountingReader could (correctly) say that 11 bytes had been read from it, even though only 4 bytes of data ("foo ") had been consumed by the Scanner to yield the first token.

My next thought was to implement a CountingScanner by wrapping or reimplementing bufio.Scanner to count processed bytes. Neither of these options was appealing. The io package also has LimitReader, which enforces a maximum number of bytes read. For my problem, this wouldn’t work; my reader needs to completely read the last record in the chunk of the file, even if it spans the cutoff point.
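As a quick illustration of why LimitReader falls short here, the sketch below caps the input at 5 bytes and scans for words. The `scanLimited` helper name and the cutoff value are assumptions made for the example.

```go
package main

import (
	"bufio"
	"fmt"
	"io"
	"strings"
)

// scanLimited (hypothetical helper) collects the words a Scanner yields when
// its input is capped with io.LimitReader.
func scanLimited(input string, limit int64) []string {
	limited := io.LimitReader(strings.NewReader(input), limit)
	scanner := bufio.NewScanner(limited)
	scanner.Split(bufio.ScanWords)

	var words []string
	for scanner.Scan() {
		words = append(words, scanner.Text())
	}
	return words
}

func main() {
	// The 5-byte cutoff lands inside "bar", so the last word is cut short.
	fmt.Printf("%q\n", scanLimited("foo bar baz", 5)) // ["foo" "b"]
}
```

The Scanner has no way of knowing the input was cut off, so it reports the partial record "b" as if it were a complete one.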

In the end, I tried wrapping the scanner’s SplitFunc. For reference, SplitFunc has the following signature:

    type SplitFunc func(data []byte, atEOF bool) (advance int, token []byte, err error)

Essentially, SplitFunc takes a slice of bytes and returns how many bytes forward the Scanner should advance along with the “token” extracted from the byte slice.

This advance return value is exactly what I want: how many bytes were truly processed by the Scanner.
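Since a SplitFunc is an ordinary function, it can be called directly to see what it reports. This small sketch calls bufio.ScanWords once on the same input as before; the `splitOnce` helper is only there for illustration.

```go
package main

import (
	"bufio"
	"fmt"
)

// splitOnce (hypothetical helper) runs ScanWords a single time and returns
// the advance count and the token as a string.
func splitOnce(data []byte) (int, string, error) {
	advance, token, err := bufio.ScanWords(data, false)
	return advance, string(token), err
}

func main() {
	advance, token, err := splitOnce([]byte("foo bar baz"))
	// The token is 3 bytes long, but advance also covers the trailing space.
	fmt.Println(advance, token, err) // 4 foo <nil>
}
```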

From here, I put together a simple struct that wraps a SplitFunc, and increments an internal counter each time a split is performed:

    type ScanByteCounter struct {
        BytesRead int
    }

    func (s *ScanByteCounter) Wrap(split bufio.SplitFunc) bufio.SplitFunc {
        return func(data []byte, atEOF bool) (int, []byte, error) {
            adv, tok, err := split(data, atEOF)
            s.BytesRead += adv
            return adv, tok, err
        }
    }

    func main() {
        data := []byte("foo bar baz")
        counter := ScanByteCounter{}
        scanner := bufio.NewScanner(bytes.NewBuffer(data))
        splitFunc := counter.Wrap(bufio.ScanWords)
        scanner.Split(splitFunc)
        for scanner.Scan() {
            fmt.Printf("Split Text: %s\n", scanner.Text())
            fmt.Printf("Bytes Read: %d\n\n", counter.BytesRead)
        }
        // Split Text: foo
        // Bytes Read: 4

        // Split Text: bar
        // Bytes Read: 8

        // Split Text: baz
        // Bytes Read: 11
    }

And the results were successful! Try this on the Go Playground

Here are some lessons learned:

- Function wrapping is a good way to extend the usefulness of standard library features

- Beware of making assumptions about how much data is being read from or written to buffers (especially when Readers/Writers are consumed by other interfaces)

I’m also curious whether there’s an official way to solve this problem. If there’s a cleaner solution, let me know! 😄

Cover: Pixabay

Footnotes

1. I also wanted to support the general case of an arbitrary SplitFunc, so anything based on delimiter size was out.
