My team and I were working on an open-source customer project in the aerospace domain that provided scientific data to students and university data scientists. After several attempts to build a classic Web UI application, we moved to a simplified bot flow. Here is why and how.

The project has a short and straightforward data response. It contains only essential data like:

- Coordinates.

- Event time frames.

- Source type, etc.

However, some messages link to a detailed report, which is a classic Web UI. Chatting with a bot feels more natural because it resembles communicating with a person.

Azure Healthcare Bot

Here is another good example of how a bot service can be convenient in daily life: Microsoft recently launched the Healthcare Bot Service.

- Overview of the Healthcare Bot service.

- Technical documentation that includes architecture overview, how to build it, and service concepts.

Introduction

In this article, I build a simplified version of a bot that aggregates data from the NASA API and gives the user a simple workflow and short data feedback. I also use the Azure Language Understanding (LUIS) service to improve and simplify the conversation flow.

The NASA Bot contains the following functions:

- Gather data from the NASA API.

- Aggregate and simplify data payload.

- Recognize the user input with Azure Language Understanding (LUIS). LUIS is covered in more detail below.

Before we dive into the details, let's examine when a bot is a better fit than a classic UI.

Using the bot approach may significantly improve the user experience if you have:

- Simplified and short data payload.

- Intuitive user interaction flow that looks like a Q&A dialog.

- No complex UI.

- Focus on content.

UI is better than a Bot if you have:

- Complex data payload.

- Complex UI forms (Admin Portals, Editors, Maps, News Portals, Calendars).

- Complex data visualization (Dashboards, Charts).

The best option may be to combine both approaches and use all benefits from a Bot and a classic UI.

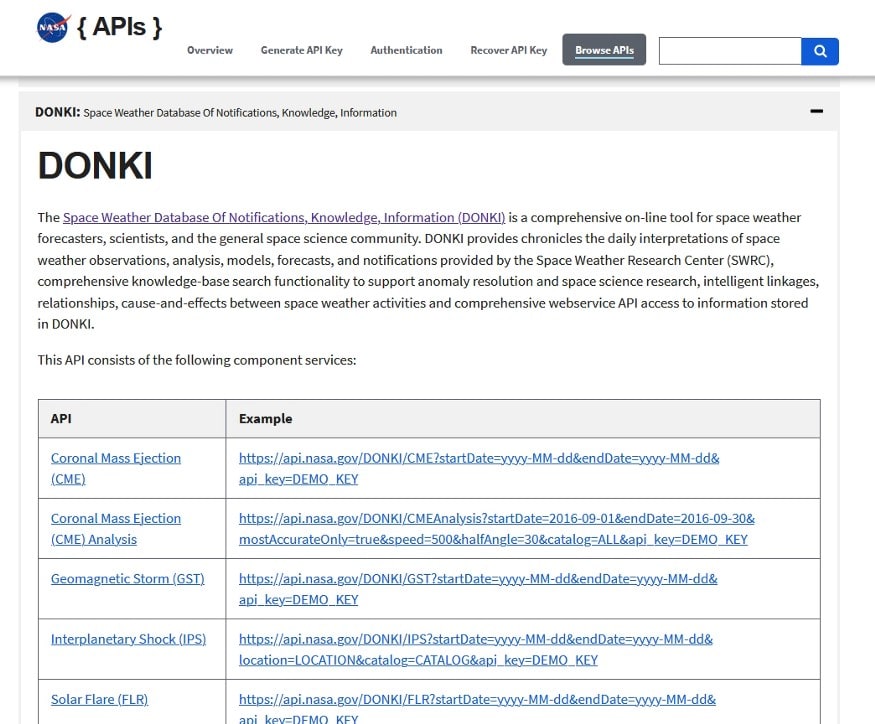

Why NASA API?

I chose the NASA API because it offers:

- A granular and quite simple API design.

- Well-documented API calls.

- Easy and fast registration process.

- API key request.

The NASA API is divided into categories, or sub-APIs, such as:

- Space Weather Database Of Notifications, Knowledge, Information (DONKI)

- Mars Weather Service API

- Two-line element data for Earth-orbiting objects at a given point in time (TLE API)

- Satellite Situation Center
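To give a feel for how granular these calls are, here is a minimal sketch of building a request URL for the DONKI solar flare endpoint. It is written in Python (one of the languages the Bot Framework supports) rather than taken from the project's C# code, and the helper name `build_donki_url` is hypothetical. `DEMO_KEY` is NASA's public demo key; replace it with the key you receive after registration.

```python
from datetime import date
from urllib.parse import urlencode

NASA_API_BASE = "https://api.nasa.gov"


def build_donki_url(event_type: str, start: date, end: date,
                    api_key: str = "DEMO_KEY") -> str:
    """Build a DONKI request URL (hypothetical helper).

    event_type is the DONKI event code, e.g. "FLR" for solar flares.
    """
    query = urlencode({
        "startDate": start.isoformat(),  # DONKI expects yyyy-MM-dd
        "endDate": end.isoformat(),
        "api_key": api_key,
    })
    return f"{NASA_API_BASE}/DONKI/{event_type}?{query}"


url = build_donki_url("FLR", date(2020, 1, 1), date(2020, 1, 31))
print(url)
```

The resulting URL can be passed to any HTTP client; the response is a JSON array of events.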

Why Azure Bot Service?

Azure Bot Service, together with the Bot Framework, lets you build, test, and store your bot. The Bot Framework includes tools, an SDK, AI services, and templates that allow you to build a bot quite quickly.

In my case, I installed the toolset, SDK, and templates, and then created the project in Visual Studio from a template. I used .NET Core and C#, but the framework also supports JavaScript and Python.

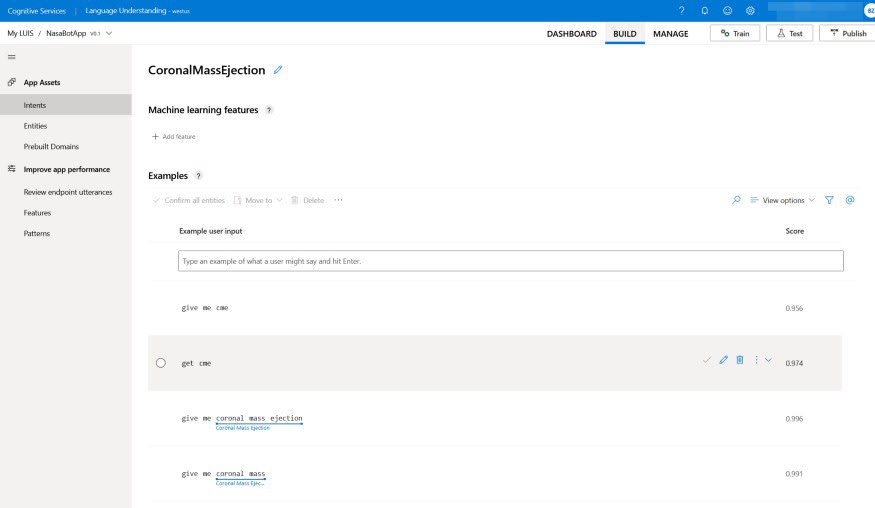

What is LUIS?

LUIS is a language understanding service that allows you to build and train your bot to understand natural language. I have included LUIS in my bot so that people can request information from the NASA API using different natural-language phrasings.

For the current project, I trained LUIS manually using the LUIS Portal. Below you can see that I added example sentences to train LUIS to recognize user input and score it. The LUIS Portal also lets you test your data set and deploy it to different stages.

Of course, a manually trained model is suitable only for small and mid-sized bot projects. For a production application, consider using pre-trained models, which you can readily find in JSON format.

You can also improve language understanding by adding QnA (Question and Answers) and language generation templates.

NASA Bot Architecture Overview

The NASA Bot architecture is an N-tier structure based on the MVC pattern. The Bot includes API Controllers, Models, and Services.

The NASA bot consists of the following main components.

- The NASA bot class contains the conversation flow event handlers, such as MembersAdded and MessageActivity. It also serves as the entry point that starts a conversation flow.

- The external API aggregation service combines the language recognition logic with fetching data from the NASA API.

- The NasaApi library subproject is a wrapper around NASA API calls. It contains the logic to obtain and parse data from the API and to build a proper response for the business layer.
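As an illustration of the "parse and build a proper response" step, here is a minimal Python sketch that reduces one solar flare record to the few fields the bot shows and turns it into a short message. This is not the project's C# code: the function names are hypothetical, the sample record is made up, and the field names (`beginTime`, `endTime`, `classType`, `sourceLocation`, `link`) reflect my understanding of the DONKI solar flare response, so verify them against the API documentation.

```python
from typing import Any, Dict


def simplify_flare(record: Dict[str, Any]) -> Dict[str, str]:
    """Keep only the essentials: time frame, coordinates, class, report link."""
    return {
        "begin": record.get("beginTime") or "unknown",
        "end": record.get("endTime") or "unknown",
        "location": record.get("sourceLocation") or "unknown",
        "class": record.get("classType") or "unknown",
        "link": record.get("link") or "",
    }


def to_message(event: Dict[str, str]) -> str:
    """Format a simplified event as a short chat message."""
    lines = [
        f"Solar flare {event['class']} at {event['location']}",
        f"From {event['begin']} to {event['end']}",
    ]
    if event["link"]:  # the detailed report stays in the classic Web UI
        lines.append(f"Details: {event['link']}")
    return "\n".join(lines)


# Made-up record for illustration only; not real NASA data.
sample_record = {
    "flrID": "2020-01-01T00:00:00-FLR-001",
    "beginTime": "2020-01-01T00:00Z",
    "peakTime": "2020-01-01T00:10Z",
    "endTime": "2020-01-01T00:20Z",
    "classType": "C1.0",
    "sourceLocation": "N10E20",
    "link": "https://example.org/report",
}

print(to_message(simplify_flare(sample_record)))
```

Keeping the simplification separate from the formatting means the same reduced record can feed a plain-text reply now and a richer card later.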

You can find code examples and the NASA Bot project here.

Below is a list of the other bot components, with a link to the Git repository:

- API (and BotController) is the endpoint of the Bot. When you create your Bot with Visual Studio or VS Code, the generated project looks almost like a Web API project, which is based on the MVC pattern.

- LanguageRecognitionService connects the Bot to the LUIS API and triggers the language recognition process.

- ObservationTypeModel contains all fields related to the LUIS response, including the logic that calculates the maximum intent score.
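The maximum intent score calculation can be sketched as follows. This is a Python approximation, not the actual ObservationTypeModel code. It assumes the LUIS v3 prediction response shape, where `prediction.intents` maps each intent name to an object with a `score`. The intent names and the 0.5 threshold are hypothetical.

```python
from typing import Any, Dict, Optional, Tuple


def top_intent(prediction: Dict[str, Any],
               min_score: float = 0.5) -> Optional[Tuple[str, float]]:
    """Return (intent name, score) for the highest-scoring intent.

    Returns None when there are no intents or the best score is below
    min_score, so the bot can ask the user to rephrase.
    """
    intents = prediction.get("intents", {})
    if not intents:
        return None
    name, details = max(intents.items(),
                        key=lambda item: item[1].get("score", 0.0))
    score = details.get("score", 0.0)
    return (name, score) if score >= min_score else None


# Illustrative response; intent names are made up for this sketch.
sample_response = {
    "query": "show me recent solar flares",
    "prediction": {
        "topIntent": "SolarFlare",
        "intents": {
            "SolarFlare": {"score": 0.92},
            "MarsWeather": {"score": 0.05},
            "None": {"score": 0.03},
        },
        "entities": {},
    },
}

print(top_intent(sample_response["prediction"]))
```

Applying a threshold on top of the maximum keeps the bot from acting on a weak guess when no intent matches the user's sentence well.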

Conversation workflow

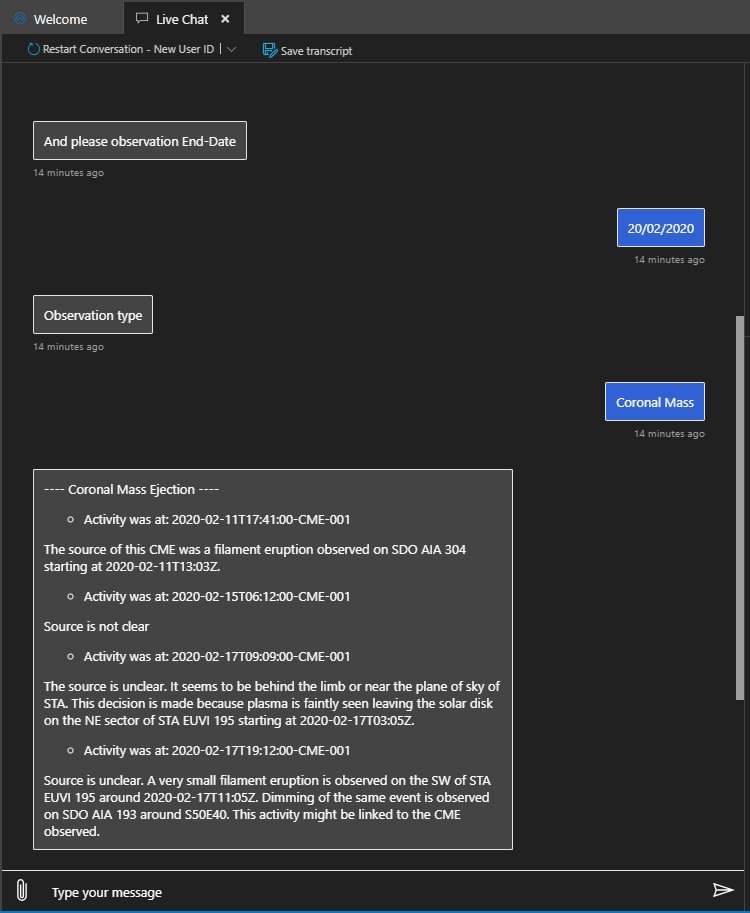

Below are several screenshots that demonstrate an interaction with the Bot, captured in the Bot Framework Emulator.

Conclusion

This article demonstrates the main advantages that bot services can bring compared with classic UIs. In the next article, I will extend the Bot by adding more advanced features.

The link to the git repository where you can clone the project.

What is next

In the next article, I will upgrade the Bot by adding:

- QnA maker and models

- Unit Tests

- Other APIs

- Improved language recognition (including language generation)

- Bot deployment across multiple channels (Telegram, Slack)

Please feel free to suggest your ideas on what else should be added to the Bot or covered in the next article. Looking forward to hearing from you in the comments.
