In this post, we will go through many of the options for building a React app that will get properly crawled by search engines and social media sites. This isn't totally exhaustive but it focuses on options that are serverless so you don't have to manage a fleet of EC2s or Docker containers.

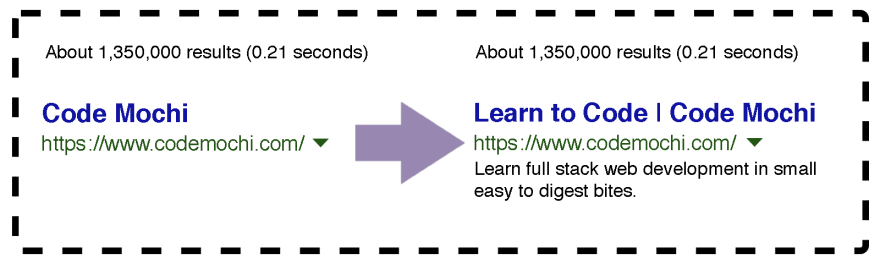

An often overlooked aspect when you are getting started with building full stack web applications in React is SEO because you have so many other components to build to even get the site working that it is easy to forget about it until the end. The tricky thing is that you can't even tell that it isn't working until you submit your site to Google and then come back a week later after it has crawled your site to see that none of your beautiful meta tags are showing up when you do a google search of your site. The left shows what the Google result shows up as, while the right is what you’d expect based on the dynamic tags that you are setting.

The cause for this is rooted in a common design pattern for starting your site with the create-react-app generator, so let's go through it. After creating the boilerplate, you can add page titles and meta tags with React Helmet. Here’s what a React Helmet component might look like for a typical static page:

const seo = {

title: 'About',

description: 'This is an awesome site that you definitely should check out.',

url: 'https://www.mydomain.com/about',

image: 'https://mydomain.com/images/home/logo.png',

}

<Helmet

title={`${seo.title} | Code Mochi`}

meta={[

{

name: 'description',

property: 'og:description',

content: seo.description,

},

{ property: 'og:title', content: `${seo.title} | Code Mochi` },

{ property: 'og:url', content: seo.url },

{ property: 'og:image', content: seo.image },

{ property: 'og:image:type', content: 'image/jpeg' },

{ property: 'twitter:image:src', content: seo.image },

{ property: 'twitter:title', content: `${seo.title} | Code Mochi` },

{ property: 'twitter:description', content: seo.description },

]}

/>

When seo is pulled from static data, there are no issues- Google will scrape all of it. We run into troubles when seo relies on fetching data from a server. This is the case if instead of a static about page, we wanted to make a blog page where we pull that data from an api using GraphQL or REST. In that case, seo would be empty initially and be filled in later after we receive data from the server. Here's what a blog page might look like with React Apollo:

const BlogPage = ({ match }) => {

<Query variables={{name: match.params.title}} query={BLOG_QUERY}>

{({ data, loading }) => {

const blog = _.get(data, 'blog');

if (loading || !blog) return <Loading />;

const { seo } = blog;

return (

<div>

<Helmet

title={`${seo.title} | Code Mochi`}

meta={[

{ name: 'description', property: 'og:description', content: seo.description },

{ property: 'og:title', content: `${seo.title} | Code Mochi` },

{ property: 'og:url', content: seo.url },

{ property: 'og:image', content: seo.image },

{ property: 'og:image:type', content: 'image/jpeg' },

{ property: 'twitter:image:src', content: seo.image },

{ property: 'twitter:title', content: `${seo.title} | Code Mochi` },

{ property: 'twitter:description', content: seo.description },

]} />

<div>

//Code for the Blog post.

</div>

</div>

)

}

</Query>

}

export default withRouter(BlogPage);

Initially, when the data is loading, the <BlogPage> will simply return the <Loading /> component. It's only when the loading is done that we move to the main portion of the code block, so the <Helmet> component will not be invoked until that point. Ideally, we’d like the Google crawler to wait on the page long enough until the data is loaded, but unfortunately, it is not something that we have control over.

There are a couple of approaches you can take to solve this problem and they all have their tradeoffs. We'll first go over some concepts:

Server-Side Rendering

This is where you have a server that runs your frontend website. When it receives a request for a page, the server will take the first pass at rendering the page before it sends you the HTML, js, and css. Any data that needs to be fetched from an api will be fetched by the frontend server itself and the page will be rendered before anything is delivered to the user's browser. This will ensure that a blog page has all of its title and meta tags rendered before it reaches the user. Since the Google web crawler acts like a user, the page that it receives will be all pre-populated with the correct title and meta tags as well so they will be ingested properly.

Static Site Rendering

This is where every page on your website will be pre-rendered at the time of building your site. This is distinguished from Server Side Rendering because instead of a server actively rendering a page when requested, all the possible site pages are pre-rendered and available without any further building required. This approach works especially well with static hosting solutions such as AWS S3 because an actively running server is not needed.

These are the two main classes of rendering, but there are several solutions for these two approaches:

Next.js

Next.js is a server-side rendering framework for React. It will render pages on the fly as they are requested from a user. There are two modes that it can operate in:

Option 1. Actively running a server.

This will run Next.js on an EC2 instance or possibly as a Docker Container.

Pros:

- Standard way of running Next.js.

Cons:

- Have to pay for an actively running server even if it isn't being used. Looking at \$15/month minimum.

- Need to manage scaling up and down server instances as the demand for your site goes up and down. This is where Docker, Kubernetes and a host of managed services come into play and things get complicated really fast at that point. The pro is that at that point your site is probably successful enough that you could pay a DevOps person to take care of this aspect if it is something you don't want to deal with.

- Not currently AWS Amplify compatible.

Option 2. As a lambda function.

Next.js recently introduced a new mode called serverless where you can build each individual page as a lambda function that gets hosted either through AWS or using Zeit's now service.

Pros:

- Serverless- you just pay for what you use. Likely will be in the free tier until you have hundreds or thousands of users (depending on usage patterns obviously).

- Scales up and down effortlessly.

Cons:

- Need to watch out for the payload size, can't have too many npm packages loaded.

- Can have a slow initial load time if the site hasn't been visited in a while. These so-called cold starts are based on the complexity of your page and the dependencies you have.

- Each page is an entire copy of your website, so it gets download each time someone navigates around (but gets cached in user's browser after).

- Not currently AWS Amplify compatible.

Gatsby

Gatsby is a static site rendered framework for React. It renders pages during the build time so all possible pages have already been rendered as separate html files and are ready to be downloaded before they are even uploaded to the server. This site is actually rendered using this method!

Pros:

- Blazingly fast: nothing to render so the page load times are super fast. Google PageSpeed Insights is going to love your site because it is so quick.

- Great for SEO- all title and metatags are generated during build time so Google has no trouble reading it.

- AWS Amplify compatible.

Cons:

- Can be bad for dynamic sites where not all possible page combinations are known at build time. An example might be an auction website or something where users are generating content.

- No good way to create all possible pages during build time because the data from an api can change in the future.

- Needs additional finagling to handle both static content and dynamic content because you'll have some api calls happening during build time and others during run time.

Gatsby can render dynamics routes, but since the pages are being generated by the client instead of on a server, they will not be populated with the correct metatags and title. Static content will still, however, load. If you had a site that was a Marketplace, as an example, Google would be able to fetch the tags for the static portions of the site, such as the home page or posts page, but it wouldn't be able to get the tags for the individual post page posts/:id, because its title and tags need data from the server to populate.

Prerender.cloud

This is a service that sits in front of your application and pre-renders the content before it delivers it back to the client or the Google web crawler. I’ve used this service before and it works great- PocketScholar, a science app I previously built uses this technique.

Pros:

- It will pre-render any webpage on demand so it is like Next.js but it will work with an existing create-react-app or statically generated site such as Gatsby or create-react-app's

build staticoption. - You deploy it yourself using a cloud formation stack on your AWS account.

- AWS Amplify compatible.

- You are serving your site from a static s3 bucket, so it will infinitely scale as you get more users and you only pay for what you use.

Cons:

- It's a service that you pay for based on the number of requests that your web application gets per month. It’s initially free but then is \$9/month for 600-20,000 requests.

- It doesn’t eliminate the cold starts that are present with AWS lambda- it can take a few seconds to load a website if the lambda hasn’t been used in the past 25 minutes or so.

Conclusion

There are a few ways to handle React and SEO and each has its benefits and drawbacks. Here is a table with the highlights:

| Benefits | Create-React-App | Prerender.cloud | Gatsby | Gatsby with dynamic routes | Next.js | Next.js serverless mode |

|---|---|---|---|---|---|---|

| Pay for what you use | X | X | X | X | X | |

| Seamlessly scale | X | X | X | X | X | |

| Fast initial load times | X | X | X | X | ||

| Blazingly fast initial load times | X | X | X | |||

| Render dyamic and static content | X | X | X | X | X | |

| Create new pages and routes without a rebuild | X | X | X | X | ||

| Webcrawler / Social Media scrapable (static) | X | X | X | X | X | X |

| Webcrawler / Social Media scrapable (dynamic) | X | * | * | X | X | |

| AWS Amplify Compatible | X | X | X | X |

* A Gatsby dynamic route will not set the metadata or title because it needs to fetch data from the server.

Starting with Create React App (CRA), we can see that while it is serverless which makes it easy for scalability and cost, that it fails for SEO purposes for any content that is dynamic. Prerender.cloud is a good option to put in front of a CRA app because it adds the rendering capability for search engines and social media sharing purposes, but it has the disadvantage of cold starts from the lambda function which can make it a little slow if the site hasn't been accessed in the past 25 minutes.

Gatsby is great for static sites and it wins in the speed department. It will allow you to have dynamic routes, but it won't allow you to benefit from SEO on those routes because it will need to fetch data from the server when rendering those routes on the user's browser.

Next.js is great for both dynamic and static routes but you've previously had to manage a running server instance. A glimmer of the best of all worlds lies in the serverless mode for Next.js. Although slower than Gatsby, pages get rendered on the fly so all relevant tags will be populated with their proper values for web crawlers. The only potential downside with this approach is that Next.js is currently not supported by AWS Amplify, so you can't use it with the automated deployment pipeline, authentication, or App Sync GraphQL endpoint.

There is more where that came from!

Click here to give us your email and we'll let you know when we publish new stuff. We respect your email privacy, we will never spam you and you can unsubscribe anytime.

Originally posted at Code Mochi.

Top comments (5)

Hi, great article ! Definetly worth reading.

That's a subject I've been curious about for some time but I never took the time to really get into it. In my peregrinations I've read that there are some ways to generate a sitemap for react apps through some tools like react-router-sitemap and then complete the generated result with the dynamic routes (which we can fetch and build from a database for example). I would be very grateful if you could share your thoughts about that and how you think it compares to the options you present in this article.

I realise that my remark might be a little beside the point since I don't how that would work in a AWS environment. Also it needs some server mechanism to fetch the dynamic content and update the sitemap.

Thank you for any insight you can share.

Thanks for the awesome suggestion! I actually just figured out sitemaps for Gatsby and Create-React-Apps so I'd be happy to create a walkthrough with screenshots and post that up. Out of curiosity, which kind of base boilerplate are you working off of (gatsby, nextjs, create react app)? I want to make sure I at least capture that one so it's helpful. :)

The technological stack I'm thinking about is the one we use at my company which has been satisfying so far. For you to have an idea of our base technological stack and principles I think the easiest way is to share with you one of our sources of inspiration : freecodecamp.org/news/how-to-get-s.... The server side is handled by a web service (ASP.Net Core WebAPI) which serves data stored in a SQL Server database.

We have some apps running with this stack but they are all only used in our customers' intranet. SEO has never been a concern so far. It's definetly going to become a subject at some point however. In our situation, I can surmize that the sitemap would be updated regularly by a planned task in corelation with the data in the database.

I'm not sure if it's relevant to stick with this stack for your walkthrough. I'm not sure how common/standard this stack is. Anyway having a walkthrough on the sitemap usage sor SEO would be amazing !

Thanks for the rundown of your stack Jean-Christophe. That's super interesting that you've coupled Gatsby together with ASP.Net, I haven't seen the blending of C# and javascript like that before. Luckily, from a frontend perspective, it doesn't actually matter what your backend uses as long as you are using Gatsby. I put together a post here that will take you through how to use a sitemap plugin to add generation to your website and then how to submit it to the Google search console. Enjoy and let me know if you have any more questions: codemochi.com/blog/2019-06-19-add-...

Really great guide.