While working with Kubernetes (K8s) and studying for the CKAD exam, I came across a page on Matthew Palmer's website entitled "Practice Exam for Certified Kubernetes Application Developer (CKAD) Certification", which contains five practice questions that I'll go over here. If you see a problem with anything I've done below, including inefficient solutions, please let me know in the comments.

We will focus on not just showing a possible solution to each problem but also verifying our work.

Since we're limited to the kubernetes.io website when taking this test, this site is referenced where appropriate. In particular, the kubectl cheat sheet page is a good place to start, and we can use the site's search to find whatever else we need.

Lastly, with each of these questions, we need to pay particular attention to the namespace requirement.

This article relies on minikube running on Ubuntu along with the Oh My Zsh shell.

The k command is defined as follows:

alias k='kubectl'

Let's take a look at the first question.

Question #1

The first question from [1] is as follows:

"Create a namespace called ggckad-s0 in your cluster.

Run the following pods in this namespace.

1. A pod called pod-a with a single container running the kubegoldenguide/simple-http-server image

2. A pod called pod-b that has one container running the kubegoldenguide/alpine-spin:1.0.0 image, and one container running nginx:1.7.9

Write down the output of kubectl get pods for the ggckad-s0 namespace."

We can write a pod manifest by hand or we can autogenerate it; autogenerating should be the preferred option, as we don't want to waste time on this if we can avoid it. The following commands generate one yaml file per image: one for the kubegoldenguide/simple-http-server container, one for the kubegoldenguide/alpine-spin:1.0.0 container, and one for the nginx:1.7.9 container. Note that each command names the generated pod and container nginx, so the names need to be edited afterwards.

kubectl run nginx --image=kubegoldenguide/simple-http-server --dry-run=client -o yaml > simple-http-server.yaml

kubectl run nginx --image=kubegoldenguide/alpine-spin:1.0.0 --dry-run=client -o yaml > alpine-spin.yaml

kubectl run nginx --image=nginx:1.7.9 --dry-run=client -o yaml > nginx.yaml

We can use these files to create the pod-a and pod-b yaml files, which are required for this question.

The configuration file for pod-a appears as follows:
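A minimal sketch of such a file, written here via a shell heredoc, might look like the following. The pod name and image come from the question; the container name simple-http-server-container is a hypothetical choice, and the namespace is left out because it is supplied on the command line when the file is applied.

```shell
# Write a hypothetical pod-a.yaml into the current directory.
cat > pod-a.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: pod-a
spec:
  containers:
    # The container name is an assumption; any valid DNS label works.
    - name: simple-http-server-container
      image: kubegoldenguide/simple-http-server
EOF
```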

And the configuration file for pod-b appears below:
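A minimal sketch of a two-container pod-b.yaml might look like the following. The container names alpine-spin-container and nginx-container match the ones used in the logs commands later in this question; the containerPort entry is informational and is an assumption about how the file was written.

```shell
# Write a hypothetical pod-b.yaml into the current directory.
cat > pod-b.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: pod-b
spec:
  containers:
    - name: alpine-spin-container
      image: kubegoldenguide/alpine-spin:1.0.0
    - name: nginx-container
      image: nginx:1.7.9
      ports:
        # Nginx listens on port 80 by default (assumed to be declared here).
        - containerPort: 80
EOF
```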

We need to first create the namespace as per the instructions:

k create namespace ggckad-s0

Next, we can apply this configuration:

kubectl apply -f pod-[a or b].yaml --namespace ggckad-s0

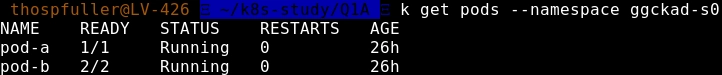

The final instruction for this question indicates that we need to "[w]rite down the output of kubectl get pods for the ggckad-s0 namespace." -- this is accomplished as follows:

k get pods --namespace ggckad-s0

which yields the following:

Verifying Our Solution

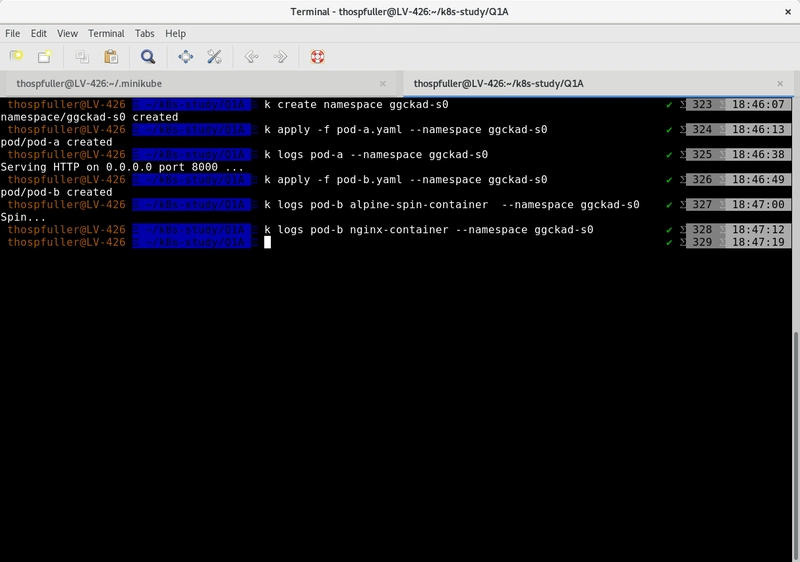

Below we have the entire script that we use in this example. We check the logs for all containers in order to see that they're running correctly.

k create namespace ggckad-s0

k apply -f pod-a.yaml --namespace ggckad-s0

k logs pod-a --namespace ggckad-s0

k apply -f pod-b.yaml --namespace ggckad-s0

k logs pod-b alpine-spin-container --namespace ggckad-s0

k logs pod-b nginx-container --namespace ggckad-s0

Running this script should yield the following output:

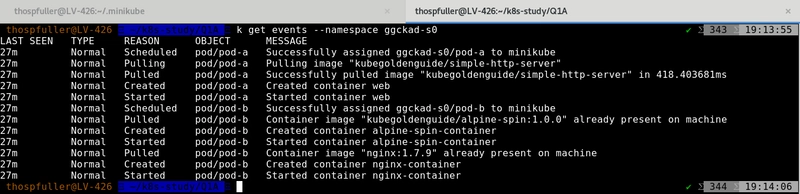

We can use the get events command to see the events that were collected.

k get events --namespace ggckad-s0

Running this command should yield the following output:

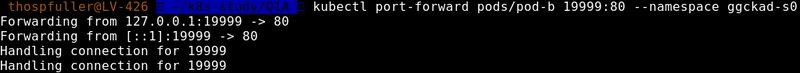

Notice that the nginx-container has started; however, there are no messages in the log that indicate that it's actually running. We'll check this by mapping port 19999 on the local machine to port 80 in the pod-b pod, which should reach the nginx-container, where the Nginx web server is listening on port 80.

kubectl port-forward pods/pod-b 19999:80 --namespace ggckad-s0

When we execute the line above, the output should look like what we have below:

Finally, we can now test that Nginx is running by browsing localhost:19999.

And that's it for this question.

Question #2

The second question from [1] is as follows:

"All operations in this question should be performed in the ggckad-s2 namespace.

Create a ConfigMap called app-config that contains the following two entries:

- 'connection_string' set to 'localhost:4096'

- 'external_url' set to 'google.com'

Run a pod called question-two-pod with a single container running the kubegoldenguide/alpine-spin:1.0.0 image, and expose these configuration settings as environment variables inside the container."

We need to first create the namespace as per the instructions:

k create namespace ggckad-s2

We need a ConfigMap, which can be created via the CLI as follows:

kubectl create configmap app-config --from-literal connection_string=localhost:4096 --from-literal external_url=google.com --dry-run=client -o yaml --namespace ggckad-s2 > app-config.yaml

and which is defined in the following gist:
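The generated manifest should look roughly like the sketch below, which writes an equivalent app-config.yaml by hand. The two keys and values come from the question; fields that kubectl may add to its output, such as creationTimestamp, are omitted here.

```shell
# Write a hand-written equivalent of the generated ConfigMap manifest.
cat > app-config.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
  namespace: ggckad-s2
data:
  connection_string: localhost:4096
  external_url: google.com
EOF
```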

Next, we can apply this configuration making sure to include the specified namespace:

k apply -f ./app-config.yaml --namespace ggckad-s2

And the configuration file for the question-two-pod appears below:
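A minimal sketch of such a pod file might look like the following. The container name web matches the one used in the exec command further down; using envFrom to import every key of the ConfigMap is one possible approach and an assumption here, and listing each key under env with a configMapKeyRef would work as well.

```shell
# Write a hypothetical question-two-pod.yaml into the current directory.
cat > question-two-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: question-two-pod
spec:
  containers:
    - name: web
      image: kubegoldenguide/alpine-spin:1.0.0
      # Expose every key in app-config as an environment variable.
      envFrom:
        - configMapRef:
            name: app-config
EOF
```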

We need to apply the pod configuration file, and we do so via the following command:

k apply -f ./question-two-pod.yaml --namespace ggckad-s2

Finally, we need to verify our work.

Verifying Our Solution

Verifying this solution is simple. In step one we'll get CLI access to the container, and in step two we'll check the environment; if the environment variables have been set, then we're finished.

Step one appears as follows:

k exec -it question-two-pod -c web --namespace ggckad-s2 -- /bin/sh

and step two can be accomplished using the env command and visually inspecting the results.

And that's it for this question.

Question #3

The third question from [1] is as follows:

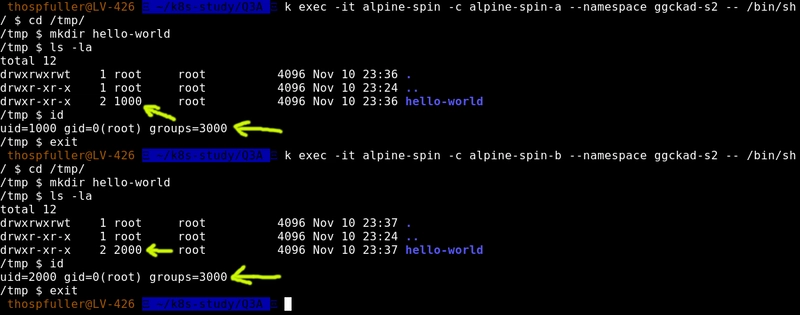

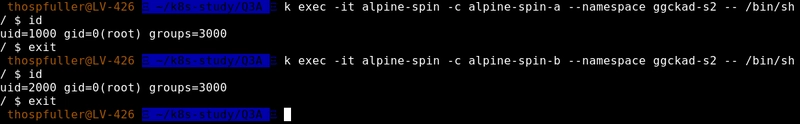

"All operations in this question should be performed in the ggckad-s2 namespace. Create a pod that has two containers. Both containers should run the kubegoldenguide/alpine-spin:1.0.0 image. The first container should run as user ID 1000, and the second container with user ID 2000. Both containers should use file system group ID 3000."

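This question uses the same ggckad-s2 namespace as question two. If the namespace already exists, the following command reports an AlreadyExists error and can be skipped; otherwise we need to first create the namespace as per the instructions: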

k create namespace ggckad-s2

The configuration file for the question-three-pod appears below. Note the namespace declaration, which allows us to apply the configuration file without passing the namespace on the command line. More importantly, pay particular attention to the fsGroup and runAsUser key/value pairs:
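A minimal sketch of such a file might look like the following. The container names alpine-spin-a and alpine-spin-b match the ones used in the exec command in the verification step. fsGroup can only be set in the pod-level securityContext, while runAsUser is set per container.

```shell
# Write a hypothetical question-three-pod.yaml into the current directory.
cat > question-three-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: question-three-pod
  namespace: ggckad-s2
spec:
  # Pod-level setting: applies to both containers.
  securityContext:
    fsGroup: 3000
  containers:
    - name: alpine-spin-a
      image: kubegoldenguide/alpine-spin:1.0.0
      securityContext:
        runAsUser: 1000
    - name: alpine-spin-b
      image: kubegoldenguide/alpine-spin:1.0.0
      securityContext:
        runAsUser: 2000
EOF
```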

We can apply this configuration as follows:

k apply -f ./question-three-pod.yaml

Finally, we need to verify our work.

Verifying Our Solution

We need to get CLI access to each container and then verify that the settings are correct. The following command can be used to do exactly that:

k exec -it question-three-pod -c alpine-spin-[a or b] --namespace ggckad-s2 -- /bin/sh

Once we have access, we can check that the fsGroup and runAsUser key/value pairs have been set correctly by using the id command: the uid should be 1000 in the first container and 2000 in the second, and 3000 should appear among the groups in both.

And that's it for this question.

Question #4

The fourth question from [1] is as follows:

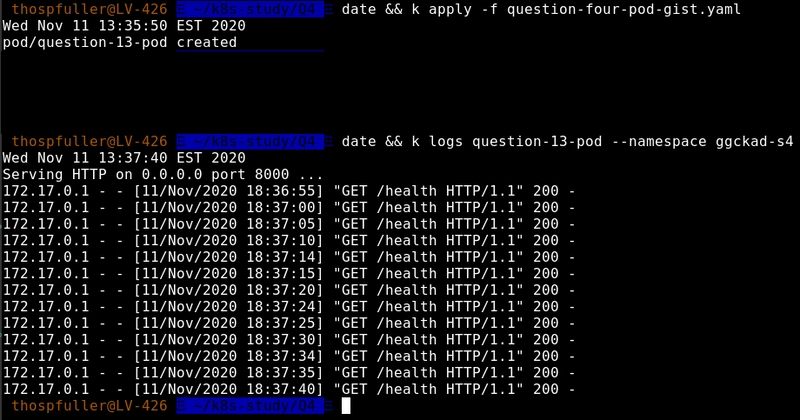

"All operations in this question should be performed in the ggckad-s4 namespace. This question will require you to create a pod that runs the image kubegoldenguide/question-thirteen. This image is in the main Docker repository at hub.docker.com.

This image is a web server that has a health endpoint served at '/health'. The web server listens on port 8000. (It runs Python’s SimpleHTTPServer.) It returns a 200 status code response when the application is healthy. The application typically takes sixty seconds to start.

Create a pod called question-13-pod to run this application, making sure to define liveness and readiness probes that use this health endpoint."

Unlike previous questions, this one specifically tells us that the kubegoldenguide/question-thirteen image is in the main Docker repository at hub.docker.com. According to the Image Names section of the Kubernetes Images documentation, "[i]f you don't specify a registry hostname, Kubernetes assumes that you mean the Docker public registry", so no further action is necessary with respect to this detail.

This question specifically requires liveness and readiness probes and we include the definitions of each below for convenience.

Readiness -- "the application is ready to receive traffic" [4]. As per [6]: "[i]f the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of all Services that match the Pod."

Liveness -- "the application is no longer serving requests and K8s will restart the offending pod" [4].

According to the section entitled Define a liveness HTTP request, "[a]ny code greater than or equal to 200 and less than 400 indicates success." -- so no action is required in our solution as the server running in the question-thirteen container will return an HTTP status response code of 200 when the application is healthy.

In our proposed solution, we'll add a buffer and start the livenessProbe at 75 seconds, which is 15 seconds after the typical startup time given in the question. The 15-second buffer is arbitrary; the assumption is that, realistically, the server should have started by then. A similar example appears in [5]; see also [7] and [8].

Note that as of Kubernetes 1.16 it is possible to define a startupProbe such that the livenessProbe will not be started until an initial OK is returned.

Our pod configuration can be seen here.
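A minimal sketch of such a configuration might look like the following. The pod name, container name, port, health endpoint, and the 75-second liveness delay come from the text; declaring the namespace in the file and the two periodSeconds values are assumptions made for illustration.

```shell
# Write a hypothetical question-13-pod.yaml into the current directory.
cat > question-13-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: question-13-pod
  namespace: ggckad-s4   # assumption: namespace declared in the file
spec:
  containers:
    - name: question-thirteen
      image: kubegoldenguide/question-thirteen
      ports:
        - containerPort: 8000
      # Readiness is checked from the start, so the pod only
      # receives traffic once /health returns a success code.
      readinessProbe:
        httpGet:
          path: /health
          port: 8000
        periodSeconds: 5    # assumed value
      # Liveness starts after the 60 s startup time plus a 15 s buffer.
      livenessProbe:
        httpGet:
          path: /health
          port: 8000
        initialDelaySeconds: 75
        periodSeconds: 10   # assumed value
EOF
```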

Finally, we need to verify our work.

Verifying Our Solution

In this example, we should expect to see log messages for the readinessProbe up until ~ 75 seconds after the pod was started, at which point we should see log messages appear for both the readinessProbe as well as the livenessProbe.

kubectl logs question-13-pod -c question-thirteen --v 4 --namespace ggckad-s4

Unfortunately, the output from this command does not tell us which probe is calling the endpoint.

As an exercise for the reader, can we determine exactly which probe is calling the health endpoint? The Kubernetes documentation seems to indicate that this is possible; however, at this time I do not have an example which demonstrates this.

And that's it for this question.

Question #5

The fifth question from [1] is as follows:

"All operations in this question should be performed in the ggckad-s5 namespace. Create a file called question-5.yaml that declares a deployment in the ggckad-s5 namespace, with six replicas running the nginx:1.7.9 image.

Each pod should have the label app=revproxy. The deployment should have the label client=user. Configure the deployment so that when the deployment is updated, the existing pods are killed off before new pods are created to replace them."

We need to check the labels for both the deployment and pods and then we need to, as per [1] "[c]onfigure the deployment so that when the deployment is updated, the existing pods are killed off before new pods are created to replace them".

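We have two deployment strategies available: RollingUpdate (the default) and Recreate [14]. RollingUpdate replaces pods incrementally, so old and new pods run side by side during an update, whereas Recreate kills all existing pods before new ones are created. Given these definitions, we do not want to use the default in this case and instead need to set .spec.strategy.type to Recreate.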

Our deployment configuration can be seen here:
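A minimal sketch of such a deployment might look like the following. The deployment name question-5 matches the one used in the rollout commands later in this section; the container name nginx is a hypothetical choice. The namespace is declared in the file, as the question requires, and it must already exist when the file is applied.

```shell
# Write a hypothetical question-5.yaml into the current directory.
cat > question-5.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: question-5
  namespace: ggckad-s5
  labels:
    client: user          # label on the deployment itself
spec:
  replicas: 6
  # Kill all existing pods before creating their replacements.
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: revproxy
  template:
    metadata:
      labels:
        app: revproxy     # label on each pod
    spec:
      containers:
        - name: nginx     # hypothetical container name
          image: nginx:1.7.9
EOF
```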

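We can apply the file the same way as we did in question three; no --namespace flag is needed because the question requires the file itself to declare the ggckad-s5 namespace: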

k apply -f ./question-5.yaml

Finally, we need to verify our work.

Verifying Our Solution

We need to check the labels for both the deployment and pods and we also need to ensure that the requirement is met that when the deployment is updated existing pods are killed off before new pods are created to replace them.

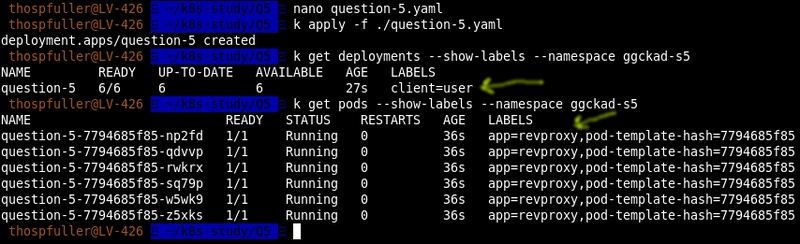

We can use the following command to view the deployment labels:

k get deployments --show-labels --namespace ggckad-s5

And we can use the following command to view the various pod labels:

k get pods --show-labels --namespace ggckad-s5

Below we can see the output of applying the question-5.yaml file and then executing these two commands; the yellow arrows show that the labels have been set as per the specification:

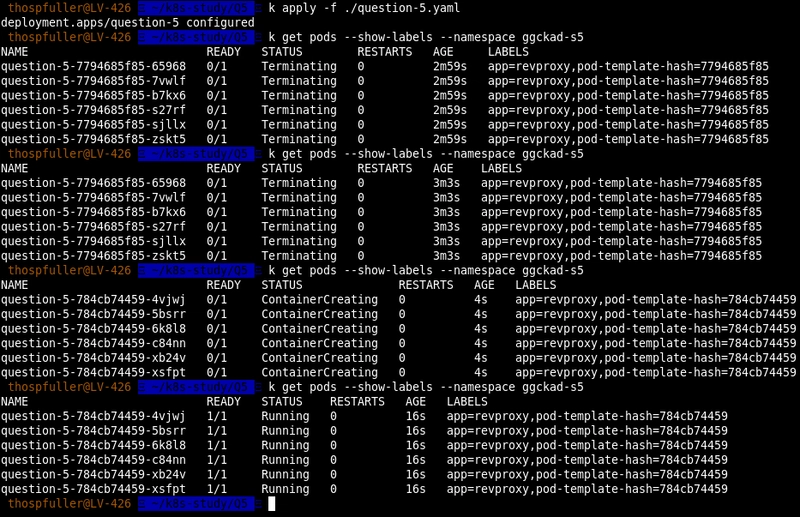

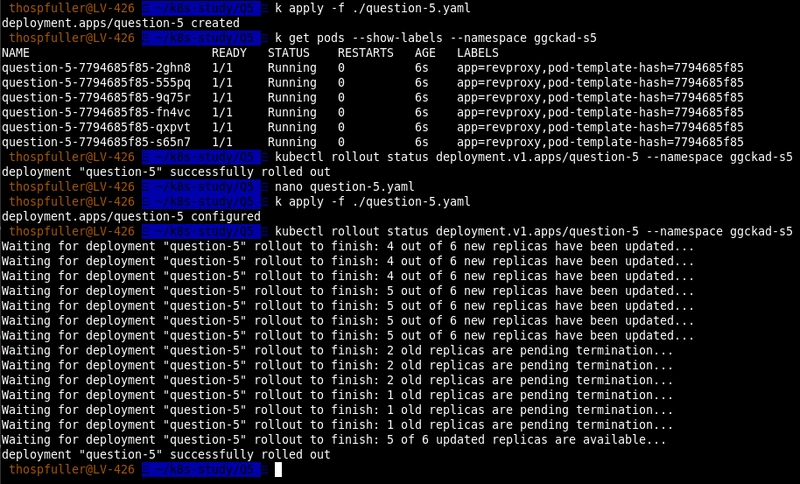

Note that our deployment relies on the nginx:1.7.9 image. This image is old, so if we update it to nginx:latest and apply the question-5.yaml file again, we see that the old pods are terminated before the new pods are created. The following image demonstrates this behavior:

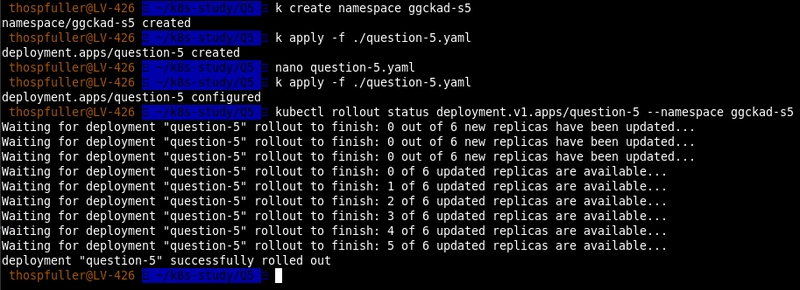

Next, we repeat the same exercise only here we check the rollout status using the following command [11]:

kubectl rollout status deployment.v1.apps/question-5 --namespace ggckad-s5

and we can see from a different angle that the old replicas are terminated before the new ones are started.

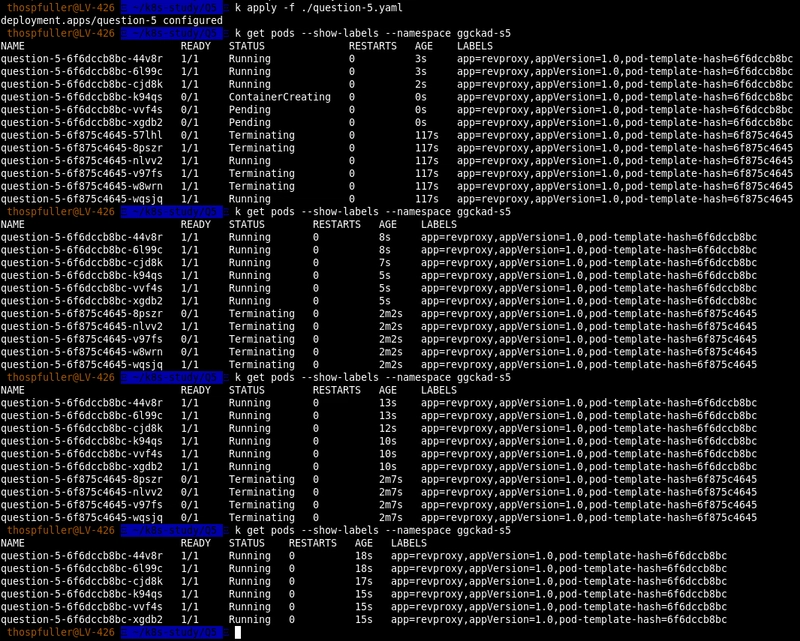

Lastly we can reproduce the same behavior by executing the following command:

kubectl rollout restart deployment question-5 --namespace ggckad-s5

and in this case we don't even need to update the nginx version in the question-5.yaml file.

Let's take a look at an update when the deployment strategy type is set to RollingUpdate -- this is the default and it is also the incorrect choice given the specification.

An Incorrect Solution

The same example but with a RollingUpdate deployment strategy would be incorrect. We include the output of this example below for comparison purposes.
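For reference, a sketch of the same deployment with the default strategy spelled out explicitly might look like the following. The file name question-5-rolling.yaml and the container name nginx are hypothetical; the maxSurge and maxUnavailable values shown are the Kubernetes defaults, so omitting the strategy block entirely behaves the same way.

```shell
# Write a hypothetical RollingUpdate variant for comparison only.
cat > question-5-rolling.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: question-5
  namespace: ggckad-s5
  labels:
    client: user
spec:
  replicas: 6
  # Default strategy: old and new pods overlap during an update,
  # which does NOT satisfy the requirement in this question.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  selector:
    matchLabels:
      app: revproxy
  template:
    metadata:
      labels:
        app: revproxy
    spec:
      containers:
        - name: nginx     # hypothetical container name
          image: nginx:1.7.9
EOF
```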

Next, we again check the rollout status using the same command as before [11]:

kubectl rollout status deployment.v1.apps/question-5 --namespace ggckad-s5

and this time we can see that the old replicas are terminated gradually while the new ones are starting, so old and new pods run at the same time, which does not meet the requirement.

And that's it for this question, and for this article for that matter -- on to the conclusion!

Conclusion

This article took several hours to write and was done in part to investigate how these problems could be solved, as my own introduction to studying for the CKAD exam.

If you're beginning to prepare for this certification, there are several good articles available that provide guidance about the test structure and how to study for the exam; I include several links below.

- Which Kubernetes certification is right for you?

- Certified Kubernetes Application Developer (CKAD) exam preparation tips

- How to pass CKAD exam in first attempt ? Tips & Tricks in Kubernetes

- CKAD Exam Preparation Study Guide (Certified Kubernetes Application Developer)

- CKAD Exam Guide

- Be fast with Kubectl 1.19 CKAD/CKA

- Practice Enough With These 150 Questions for the CKAD Exam (as noted in the comments, these are apparently not questions that would appear on the exam; however, this is still a good list)

If you have other resources or references that you've found helpful in preparing to sit for this exam, please include them in the comments.

Quiz

Refer to Question 1: Why don't we need to include the specific container in the port-forward command, copied below for convenience, when the alpine-spin-container and the nginx-container both rely on containerPort 80?

kubectl port-forward pods/pod-b 19999:80 --namespace ggckad-s0

Note: port-forward only allows us to specify the pod and not the pod + container.

Refer to Question 1: What would happen if we tried to start pod-b but instead of the alpine-spin-container we had two nginx-containers?

What is the difference between a deployment strategy of "Recreate" juxtaposed with "RollingUpdate"?

Hat Tip

Rishi Malik, Ed McDonald, Aaron Friel, Scott Lowe, and Andy Suderman

References

1. Practice Exam for Certified Kubernetes Application Developer (CKAD) Certification

2. kelseyhightower / kubernetes-the-hard-way

3. Connecting Applications with Services

4. Configure Liveness, Readiness and Startup Probes

5. Kubernetes Liveness and Readiness Probes: How to Avoid Shooting Yourself in the Foot

6. Pod Lifecycle

7. Kubernetes best practices: Setting up health checks with readiness and liveness probes

8. Protect slow starting containers with startup probes

9. Define a liveness HTTP request

10. Configure a Pod to Use a ConfigMap

11. Rollover (aka multiple updates in-flight)

12. Kubernetes deployment strategies

13. Proportional scaling

14. Deployments : Strategy

15. Kubernetes The Hard Way

16. How To Restart Kubernetes Pods
