AWS AppSync and AWS Amplify make it easy to build web applications (or iOS/Android applications) without having to manage the underlying infrastructure. Developers can focus on building their front-end application while AppSync and Amplify handle the heavy lifting on the backend.

What are pipeline resolvers?

AWS AppSync executes resolvers on a GraphQL field. In some cases, applications require executing multiple operations to resolve a single GraphQL field. With pipeline resolvers, developers can now compose operations (called Functions or Pipeline Functions) and execute them in sequence. Pipeline resolvers are useful for applications that, for instance, require performing an authorization check before fetching data for a field.
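To make the execution order concrete, here is a minimal JavaScript sketch of the idea. It is a simplified model and not AppSync's actual implementation; runPipeline, checkAuth, and fetchData are hypothetical names used only for illustration.

```javascript
// Simplified model of a pipeline resolver: a "before" step, a list of
// functions run in sequence, and an "after" step that returns the last result.
function runPipeline(args, functions) {
  // "before" step: create the context shared by all functions
  const ctx = { args, stash: {}, prev: { result: {} } };

  // Run each function in order; each one sees the previous function's result
  for (const fn of functions) {
    ctx.prev = { result: fn(ctx) };
  }

  // "after" step: return the result of the last function
  return ctx.prev.result;
}

// Hypothetical functions: an authorization check followed by a data fetch
const checkAuth = (ctx) => {
  if (ctx.args.role !== 'admin') throw new Error('Unauthorized');
  return { authorized: true };
};
const fetchData = (ctx) => ({
  authorized: ctx.prev.result.authorized,
  data: 'secret report',
});

console.log(runPipeline({ role: 'admin' }, [checkAuth, fetchData]));
// { authorized: true, data: 'secret report' }
```

If checkAuth throws, fetchData never runs, which is the behavior you want from an authorization step placed first in the pipeline.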

What are we building?

In this tutorial, you'll learn how to add a pipeline resolver in an AWS Amplify application. You'll build a new AWS Amplify backend with:

- A User model.

- A signupUser mutation that uses a pipeline resolver.

- A Lambda function that takes the password field from the input data and replaces it with a hashed password. It will be part of the pipeline resolver for the signupUser mutation.

Prerequisites

To complete this tutorial, you will need:

- Node.js (>=10.x) and npm (>=6.x) installed. Download from here.

- An AWS account. If you don't have one, you can create one here.

- Amplify CLI installed and configured.

Step 1 - Create a new React App

$ npx create-react-app amplify-pipeline

$ cd amplify-pipeline

Step 2 - Initialize a new backend

$ amplify init

You'll be prompted for some information about the app.

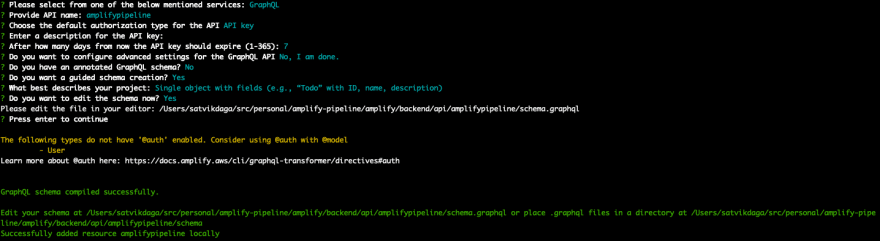

Step 3 - Add GraphQL API

- Run the following command.

$ amplify add api

- Select the following options:

- Select GraphQL

- When asked if you have a schema, say No

- Select one of the default samples; you can change this later

- Choose to edit the schema and it will open the new schema.graphql in your editor

Update schema.graphql with the following code:

type User @model {

id: ID!

name: String!

email: AWSEmail!

password: String

}

Save the file and press Enter in your terminal window. If no error messages are thrown, the transformation was successful and the schema is valid.

Step 4 - Add a Lambda function to hash password

Create a Lambda function that takes the password field from the input and returns the hashed password in its response.

- Run the following command.

$ amplify add function

- Complete the relevant prompts.

- Update the source code of the amplifypipelineHashPassword Lambda function (i.e. index.js).

const bcrypt = require('bcryptjs');

exports.handler = async (event) => {

// get password field from input

const { password } = event.arguments.input;

// use bcrypt to hash password

const hash = await bcrypt.hash(password, 10);

// return the hashed password as response

return {

hash,

};

};

- Add the bcryptjs library to the Lambda function. Run these commands from the root directory of your React app.

$ cd amplify/backend/function/amplifypipelineHashPassword/src

$ npm install bcryptjs

- Now you can deploy the API and lambda function to AWS.

$ amplify push
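Before relying on the deployed function, it can help to call a handler of the same shape locally. The sketch below is only an illustration: it assumes an event shaped like the payload this tutorial's pipeline function sends to the Lambda, and it swaps bcryptjs for Node's built-in crypto.scryptSync so that it runs without installing anything. makeHandler, standInHash, and sampleEvent are hypothetical names.

```javascript
const crypto = require('crypto');

// ASSUMPTION: scrypt stands in for bcrypt.hash here, for illustration only.
async function standInHash(password) {
  const salt = crypto.randomBytes(16);
  const derived = crypto.scryptSync(password, salt, 32);
  return `${salt.toString('hex')}:${derived.toString('hex')}`;
}

// Same shape as the tutorial's handler, with the hash function injected
function makeHandler(hashFn) {
  return async (event) => {
    // get password field from input
    const { password } = event.arguments.input;
    // hash it and return the hash as the response
    const hash = await hashFn(password);
    return { hash };
  };
}

// Sample event with the fields the handler reads
const sampleEvent = {
  typeName: 'Mutation',
  fieldName: 'signupUser',
  arguments: {
    input: { name: 'Test User', email: 'test@example.com', password: 'test123' },
  },
};

const handler = makeHandler(standInHash);
handler(sampleEvent).then((result) => {
  console.log(Object.keys(result)); // [ 'hash' ]
});
```

Injecting the hash function keeps the handler logic testable; in the deployed function you would pass a wrapper around bcrypt.hash(password, 10) instead.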

Step 5 - Add user signup mutation

You will add a custom mutation called signupUser that creates a new user.

A custom mutation like this needs a resolver that targets the User table. Instead of a regular custom resolver, you'll add a pipeline resolver and a pipeline function that targets the UserTable data source.

- Add signupUser mutation to schema.graphql.

type Mutation {

signupUser(input: SignupUserInput!): User

}

input SignupUserInput {

id: ID

name: String!

email: AWSEmail!

password: String

}

- Add a pipeline resolver and pipeline function resource to a stack (like CustomResources.json) in the stacks/ directory. The DataSourceName is auto-generated. In most cases, it’ll look like {MODEL_NAME}Table. To confirm the data source name, open the AppSync Console (amplify console api) and click on the Data Sources tab.

// stacks/CustomResources.json

{

// ... The rest of the template

"Resources": {

"MutationCreateUserFunction": {

"Type": "AWS::AppSync::FunctionConfiguration",

"Properties": {

"ApiId": {

"Ref": "AppSyncApiId"

},

"Name": "MutationCreateUserFunction",

"DataSourceName": "UserTable",

"FunctionVersion": "2018-05-29",

"RequestMappingTemplateS3Location": {

"Fn::Sub": [

"s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/pipelineFunctions/${ResolverFileName}",

{

"S3DeploymentBucket": {

"Ref": "S3DeploymentBucket"

},

"S3DeploymentRootKey": {

"Ref": "S3DeploymentRootKey"

},

"ResolverFileName": {

"Fn::Join": [".", ["MutationCreateUserFunction", "req", "vtl"]]

}

}

]

},

"ResponseMappingTemplateS3Location": {

"Fn::Sub": [

"s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/pipelineFunctions/${ResolverFileName}",

{

"S3DeploymentBucket": {

"Ref": "S3DeploymentBucket"

},

"S3DeploymentRootKey": {

"Ref": "S3DeploymentRootKey"

},

"ResolverFileName": {

"Fn::Join": [".", ["MutationCreateUserFunction", "res", "vtl"]]

}

}

]

}

}

},

"MutationSignupUserResolver": {

"Type": "AWS::AppSync::Resolver",

"Properties": {

"ApiId": {

"Ref": "AppSyncApiId"

},

"TypeName": "Mutation",

"FieldName": "signupUser",

"Kind": "PIPELINE",

"PipelineConfig": {

"Functions": [

{

"Fn::GetAtt": ["MutationCreateUserFunction", "FunctionId"]

}

]

},

"RequestMappingTemplateS3Location": {

"Fn::Sub": [

"s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/resolvers/${ResolverFileName}",

{

"S3DeploymentBucket": {

"Ref": "S3DeploymentBucket"

},

"S3DeploymentRootKey": {

"Ref": "S3DeploymentRootKey"

},

"ResolverFileName": {

"Fn::Join": [".", ["Mutation", "signupUser", "req", "vtl"]]

}

}

]

},

"ResponseMappingTemplateS3Location": {

"Fn::Sub": [

"s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/resolvers/${ResolverFileName}",

{

"S3DeploymentBucket": {

"Ref": "S3DeploymentBucket"

},

"S3DeploymentRootKey": {

"Ref": "S3DeploymentRootKey"

},

"ResolverFileName": {

"Fn::Join": [".", ["Mutation", "signupUser", "res", "vtl"]]

}

}

]

}

},

"DependsOn": ["MutationCreateUserFunction"]

}

}

}

- Write the pipeline resolver templates in the resolvers directory. These are the before and after mapping templates, which run at the beginning and the end of the pipeline respectively.

## resolvers/Mutation.signupUser.req.vtl

## [Start] Stash resolver specific context.. **

$util.qr($ctx.stash.put("typeName", "Mutation"))

$util.qr($ctx.stash.put("fieldName", "signupUser"))

{}

## [End] Stash resolver specific context.. **

## resolvers/Mutation.signupUser.res.vtl

$util.toJson($ctx.prev.result)

- Write the pipelineFunction templates for MutationCreateUserFunction resource. Create a pipelineFunctions folder if it doesn't exist in your API resource. From your app root, run the following command.

$ cd amplify/backend/api/amplifypipeline

$ mkdir pipelineFunctions

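In the req template, you access the previous function result to get the hashed password. You then replace the password field in the input with the hashed password, so the new user is stored in the database with the hashed password instead of the plain-text one. Until the Lambda function is added to the pipeline in Step 6, no hash is available and the template falls back to the password from the input.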

Note: In a pipeline function, you can access the results of the previous pipeline function as $ctx.prev.result.

## pipelineFunctions/MutationCreateUserFunction.req.vtl

## [Start] Replace password in input with hash

## Set hash from previous pipeline function result

#set( $hash = $ctx.prev.result.hash )

## Set the password field as hash if present

$util.qr($context.args.input.put("password", $util.defaultIfNull($hash, $context.args.input.password)))

## [End] Replace password in input with hash

## [Start] Set default values. **

$util.qr($context.args.input.put("id", $util.defaultIfNull($ctx.args.input.id, $util.autoId())))

#set( $createdAt = $util.time.nowISO8601() )

## Automatically set the createdAt timestamp. **

$util.qr($context.args.input.put("createdAt", $util.defaultIfNull($ctx.args.input.createdAt, $createdAt)))

## Automatically set the updatedAt timestamp. **

$util.qr($context.args.input.put("updatedAt", $util.defaultIfNull($ctx.args.input.updatedAt, $createdAt)))

## [End] Set default values. **

## [Start] Prepare DynamoDB PutItem Request. **

$util.qr($context.args.input.put("__typename", "User"))

#set( $condition = {

"expression": "attribute_not_exists(#id)",

"expressionNames": {

"#id": "id"

}

} )

#if( $context.args.condition )

#set( $condition.expressionValues = {} )

#set( $conditionFilterExpressions = $util.parseJson($util.transform.toDynamoDBConditionExpression($context.args.condition)) )

$util.qr($condition.put("expression", "($condition.expression) AND $conditionFilterExpressions.expression"))

$util.qr($condition.expressionNames.putAll($conditionFilterExpressions.expressionNames))

$util.qr($condition.expressionValues.putAll($conditionFilterExpressions.expressionValues))

#end

#if( $condition.expressionValues && $condition.expressionValues.size() == 0 )

#set( $condition = {

"expression": $condition.expression,

"expressionNames": $condition.expressionNames

} )

#end

{

"version": "2017-02-28",

"operation": "PutItem",

"key": #if( $modelObjectKey ) $util.toJson($modelObjectKey) #else {

"id": $util.dynamodb.toDynamoDBJson($ctx.args.input.id)

} #end,

"attributeValues": $util.dynamodb.toMapValuesJson($context.args.input),

"condition": $util.toJson($condition)

}

## [End] Prepare DynamoDB PutItem Request. **
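For readers less familiar with VTL, the logic of the request template above can be approximated in JavaScript. This is a rough sketch, not what AppSync executes: buildPutItemInput is a hypothetical name, crypto.randomUUID() stands in for $util.autoId(), and the DynamoDB type conversion done by $util.dynamodb is left out.

```javascript
const crypto = require('crypto');

// Rough JavaScript equivalent of MutationCreateUserFunction.req.vtl
function buildPutItemInput(ctx) {
  const input = { ...ctx.args.input };
  const hash = ctx.prev.result ? ctx.prev.result.hash : undefined;

  // Replace the plain-text password with the hash when one is available
  input.password = hash ?? input.password;

  // Default values, as in the "Set default values" section of the template
  const now = new Date().toISOString();
  input.id = input.id ?? crypto.randomUUID();
  input.createdAt = input.createdAt ?? now;
  input.updatedAt = input.updatedAt ?? now;
  input.__typename = 'User';

  // Simplified PutItem request (no DynamoDB attribute typing)
  return {
    operation: 'PutItem',
    key: { id: input.id },
    attributeValues: input,
    condition: {
      expression: 'attribute_not_exists(#id)',
      expressionNames: { '#id': 'id' },
    },
  };
}

const request = buildPutItemInput({
  args: { input: { name: 'Test User', email: 'test@example.com', password: 'test123' } },
  prev: { result: { hash: 'hashed-value' } },
});
console.log(request.attributeValues.password); // hashed-value
```

The attribute_not_exists(#id) condition is what prevents the PutItem from overwriting an existing user with the same id.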

In the res template, convert to JSON and return the result.

## pipelineFunctions/MutationCreateUserFunction.res.vtl

#if( $ctx.error )

$util.error($ctx.error.message, $ctx.error.type)

#end

$util.toJson($ctx.result)
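The stack in this step repeats the same Fn::Sub block for every mapping template location, and only the folder and the file name parts change. As a sketch of that pattern, the block could be generated with a small helper. templateLocation is a hypothetical function, not part of Amplify, and its output would still need to be copied into the JSON stack file.

```javascript
// Hypothetical helper: builds the Fn::Sub block used for each
// Request/ResponseMappingTemplateS3Location in CustomResources.json.
// The ${...} placeholders are CloudFormation variables, so the strings
// use single quotes to keep them literal.
function templateLocation(folder, fileParts) {
  return {
    'Fn::Sub': [
      's3://${S3DeploymentBucket}/${S3DeploymentRootKey}/' + folder + '/${ResolverFileName}',
      {
        S3DeploymentBucket: { Ref: 'S3DeploymentBucket' },
        S3DeploymentRootKey: { Ref: 'S3DeploymentRootKey' },
        ResolverFileName: { 'Fn::Join': ['.', fileParts] },
      },
    ],
  };
}

// The MutationCreateUserFunction resource from this step, built with the helper
const createUserFunction = {
  Type: 'AWS::AppSync::FunctionConfiguration',
  Properties: {
    ApiId: { Ref: 'AppSyncApiId' },
    Name: 'MutationCreateUserFunction',
    DataSourceName: 'UserTable',
    FunctionVersion: '2018-05-29',
    RequestMappingTemplateS3Location: templateLocation('pipelineFunctions', [
      'MutationCreateUserFunction', 'req', 'vtl',
    ]),
    ResponseMappingTemplateS3Location: templateLocation('pipelineFunctions', [
      'MutationCreateUserFunction', 'res', 'vtl',
    ]),
  },
};

console.log(JSON.stringify(createUserFunction, null, 2));
```

The resolver templates use the same helper with 'resolvers' as the folder and ['Mutation', 'signupUser', 'req', 'vtl'] as the file name parts.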

Step 6 - Add hash password lambda to pipeline

To add a lambda function to an AppSync pipeline resolver, you need:

- A lambda function. You already created the hash password lambda function.

- An AppSync DataSource HashPasswordLambdaDataSource that targets the lambda function.

- An AWS IAM role HashPasswordLambdaDataSourceRole, added to the stack’s Resources block, that allows AppSync to invoke the Lambda function on your behalf.

- A pipeline function InvokeHashPasswordLambdaDataSource resource that invokes the HashPasswordLambdaDataSource.

- An updated MutationSignupUserResolver resource block that adds the Lambda function to the pipeline. Here is the complete Resources block in a stack (like CustomResources.json) in the stacks/ directory.

// stacks/CustomResources.json

{

// ... The rest of the template

"Resources": {

"InvokeHashPasswordLambdaDataSource": {

"Type": "AWS::AppSync::FunctionConfiguration",

"Properties": {

"ApiId": {

"Ref": "AppSyncApiId"

},

"Name": "InvokeHashPasswordLambdaDataSource",

"DataSourceName": "HashPasswordLambdaDataSource",

"FunctionVersion": "2018-05-29",

"RequestMappingTemplateS3Location": {

"Fn::Sub": [

"s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/pipelineFunctions/${ResolverFileName}",

{

"S3DeploymentBucket": {

"Ref": "S3DeploymentBucket"

},

"S3DeploymentRootKey": {

"Ref": "S3DeploymentRootKey"

},

"ResolverFileName": {

"Fn::Join": [".", ["InvokeHashPasswordLambdaDataSource", "req", "vtl"]]

}

}

]

},

"ResponseMappingTemplateS3Location": {

"Fn::Sub": [

"s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/pipelineFunctions/${ResolverFileName}",

{

"S3DeploymentBucket": {

"Ref": "S3DeploymentBucket"

},

"S3DeploymentRootKey": {

"Ref": "S3DeploymentRootKey"

},

"ResolverFileName": {

"Fn::Join": [".", ["InvokeHashPasswordLambdaDataSource", "res", "vtl"]]

}

}

]

}

},

"DependsOn": "HashPasswordLambdaDataSource"

},

"HashPasswordLambdaDataSourceRole": {

"Type": "AWS::IAM::Role",

"Properties": {

"RoleName": {

"Fn::If": [

"HasEnvironmentParameter",

{

"Fn::Join": [

"-",

[

"HashPasswordLambdabf85",

{

"Ref": "AppSyncApiId"

},

{

"Ref": "env"

}

]

]

},

{

"Fn::Join": [

"-",

[

"HashPasswordLambdabf85",

{

"Ref": "AppSyncApiId"

}

]

]

}

]

},

"AssumeRolePolicyDocument": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "appsync.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

},

"Policies": [

{

"PolicyName": "InvokeLambdaFunction",

"PolicyDocument": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": ["lambda:InvokeFunction"],

"Resource": {

"Fn::If": [

"HasEnvironmentParameter",

{

"Fn::Sub": [

"arn:aws:lambda:${AWS::Region}:${AWS::AccountId}:function:amplifypipelineHashPassword-${env}",

{

"env": {

"Ref": "env"

}

}

]

},

{

"Fn::Sub": [

"arn:aws:lambda:${AWS::Region}:${AWS::AccountId}:function:amplifypipelineHashPassword",

{}

]

}

]

}

}

]

}

}

]

}

},

"HashPasswordLambdaDataSource": {

"Type": "AWS::AppSync::DataSource",

"Properties": {

"ApiId": {

"Ref": "AppSyncApiId"

},

"Name": "HashPasswordLambdaDataSource",

"Type": "AWS_LAMBDA",

"ServiceRoleArn": {

"Fn::GetAtt": ["HashPasswordLambdaDataSourceRole", "Arn"]

},

"LambdaConfig": {

"LambdaFunctionArn": {

"Fn::If": [

"HasEnvironmentParameter",

{

"Fn::Sub": [

"arn:aws:lambda:${AWS::Region}:${AWS::AccountId}:function:amplifypipelineHashPassword-${env}",

{

"env": {

"Ref": "env"

}

}

]

},

{

"Fn::Sub": ["arn:aws:lambda:${AWS::Region}:${AWS::AccountId}:function:amplifypipelineHashPassword", {}]

}

]

}

}

},

"DependsOn": "HashPasswordLambdaDataSourceRole"

},

"MutationCreateUserFunction": {

"Type": "AWS::AppSync::FunctionConfiguration",

"Properties": {

"ApiId": {

"Ref": "AppSyncApiId"

},

"Name": "MutationCreateUserFunction",

"DataSourceName": "UserTable",

"FunctionVersion": "2018-05-29",

"RequestMappingTemplateS3Location": {

"Fn::Sub": [

"s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/pipelineFunctions/${ResolverFileName}",

{

"S3DeploymentBucket": {

"Ref": "S3DeploymentBucket"

},

"S3DeploymentRootKey": {

"Ref": "S3DeploymentRootKey"

},

"ResolverFileName": {

"Fn::Join": [".", ["MutationCreateUserFunction", "req", "vtl"]]

}

}

]

},

"ResponseMappingTemplateS3Location": {

"Fn::Sub": [

"s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/pipelineFunctions/${ResolverFileName}",

{

"S3DeploymentBucket": {

"Ref": "S3DeploymentBucket"

},

"S3DeploymentRootKey": {

"Ref": "S3DeploymentRootKey"

},

"ResolverFileName": {

"Fn::Join": [".", ["MutationCreateUserFunction", "res", "vtl"]]

}

}

]

}

}

},

"MutationSignupUserResolver": {

"Type": "AWS::AppSync::Resolver",

"Properties": {

"ApiId": {

"Ref": "AppSyncApiId"

},

"TypeName": "Mutation",

"FieldName": "signupUser",

"Kind": "PIPELINE",

"PipelineConfig": {

"Functions": [

{

"Fn::GetAtt": ["InvokeHashPasswordLambdaDataSource", "FunctionId"]

},

{

"Fn::GetAtt": ["MutationCreateUserFunction", "FunctionId"]

}

]

},

"RequestMappingTemplateS3Location": {

"Fn::Sub": [

"s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/resolvers/${ResolverFileName}",

{

"S3DeploymentBucket": {

"Ref": "S3DeploymentBucket"

},

"S3DeploymentRootKey": {

"Ref": "S3DeploymentRootKey"

},

"ResolverFileName": {

"Fn::Join": [".", ["Mutation", "signupUser", "req", "vtl"]]

}

}

]

},

"ResponseMappingTemplateS3Location": {

"Fn::Sub": [

"s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/resolvers/${ResolverFileName}",

{

"S3DeploymentBucket": {

"Ref": "S3DeploymentBucket"

},

"S3DeploymentRootKey": {

"Ref": "S3DeploymentRootKey"

},

"ResolverFileName": {

"Fn::Join": [".", ["Mutation", "signupUser", "res", "vtl"]]

}

}

]

}

},

"DependsOn": ["MutationCreateUserFunction", "InvokeHashPasswordLambdaDataSource"]

}

}

}

- Add templates for InvokeHashPasswordLambdaDataSource pipeline function.

## pipelineFunctions/InvokeHashPasswordLambdaDataSource.req.vtl

## [Start] Invoke AWS Lambda data source: HashPasswordLambdaDataSource. **

{

"version": "2018-05-29",

"operation": "Invoke",

"payload": {

"typeName": "$ctx.stash.get("typeName")",

"fieldName": "$ctx.stash.get("fieldName")",

"arguments": $util.toJson($ctx.arguments),

"identity": $util.toJson($ctx.identity),

"source": $util.toJson($ctx.source),

"request": $util.toJson($ctx.request),

"prev": $util.toJson($ctx.prev)

}

}

## [End] Invoke AWS Lambda data source: HashPasswordLambdaDataSource. **

## pipelineFunctions/InvokeHashPasswordLambdaDataSource.res.vtl

## [Start] Handle error or return result. **

#if( $ctx.error )

$util.error($ctx.error.message, $ctx.error.type)

#end

$util.toJson($ctx.result)

## [End] Handle error or return result. **

- Now deploy the latest code to AWS.

$ amplify push
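The Fn::If blocks in the stack above pick one of two Lambda ARNs depending on whether the Amplify environment name is set. The selection can be sketched as follows. This assumes the HasEnvironmentParameter condition, whose definition is not shown in this tutorial, is false when env is missing or equal to NONE; lambdaArn and the sample region and account ID are hypothetical.

```javascript
// Sketch of the ARN selection done by Fn::If + Fn::Sub in the stack.
// ASSUMPTION: the condition is false when env is absent or 'NONE'.
function lambdaArn({ region, accountId, functionName, env }) {
  const hasEnvironmentParameter = Boolean(env) && env !== 'NONE';
  const name = hasEnvironmentParameter ? `${functionName}-${env}` : functionName;
  return `arn:aws:lambda:${region}:${accountId}:function:${name}`;
}

console.log(
  lambdaArn({
    region: 'us-east-1',
    accountId: '123456789012',
    functionName: 'amplifypipelineHashPassword',
    env: 'dev',
  })
);
// arn:aws:lambda:us-east-1:123456789012:function:amplifypipelineHashPassword-dev
```

If the push fails because the data source cannot find the function, comparing the function name in the Lambda console against this pattern is a reasonable first check.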

Step 7 - Test your mutation

- Run the following command. It will open the AppSync console, where you can run GraphQL queries and mutations under the Queries tab.

$ amplify console api

- Create a new user using the signupUser mutation.

mutation {

signupUser(

input: {

name: "Satvik Daga",

email: "test@example.com",

password: "test123"

}) {

id

name

email

password

}

}

The password of the newly created user is hashed.

First, the password field is hashed by the hash password Lambda function, and then a user with the hashed password is created by the pipeline resolver for the signupUser mutation.
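The same mutation can also be sent from application code. The following is a minimal sketch that assumes the API uses API key authorization and that fetch is available (a browser or Node.js 18+). The endpoint and API key arguments are placeholders for your own values, and buildSignupRequest and signupUser are hypothetical helper names. The password field is left out of the selection set so the hash is not sent back to the client.

```javascript
// signupUser mutation using GraphQL variables instead of inline values
const SIGNUP_USER = `
  mutation SignupUser($input: SignupUserInput!) {
    signupUser(input: $input) {
      id
      name
      email
    }
  }
`;

// Build the JSON request body for the mutation
function buildSignupRequest(input) {
  return JSON.stringify({ query: SIGNUP_USER, variables: { input } });
}

// ASSUMPTION: API key auth; endpoint and apiKey are placeholders
async function signupUser(endpoint, apiKey, input) {
  const response = await fetch(endpoint, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json', 'x-api-key': apiKey },
    body: buildSignupRequest(input),
  });
  return response.json();
}

const body = buildSignupRequest({
  name: 'Test User',
  email: 'test@example.com',
  password: 'test123',
});
console.log(JSON.parse(body).variables.input.email); // test@example.com
```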

Note: You can also test your app locally by running amplify mock. Click here for more details.

Conclusion

Congratulations! You have successfully added a pipeline resolver to your AWS Amplify application. You can now add a pipeline resolver for any GraphQL operation in the same way.

You can find the complete source code for this tutorial on GitHub.

dagasatvik10/amplify-pipeline-tutorial

dagasatvik10

/

amplify-pipeline-tutorial

Add pipeline resolver in AWS Amplify

This project is part of the tutorial to add pipeline resolvers to AWS amplify.

You can find the complete tutorial here

Prerequisites

To complete this tutorial, you will need:

- Node.js(>=10.x) and NPM(>=6.x) installed. Download from here.

- An AWS account. If you don't have one, you can create here.

- Amplify CLI installed and configured.

Getting Started

- Clone this repository

- Initialize a new AWS backend

$ amplify init

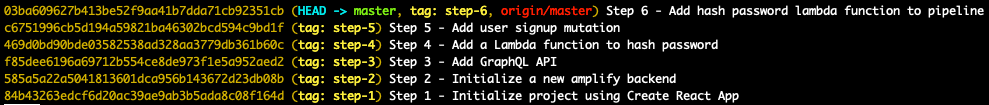

- Each commit corresponds to a complete step in the tutorial.


Top comments (4)

Great post, exactly what I was looking for! When we create a DynamoDB table with the @model annotation, Amplify creates the create/update/delete mutations with the VTL templates for us. Is it possible to use these auto-generated templates in a pipeline resolver, so that we can invoke a lambda before the original create/update VTL template?

It's not possible right now, as those templates are linked to a mutation resolver and not to a pipeline function in the CloudFormation stack generated by Amplify. You will need to create a pipeline function configuration as described in the article.

There is, however, a way to make Amplify create pipeline-based resolvers for all queries and mutations, although it's not enabled by default. Check this link docs.amplify.aws/cli/reference/fea...

Please note it's an experimental feature currently.

Hope it helps!

I'll check that out! Thank you very much for the link!

Can the same be accomplished with the new version of the GraphQL transformer (v2)?

docs.amplify.aws/cli/graphql/custo...