Have you ever had that time when you want to just get the information that you want from a website automatically, without going inside the website? What we will make today is a crawler bot that will crawl, or get information for you automatically from any website that you want. This might be useful for those who wants to get updated information from the website, or get data from a website without all those copy and paste hasle.

What we are going to use

In this tutorial, we are going to use Scrapy, an open source python framework for extracting data from websites. For more information on Scrapy, I recommend visiting their official website. https://scrapy.org/

For our development environment, I am going to use goormIDE so that I can share my container so that others can see. I'll share the container link with the completed code down below. You are free to use any development environment that you wish.

Scrapy in 5 minutes

Setting up the container

For those who wish to use goormIDE, you can check out my previous guide on how to set up a Python development environment in goormIDE here: https://dev.to/daniel2231/a-python-ide-that-you-can-use-anywhere-26cg

Installing Scrapy

Now, lets install scrapy. Write the following command in the command line.

pip install scrapy

Making a new spider

After you are done installing scrapy, we are going to use scrapy to make a scrapy project. On your parent directory (or wherever you want to put your project), run the following command.

scrapy startproject scraper

You can change scraper to any name that you want.

The default project directory will look like this:

scraper/

scrapy.cfg # Deploy configuration file

scraper/ # Project's Python module

__init__.py

items.py # Project items definition file

middlewares.py # Project middewares file

pipelines.py # Project pipelines file

settings.py # Project settings file

spiders/ # A directory for your spiders

__init__.py

Now, lets get inside our spiders folder.

cd scraper/spiders/

Making a new spider

A Spider is a set of classes which contains instructions on how a certain site or sites will be scraped.

"Spiders are the place where you define the custom behaviour for crawling and parsing pages for a particular site (or, in some cases, a group of sites)." (source: https://docs.scrapy.org/en/latest/topics/spiders.html)

We will now make a new spider that will crawl this site: https://www.tiobe.com/tiobe-index/

What I want to get from this site is the ranking of each programming language and its ratings.

Note: Before you crawl a site, make sure to check their policy on crawling. Some sites might have restrictions on what you can crawl or not. For more clear instructions, check a site's robots.txt file (You can check for them like this: www.sitename.com/robots.txt) Check this article out for more information: https://www.cloudflare.com/learning/bots/what-is-robots.txt/

What we will make is a crawler that will get the rank, name of programming language, and ratings from the website.

To make a new spider, enter the following commands:

scrapy genspider crawlbot www.tiobe.com/tiobe-index

To break up, scrapy genspider creates a new spider for us, crawlbot is the name of the spider, and the link will tell the spider where we will crawl the information.

Finding out what to crawl

Inside your spider folder, you will see that a new spider has been created.

What we must do now is to find out how we are going to tell the scraper what must be crawled.

Go to the website: https://www.tiobe.com/tiobe-index/

Right click and select inspect.

Here, we can see that the information that we need is stored inside a table.

When we open the table body tag, we can see that the information is stored inside each table row, as a form of table data.

There are two basic ways to tell our crawler what to crawl. The first way is to tell the crawler what class attribute the tag has. The second way is to get the Xpath of the tag that we would like to crawl. We are going to use the second method.

To get a tag Xpath, simply right click on the tag (on the developer console) and select Copy, Copy Xpath.

When I copy the Xpath of the rating, I get:

//*[@id="top20"]/tbody/tr[1]/td[1]

Now, remove the number behind tr and add /text() at the end of the Xpath.

//*[@id="top20"]/tbody/tr/td[1]/text()

What I just did tells the crawler that I will get the text of every first table data of every table row.

Now, lets get the Xpath of the ratings, programming language, and rank.

Rank: //*[@id="top20"]/tbody/tr/td[1]/text()

Programming language: //*[@id="top20"]/tbody/tr/td[4]/text()

Ratings: //*[@id="top20"]/tbody/tr/td[5]/text()

Now, open your crawlbot spider and add the following codes:

# -*- coding: utf-8 -*-

import scrapy

class CrawlbotSpider(scrapy.Spider):

name = 'crawlbot'

allowed_domains = ['https://www.tiobe.com/tiobe-index/']

start_urls = ['https://www.tiobe.com/tiobe-index/']

def parse(self, response):

rank = response.xpath('//*[@id="top20"]/tbody/tr/td[1]/text()').extract()

name = response.xpath('//*[@id="top20"]/tbody/tr/td[4]/text()').extract()

ratings = response.xpath('//*[@id="top20"]/tbody/tr/td[5]/text()').extract()

for item in zip(rank, name, ratings):

scraped_info = {

'Rank': item[0].strip(),

'Name': item[1].strip(),

'Ratings': item[2].strip()

}

yield scraped_info

Let's break this code up. First, we are telling the crawler where to crawl by providing a url link. Then, we make a variable where we will store the crawled data. Don't forget to put .extract() behind!

Lastly, we are returning each crawled data by using yield scraped_info.

We are now done!

Let's try running the crawler.

scrapy crawl crawlbot

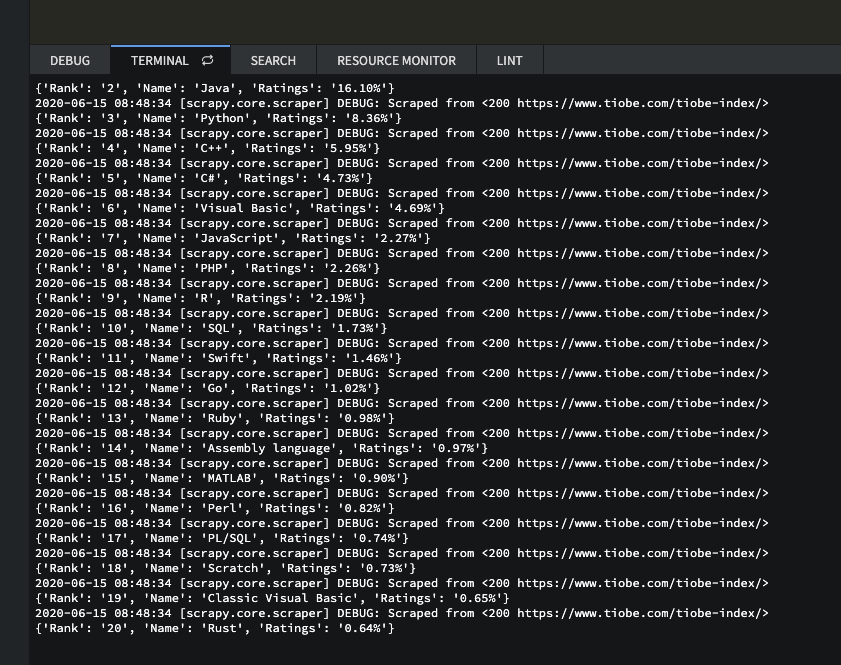

We can see that the crawler gets all the data that we need from the website. However, it is a bit inconvenient to see the data in our terminal. Let's tell our crawler to export the data in JSON format.

Export to JSON

Go to settings.py on your project directory and add this two lines of code:

FEED_FORMAT = "json"

FEED_URI = "rank.json"

Now, run the crawler again and you will see that all the data you crawled will show up in rank.json.

That's it! I'll leave my container link down below for those who wants to see the code.

Container link: https://goor.me/KqvR6

That's all folks! Happy coding!

Top comments (0)