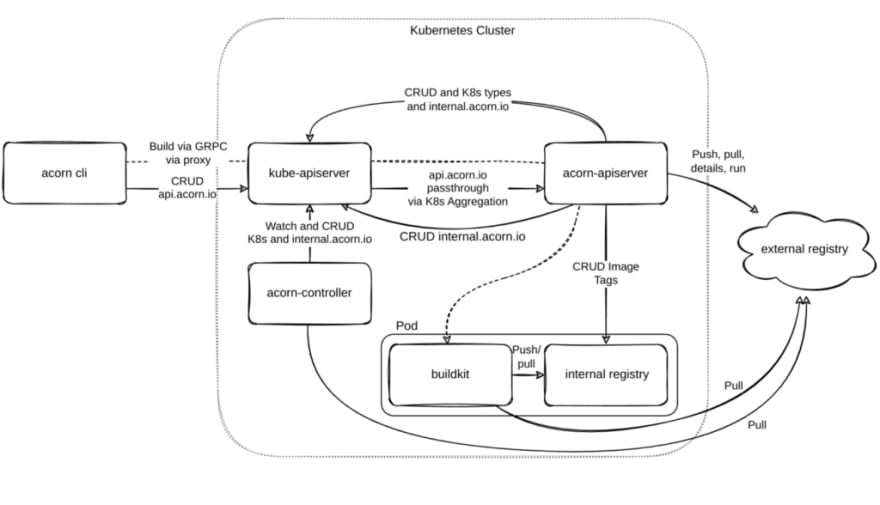

Acorn is an application packaging and deployment framework that simplifies running applications on Kubernetes. It can bundle all of an application's Docker images, configuration and deployment specifications into a single Acorn image artifact.

It is apparently the successor to the abandoned Rancher Rio project (a MicroPaaS for Kubernetes), which I described in a previous article:

Rancher Rio, un MicroPaaS basé sur Kubernetes …

This Acorn image can be published to any OCI container registry, from which it can be deployed to any Kubernetes cluster, whether for development, testing or production.

Because Acorn images are portable, developers can build applications locally and take them to production without switching tools or technology stack.

GitHub - acorn-io/acorn: A simple application deployment framework for Kubernetes

To try it out, I start by deploying a set of LXC containers on an Ubuntu 22.04 LTS instance at Linode:

root@localhost:~# snap install lxd --channel=5.9/stable

2022-12-21T21:06:55Z INFO Waiting for automatic snapd restart...

lxd (5.9/stable) 5.9-76c110d from Canonical✓ installed

root@localhost:~# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa

Your public key has been saved in /root/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:ULOLCYZJ5PCK0Em5/MTLUxqaOnyrLfI6jYsLRQBURvQ root@localhost

The key's randomart image is:

+---[RSA 3072]----+

|*oB* o |

| Oo+. . o |

|..OooE. . |

|ooo.+..+ . |

|o .* =o S |

| .o * |

|o+ . |

|Ooo. |

|*O=o. |

+----[SHA256]-----+

root@localhost:~# lxd init --minimal

root@localhost:~# lxc profile show default > lxd-profile-default.yaml

root@localhost:~# cat lxd-profile-default.yaml

config:

user.user-data: |

#cloud-config

ssh_authorized_keys:

- @@SSHPUB@@

environment.http_proxy: ""

user.network_mode: ""

description: Default LXD profile

devices:

eth0:

name: eth0

network: lxdbr0

type: nic

root:

path: /

pool: default

type: disk

name: default

used_by: []

root@localhost:~# sed -ri "s'@@SSHPUB@@'$(cat ~/.ssh/id_rsa.pub)'" lxd-profile-default.yaml

root@localhost:~# lxc profile edit default < lxd-profile-default.yaml
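The sed command above swaps the @@SSHPUB@@ placeholder for the public key, using ' as the delimiter since an OpenSSH public key can contain / and + but no quote. The sketch below replays that substitution on a temporary excerpt of the profile and fails if the placeholder survives, a cheap check before running lxc profile edit; the key used here is a dummy value, not a real one.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Work on a throwaway copy instead of the real lxd-profile-default.yaml.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
profile="$workdir/lxd-profile-default.yaml"

# Minimal excerpt of the default profile shown above, with its placeholder.
cat > "$profile" <<'EOF'
config:
  user.user-data: |
    #cloud-config
    ssh_authorized_keys:
      - @@SSHPUB@@
EOF

# Dummy public key (hypothetical), same shape as ~/.ssh/id_rsa.pub.
pubkey='ssh-rsa AAAAB3NzaC1yc2EAAAADAQAB/dummy+key== root@localhost'

# Same substitution as in the article: ' is the sed delimiter.
sed -ri "s'@@SSHPUB@@'${pubkey}'" "$profile"

# Refuse to continue if the placeholder is still there.
if grep -q '@@SSHPUB@@' "$profile"; then
  echo "placeholder still present in $profile" >&2
  exit 1
fi
grep 'ssh-rsa' "$profile"
```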

root@localhost:~# lxc profile create microk8s

Profile microk8s created

root@localhost:~# wget https://raw.githubusercontent.com/ubuntu/microk8s/master/tests/lxc/microk8s.profile -O microk8s.profile

--2022-12-21 21:15:06-- https://raw.githubusercontent.com/ubuntu/microk8s/master/tests/lxc/microk8s.profile

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 2606:50c0:8000::154, 2606:50c0:8001::154, 2606:50c0:8002::154, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|2606:50c0:8000::154|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 816 [text/plain]

Saving to: ‘microk8s.profile’

microk8s.profile 100%[=============================================================================================>] 816 --.-KB/s in 0s

2022-12-21 21:15:06 (62.5 MB/s) - ‘microk8s.profile’ saved [816/816]

root@localhost:~# cat microk8s.profile | lxc profile edit microk8s

root@localhost:~# rm microk8s.profile

I can then launch 3 LXC containers in LXD with the default and microk8s (Kubernetes-ready) profiles:

root@localhost:~# for i in {1..3}; do lxc launch -p default -p microk8s ubuntu:22.04 k3s$i; done

Creating k3s1

Starting k3s1

Creating k3s2

Starting k3s2

Creating k3s3

Starting k3s3

root@localhost:~# lxc ls

+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |

+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+

| k3s1 | RUNNING | 10.124.110.151 (eth0) | fd42:37c8:8415:72cb:216:3eff:febc:239d (eth0) | CONTAINER | 0 |

+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+

| k3s2 | RUNNING | 10.124.110.5 (eth0) | fd42:37c8:8415:72cb:216:3eff:feb8:1b00 (eth0) | CONTAINER | 0 |

+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+

| k3s3 | RUNNING | 10.124.110.108 (eth0) | fd42:37c8:8415:72cb:216:3eff:fe46:666f (eth0) | CONTAINER | 0 |

+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+
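Rather than copying the server address (10.124.110.151) by hand into K3S_URL for the agents, it can be read from LXD. A sketch assuming lxc list --format csv -c n4 prints one "name,IPv4 (iface)" line per container (several addresses per container would need extra handling); parse_lxc_ipv4 and k3s_url_for are hypothetical helpers, fed here with the values from the table above.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Print "name ip" pairs from `lxc list --format csv -c n4` output on stdin.
# Assumes a single IPv4 per container, formatted like "10.124.110.151 (eth0)".
parse_lxc_ipv4() {
  local name ipfield ip
  while IFS=, read -r name ipfield; do
    ip=${ipfield%% *}            # drop the " (eth0)" suffix
    if [ -n "$ip" ]; then
      printf '%s %s\n' "$name" "$ip"
    fi
  done
}

# Build the K3S_URL an agent needs from the server container's address.
k3s_url_for() {
  local server=$1 ip
  ip=$(parse_lxc_ipv4 | awk -v n="$server" '$1 == n && !seen { print $2; seen = 1 }')
  if [ -z "$ip" ]; then
    echo "no IPv4 found for $server" >&2
    return 1
  fi
  printf 'https://%s:6443\n' "$ip"
}

# Sample input copied from the table above; on the LXD host one would use:
#   lxc list --format csv -c n4 | k3s_url_for k3s1
sample='k3s1,10.124.110.151 (eth0)
k3s2,10.124.110.5 (eth0)
k3s3,10.124.110.108 (eth0)'

printf '%s\n' "$sample" | k3s_url_for k3s1
```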

Then I create the cluster itself with k3s (the server on k3s1, the agents on k3s2 and k3s3):

ubuntu@k3s1:~$ curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="--disable traefik --disable servicelb" K3S_TOKEN=acornpwd123 sh -s -

[INFO] Finding release for channel stable

[INFO] Using v1.25.4+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.

[INFO] systemd: Starting k3s

ubuntu@k3s2:~$ curl -sfL https://get.k3s.io | K3S_URL=https://10.124.110.151:6443 K3S_TOKEN=acornpwd123 sh -

[INFO] Finding release for channel stable

[INFO] Using v1.25.4+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service

[INFO] systemd: Enabling k3s-agent unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.

[INFO] systemd: Starting k3s-agent

ubuntu@k3s3:~$ curl -sfL https://get.k3s.io | K3S_URL=https://10.124.110.151:6443 K3S_TOKEN=acornpwd123 sh -

[INFO] Finding release for channel stable

[INFO] Using v1.25.4+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service

[INFO] systemd: Enabling k3s-agent unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.

[INFO] systemd: Starting k3s-agent
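The two agent installs above differ only by the container they run in, so they can be driven from the LXD host with lxc exec. A sketch that, by default, only prints the commands (set DRY_RUN=0 to run them); join_agent is a hypothetical helper, and acornpwd123 is the article's demo token, which a real cluster should replace with a proper secret such as the one k3s stores in /var/lib/rancher/k3s/server/node-token on the server.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Server URL and token as used above; override through the environment.
SERVER_URL=${SERVER_URL:-https://10.124.110.151:6443}
K3S_TOKEN=${K3S_TOKEN:-acornpwd123}   # demo value only, use a real secret
DRY_RUN=${DRY_RUN:-1}                 # 1 = print commands, 0 = execute them

# Hypothetical helper: install the k3s agent inside one LXD container.
join_agent() {
  local node=$1
  local cmd="curl -sfL https://get.k3s.io | K3S_URL=${SERVER_URL} K3S_TOKEN=${K3S_TOKEN} sh -"
  if [ "$DRY_RUN" = 1 ]; then
    printf "lxc exec %s -- sh -c '%s'\n" "$node" "$cmd"
  else
    lxc exec "$node" -- sh -c "$cmd"
  fi
}

for node in k3s2 k3s3; do
  join_agent "$node"
done
```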

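The k3s cluster is up and running (on k3s1, I swap the kubectl bundled with k3s for the snap package and copy the kubeconfig into ~/.kube):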

ubuntu@k3s1:~$ sudo rm /usr/local/bin/kubectl

ubuntu@k3s1:~$ mkdir .kube

ubuntu@k3s1:~$ sudo snap install kubectl --classic

kubectl 1.26.0 from Canonical✓ installed

ubuntu@k3s1:~$ sudo cp /etc/rancher/k3s/k3s.yaml .kube/config && sudo chown -R $USER .kube/*

ubuntu@k3s1:~$ kubectl cluster-info

Kubernetes control plane is running at https://127.0.0.1:6443

CoreDNS is running at https://127.0.0.1:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

Metrics-server is running at https://127.0.0.1:6443/api/v1/namespaces/kube-system/services/https:metrics-server:https/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

ubuntu@k3s1:~$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k3s2 Ready <none> 3m3s v1.25.4+k3s1 10.124.110.5 <none> Ubuntu 22.04.1 LTS 5.15.0-47-generic containerd://1.6.8-k3s1

k3s3 Ready <none> 2m32s v1.25.4+k3s1 10.124.110.108 <none> Ubuntu 22.04.1 LTS 5.15.0-47-generic containerd://1.6.8-k3s1

k3s1 Ready control-plane,master 5m46s v1.25.4+k3s1 10.124.110.151 <none> Ubuntu 22.04.1 LTS 5.15.0-47-generic containerd://1.6.8-k3s1

ubuntu@k3s1:~$ kubectl get po,svc -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/local-path-provisioner-79f67d76f8-5rxs8 1/1 Running 0 5m36s

kube-system pod/coredns-597584b69b-g2rbs 1/1 Running 0 5m36s

kube-system pod/metrics-server-5c8978b444-hp822 1/1 Running 0 5m36s

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 5m52s

kube-system service/kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 5m48s

kube-system service/metrics-server ClusterIP 10.43.249.247 <none> 443/TCP 5m47s
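The kubeconfig written by k3s (/etc/rancher/k3s/k3s.yaml) targets https://127.0.0.1:6443, which only works on k3s1 itself. To drive the cluster from the LXD host instead, a copy of it can be pointed at the server container's address. A minimal sketch on a trimmed sample of the file (certificate data omitted); point_kubeconfig_at is a hypothetical helper, and this assumes the node IP is among the API server certificate's SANs, which k3s normally adds (otherwise see the --tls-san server option).

```bash
#!/usr/bin/env bash
set -euo pipefail

# Rewrite the API server address in a copy of a k3s kubeconfig.
# Usage: point_kubeconfig_at <kubeconfig-file> <server-ip>
point_kubeconfig_at() {
  local file=$1 ip=$2
  sed -i "s#https://127\.0\.0\.1:6443#https://${ip}:6443#" "$file"
}

# Trimmed sample of /etc/rancher/k3s/k3s.yaml (certificates left out).
cfg=$(mktemp)
trap 'rm -f "$cfg"' EXIT
cat > "$cfg" <<'EOF'
apiVersion: v1
clusters:
- cluster:
    server: https://127.0.0.1:6443
  name: default
EOF

point_kubeconfig_at "$cfg" 10.124.110.151
grep 'server:' "$cfg"

# On the LXD host one could then run (not executed here):
#   lxc file pull k3s1/etc/rancher/k3s/k3s.yaml ./k3s.yaml
#   point_kubeconfig_at ./k3s.yaml 10.124.110.151
#   KUBECONFIG=./k3s.yaml kubectl get nodes
```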

Next, I install MetalLB:

ubuntu@k3s1:~$ kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.13.7/config/manifests/metallb-native.yaml

namespace/metallb-system created

customresourcedefinition.apiextensions.k8s.io/addresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bfdprofiles.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgpadvertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgppeers.metallb.io created

customresourcedefinition.apiextensions.k8s.io/communities.metallb.io created

customresourcedefinition.apiextensions.k8s.io/ipaddresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/l2advertisements.metallb.io created

serviceaccount/controller created

serviceaccount/speaker created

role.rbac.authorization.k8s.io/controller created

role.rbac.authorization.k8s.io/pod-lister created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/controller created

rolebinding.rbac.authorization.k8s.io/pod-lister created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

secret/webhook-server-cert created

service/webhook-service created

deployment.apps/controller created

daemonset.apps/speaker created

validatingwebhookconfiguration.admissionregistration.k8s.io/metallb-webhook-configuration created

ubuntu@k3s1:~$ cat dhcp.yaml

apiVersion: metallb.io/v1beta1

kind: IPAddressPool

metadata:

name: first-pool

namespace: metallb-system

spec:

addresses:

- 10.124.110.210-10.124.110.250

ubuntu@k3s1:~$ cat l2.yaml

apiVersion: metallb.io/v1beta1

kind: L2Advertisement

metadata:

name: example

namespace: metallb-system

ubuntu@k3s1:~$ cat ippool.yaml

apiVersion: metallb.io/v1beta1

kind: L2Advertisement

metadata:

name: example

namespace: metallb-system

spec:

ipAddressPools:

- first-pool

ubuntu@k3s1:~$ kubectl apply -f dhcp.yaml && kubectl apply -f l2.yaml && kubectl apply -f ippool.yaml

ipaddresspool.metallb.io/first-pool created

l2advertisement.metallb.io/example created

l2advertisement.metallb.io/example configured
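l2.yaml and ippool.yaml both define the same L2Advertisement named example: the first creates it without a spec (so it advertises every pool), the second reconfigures it to reference first-pool, hence the "created" then "configured" messages. A sketch that writes the pool and the final advertisement into a single manifest separated by ---, so one kubectl apply is enough; the file name metallb-config.yaml is an assumption.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Output file (hypothetical name); can be overridden as the first argument.
manifest=${1:-metallb-config.yaml}

# Same pool name, range and advertisement as dhcp.yaml + ippool.yaml above.
cat > "$manifest" <<'EOF'
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool
  namespace: metallb-system
spec:
  addresses:
    - 10.124.110.210-10.124.110.250
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: example
  namespace: metallb-system
spec:
  ipAddressPools:
    - first-pool
EOF

echo "wrote $manifest"
# Then, on k3s1 (not executed here):
#   kubectl apply -f metallb-config.yaml
```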

ubuntu@k3s1:~$ kubectl get po,svc -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/local-path-provisioner-79f67d76f8-5rxs8 1/1 Running 0 13m

kube-system pod/coredns-597584b69b-g2rbs 1/1 Running 0 13m

kube-system pod/metrics-server-5c8978b444-hp822 1/1 Running 0 13m

metallb-system pod/controller-84d6d4db45-j49mj 1/1 Running 0 5m52s

metallb-system pod/speaker-pnzxw 1/1 Running 0 5m52s

metallb-system pod/speaker-rs7ds 1/1 Running 0 5m52s

metallb-system pod/speaker-pr4rq 1/1 Running 0 5m52s

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 13m

kube-system service/kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 13m

kube-system service/metrics-server ClusterIP 10.43.249.247 <none> 443/TCP 13m

metallb-system service/webhook-service ClusterIP 10.43.4.24 <none> 443/TCP 5m53

Followed by the NGINX Ingress Controller, installed with Helm:

ubuntu@k3s1:~$ curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

100 11345 100 11345 0 0 45436 0 --:--:-- --:--:-- --:--:-- 45562

Downloading https://get.helm.sh/helm-v3.10.3-linux-amd64.tar.gz

Verifying checksum... Done.

Preparing to install helm into /usr/local/bin

helm installed into /usr/local/bin/helm

ubuntu@k3s1:~$ helm ls

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

ubuntu@k3s1:~$ helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

"ingress-nginx" has been added to your repositories

ubuntu@k3s1:~$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "ingress-nginx" chart repository

Update Complete. ⎈Happy Helming!⎈

ubuntu@k3s1:~$ helm install ingress-nginx ingress-nginx/ingress-nginx \

--create-namespace \

--namespace ingress-nginx

NAME: ingress-nginx

LAST DEPLOYED: Wed Dec 21 21:39:08 2022

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status by running 'kubectl --namespace ingress-nginx get services -o wide -w ingress-nginx-controller'

ubuntu@k3s1:~$ kubectl get po,svc -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/local-path-provisioner-79f67d76f8-5rxs8 1/1 Running 0 17m

kube-system pod/coredns-597584b69b-g2rbs 1/1 Running 0 17m

kube-system pod/metrics-server-5c8978b444-hp822 1/1 Running 0 17m

metallb-system pod/controller-84d6d4db45-j49mj 1/1 Running 0 10m

metallb-system pod/speaker-pnzxw 1/1 Running 0 10m

metallb-system pod/speaker-rs7ds 1/1 Running 0 10m

metallb-system pod/speaker-pr4rq 1/1 Running 0 10m

ingress-nginx pod/ingress-nginx-controller-8574b6d7c9-frtw7 1/1 Running 0 2m47s

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 18m

kube-system service/kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 18m

kube-system service/metrics-server ClusterIP 10.43.249.247 <none> 443/TCP 18m

metallb-system service/webhook-service ClusterIP 10.43.4.24 <none> 443/TCP 10m

ingress-nginx service/ingress-nginx-controller-admission ClusterIP 10.43.125.197 <none> 443/TCP 2m47s

ingress-nginx service/ingress-nginx-controller LoadBalancer 10.43.110.31 10.124.110.210 80:32381/TCP,443:30453/TCP 2m47s
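MetalLB gave the ingress-nginx-controller LoadBalancer service 10.124.110.210, the first address of first-pool. The range 10.124.110.210-10.124.110.250 holds 250 - 210 + 1 = 41 distinct addresses; it is also worth checking that it does not overlap the addresses LXD's DHCP server hands out on lxdbr0 (the node IPs .151, .5 and .108 fall outside it). A small sketch of that arithmetic; ip_to_int, ip_in_range and range_size are hypothetical helper names.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if address $1 lies within the inclusive range "start-end" in $2.
ip_in_range() {
  local ip start end
  ip=$(ip_to_int "$1")
  start=$(ip_to_int "${2%-*}")
  end=$(ip_to_int "${2#*-}")
  (( ip >= start && ip <= end ))
}

# Number of addresses in an inclusive "start-end" range.
range_size() {
  echo $(( $(ip_to_int "${1#*-}") - $(ip_to_int "${1%-*}") + 1 ))
}

pool=10.124.110.210-10.124.110.250   # first-pool from dhcp.yaml
range_size "$pool"                   # prints 41
ip_in_range 10.124.110.210 "$pool" && echo "ingress IP is in first-pool"
ip_in_range 10.124.110.151 "$pool" || echo "k3s1 node IP is outside the pool"
```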

All that's left is to download Acorn and install it into this Kubernetes cluster, pointing it at the nginx ingress class:

ubuntu@k3s1:~$ curl https://get.acorn.io | sh

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 8390 100 8390 0 0 10323 0 --:--:-- --:--:-- --:--:-- 10319

[INFO] Finding release for channel latest

[INFO] Using v0.4.2 as release

[INFO] Downloading hash https://github.com/acorn-io/acorn/releases/download/v0.4.2/checksums.txt

[INFO] Downloading archive https://github.com/acorn-io/acorn/releases/download/v0.4.2/acorn-v0.4.2-linux-amd64.tar.gz

[INFO] Verifying binary download

[INFO] Installing acorn to /usr/local/bin/acorn

ubuntu@k3s1:~$ acorn install --ingress-class-name nginx

✔ Running Pre-install Checks

✔ Installing ClusterRoles

✔ Installing APIServer and Controller (image ghcr.io/acorn-io/acorn:v0.4.2)

✔ Waiting for controller deployment to be available

✔ Waiting for API server deployment to be available

✔ Running Post-install Checks

✔ Installation done

ubuntu@k3s1:~$ kubectl get po,svc -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/local-path-provisioner-79f67d76f8-5rxs8 1/1 Running 0 21m

kube-system pod/coredns-597584b69b-g2rbs 1/1 Running 0 21m

kube-system pod/metrics-server-5c8978b444-hp822 1/1 Running 0 21m

metallb-system pod/controller-84d6d4db45-j49mj 1/1 Running 0 13m

metallb-system pod/speaker-pnzxw 1/1 Running 0 13m

metallb-system pod/speaker-rs7ds 1/1 Running 0 13m

metallb-system pod/speaker-pr4rq 1/1 Running 0 13m

ingress-nginx pod/ingress-nginx-controller-8574b6d7c9-frtw7 1/1 Running 0 5m59s

acorn-system pod/acorn-controller-56ccd55df7-26tc9 1/1 Running 0 71s

acorn-system pod/acorn-api-5dbf678bb4-j4lts 1/1 Running 0 71s

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 21m

kube-system service/kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 21m

kube-system service/metrics-server ClusterIP 10.43.249.247 <none> 443/TCP 21m

metallb-system service/webhook-service ClusterIP 10.43.4.24 <none> 443/TCP 13m

ingress-nginx service/ingress-nginx-controller-admission ClusterIP 10.43.125.197 <none> 443/TCP 5m59s

ingress-nginx service/ingress-nginx-controller LoadBalancer 10.43.110.31 10.124.110.210 80:32381/TCP,443:30453/TCP 5m59s

acorn-system service/acorn-api ClusterIP 10.43.242.173 <none> 7443/TCP 72s

Acorn packages and deploys an application into the Kubernetes cluster from a description file named Acornfile, which follows the model below:

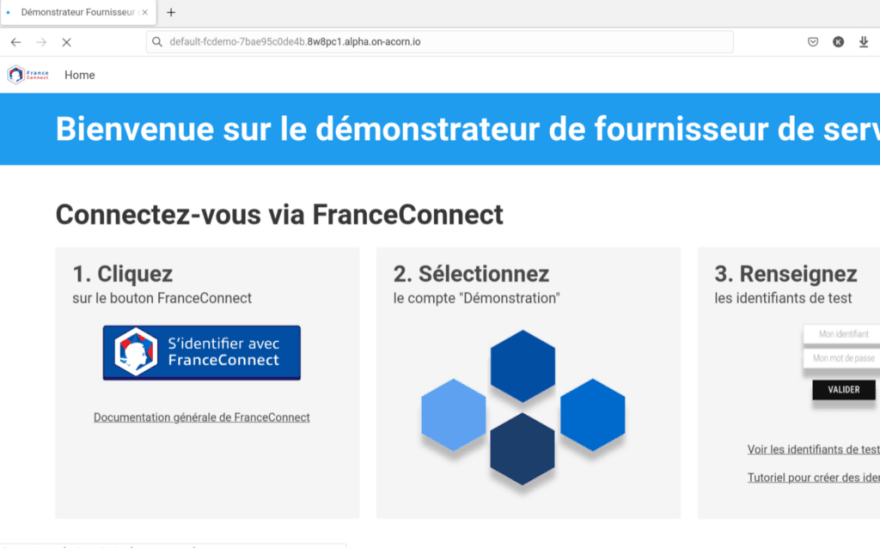

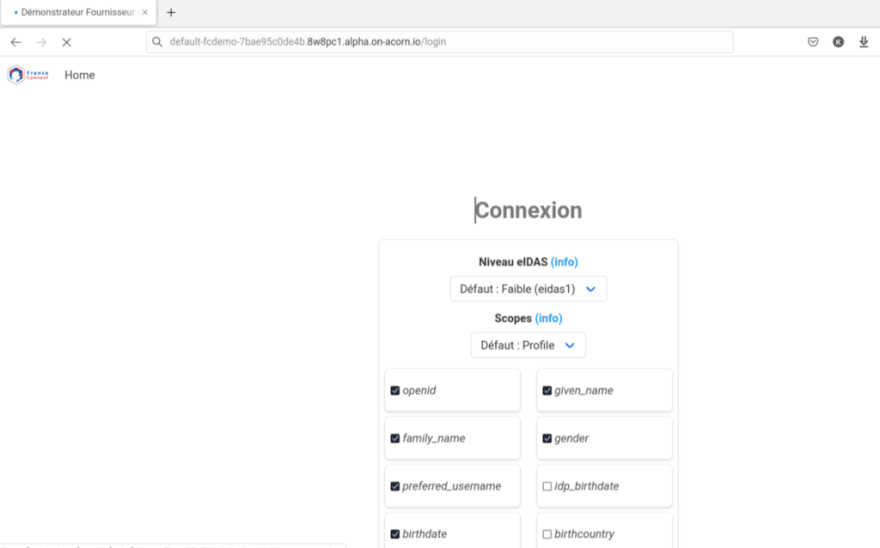

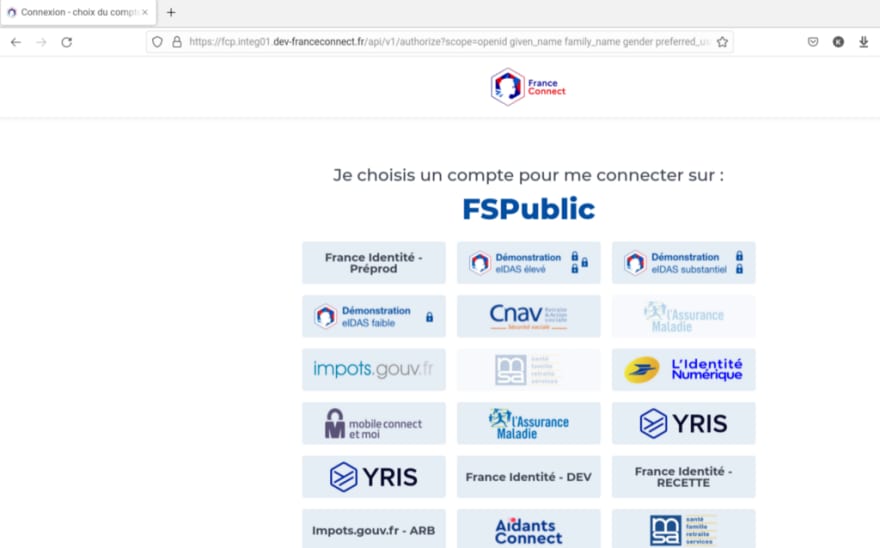

I write one for my ever-present FranceConnect (FC) demonstrator:

ubuntu@k3s1:~$ cat Acornfile

containers: {

"default": {

image: "mcas/franceconnect-demo3:latest"

ports: publish: "3000/http"

}

}
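The Acornfile above lost its indentation in the listing. Here it is again, written out by a small bash snippet so it can be recreated in a directory of its own (the fcdemo directory name is an assumption). It declares a single container named default running mcas/franceconnect-demo3:latest and publishes port 3000 as HTTP, which is what later gives the app an ingress endpoint.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Directory holding the app (hypothetical name), override with APPDIR.
appdir=${APPDIR:-fcdemo}
mkdir -p "$appdir"

# Same content as the Acornfile above, with its indentation restored.
cat > "$appdir/Acornfile" <<'EOF'
containers: {
  "default": {
    image: "mcas/franceconnect-demo3:latest"
    ports: publish: "3000/http"
  }
}
EOF

cat "$appdir/Acornfile"
# Then, from inside that directory (not executed here):
#   acorn run --name fcdemo .
```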

Then I deploy it with Acorn:

ubuntu@k3s1:~$ acorn run --name fcdemo .

Waiting for builder to start... Ready

[+] Building 3.0s (5/5) FINISHED

=> [internal] load build definition from acorn-dockerfile-1106727846 0.1s

=> => transferring dockerfile: 91B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/mcas/franceconnect-demo3:latest 1.4s

=> [1/1] FROM docker.io/mcas/franceconnect-demo3:latest@sha256:829057d8cd074cbfbd5efa49f3ccf4efc38179dcee5c62db491b74094534d772 1.3s

=> => resolve docker.io/mcas/franceconnect-demo3:latest@sha256:829057d8cd074cbfbd5efa49f3ccf4efc38179dcee5c62db491b74094534d772 0.0s

=> => sha256:784cd1fd612b7ef9870aa1d85ca7285010993bc089f1a00ae1dcd6644074a398 34.81MB / 34.81MB 1.2s

=> => sha256:97518928ae5f3d52d4164b314a7e73654eb686ecd8aafa0b79acd980773a740d 2.82MB / 2.82MB 0.3s

=> => sha256:0ec5d186b713b05c9f9ebb40df5c7c3f3b67133a2effbf3e3215756220136363 2.35MB / 2.35MB 0.8s

=> => sha256:aac42da2cde0a8ec63ca5005c110662456b1d7883a73cf353e8fcca29dc48c4d 12.82MB / 12.82MB 1.0s

=> => sha256:98dc27ad6276f18e415689e0d39332e7cac56ed95b678093f61193bb0bd9db01 451B / 451B 0.2s

=> exporting to image 1.6s

=> => exporting layers 0.0s

=> => exporting manifest sha256:51e3257180eaaa39034a45d4b29450057eff5119f7054dc365e369633d1414ee 0.0s

=> => exporting config sha256:16c10ecf24a289a494ccf9b1b45010900b36b15e1622090e0943b23ab9834e46 0.0s

=> => pushing layers 1.5s

=> => pushing manifest for 127.0.0.1:5000/acorn/acorn:latest@sha256:51e3257180eaaa39034a45d4b29450057eff5119f7054dc365e369633d1414ee 0.0s

[+] Building 0.2s (5/5) FINISHED

=> [internal] load .dockerignore 0.0s

=> => transferring context: 64B 0.0s

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 58B 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 331B 0.0s

=> [1/1] COPY . / 0.0s

=> exporting to image 0.1s

=> => exporting layers 0.0s

=> => exporting manifest sha256:9410a94c83404b7f5c080a5720c51a4fdf1e82ac6db8ddbdc105cb3ca66fdfdb 0.0s

=> => exporting config sha256:35cd6451fe8d59e1f2669329a150fc5579ccbf0f8f6d4394d9e4e7eafd1ad2ea 0.0s

=> => pushing layers 0.0s

=> => pushing manifest for 127.0.0.1:5000/acorn/acorn:latest@sha256:9410a94c83404b7f5c080a5720c51a4fdf1e82ac6db8ddbdc105cb3ca66fdfdb 0.0s

fcdemo

STATUS: ENDPOINTS[] HEALTHY[] UPTODATE[]

STATUS: ENDPOINTS[] HEALTHY[0] UPTODATE[0] pending

STATUS: ENDPOINTS[] HEALTHY[0/1] UPTODATE[1] [containers: default ContainerCreating]

STATUS: ENDPOINTS[http://<Pending Ingress> => default:3000] HEALTHY[0/1] UPTODATE[1] [containers: default ContainerCreating]

STATUS: ENDPOINTS[http://<Pending Ingress> => default:3000] HEALTHY[0/1] UPTODATE[1] [containers: default is not ready]

┌───────────────────────────────────────────────────────────────────────────────────────┐

| STATUS: ENDPOINTS[http://<Pending Ingress> => default:3000] HEALTHY[1] UPTODATE[1] OK |

└───────────────────────────────────────────────────────────────────────────────────────┘

┌───────────────────────────────────────────────────────────────────────────────────────┐

| STATUS: ENDPOINTS[http://<Pending Ingress> => default:3000] HEALTHY[1] UPTODATE[1] OK |

└───────────────────────────────────────────────────────────────────────────────────────┘

ubuntu@k3s1:~$ kubectl get po,svc,ing -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/local-path-provisioner-79f67d76f8-5rxs8 1/1 Running 0 36m

kube-system pod/coredns-597584b69b-g2rbs 1/1 Running 0 36m

kube-system pod/metrics-server-5c8978b444-hp822 1/1 Running 0 36m

metallb-system pod/controller-84d6d4db45-j49mj 1/1 Running 0 29m

metallb-system pod/speaker-pnzxw 1/1 Running 0 29m

metallb-system pod/speaker-rs7ds 1/1 Running 0 29m

metallb-system pod/speaker-pr4rq 1/1 Running 0 29m

ingress-nginx pod/ingress-nginx-controller-8574b6d7c9-frtw7 1/1 Running 0 21m

acorn-system pod/acorn-controller-56ccd55df7-26tc9 1/1 Running 0 16m

acorn-system pod/acorn-api-5dbf678bb4-j4lts 1/1 Running 0 16m

acorn-system pod/containerd-config-path-jjplx 1/1 Running 0 12m

acorn-system pod/containerd-config-path-h9lfd 1/1 Running 0 12m

acorn-system pod/buildkitd-7c9494f484-hmhp9 2/2 Running 0 12m

acorn-system pod/containerd-config-path-j68qk 1/1 Running 0 12m

fcdemo-854f3c52-95c pod/default-5699575bff-f5lkf 1/1 Running 0 12m

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 37m

kube-system service/kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 37m

kube-system service/metrics-server ClusterIP 10.43.249.247 <none> 443/TCP 37m

metallb-system service/webhook-service ClusterIP 10.43.4.24 <none> 443/TCP 29m

ingress-nginx service/ingress-nginx-controller-admission ClusterIP 10.43.125.197 <none> 443/TCP 21m

ingress-nginx service/ingress-nginx-controller LoadBalancer 10.43.110.31 10.124.110.210 80:32381/TCP,443:30453/TCP 21m

acorn-system service/acorn-api ClusterIP 10.43.242.173 <none> 7443/TCP 16m

acorn-system service/registry NodePort 10.43.86.63 <none> 5000:31187/TCP 12m

fcdemo-854f3c52-95c service/default ClusterIP 10.43.12.33 <none> 3000/TCP 12m

NAMESPACE NAME CLASS HOSTS ADDRESS PORTS AGE

fcdemo-854f3c52-95c ingress.networking.k8s.io/default nginx default-fcdemo-7bae95c0de4b.8w8pc1.alpha.on-acorn.io 10.124.110.210 80 12m

ubuntu@k3s1:~$ acorn apps

NAME IMAGE HEALTHY UP-TO-DATE CREATED ENDPOINTS MESSAGE

fcdemo 194894d2698f 1 1 70s ago http://default-fcdemo-7bae95c0de4b.8w8pc1.alpha.on-acorn.io => default:3000 OK
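The generated hostname is long; rather than copying it by hand, it can be pulled out of the acorn apps listing. A sketch assuming the plain whitespace-separated layout shown above (app name in the first column, endpoint written as "url => target"); app_endpoint and endpoint_host are hypothetical helper names, and the sample line is the one printed above.

```bash
#!/usr/bin/env bash
set -euo pipefail

# First http(s) endpoint of one app, read from `acorn apps` output on stdin.
app_endpoint() {
  local app=$1
  awk -v a="$app" '$1 == a' | grep -oE 'https?://[^ ]+' | head -n 1
}

# Strip the scheme and any path to keep the bare hostname.
endpoint_host() {
  local url=$1
  url=${url#*://}
  printf '%s\n' "${url%%/*}"
}

sample='NAME IMAGE HEALTHY UP-TO-DATE CREATED ENDPOINTS MESSAGE
fcdemo 194894d2698f 1 1 70s ago http://default-fcdemo-7bae95c0de4b.8w8pc1.alpha.on-acorn.io => default:3000 OK'

url=$(printf '%s\n' "$sample" | app_endpoint fcdemo)
echo "$url"
endpoint_host "$url"
# Live use on k3s1 (not executed here):
#   curl "$(acorn apps | app_endpoint fcdemo)"
```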

The endpoint it provides answers locally:

ubuntu@k3s1:~$ curl http://default-fcdemo-7bae95c0de4b.8w8pc1.alpha.on-acorn.io

<!doctype html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, user-scalable=no, initial-scale=1.0, maximum-scale=1.0, minimum-scale=1.0">

<meta http-equiv="X-UA-Compatible" content="ie=edge">

<link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/bulma/0.7.1/css/bulma.min.css" integrity="sha256-zIG416V1ynj3Wgju/scU80KAEWOsO5rRLfVyRDuOv7Q=" crossorigin="anonymous" />

<link rel="stylesheet" href="https://unpkg.com/bulma-tooltip@2.0.2/dist/css/bulma-tooltip.min.css" />

<title>Démonstrateur Fournisseur de Service</title>

</head>

<body>

<nav class="navbar" role="navigation" aria-label="main navigation">

<div class="navbar-start">

<div class="navbar-brand">

<a class="navbar-item" href="/">

<img src="/img/fc_logo_v2.png" alt="Démonstrateur Fournisseur de Service" height="28">

</a>

</div>

<a href="/" class="navbar-item">

Home

</a>

</div>

<div class="navbar-end">

<div class="navbar-item">

<div class="buttons">

<a class="button is-light" href="/login">Se connecter</a>

</div>

</div>

</div>

</nav>

<section class="hero is-info is-small">

<div class="hero-body">

<div class="container">

<h1 class="title is-1">

Bienvenue sur le démonstrateur de fournisseur de service

</h1>

</div>

</div>

</section>

<section class="section is-small">

<div class="container">

<h2 class="title is-2">Connectez-vous via FranceConnect</h2>

<div class="tile is-ancestor">

<div class="tile is-vertical">

<div class="tile">

<div class="tile is-parent">

<article class="tile is-child notification">

<p class="title">1. Cliquez</p>

<p class="subtitle">sur le bouton FranceConnect</p>

<div class="has-text-centered content is-vcentered">

<!-- FC btn -->

<a href="/login" data-role="login">

<img src="/img/FCboutons-10.svg" alt="">

</a>

<p style="margin-top: 2em;">

<a href="https://partenaires.franceconnect.gouv.fr/fcp/fournisseur-service">

Documentation générale de FranceConnect

</a>

</p>

</div>

</article>

</div>

<div class="tile is-parent">

<article class="tile is-child notification">

<p class="title">2. Sélectionnez</p>

<p class="subtitle">le compte "Démonstration"</p>

<div class="has-text-centered content">

<figure class="image">

<img src="/img/logoPartner-v2.png">

</figure>

</div>

</article>

</div>

<div class="tile is-parent">

<article class="tile is-child notification">

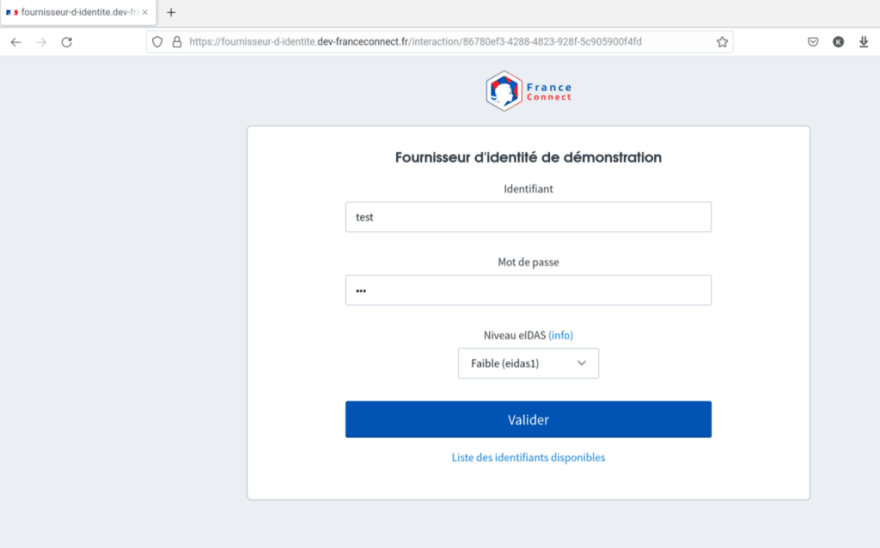

<p class="title">3. Renseignez</p>

<p class="subtitle">les identifiants de test</p>

<div class="has-text-centered content">

<figure class="image">

<img src="/img/how-it-works-form.png" class="image is-128x128">

</figure>

<p style="margin-top: 2em;">

<a href="https://github.com/france-connect/identity-provider-example/blob/master/database.csv">

Voir les identifiants de test disponibles

</a>

</p>

<p style="margin-top: 1em;">

<a href="Comment_créer_de_nouveaux_comptes_test.docx.pdf" target="_blank">

Tutoriel pour créer des identifiants test

</a>

</p>

</div>

</article>

</div>

</div>

</div>

</div>

</div>

</section>

<section class="section is-small">

<div class="container">

<h2 class="title is-2">Récupérez vos données via une API FranceConnectée</h2>

<div class="tile is-ancestor">

<div class="tile is-vertical">

<div class="tile">

<div class="tile is-parent">

<article class="tile is-child notification">

<p class="title">1. Cliquez</p>

<p class="subtitle">sur le bouton ci-dessous</p>

<div class="has-text-centered content">

<!--

FC btn

WARNING : if change keep this id(id="dataBtn"),

because it is used in cypress integration test suite in FC core

-->

<a href="/data-authorize" id="dataBtn">

<img src="img/FCboutons-data.svg" alt="">

</a>

<p style="margin-top: 2em;">

<a href="https://partenaires.franceconnect.gouv.fr/fcp/fournisseur-service">

Documentation générale de FranceConnect

</a>

</p>

</div>

</article>

</div>

<div class="tile is-parent">

<article class="tile is-child notification">

<p class="title">2. Sélectionnez</p>

<p class="subtitle">le compte "Démonstration"</p>

<div class="has-text-centered content">

<figure class="image">

<img src="/img/identity-provider-button.jpg">

</figure>

<p style="margin-top: 2em;">

<a href="https://github.com/france-connect/identity-provider-example">

Voir le code source du fournisseur d'identité "Démonstration"

</a>

</p>

</div>

</article>

</div>

<div class="tile is-parent">

<article class="tile is-child notification">

<p class="title">3. Renseignez</p>

<p class="subtitle">les identifiants de test</p>

<div class="has-text-centered content">

<figure class="image">

<img src="/img/test-credentials.jpg">

</figure>

<p style="margin-top: 2em;">

<a href="https://github.com/france-connect/identity-provider-example/blob/master/database.csv">

Voir les identifiants de test disponibles

</a>

</p>

</div>

</article>

</div>

</div>

</div>

</div>

</div>

</section>

<footer class="footer custom-content">

<div class="content has-text-centered">

<p>

<a href="https://github.com/france-connect/identity-provider-example/blob/master/database.csv">

Modifier les comptes tests

</a>

</p>

<p>

<a href="https://github.com/france-connect/data-provider-example/blob/master/database.csv">

Modifier les données associées aux comptes tests

</a>

</p>

<p>

<a href="https://github.com/france-connect/service-provider-example">

Voir le code source de cette page

</a>

</p>

<hr>

<p>

<a href="https://partenaires.franceconnect.gouv.fr/fcp/fournisseur-service">

Documentation générale de FranceConnect

</a>

</p>

</div>

</footer>

<!-- This script brings the FranceConnect tools modal which enable "disconnect", "see connection history" and "see FC FAQ" features -->

<script src="https://fcp.integ01.dev-franceconnect.fr/js/franceconnect.js"></script>

<link rel="stylesheet" href="css/highlight.min.css">

<script src="js/highlight.min.js"></script>

<script>hljs.initHighlightingOnLoad();</script>

</body>

</html>

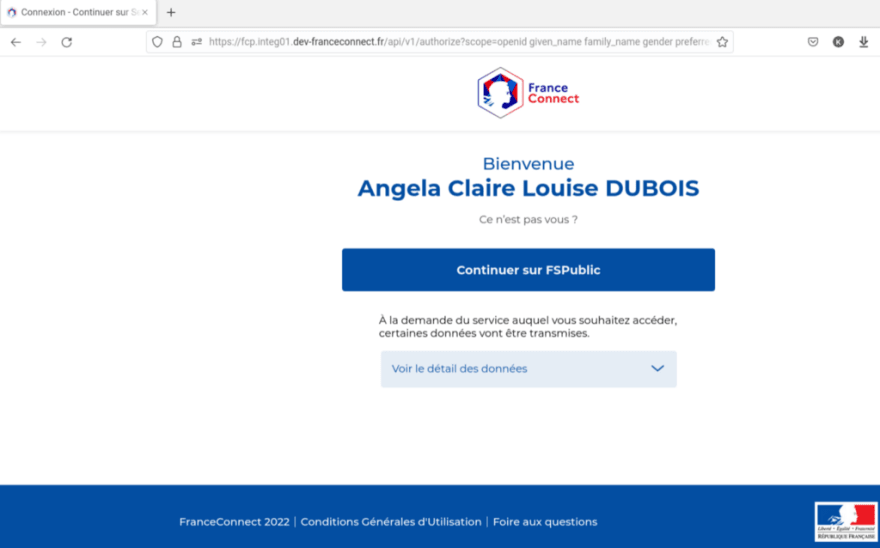

By editing your hosts file, you can then access the FC demonstrator.
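A hosts-file entry simply maps the endpoint hostname to an IP address. The article does not say which address was used: 10.124.110.210 is most likely only reachable from the Linode instance (it sits on the NATed lxdbr0 bridge), so the sketch below uses the documentation placeholder 203.0.113.10 and a temporary file rather than /etc/hosts. add_hosts_entry is a hypothetical helper that only appends the entry if the hostname is not already mapped.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Append "IP hostname" to a hosts-format file unless the hostname is present.
# Comment lines are ignored; pass /etc/hosts (with sudo) only after checking.
add_hosts_entry() {
  local file=$1 ip=$2 host=$3
  if awk -v h="$host" '/^[[:space:]]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
      END { exit !found }' "$file"; then
    echo "$host already present in $file"
  else
    printf '%s %s\n' "$ip" "$host" >> "$file"
    echo "added $ip $host to $file"
  fi
}

# Demo on a temporary file; 203.0.113.10 is a placeholder address.
hosts=$(mktemp)
trap 'rm -f "$hosts"' EXIT
printf '127.0.0.1 localhost\n' > "$hosts"
host=default-fcdemo-7bae95c0de4b.8w8pc1.alpha.on-acorn.io
add_hosts_entry "$hosts" 203.0.113.10 "$host"
add_hosts_entry "$hosts" 203.0.113.10 "$host"   # second call is a no-op
cat "$hosts"
# Without touching any hosts file: curl --resolve "$host:80:IP" "http://$host/"
```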

Acorn can also be used with GitHub Actions, as in the two examples below: the first simply installs the Acorn CLI, and the second also logs in to a container registry (Docker Hub here), the starting point for chaining the build and publication of an image:

name: My Workflow

on:

push: {}

jobs:

publish:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v3

- uses: acorn-io/actions-setup@v1

- run: |

# Do something with the CLI

acorn --version

name: My Workflow

on:

push:

tags:

- "v*"

jobs:

publish:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v3

- uses: acorn-io/actions-setup@v1

- uses: acorn-io/actions-login@v1

with:

registry: docker.io

username: yourDockerHubUsername

password: ${{ secrets.DOCKERHUB_PASSWORD }}
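The second workflow stops after logging in to the registry. A following run: step could derive the image version from the pushed tag (GitHub sets GITHUB_REF to refs/tags/v1.2.0 for a v1.2.0 tag) and then build and push with the Acorn CLI. This is only a sketch: the image name is hypothetical, acorn build -t and acorn push are assumed to be available in the installed CLI version (check acorn build --help), and it only prints the commands unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Turn "refs/tags/v1.2.0" into "1.2.0"; reject anything that is not a v* tag.
version_from_ref() {
  local ref=$1
  case "$ref" in
    refs/tags/v*) printf '%s\n' "${ref#refs/tags/v}" ;;
    *) echo "not a v* tag: $ref" >&2; return 1 ;;
  esac
}

GITHUB_REF=${GITHUB_REF:-refs/tags/v1.2.0}                # default for local tests only
IMAGE=${IMAGE:-docker.io/yourDockerHubUsername/fcdemo}    # hypothetical image name
DRY_RUN=${DRY_RUN:-1}

# Print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = 1 ]; then echo "$*"; else "$@"; fi
}

version=$(version_from_ref "$GITHUB_REF")
run acorn build -t "$IMAGE:$version" .
run acorn push "$IMAGE:$version"
```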

Acorn is still evolving and its features will change over time, so minor changes between versions are to be expected…

To go further:

- Deploying Stateful Web Apps with Acorn

- Securing Acorn App Endpoints with TLS Certificates

- Converting Docker Compose files to Acornfiles

- Getting Started with Acorn and Azure Kubernetes Service (AKS)

To be continued!
