“What’s the biggest set of items in terms of tech debt? I can attack it, scope it into the code quality sprints and then start incrementally evolving” - Badri elaborated.

In Good Code Podcast, we talk to experienced developers and technical leaders about the art and science of writing good code and building software. In episode 5, we talk to Badri Rajasekar, who has spent almost 20 years building software and is currently the founder and CEO of Jamm - a rapidly growing video collaboration app.

The Microsoft executive who alarmed everyone with a baseball bat

While speaking about code quality and code review processes, Badri shared an incident from his early days at Microsoft, when somebody broke the Windows build and left hundreds of developers waiting for it to get fixed. Soon enough, a Microsoft executive stormed into the office with a baseball bat shouting “Who broke the build?”

With this hilarious (not at the time 😅) incident, Badri expounded on how code quality reviews have evolved over the past decade. Earlier, with strongly typed languages and no automation in place, errors could easily become blunders. Today, with automated code reviews, better tooling, microservices, nightly builds, and continuous integration, there’s much more tolerance for both errors and blunders.

Quick Read: Code Review Best Practices

The flip side: With the flexibility that these modern processes offer, it becomes even more critical to be careful in order to ensure code quality at every step of the way.

In Badri’s words:

It’s just as possible for organizations in this era of continuous delivery to ship a lot of buggy code.

The takeaway: It’s extremely important to take a structured approach to automated testing.

Slow down today and move 10X faster tomorrow onwards

Inexperienced developers and new startups often skip integration testing for the sake of quick deployments, and end up accumulating a lot of tech debt before they realize it. Badri shared the example of a rapidly growing startup that couldn’t slow down and so made the problem worse for itself every day.

Badri’s advice for situations like this - get as organized as possible, and try to not fix everything in one shot.

Have a strong process around all of your basic unit tests. Integration tests have to pass before a pull request gets merged in. E.g., don’t submit a PR until the integration tests have passed. Be firm - either the tests have to pass or, if they’re not passing, you’ve got to fix the test.
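The rule above can be sketched as a small merge gate. This is only an illustration, not a tool Badri described: `CheckResult`, `can_merge`, and the check names are hypothetical, and in practice this policy is usually enforced by the CI system or the code host rather than by hand-written code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResult:
    """Outcome of one test suite run against a pull request (hypothetical type)."""
    name: str
    passed: bool


def can_merge(results, required):
    """Return (allowed, blocking) for a pull request.

    A required check that failed, or that never reported a result,
    blocks the merge. There is no "merge anyway" path: the only way
    forward is to make the check pass.
    """
    by_name = {r.name: r.passed for r in results}
    blocking = [name for name in required if not by_name.get(name, False)]
    return (not blocking, blocking)


# Example: unit tests pass, integration tests fail, so the PR stays blocked.
results = [CheckResult("unit", True), CheckResult("integration", False)]
allowed, blocking = can_merge(results, required=["unit", "integration"])
print(allowed, blocking)
```

Treating a missing result the same as a failure is a deliberate choice in this sketch: a suite that was skipped cannot vouch for the change.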

The key is to introduce code quality into the DNA of the company and encourage everyone on the team to think of it as an integral part of the process, and not rely on QA teams or site reliability engineers. Badri also shared his insights on how to do this — introducing quality sprints, having regular performance checks, taking care of the meta-questions, and maintaining documentation.

He spoke about his time at TokBox and how the team managed code quality there. They had a unified framework for instrumenting everything from platform health to analytics. They also made it fun by introducing the “Load Time Lama” - a fanciful creature who encouraged everyone to keep loading times in check.

Pro Tip: Avoid premature optimization of architecture. Until you have seen real scale, you can’t know which problems will actually crop up, so you risk optimizing for the wrong ones.

The similarity between pilots and programmers

Badri drew an analogy between pilots and programmers while explaining how to steer through massive amounts of data. When flying an airplane, a pilot mainly watches a few indicators: the airspeed indicator, altimeter, heading indicator, and so on. Only when one of those indicators shows a warning does the pilot dig deeper into the issue.

Similarly, while analytics and continuous testing are critical, developers should not get lost in the data that accumulates over time. The way out is to stick to the basics: have a “four-metric dashboard” that defines the code quality benchmarks for a team.

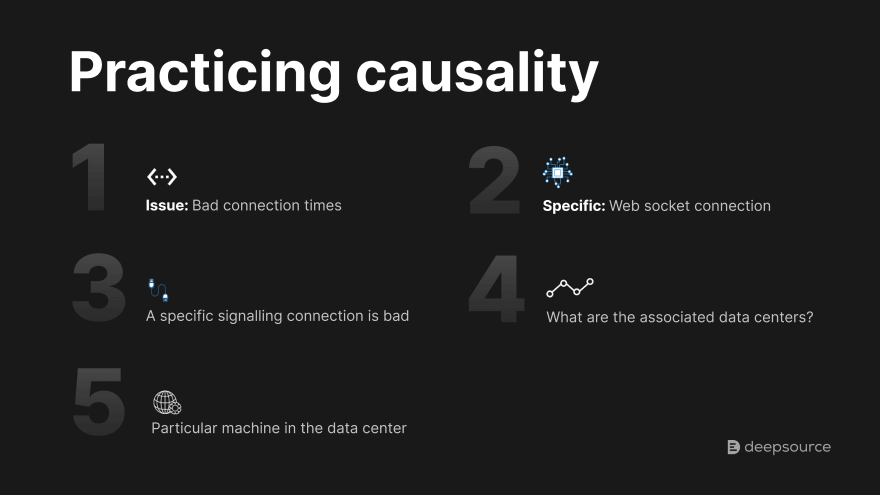
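As a rough sketch of the idea, a four-metric dashboard can be modeled as a handful of values with limits, where only the breached ones get a closer look, much like a pilot's instruments. The four metric names and thresholds below are illustrative assumptions, not the ones from the podcast, and `Metric` and `needs_attention` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """One dashboard indicator with the limit that triggers a warning."""
    name: str
    value: float
    limit: float
    higher_is_better: bool = False

    def is_warning(self):
        # For "higher is better" metrics, falling below the limit is the warning.
        if self.higher_is_better:
            return self.value < self.limit
        return self.value > self.limit


def needs_attention(dashboard):
    """Return the names of the metrics worth digging into."""
    return [m.name for m in dashboard if m.is_warning()]


# Hypothetical metrics and limits, chosen only for illustration.
dashboard = [
    Metric("build_failure_rate", 0.02, 0.05),
    Metric("test_coverage", 0.71, 0.80, higher_is_better=True),
    Metric("p95_load_time_seconds", 1.4, 2.0),
    Metric("open_critical_bugs", 3, 5),
]
print(needs_attention(dashboard))  # prints ['test_coverage']
```

Everything that stays within its limit is ignored on purpose; the detailed graphs are consulted only for the metric that raised the warning.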

At the same time, practicing causality, where you work backwards from an issue to its cause rather than staring at a sea of graphs, makes it easier to figure out causal patterns.

The conversation wrapped up with golden advice:

Golden Advice: Keep your architecture simple!

Badri advocated the KISS (keep it simple, silly) principle when it comes to architectures. A simple architecture scales better and helps resolve unforeseen problems faster, because it is easy to reason about.

After a lot of anecdotes, jokes, advice, and laughter, the chat concluded.

Interested in listening to the entire conversation?

Listen to the podcast here.

Stay tuned for the next episode of the Good Code Podcast!
