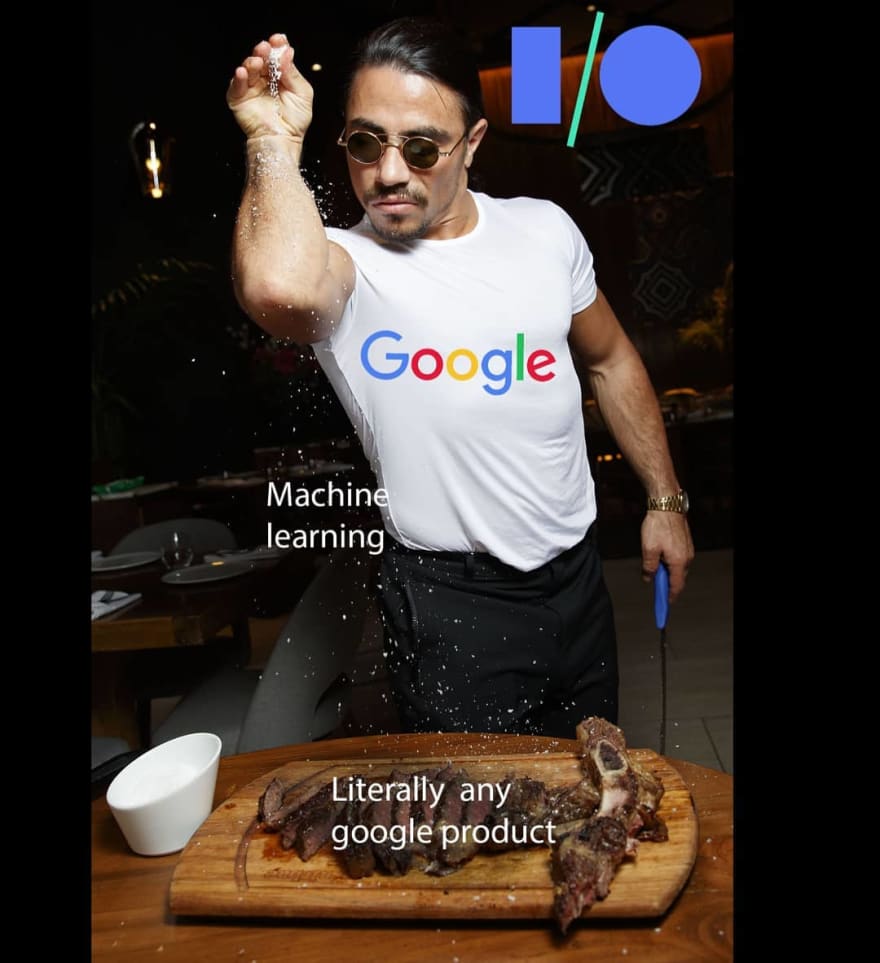

So Google's Devfest was last week, very exciting and all, and this week, while preparing the sample app for this post, I saw this on Instagram. It's a nice summation of the conference:

So Machine Learning, Machine Learning everywhere. And I don't blame them: ML is this "magical" tool that makes everything work so much better for humans. It takes a lot of effort, sometimes hours or days of "training", but the final result is, for instance, a virtual assistant that can set hair appointments for you and uses freakishly (is "freakishnessly" even a word?) realistic onomatopoeic sounds...

But let's focus on the matter at hand.

I love Firebase ❤. I know sometimes it's a headache, but its products have their uses and they're very versatile. You can either use all of them in one project or just some of them. For example, I'm currently working on an app that uses the Realtime Database, Cloud Functions and FCM to implement a chat feature. The rest is integrated with a different backend, but for the chat feature we use Firebase and it works like a charm (with some caveats, of course).

This year, Google added the beta version of ML Kit to Firebase, with three "simple" key capabilities:

- Ready-to-use APIs for common problems faced by ML users in real-world applications, such as text recognition, face detection, barcode scanning, image labelling and landmark recognition.

- The possibility of running these models on-device or in the cloud.

- Deployment of a custom model (if you have one ready in TensorFlow Lite).

So it all looks quite interesting and in my mind this is how the conversation goes.

- Google: Do you want to use Machine Learning in your app?

- Dev: Yes, sir.

- Google: Here are some ready-to-use APIs. If you use the horsepower in your phone to run the model, you won't have to pay anything. Now, if you want to use our machines in the cloud, then let's see those green bills...

- Dev: These models don't work for my specific problem.

- Google: Okay, then write one using our other product and you can deploy using this product.

You might be saying now, "please just show me the code"...

The example I'm going to use in this post is a very simple smile detector. I'm an Android dev, so I'll use Kotlin because:

First thing, dependencies:

    dependencies {
        ...
        implementation 'com.google.firebase:firebase-core:15.0.2'
        implementation 'com.google.firebase:firebase-ml-vision:15.0.0'
        ...
    }

Pretty simple, just Firebase Core and ML-Vision.

The method below is the one I call after acquiring the image. In order to process your images you need to convert them to an object that the API understands, in this case a FirebaseVisionImage:

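    // Imports that go at the top of the Activity file:
    import android.graphics.Bitmap
    import android.widget.Toast
    import com.google.firebase.ml.vision.FirebaseVision
    import com.google.firebase.ml.vision.common.FirebaseVisionImage

    private fun faceDetect(image: Bitmap) {
        // Wrap the bitmap in the image type the ML Kit API expects.
        val firebaseImage = FirebaseVisionImage.fromBitmap(image)
        // firebaseOptions is a FirebaseVisionFaceDetectorOptions instance.
        val detector = FirebaseVision.getInstance().getVisionFaceDetector(firebaseOptions)
        detector.detectInImage(firebaseImage)
            .addOnSuccessListener { faces ->
                processFaces(faces)
            }
            .addOnFailureListener { e ->
                e.printStackTrace()
                Toast.makeText(this, "Something bad happened. Cry in a corner.", Toast.LENGTH_SHORT).show()
            }
    }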

In this method I use a bitmap (for simplicity) to create the FirebaseVisionImage, but you can use other sources such as a byte array, a byte buffer, a file path or a media image. Also, to create the detector that will actually find the faces in our images, you need an instance of FirebaseVisionFaceDetectorOptions to configure it. The one I'm using looks like this:

    val firebaseOptions = FirebaseVisionFaceDetectorOptions.Builder()
        .setModeType(FirebaseVisionFaceDetectorOptions.ACCURATE_MODE)
        .setLandmarkType(FirebaseVisionFaceDetectorOptions.ALL_LANDMARKS)
        .setClassificationType(FirebaseVisionFaceDetectorOptions.ALL_CLASSIFICATIONS)
        .setMinFaceSize(0.15f)
        .setTrackingEnabled(true)
        .build()

Here I set the mode type to ACCURATE_MODE, meaning I want to favor accuracy over speed. The landmark type is set to ALL_LANDMARKS because it improved pose estimation. The classification type is set to ALL_CLASSIFICATIONS because I need it to detect the smiling feature in images. The min face size sets the smallest face the detector will look for, expressed relative to the image (as far as I understand the docs, face width over image width); I've set it to 15%. Enabling tracking gives each face an ID, which helps us keep track of the faces that have been found so far (if the model is presented with multiple images).
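To get a feel for what a min face size of 0.15 means in practice, here is a small sketch that converts the ratio into pixels. It is plain Kotlin with no Firebase dependency; `minFaceWidthPx` is a hypothetical helper of mine (not part of ML Kit), and it assumes the ratio is face width relative to image width.

```kotlin
import kotlin.math.roundToInt

/**
 * Approximate width in pixels of the smallest face the detector is asked to find.
 * Assumption: minFaceSize is the ratio of face width to image width.
 */
fun minFaceWidthPx(imageWidthPx: Int, minFaceSize: Float): Int {
    require(imageWidthPx > 0) { "imageWidthPx must be positive" }
    require(minFaceSize > 0f && minFaceSize <= 1f) { "minFaceSize must be in (0, 1]" }
    return (imageWidthPx * minFaceSize).roundToInt()
}
```

So with a 1080 px wide photo, `minFaceWidthPx(1080, 0.15f)` gives 162, meaning faces narrower than roughly 162 px may be ignored under that assumption.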

Everyone who uses Firebase knows that most of its async APIs return Task objects. It's no different here: we attach one listener to receive the result of the detection when it completes successfully, and another for when something goes wrong and you should shed a tear...

As a result you'll receive a list (possibly empty) of "faces" with many properties you can inspect. For example, you can call getBoundingBox() to get the location of the face within the image, or getSmilingProbability(), which returns the probability (from 0.0 to 1.0) that the face is smiling. A value of -1 (FirebaseVisionFace.UNCOMPUTED_PROBABILITY) means the probability wasn't computed.
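Since the probability mixes a real range with a special -1 value, it can be handy to turn it into a nullable before using it. This is just a sketch in plain Kotlin; `computedProbabilityOrNull` is a hypothetical helper, not something ML Kit provides.

```kotlin
/**
 * Returns the probability when it lies in the valid 0.0..1.0 range, or null otherwise.
 * The -1 "not computed" value falls outside the range, so it maps to null as well.
 */
fun computedProbabilityOrNull(raw: Float): Float? =
    raw.takeIf { it in 0.0f..1.0f }
```

With this, something like `computedProbabilityOrNull(face.smilingProbability) ?: return` lets the rest of the code deal only with real probabilities.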

In my case, I'm simply doing this:

    private fun processFaces(faces: List<FirebaseVisionFace>) {
        if (faces.isEmpty()) {
            // The detector found nothing in the image.
            Toast.makeText(this, "Is there a face here?", Toast.LENGTH_SHORT).show()
        } else {
            for (face in faces) {
                val smileProb = face.smilingProbability
                if (smileProb != FirebaseVisionFace.UNCOMPUTED_PROBABILITY) {
                    // A probability above 0.5 counts as a smile.
                    if (smileProb > 0.5) {
                        Toast.makeText(this, "Smiley face :)", Toast.LENGTH_SHORT).show()
                    } else {
                        Toast.makeText(this, "Not a smiley face :(", Toast.LENGTH_SHORT).show()
                    }
                } else {
                    // The smile probability was not computed for this face.
                    Toast.makeText(this, "Is there a face here?", Toast.LENGTH_SHORT).show()
                }
            }
        }
    }

So for each face it finds, it checks whether the probability of a smile is larger than 0.5 (I could use a larger number). If so, it shows a smiley toast :). If not, :(.
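If you want to play with the threshold, or show one message per image instead of one toast per face, the decision logic can be pulled out of the UI code. The sketch below is plain Kotlin and only an illustration: `Smile`, `classifySmile` and `summarize` are names I made up, and the probabilities are passed in as plain floats rather than read from FirebaseVisionFace.

```kotlin
enum class Smile { SMILING, NOT_SMILING, UNKNOWN }

/** Classifies one probability; anything outside 0.0..1.0 (such as -1) is UNKNOWN. */
fun classifySmile(probability: Float, threshold: Float = 0.5f): Smile = when {
    probability !in 0.0f..1.0f -> Smile.UNKNOWN
    probability > threshold -> Smile.SMILING
    else -> Smile.NOT_SMILING
}

/** Builds a single message for all faces found in one image. */
fun summarize(probabilities: List<Float>, threshold: Float = 0.5f): String {
    if (probabilities.isEmpty()) return "Is there a face here?"
    val smiling = probabilities.count { classifySmile(it, threshold) == Smile.SMILING }
    return "$smiling of ${probabilities.size} face(s) smiling"
}
```

In the app this could be called as `summarize(faces.map { it.smilingProbability })`, with a stricter threshold such as 0.7f if 0.5 turns out to be too generous.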

So as a tool for speeding up the development of ML features in mobile apps, I reckon ML Kit does a decent job. I wrote this app in a couple of hours, and it only took that long because I was worried the UI was too ugly (it still is, lol). The built-in models are quite accurate (from what I've tested), and the custom models (not tested by me) look like a great option for very specific applications. Let's see how much the usage and the tools evolve in the next few months...

That's it, I hope you liked this post because it was a lot of fun writing it ;)
