We are thrilled to team up with Timescale to bring the community our newest challenge. We think you'll like this one.

Running through November 10, the Open Source AI Challenge with pgai and Ollama provides an opportunity to build with AI and experience the power of Postgres – all within the open source ecosystem!

Whether you’re new to coding or have been in the industry for years, this will be a fun way to learn something new and stretch your creativity. There is one prompt for this challenge but four ways to win.

We hope you give it a try!

Our Prompt

Your mandate is to build an AI application with open-source tools, using the open-source database PostgreSQL as your vector database along with two or more of the following related tools: pgvector, pgvectorscale, pgai, and pgai Vectorizer.

PostgreSQL offers a number of extensions for AI (pgvector, pgvectorscale, and pgai), making it possible to power AI applications such as search, RAG, and AI agents without a separate vector database.
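Here is what "PostgreSQL as your vector database" means in practice. pgvector's `<=>` operator returns the cosine distance between two vectors, so a query like `ORDER BY embedding <=> query LIMIT k` returns the k closest rows. Without an index, pgvector computes this ordering exactly. The Python sketch below reproduces that exact ordering with a brute-force scan over an in-memory dictionary. The toy three-dimensional vectors and document names are made up for illustration; real model embeddings have hundreds of dimensions.

```python
import math
from typing import Dict, List, Tuple


def cosine_distance(a: List[float], b: List[float]) -> float:
    """1 - cosine similarity, the quantity pgvector's <=> operator returns."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query: List[float], rows: Dict[str, List[float]], k: int) -> List[Tuple[str, float]]:
    """Exact k-nearest-neighbour scan, analogous to ORDER BY embedding <=> query LIMIT k."""
    scored = [(name, cosine_distance(query, vec)) for name, vec in rows.items()]
    scored.sort(key=lambda pair: pair[1])  # smallest distance first
    return scored[:k]


# Hypothetical toy "table" of document embeddings.
docs = {
    "postgres_intro": [0.9, 0.1, 0.0],
    "vector_search": [0.7, 0.7, 0.1],
    "cooking_tips": [0.0, 0.1, 0.9],
}

for name, dist in nearest([0.8, 0.5, 0.0], docs, k=2):
    print(f"{name}: {dist:.3f}")
```

A brute-force scan like this gets slower as the table grows. Approximate indexes, such as pgvectorscale's StreamingDiskANN described below, exist to avoid that linear cost.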

Additional Prize Categories

In addition to competing for the overall prompt, you can work toward three additional prize categories!

- Open-source Models from Ollama: Awarded to a project that utilizes open-source LLMs via Ollama for embedding and/or generation models.

- Vectorizer Vibe: Awarded to a project that leverages the pgai Vectorizer tool for embedding creation in their RAG application.

- All the Extensions!: Awarded to a project that leverages all three of the PostgreSQL extensions for AI: pgvector, pgvectorscale, and pgai.
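For the Open-source Models from Ollama category, a common pattern is to run an embedding model locally and call it through Ollama's HTTP API. The sketch below makes three assumptions: a local Ollama server on its default port 11434, its `/api/embed` endpoint, and the `nomic-embed-text` model, pulled beforehand with `ollama pull nomic-embed-text`. Swap in whichever model you actually use. The sketch needs only the Python standard library.

```python
import json
import urllib.request
from typing import List

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/embed"


def build_embed_request(text: str, model: str = "nomic-embed-text") -> urllib.request.Request:
    """Build a JSON POST request for Ollama's /api/embed endpoint."""
    body = json.dumps({"model": model, "input": text}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def embed(text: str, model: str = "nomic-embed-text") -> List[float]:
    """Send the request and return the first embedding vector in the response."""
    with urllib.request.urlopen(build_embed_request(text, model)) as resp:
        payload = json.load(resp)
    # /api/embed returns {"embeddings": [[...], ...]}, one vector per input.
    return payload["embeddings"][0]
```

With the server running, `embed("PostgreSQL can act as a vector database.")` returns a list of floats that can be stored in a pgvector column. The vector's length depends on the model.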

Be sure to indicate all the categories your project may qualify for as part of your submission.

Judging Criteria and Prizes

All submissions will be judged on the following:

- Use of underlying technology

- Usability and User Experience

- Accessibility

- Creativity

- If applicable, additional prize category requirements

Overall Prompt Winner (1) will receive:

- $1,500 USD

- 6-month DEV++ Membership

- Exclusive DEV Badge

- A gift from the DEV Shop

Prize Category Winners (3) will receive:

- $500 USD

- 6-month DEV++ Membership

- Exclusive DEV Badge

- A gift from the DEV Shop

All participants with a valid submission will receive a completion badge on their DEV profile.

Additional Resources

We encourage everyone participating in the challenge to join the pgai Discord community to connect with fellow builders.

- Get started with a free hosted PostgreSQL database on Timescale Cloud

- Note: Timescale Cloud is accessible globally and runs on these AWS regions.

- For self-host guidance, check out the following repositories:

timescale/pgvectorscale

A complement to pgvector for high performance, cost efficient vector search on large workloads.

pgvectorscale

pgvectorscale builds on pgvector with higher performance embedding search and cost-efficient storage for AI applications.

pgvectorscale complements pgvector, the open-source vector data extension for PostgreSQL, and introduces the following key innovations for pgvector data:

- A new index type called StreamingDiskANN, inspired by the DiskANN algorithm, based on research from Microsoft.

- Statistical Binary Quantization: developed by Timescale researchers, this compression method improves on standard Binary Quantization.
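The quantization idea can be shown in a few lines. Standard binary quantization keeps one bit per dimension (1 if the value is positive), so candidate vectors can be compared with a cheap Hamming distance. The sketch below shows how a statistical variant can help: it thresholds each dimension at its mean over the dataset instead of at zero. This is a hypothetical, simplified toy that assumes a mean-based threshold. It is not pgvectorscale's implementation, and the real method differs in its details.

```python
from typing import List


def dimension_means(vectors: List[List[float]]) -> List[float]:
    """Per-dimension mean across the dataset (the assumed statistical threshold)."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def quantize(vec: List[float], thresholds: List[float]) -> int:
    """Pack one bit per dimension into an int: 1 if the value exceeds its threshold."""
    bits = 0
    for i, (x, t) in enumerate(zip(vec, thresholds)):
        if x > t:
            bits |= 1 << i
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits, a cheap proxy for vector distance."""
    return bin(a ^ b).count("1")


# Hypothetical embeddings whose values are all positive: with a zero
# threshold every vector quantizes to all ones and they become indistinguishable.
data = [
    [0.9, 0.2, 0.6, 0.1],
    [0.8, 0.3, 0.5, 0.2],
    [0.1, 0.9, 0.2, 0.8],
]

zero = [0.0] * 4
means = dimension_means(data)

print([quantize(v, zero) for v in data])   # zero threshold
print([quantize(v, means) for v in data])  # mean threshold
```

With the mean threshold, the two similar vectors share a code and the dissimilar one differs in every bit. A zero threshold collapses all three into the same code.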

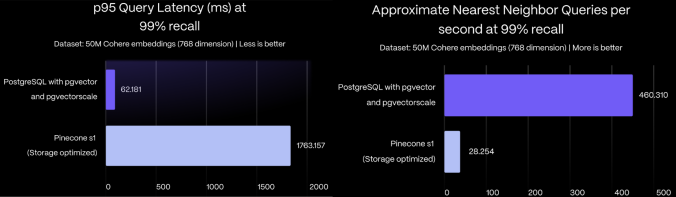

On a benchmark dataset of 50 million Cohere embeddings with 768 dimensions each, PostgreSQL with pgvector and pgvectorscale achieves 28x lower p95 latency and 16x higher query throughput compared to Pinecone's storage-optimized (s1) index for approximate nearest neighbor queries at 99% recall, all at 75% less cost when self-hosted on AWS EC2.
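The benchmark quotes two standard metrics. Recall@k is commonly defined as the fraction of the true k nearest neighbors (from an exact scan) that the approximate index also returns. p95 latency is the time under which 95% of queries complete. The minimal sketch below computes both. The result IDs and latencies are made up, and p95 uses the nearest-rank method, one of several common percentile definitions.

```python
import math
from typing import List, Sequence


def recall_at_k(approx_ids: Sequence[int], exact_ids: Sequence[int]) -> float:
    """Fraction of the exact top-k neighbours that the approximate search also returned."""
    k = len(exact_ids)
    return len(set(approx_ids[:k]) & set(exact_ids)) / k


def p95(latencies_ms: List[float]) -> float:
    """95th-percentile latency using the nearest-rank method."""
    ordered = sorted(latencies_ms)
    rank = math.ceil(95 * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical results for one query with k = 10.
exact = [3, 8, 15, 16, 23, 42, 51, 67, 70, 88]
approx = [3, 8, 15, 16, 23, 42, 51, 67, 70, 91]  # one true neighbour missed
print(recall_at_k(approx, exact))

# Hypothetical per-query latencies in milliseconds.
samples = [float(ms) for ms in range(1, 21)]
print(p95(samples))
```

A benchmark "at 99% recall" tunes each index until about 99 of every 100 true neighbors are returned, then compares speed and cost at that accuracy.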

To learn more about the performance impact of pgvectorscale, and details about benchmark methodology and results, see the pgvector vs Pinecone comparison blog post.

In contrast to pgvector, which…

timescale/pgai

A suite of tools to develop RAG, semantic search, and other AI applications more easily with PostgreSQL

pgai simplifies the process of building search, Retrieval Augmented Generation (RAG), and other AI applications with PostgreSQL. It complements popular extensions for vector search in PostgreSQL, such as pgvector and pgvectorscale, building on top of their capabilities.

Overview

The goal of pgai is to make working with AI easier and more accessible to developers. Because data is the foundation of most AI applications, pgai makes it easier to leverage your data in AI workflows. In particular, pgai supports:

Working with embeddings generated from your data:

- Automatically create and sync vector embeddings for your data (learn more)

- Search your data using vector and semantic search (learn more)

- Implement Retrieval Augmented Generation inside a single SQL statement (learn more)

- Perform high-performance, cost-efficient ANN search on large vector workloads with pgvectorscale…
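pgai lets you implement RAG inside a single SQL statement, but the underlying steps are the same in any language: retrieve the most relevant chunks, combine them into a prompt as context, and send that prompt to a generation model. The Python sketch below covers only the prompt-assembly step. The template, the `max_chars` budget, and the sample chunks are illustrative assumptions. Retrieval and generation are left out.

```python
from typing import List

# Hypothetical prompt template; adjust the wording for your model.
TEMPLATE = (
    "Answer the question using only the context below.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\nAnswer:"
)


def build_rag_prompt(question: str, chunks: List[str], max_chars: int = 2000) -> str:
    """Join retrieved chunks (already ordered by relevance) into a prompt,
    stopping before the context would exceed max_chars."""
    selected: List[str] = []
    used = 0
    for chunk in chunks:
        if used + len(chunk) > max_chars:
            break  # keep only the most relevant chunks that fit the budget
        selected.append(chunk)
        used += len(chunk)
    context = "\n---\n".join(selected)
    return TEMPLATE.format(context=context, question=question)


# Hypothetical chunks, as if returned by a vector search ordered by distance.
retrieved = [
    "pgvector adds a vector data type and similarity operators to PostgreSQL.",
    "pgvectorscale adds the StreamingDiskANN index.",
    "pgai can call embedding and chat models from SQL.",
]
print(build_rag_prompt("Which extension adds the vector type?", retrieved, max_chars=150))
```

The resulting string would then go to a chat model, for example one served by Ollama. The character budget is a crude stand-in for the model's context window.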

Important Dates

- October 30: Open Source AI Challenge with pgai and Ollama begins!

- November 10: Submissions due at 11:59 PM PDT

- November 14: Winners Announced (originally November 12)

We can’t wait to see what you build with PostgreSQL and open-source AI tools! Questions about the challenge? Ask them below.

Good luck and happy coding!

Top comments (26)

This looks awesome! I love hackathons!

Good luck everyone!


I don't think my 5-year-old laptop with 512 MB of GPU RAM will be able to run a 7B LLM 😂

If we want to use an Ollama model, does that mean we have to host the model ourselves and open access to the public?

Did you get an answer to your question? I think we have to deploy the model; otherwise, how would the judges try the model?

So many questions since I'm not familiar with LLMs at all:

No need to write in Python. You can create a Postgres function and invoke it as a SQL statement from any PG client.

This means the chat response is generated from pgai and you are getting it back via Postgres.

Is this compatible with the Open Source AI Definition just ratified by the OSI, please? I don't see Ollama on the list of endorsements there.

Depends on the model you are using. Ollama is basically a wrapper around llama.cpp, and llama.cpp serves as the host for inference with an LLM. Both Ollama and llama.cpp are published under open source licenses, but some models are not open source.

Yes, so the answer is (I think) that this contest is not compatible with the OSI Open Source AI Definition. Thanks.

If I use pgai and create vector embeddings, does this mean that I've already used two tools, pgai and pgai Vectorizer, thereby meeting the prompt requirement?

YAY!!!

Hey guys, please fix the url for dev.to/timescale

Thanks, fixed!
