My Outreachy internship has ended. It went by fast, and I learned a lot during this period. I had never programmed in Python as much as I did in Apache Airflow. My mentor Jarek Potiuk guided me as I wrote my first lines of code for Open Source, and I am very grateful for his patience 🙌.

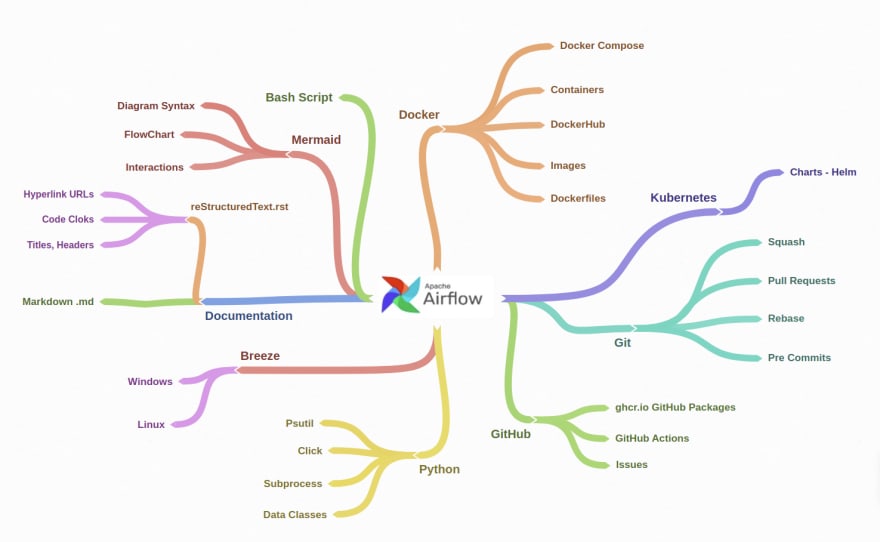

This basically sums up what I learned on the technical skills side.

Apache Airflow is an open source workflow management platform for data engineering pipelines. Workflows are defined as directed acyclic graphs (DAGs) of tasks written in Python. It is considered one of the most robust platforms used by data engineers.
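
To make the DAG idea concrete, here is a minimal sketch that uses only Python's standard library. It is not Airflow's API: the task names (`extract`, `transform`, `load`, `report`) are made up for illustration, and `graphlib` only computes an order in which the tasks could run.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key is a task, each value is the set of
# tasks that must finish before that task can start.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}


def execution_order(deps):
    """Return one valid order in which the tasks can run."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
```

Because the graph is acyclic, there is always at least one valid order. If two tasks depended on each other, `graphlib` would raise a `CycleError` instead.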

Apache Airflow has a very complete and complex local development environment called Breeze, written mainly in Bash. However, most project contributors are proficient in Python rather than Bash, so the Bash-based environment is hard to maintain and debug.

🎯 Our goal: convert the Airflow local development environment (Breeze) from Bash-based to Python-based, and also make it possible for Windows users to run the Breeze environment.

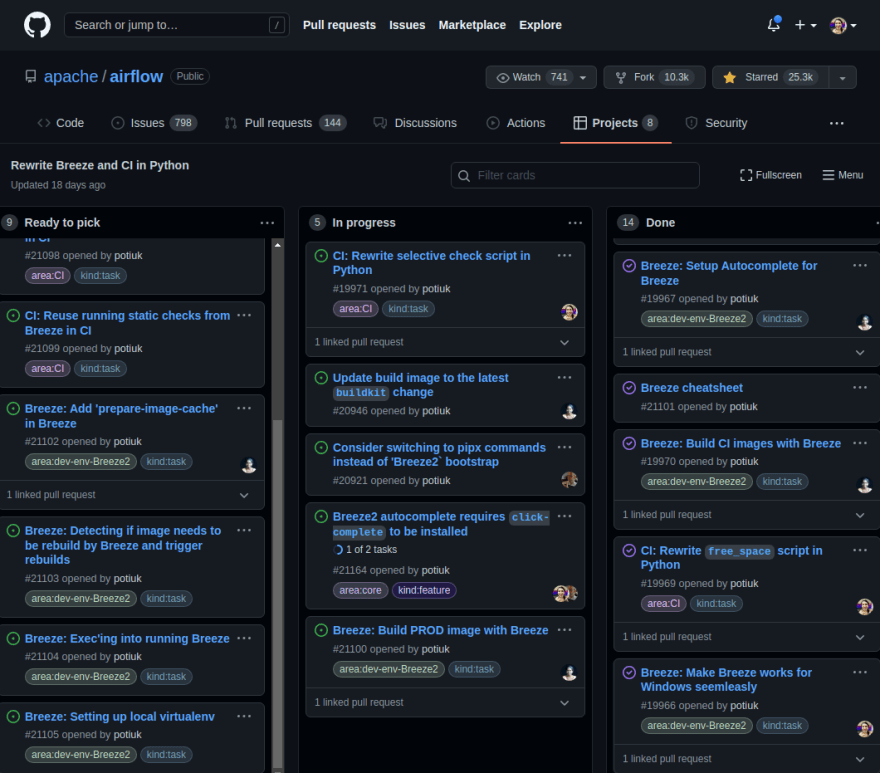

My mentors detailed each task in the backlog using GitHub Projects. Shout-out to my mentors Jarek Potiuk, Elad Kalif and Nasser Kaze for giving me so much detail to get started.

Backlog of our tasks on GitHub

Technical Skills

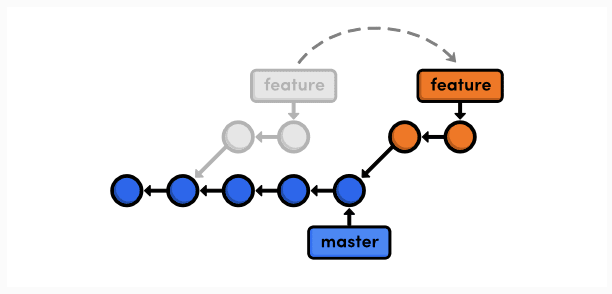

To start working on a feature, we create a separate branch with `git checkout -b <new-branch>`. We always rebase, so that our changes are placed on top of the latest updates in the original repository.

    git remote add apache git@github.com:apache/airflow.git
    git fetch apache
    git fetch --all

    # This will print the HASH of the base commit which you
    # should use to rebase your feature from
    git merge-base my-branch apache/main

    git rebase HASH --onto apache/main
    git push --force-with-lease

One of the challenges for me was understanding how to resolve conflicts with Git, and rebasing was one of the techniques that worked quite well for me.

Through discussions, my mentor and I analyzed the Bash script code and defined the best way to approach it. Rewriting it in Python is not a line-by-line translation: some things can definitely be done differently thanks to the flexibility of Python.
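
As one illustration of what can change when a script moves from Bash to Python, here is a small sketch that locates a project root by walking up the directory tree. This is not Breeze's actual code: the function name `find_project_root` and the marker file name `setup.cfg` are assumptions chosen for the example.

```python
from pathlib import Path
from typing import Optional


def find_project_root(start: Path, marker: str = "setup.cfg") -> Optional[Path]:
    """Walk up from `start` until a directory containing `marker` is found.

    The marker file name is a hypothetical choice for this sketch.
    """
    start = start.resolve()
    for candidate in (start, *start.parents):
        if (candidate / marker).is_file():
            return candidate
    return None


if __name__ == "__main__":
    # Prints the detected root, or None when no marker file is found.
    print(find_project_root(Path.cwd()))
```

Because `pathlib` handles path separators itself, the same function works on Linux, macOS and Windows, which matters for the goal of supporting Windows users.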

Before pushing, we use pre-commit hooks to check the code before it goes to the main repository.

    pre-commit install
    pre-commit --version
    pre-commit uninstall

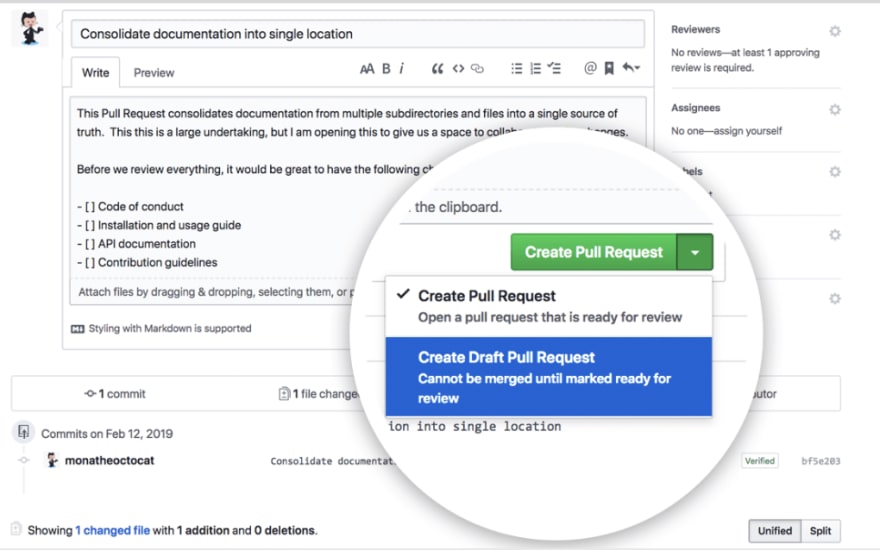

After finishing the implementation, we can create a Pull Request. If it is not ready, we can mark it as a draft to indicate that it is a work in progress and not yet ready for review.

> Image by Luke Hefson


Once our Pull Request is created, test workflows in GitHub Actions are executed. They validate many things, depending on the content that was modified. For example:

If the change is related to documentation, validation is quite simple and awaits the approval of our mentor.

If the core of Apache Airflow is changed, it runs all the necessary tests with different versions of Python.
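
The idea of running only the checks that a change needs can be sketched in a few lines of Python. This is a simplified illustration and not the real Selective Check logic: the path prefixes (`docs/`, `airflow/`) and the check names are assumptions made for the example.

```python
from typing import Iterable, Set

# Hypothetical mapping from a path prefix to the checks it triggers.
RULES = {
    "docs/": {"docs-build"},
    "airflow/": {"static-checks", "unit-tests"},
}


def select_checks(changed_files: Iterable[str]) -> Set[str]:
    """Collect the checks triggered by the given changed file paths."""
    selected: Set[str] = set()
    for path in changed_files:
        for prefix, checks in RULES.items():
            if path.startswith(prefix):
                selected |= checks
    return selected


print(sorted(select_checks(["docs/index.rst"])))
print(sorted(select_checks(["airflow/models/dag.py", "docs/index.rst"])))
```

A documentation-only change selects a single light check here, while a change under the core package selects the heavier ones as well.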

This is an amazing flowchart that describes the whole test workflow.

Mermaid flowchart created by Jarek Potiuk

Once all the Jobs finish successfully, our Pull Request is ready to be approved and merged.

During my contributions I used several Python libraries. These are some of them:

psutil

A library to retrieve information on running processes and system utilization (CPU, memory, disks, network, sensors) in Python.

Click

Click is a Python package for creating beautiful command line interfaces in a composable way with as little code as necessary.

subprocess

The subprocess module allows you to spawn new processes, connect to their input/output/error pipes, and obtain their return codes.

Data classes

Data classes are just regular classes that are geared towards storing state, rather than containing a lot of logic.
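
Two of these, data classes and `subprocess`, come from the standard library and combine naturally. The sketch below is only an illustration, not code from Breeze: the `CommandSpec` class and the `run_command` helper are hypothetical names, and the command simply asks the current Python interpreter to print a message so that it behaves the same on any operating system.

```python
import subprocess
import sys
from dataclasses import dataclass, field
from typing import List


@dataclass
class CommandSpec:
    """Hypothetical container for the state needed to run one command."""

    program: str
    args: List[str] = field(default_factory=list)

    def as_list(self) -> List[str]:
        """Return the command in the list form that subprocess expects."""
        return [self.program, *self.args]


def run_command(spec: CommandSpec) -> subprocess.CompletedProcess:
    """Run the command and capture its output as text."""
    return subprocess.run(
        spec.as_list(), capture_output=True, text=True, check=False
    )


spec = CommandSpec(sys.executable, ["-c", "print('hello from a child process')"])
result = run_command(spec)
print(result.returncode, result.stdout.strip())
```

Passing the command as a list instead of a single shell string avoids quoting problems, which is one of the things that tends to be fragile in Bash scripts.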

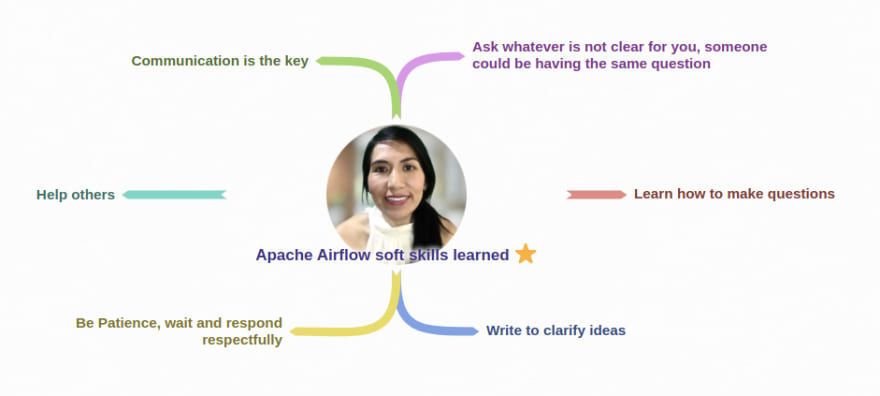

Soft Skills

These were some of the soft skills I focused on. I'm still learning.

One of the great challenges for me was to communicate effectively, both orally and in writing. I have been able to perform well in writing, as I have taken the time to detail my thoughts and let my mentor know. Orally, the weekly meetings and the one-on-ones with my mentor were a great opportunity to practice. I am quite happy because it is my first professional experience in an English-speaking environment.

I have tried to summarize my whole experience in one post. I have left many things unmentioned, but I will cover them little by little in other posts.

Some technical things I am exploring these days are Docker, Kubernetes, Helm charts and GHCR.io in the Apache Airflow environment.

I am still working in Open Source on Apache Airflow, changing the script which executes the tests in GitHub Actions. It is called Selective Check and it is written in Bash. My mentor and I want to make it easier to work with by moving it to Python.

🌿 My last words:

Thanks, Outreachy, and thanks to my mentors for giving me this opportunity in Apache Airflow. 🥲🙏😊🙋🙈

I hope that you have learned something from this, and if you have questions about these topics please do not hesitate to write to me.

🌱 Edi LinkedIn

🌱 Edi GitHub
