Hi! The following post is generated using some super cool LLMs, and hey it’s also about LLMs!

Introduction

In this blog post, we’ll explore a practical use case for LLMs: generating alt text for images automatically, ensuring your applications are more inclusive and accessible.

By the end of this post, you’ll learn how to:

- Leverage Llama 3.2 Vision for image analysis.

- Implement the solution in a .NET project.

- Test the application using a sample GitHub repository.

This is the main repo: https://github.com/elbruno/Image-AltText-Generator

Let’s dive in!

Main Demo Repo

What Does the Application Do?

The application generates descriptive alt text for images using gpt-4o-mini (online) or Llama 3.2 Vision (locally). Alt text is essential for accessibility, enabling visually impaired users to understand the content of an image. This solution uses AI to:

- Upload and analyze an image.

- Generate an accurate, human-readable description of the image content.

- Provide the description as alt text that can be used in web applications or reports.
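To make the last point concrete, here is a minimal sketch of dropping a generated description into an `<img>` tag. The helper names `html_escape` and `img_tag`, the file name `cat.png`, and the sample description are illustrative assumptions, not part of the repo. The one real requirement is escaping the text before it goes into the attribute.

```shell
#!/bin/sh
# Escape the characters that would break an HTML attribute value.
# "&" is handled first so the entities added afterwards are not escaped twice.
html_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g' -e 's/"/\&quot;/g'
}

# Print an <img> tag for a file name and its alt text (both hypothetical inputs).
img_tag() {
  printf '<img src="%s" alt="%s">\n' "$(html_escape "$1")" "$(html_escape "$2")"
}

img_tag "cat.png" "A fluffy orange cat sitting on a wooden bench surrounded by green plants."
```

Model output can contain quotes or angle brackets, so escaping it keeps a stray `"` from cutting the attribute short.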

Technology Stack

- .NET: main desktop app.

- Ollama: to run LLMs locally.

- Llama 3.2 Vision: vision language model for understanding and describing images.

- OpenAI APIs: to call gpt-4o-mini online as an alternative to the local model.

How to Run the Test Using the Sample Code

Prerequisites

Before running the application, ensure you have the following:

- .NET SDK: version 9.0 or higher.

- Git: to clone the sample repository.

- To analyze the image, one of two options:

  - OpenAI API key: to use gpt-4o-mini online.

  - Ollama: to run the Llama 3.2 Vision model locally.
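A quick way to see which of these tools are already on your machine is a small check like the one below. It is only a sketch: `check_tool` is a hypothetical helper name, and the script just reports what it finds on `PATH` without installing anything.

```shell
#!/bin/sh
# Report whether a command is available on PATH.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
  else
    echo "missing: $1"
  fi
}

check_tool git
check_tool dotnet   # if found, run `dotnet --version` and look for 9.0 or higher
check_tool ollama   # only needed for the local option
```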

Step-by-Step Guide

1. Clone the Repository

Start by cloning the sample repository:

git clone https://github.com/elbruno/Image-AltText-Generator.git

cd Image-AltText-Generator

2. For Local Use, Set Up the Llama 3.2 Vision Model

- Install Ollama: follow the instructions on the Ollama website.

- Download the llama3.2-vision model: after installing Ollama, download the model by following the instructions on the Ollama website or by using the Ollama CLI.
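With the Ollama CLI, the download is usually a single pull. A minimal sketch, assuming Ollama is already installed and that the model is still published under the tag `llama3.2-vision` in the Ollama library (check the model page if the tag has changed):

```shell
#!/bin/sh
# Assumption: the model tag in the Ollama library is "llama3.2-vision".
MODEL="llama3.2-vision"

if command -v ollama >/dev/null 2>&1; then
  ollama pull "$MODEL"   # downloads the model weights (several GB)
  ollama list            # the model should now appear in the local list
else
  echo "ollama not found on PATH; install it first"
fi
```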

3. Run the Application

Launch the .NET application:

dotnet run

4. Test the Application

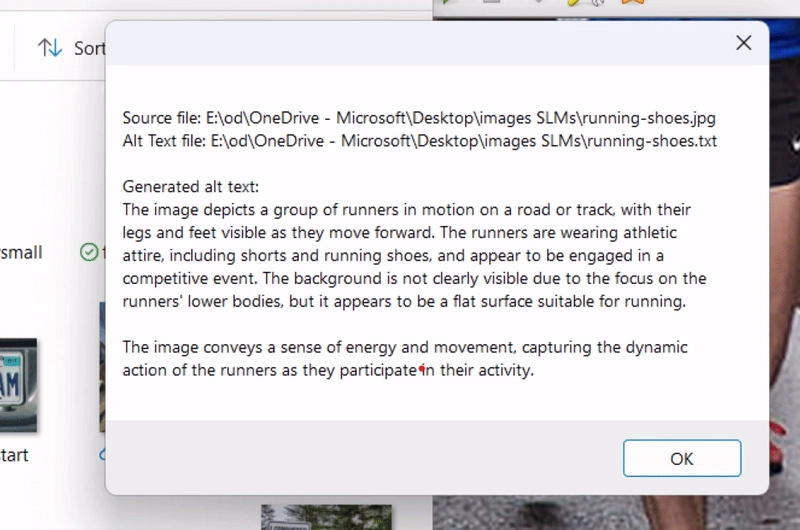

Copy an image to the clipboard and run the application. The application will:

- Analyze the image using a vision model.

- Generate alt text.

For example, copying a picture of a cat might return:

“A fluffy orange cat sitting on a wooden bench surrounded by green plants.”
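For the local option, this boils down to sending the image and a prompt to the model running in Ollama. The sketch below is not the repo's .NET code. It talks to Ollama's REST API directly and assumes the default local address `http://localhost:11434`, the model tag `llama3.2-vision`, and an image file on disk rather than the clipboard. The function names and the prompt text are made up for illustration.

```shell
#!/bin/sh
# Sketch only: calls Ollama's /api/chat endpoint; the sample app may do this differently.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"   # Ollama's default local address
MODEL="llama3.2-vision"                              # assumed model tag

# Print the JSON request body: one user message carrying a base64-encoded image.
build_payload() {
  image_b64=$(base64 < "$1" | tr -d '\n')   # strip line wraps so the JSON stays valid
  printf '{"model":"%s","stream":false,"messages":[{"role":"user","content":"%s","images":["%s"]}]}\n' \
    "$MODEL" "Write one sentence of alt text for this image." "$image_b64"
}

# POST the body to Ollama and print the raw JSON reply.
describe_image() {
  build_payload "$1" | curl -s "$OLLAMA_URL/api/chat" -H "Content-Type: application/json" -d @-
}
```

With Ollama running and the model pulled, `describe_image cat.png` prints the JSON reply. The generated description is in the `message.content` field.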

Conclusions

Integrating AI models like Llama 3.2 Vision into .NET applications unlocks practical, real-world use cases. By automating tasks like generating alt text, developers can improve the user experience and build more inclusive applications with little extra effort.

This tutorial shows how to use LLMs for image understanding and description in .NET. With tools like Ollama, the OpenAI APIs, and the sample GitHub repository, you’re just a few steps away from embedding AI capabilities into your projects.

So, what are you waiting for? Clone the repo, test the application, and start building your next AI-powered solution!


Happy coding!

Greetings

El Bruno

More posts on my blog ElBruno.com.

More info at https://beacons.ai/elbruno
