By Nikolay S.

In this article, we’ll try to explain what digital video is and how it works. We’ll use a lot of examples, so even if difficult reading usually makes you want to run away, fear not: we’ve got you. So lean back and enjoy this explanation of video from Nikolay, our CEO. 😉

Analog and digital video

Video can be analog or digital.

All real-world information around us is analog: waves in the ocean, sound, clouds floating in the sky. It’s a continuous flow of information that isn’t divided into discrete parts and can be represented as waves. People perceive the world around them as exactly this kind of analog information.

Old video cameras that recorded onto magnetic cassettes stored information in analog form. Reel-to-reel and cassette tape recorders worked on the same principle: the magnetic tape passed over the recorder’s magnetic heads, which read the signal back so that sound and video could be played. Vinyl records were also analog.

Such recordings could only be played back in the order in which they were recorded. Editing them was very difficult, and so was transferring them to the Internet.

Now that computers are everywhere, almost all video is digital: it is stored as zeros and ones. When you shoot video on your phone, the image is converted from analog to digital form and stored in memory; when you play it back, it’s converted from digital back to analog (light on the screen). This lets you stream video over a network, store it on your hard drive, and edit and compress it.
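To make "converted from analog to digital" a bit more concrete, here is a minimal Python sketch of the two basic steps: measuring a continuous signal at regular intervals (sampling) and rounding each measurement to one of a fixed number of levels (quantization). The sine wave, the sample rate, the 8-bit level count and the function names are arbitrary choices for illustration; a real camera sensor digitizes light with far more involved hardware.

```python
import math

def sample_and_quantize(signal, duration_s, sample_rate_hz, bits):
    """Sample a continuous signal at regular intervals and round each
    sample to one of 2**bits integer levels (a toy analog-to-digital step).

    Assumption: signal(t) returns values between -1.0 and 1.0.
    """
    levels = 2 ** bits
    codes = []
    for i in range(int(duration_s * sample_rate_hz)):
        t = i / sample_rate_hz                  # sampling: measure at fixed times
        value = signal(t)
        # quantization: map [-1, 1] onto the integer range 0 .. levels - 1
        code = round((value + 1) / 2 * (levels - 1))
        codes.append(max(0, min(levels - 1, code)))
    return codes

def wave(t):
    """A hypothetical 'analog' signal: a 1 Hz sine wave."""
    return math.sin(2 * math.pi * t)

print(sample_and_quantize(wave, duration_s=1.0, sample_rate_hz=8, bits=8))
```

The continuous wave becomes a short list of whole numbers, and that list is something a computer can store, copy, send over a network and edit.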

What a digital video is made of

A video is a sequence of pictures, or frames, that change so quickly that objects appear to move on the screen.

Here is an example of how a video clip is made.
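Here is the same idea as a toy Python sketch: a video is just a list of frames, and each frame is a grid of pixels. The tiny 5×3 frames and the single lit pixel standing in for a moving object are made up for illustration; real frames hold millions of colored pixels.

```python
def make_frames(width, height, n_frames):
    """Build a toy video: each frame is a grid (list of rows) of 0/1 pixels,
    with one lit pixel that moves one step to the right in every frame."""
    frames = []
    for f in range(n_frames):
        frame = [[0] * width for _ in range(height)]
        frame[height // 2][f % width] = 1   # the "moving object"
        frames.append(frame)
    return frames

def show(frame):
    """Render a frame as text: '#' for lit pixels, '.' for dark ones."""
    return "\n".join("".join("#" if p else "." for p in row) for row in frame)

video = make_frames(width=5, height=3, n_frames=3)
for i, frame in enumerate(video):
    print(f"frame {i}:\n{show(frame)}\n")
```

No single frame contains any motion; the movement only appears when the frames are shown one after another.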

What is Frame Rate

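Frames on the screen change at a certain rate. The number of frames shown per second is called the frame rate (or framerate), measured in frames per second (FPS). The long-standing standard for cinema is 24 frames per second, while television typically uses 25 or 30 frames per second, depending on the region. Some high-frame-rate formats go further, to 48 or 60 frames per second.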
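Frame rate also tells you how long each frame stays on screen and how many frames a clip contains. A small Python sketch (the function names are just for illustration):

```python
def frame_duration_ms(fps):
    """How long each frame stays on screen, in milliseconds."""
    return 1000 / fps

def frame_count(fps, seconds):
    """How many frames a clip of the given length contains."""
    return round(fps * seconds)

for fps in (24, 25, 30, 60):
    print(f"{fps} fps: {frame_duration_ms(fps):.1f} ms per frame, "
          f"{frame_count(fps, 60):,} frames per minute")
```

At 24 fps each frame is shown for about 41.7 ms, and one minute of video already contains 1,440 frames.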

The higher the frame rate, the more detail you can see in fast-moving objects in the video.

Check out the difference between 15, 30, and 60 FPS.
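One way to see why higher frame rates look smoother: at a fixed speed, an object jumps a shorter distance between consecutive frames. A sketch, assuming a hypothetical object that crosses 600 pixels of screen per second:

```python
def pixels_per_frame(speed_px_per_s, fps):
    """Distance an object moving at constant speed jumps between two consecutive frames."""
    return speed_px_per_s / fps

speed = 600  # hypothetical speed: 600 pixels per second
for fps in (15, 30, 60):
    print(f"{fps} fps: the object jumps {pixels_per_frame(speed, fps):.0f} px per frame")
```

At 15 fps the object jumps 40 pixels at a time, at 60 fps only 10, so its motion is captured in four times as many, smaller steps.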

What is a Pixel

All displays on TVs, tablets, phones and other devices are made up of tiny glowing dots called pixels. Let’s say that each pixel can display one color (technically, a pixel is usually made up of even smaller red, green and blue subpixels, and different manufacturers implement this differently).

To display an image, each pixel on the screen has to glow a certain color.

Because screens work this way, each frame of a digital video is a set of colored dots, or pixels.

The number of these dots horizontally and vertically is called the picture resolution. Resolution is written as, for example, 1024×768: the first number is the number of pixels horizontally, and the second is the number vertically.
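A resolution also tells you how many pixels each frame holds: just multiply the two numbers. A short Python sketch; the helper name is hypothetical, and the extra resolutions (1920×1080 and 3840×2160, commonly called Full HD and 4K UHD) are added for comparison:

```python
def parse_resolution(text):
    """Split a resolution string such as '1024×768' or '1024x768' into (width, height)."""
    width, height = text.lower().replace("×", "x").split("x")
    return int(width), int(height)

for res in ("1024×768", "1920×1080", "3840×2160"):
    w, h = parse_resolution(res)
    print(f"{res}: {w} pixels wide, {h} tall, {w * h:,} pixels per frame")
```

1024×768 is 786,432 pixels per frame; 3840×2160 is 8,294,400, more than ten times as many.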

All frames in a video have the same resolution, which is called the video resolution.

Let’s take a closer look at a single pixel. On the screen it’s a glowing dot of a certain color, but in the video file itself a pixel is stored as digital information (numbers). From these numbers, the device works out what color the pixel should light up on the screen.
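What those numbers look like depends on the format. As one illustrative assumption, take RGB (described in the next section) with 8 bits per channel: a pixel is then three numbers from 0 to 255, which can be packed into a single 24-bit integer. Real video files usually store pixels differently (typically in YCbCr, and compressed), so treat this as a sketch:

```python
def pack_rgb(r, g, b):
    """Pack three 8-bit channel values (0-255) into one 24-bit integer."""
    for value in (r, g, b):
        if not 0 <= value <= 255:
            raise ValueError("each channel must be in 0..255")
    return (r << 16) | (g << 8) | b

def unpack_rgb(pixel):
    """Recover the three 8-bit channel values from a packed 24-bit pixel."""
    return (pixel >> 16) & 0xFF, (pixel >> 8) & 0xFF, pixel & 0xFF

orange = pack_rgb(255, 165, 0)
print(orange, hex(orange), unpack_rgb(orange))
```

Orange (255, 165, 0) packs into 0xFFA500, the same form used for hex colors on the web.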

What are Color Spaces

There are different ways of representing the color of a pixel digitally, and these ways are called color spaces.

Color spaces are set up so that any color is represented by a point that has certain coordinates in that space.

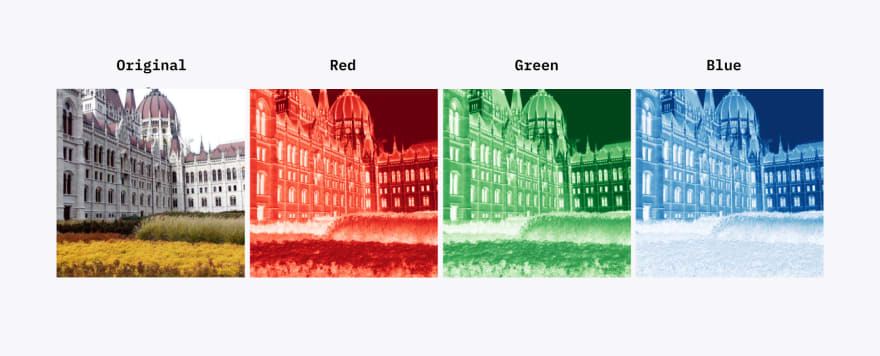

For example, RGB (Red Green Blue) is a three-dimensional color space in which each color is described by three coordinates: one each for the red, green and blue components.

Any color in this space is represented as a combination of red, green, and blue.
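Since each color is a point (R, G, B), combining colored light amounts to adding coordinates. A minimal sketch, assuming 8-bit coordinates from 0 to 255 and simply capping each sum at 255:

```python
# A few colors as points (R, G, B) in RGB space, each coordinate from 0 to 255
RED = (255, 0, 0)
GREEN = (0, 255, 0)
BLUE = (0, 0, 255)

def mix(*colors):
    """Add colored light together: sum each coordinate, capped at 255."""
    return tuple(min(255, sum(channel)) for channel in zip(*colors))

print(mix(RED, GREEN))        # (255, 255, 0): yellow
print(mix(RED, GREEN, BLUE))  # (255, 255, 255): white
```

Red plus green light gives yellow, and all three at full strength give white.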

Here is an example of an RGB image decomposed into its constituent colors.
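The same decomposition can be sketched in Python: an RGB image is split into three single-channel images, one per color. The 2×2 image is made up for illustration:

```python
def split_channels(image):
    """Split an RGB image (list of rows of (R, G, B) tuples) into three
    single-channel images, one each for red, green and blue."""
    red = [[px[0] for px in row] for row in image]
    green = [[px[1] for px in row] for row in image]
    blue = [[px[2] for px in row] for row in image]
    return red, green, blue

# A hypothetical 2×2 image: red, green on top; blue, white below
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
r, g, b = split_channels(image)
print("R:", r)
print("G:", g)
print("B:", b)
```

The white pixel shows up at full strength in all three channels, while each pure color appears in only one.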

There are many color spaces, and they differ in the number of colors that can be encoded with them and the amount of memory required to represent the pixel color data.

The most popular color spaces are RGB (used in computer graphics), YCbCr (used in video), and CMYK (used in printing).

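CMYK works on a similar principle to RGB, but it has 4 base colors: Cyan, Magenta, Yellow and Key (black). Unlike RGB, which adds colored light together, CMYK is subtractive: printed inks absorb light.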
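For a feel of how the two spaces relate, here is the simple textbook formula for converting RGB to CMYK, as a Python sketch. Real printing workflows use device-specific color profiles rather than this naive conversion:

```python
def rgb_to_cmyk(r, g, b):
    """Naive conversion of 8-bit RGB (0-255) to CMYK fractions (0.0-1.0).
    Printers use device-specific color profiles instead of this formula."""
    if (r, g, b) == (0, 0, 0):
        return 0.0, 0.0, 0.0, 1.0            # pure black: only the Key ink
    r_, g_, b_ = r / 255, g / 255, b / 255
    k = 1 - max(r_, g_, b_)                  # black ink covers the shared darkness
    c = (1 - r_ - k) / (1 - k)               # cyan ink absorbs red light
    m = (1 - g_ - k) / (1 - k)               # magenta ink absorbs green light
    y = (1 - b_ - k) / (1 - k)               # yellow ink absorbs blue light
    return c, m, y, k

print(rgb_to_cmyk(255, 0, 0))  # red = full magenta + full yellow
```

Pure red comes out as (0.0, 1.0, 1.0, 0.0): no cyan, full magenta, full yellow and no black, which is how red is mixed from printing inks.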

_RGB and CMYK are not very efficient for video, because their components are strongly correlated, so they store a lot of redundant information._

_Video uses a more efficient color space that takes advantage of how human vision works._

The human eye is less sensitive to the color of objects than it is to their brightness.

In the left part of the image, squares A and B are actually the same color. They only seem different to us, because the brain makes us pay more attention to brightness than to color. In the right part, a strip of the same color connects the two marked squares, so we (that is, our brain) can easily see that they really are the same color.

We can take advantage of this feature of vision by separating an image’s luminance (brightness) from its color information. During compression, the color information can then be reduced to half or even a quarter of its original resolution, while luminance is kept at full resolution. People usually won’t notice the difference, and we save much of the storage that color information would otherwise take.
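Here is a rough count of how much this saves, in Python. It uses the standard chroma subsampling labels 4:4:4, 4:2:2 and 4:2:0, which the article doesn't name, and assumes a 1920×1080 frame; 4:2:2 keeps half of the color samples and 4:2:0 a quarter:

```python
# Chroma subsampling schemes as (horizontal factor, vertical factor):
# how many luma pixels share one Cb and one Cr sample in each direction.
SCHEMES = {
    "4:4:4": (1, 1),   # full color resolution
    "4:2:2": (2, 1),   # color at half horizontal resolution
    "4:2:0": (2, 2),   # color at half horizontal and half vertical resolution
}

def samples_per_frame(width, height, scheme):
    """Count the luma (Y) and chroma (Cb + Cr) samples one frame needs."""
    fx, fy = SCHEMES[scheme]
    luma = width * height
    # Ceiling division so odd dimensions still get a chroma sample at the edge
    chroma = 2 * (-(-width // fx)) * (-(-height // fy))
    return luma, chroma

for scheme in SCHEMES:
    luma, chroma = samples_per_frame(1920, 1080, scheme)
    print(f"{scheme}: {luma:,} luma + {chroma:,} chroma = {luma + chroma:,} samples")
```

For a 1920×1080 frame, 4:2:0 needs 3,110,400 samples instead of 6,220,800 at full color resolution: half the total, even though brightness is kept in full.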

We’ll talk about how exactly color compression works in the next article.

The best-known color space that works this way is YCbCr, together with the closely related YUV and YIQ.

Here is an example of an image decomposed into its YCbCr components, where Y’ is the luma (brightness) component and Cb and Cr are the blue-difference and red-difference color components.
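The conversion from RGB to YCbCr is a simple weighted sum. As one concrete example, here is a Python sketch using the full-range BT.601 coefficients from JPEG (JFIF); video standards such as BT.709 use different weights and usually a limited range, so the exact numbers vary:

```python
def rgb_to_ycbcr(r, g, b):
    """Convert 8-bit RGB to 8-bit full-range YCbCr using the BT.601
    coefficients as applied in JPEG (JFIF). Video standards such as
    BT.709 use different coefficients and usually a limited range."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    # Round to the nearest integer and clamp into the 8-bit range 0..255
    return tuple(max(0, min(255, round(v))) for v in (y, cb, cr))

print(rgb_to_ycbcr(255, 0, 0))      # red
print(rgb_to_ycbcr(128, 128, 128))  # mid-gray: Cb and Cr sit at the neutral 128
```

Any shade of gray (R = G = B) gets Cb = Cr = 128, the neutral value, so all of its information sits in Y’. That is why black-and-white video can drop Cb and Cr entirely.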

YCbCr is the color space used for color coding in video. First, it allows color information to be compressed; second, it suits black-and-white video (for example, from surveillance cameras) well, since the color information (Cb and Cr) can simply be omitted.

What is Bit Depth

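Bit depth (or color depth) is the number of bits used to store each color component of a pixel, such as R, G and B or Y, Cb and Cr. The more bits, the more colors can be encoded, and the more memory each pixel occupies. The more colors, the smoother the gradients and the better the picture looks.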

For a long time, the standard for video was a bit depth of 8 bits, typically paired with Standard Dynamic Range (SDR). Nowadays 10-bit and 12-bit video is increasingly common, and High Dynamic Range (HDR) formats typically require at least 10 bits.

Keep in mind that different color spaces can encode a different number of colors with the same number of bits per pixel.
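To see how quickly the number of colors grows with bit depth, here is a small Python sketch, assuming three components per pixel and counting every combination of values. Because of the color-space caveat just mentioned, treat the results as upper bounds: in YCbCr, for example, some combinations don't correspond to a displayable color.

```python
def colors_for_bit_depth(bits_per_component, components=3):
    """Number of value combinations one pixel can take when each of its
    color components (e.g. R, G, B) is stored with the given number of bits."""
    levels = 2 ** bits_per_component      # shades per component
    return levels ** components

for bits in (8, 10, 12):
    print(f"{bits}-bit: {2 ** bits:,} levels per component, "
          f"{colors_for_bit_depth(bits):,} colors per pixel")
```

8 bits per component gives about 16.8 million colors, while 10 bits gives over a billion.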

What is Bit Rate

Bit rate is the number of bits that one second of video takes up. To calculate the bit rate of uncompressed video, multiply the number of pixels in a frame by the number of bits per pixel and by the number of frames per second. With a bit depth of 10 bits for each of the three color components, each pixel takes 30 bits:

1024 pixels × 768 pixels × 30 bits × 24 frames per second = 566,231,040 bits per second

That’s 70,778,880 bytes, 69,120 kilobytes or 67.5 megabytes per second (counting 1 kilobyte as 1,024 bytes).

A 5-minute video would take up 20,250 megabytes, or about 19.78 gigabytes of memory.
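The calculation is easy to check in Python. The sketch takes bits per pixel as a parameter, so it shows both ways of reading "10 bits": 10 bits for the whole pixel, or 10 bits for each of three color components (30 bits per pixel, as with 10-bit RGB or YCbCr without chroma subsampling). The function names are illustrative:

```python
def uncompressed_bitrate(width, height, bits_per_pixel, fps):
    """Bits per second for raw video: pixels per frame × bits per pixel × frames per second."""
    return width * height * bits_per_pixel * fps

def to_megabytes(bits):
    """Convert bits to megabytes, using 1 KB = 1,024 bytes and 1 MB = 1,024 KB."""
    return bits / 8 / 1024 / 1024

for label, bpp in (("10 bits per pixel", 10), ("10 bits x 3 components", 30)):
    bps = uncompressed_bitrate(1024, 768, bpp, fps=24)
    mb_per_s = to_megabytes(bps)
    gb_per_5_min = mb_per_s * 300 / 1024   # 5 minutes = 300 seconds
    print(f"{label}: {bps:,} bits/s, {mb_per_s} MB/s, {gb_per_5_min:.2f} GB per 5 min")
```

The first line gives 188,743,680 bits/s (22.5 MB/s, about 6.59 GB per 5 minutes); the second gives 566,231,040 bits/s (67.5 MB/s, about 19.78 GB). Either way, raw video is enormous.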

This is why video compression methods are needed and why they appeared. Without compression, storing this amount of information and transmitting it over a network would be impractical: YouTube videos would take forever to download.

Conclusion

This has been a quick introduction to the world of video. Now that we know what video consists of and the basics of how it works, we can move on to more complicated topics, which we’ll still explain in an easy-to-follow way 🙂
