The Deepfake War Has Only Just Begun

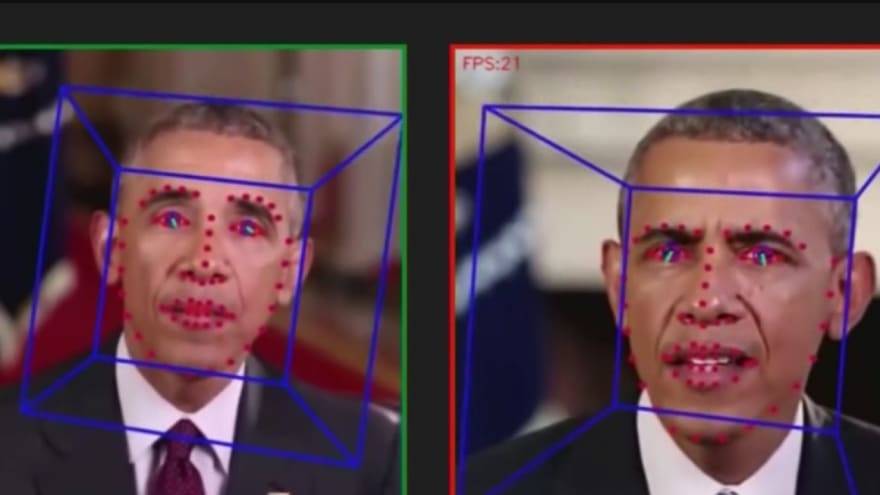

By now, most of us have seen a Deepfake video. Although they are usually presented as novelties, such as an authentic-looking video of Barack Obama saying things he never said (with the help of a convincing Jordan Peele impression), or every member of Full House replaced by an impish Ron Swanson, Deepfake videos have the potential to become powerful, malicious agents of misinformation during upcoming election cycles. While we may consider ourselves savvy enough to see through the facade (we are Dev.to readers, to be fair), others may have more difficulty telling what is real from what is fake. I'm looking at you here, MOTHER.

To protect ourselves from what will inevitably become a weapon in the War Against Information, we have to find ways to blunt the effectiveness of Deepfake technology. To that end, some of the major players in the tech market are beginning to pool their considerable resources to build machine learning systems that detect Deepfakes. In other words, the current plan is to fight fire with fire: to turn one emerging technology against another in the race to beat Deepfakes.

Google, to do its part, is releasing a large dataset of Deepfakes. The dataset currently holds around 3,000 Deepfake videos. The intent is to train machine learning models to recognize the telltale signs of a Deepfake (unnatural body movement, irregular blinking, and so on) so that they can automatically detect and flag Deepfakes. Three thousand videos may seem like a massive number, but it may not be enough to train models that detect Deepfakes with high enough accuracy. To that end, Google has promised to keep adding new videos to the dataset so that the models can reach higher accuracy.
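For the curious, here is a rough idea of what one of those signs, irregular blinking, looks like as a hand-written heuristic. This is a minimal sketch, not how Google's dataset or any production detector works (those train classifiers on labeled videos). It assumes some face-landmark detector has already produced six (x, y) points per eye for every frame, computes the eye aspect ratio (EAR), a common blink-detection measure, and flags a clip whose blink rate is unusually low, a weakness some early Deepfakes were known for. The function names, the 0.2 EAR threshold, the two-frame minimum, and the five-blinks-per-minute cutoff are all illustrative assumptions, not tuned values.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio (EAR) from six landmarks p1..p6.

    Assumed ordering: p1 and p4 are the eye corners; (p2, p6) and (p3, p5)
    are the upper/lower lid pairs. EAR falls toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ears: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = 0
    run = 0
    for ear in ears:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # clip ended mid-blink
        blinks += 1
    return blinks


def blink_rate_is_suspicious(
    ears: Sequence[float],
    fps: float,
    min_blinks_per_minute: float = 5.0,  # hypothetical cutoff, not a tuned value
    threshold: float = 0.2,
    min_frames: int = 2,
) -> bool:
    """Flag a clip whose blink rate is below `min_blinks_per_minute`."""
    minutes = len(ears) / fps / 60.0
    if minutes == 0:
        return False  # nothing to judge
    rate = count_blinks(ears, threshold, min_frames) / minutes
    return rate < min_blinks_per_minute


if __name__ == "__main__":
    fps = 30
    # Synthetic EAR traces (hypothetical data): open eyes sit near 0.3.
    never_blinks = [0.3] * (60 * fps)              # one minute, no blinks
    blinks_often = ([0.3] * 100 + [0.1] * 3) * 15  # 15 blinks in about 51 s
    print(blink_rate_is_suspicious(never_blinks, fps))  # True
    print(blink_rate_is_suspicious(blinks_often, fps))  # False
```

Because EAR drops sharply when the eyelid closes, counting runs of low-EAR frames is a cheap way to count blinks. A learned detector would pick up far subtler cues than this, which is exactly why large labeled datasets like Google's matter, and why a single hand-coded rule like this one is easy for newer Deepfakes to defeat.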

Other companies are jumping into the fray as well. Facebook, already accused of allowing misinformation to spread during the 2016 presidential election, has eagerly partnered with Microsoft and MIT to improve the process of finding Deepfakes. Not only is Facebook building its own library of Deepfakes, pointedly using only "paid, consenting actors," but it is also hosting the Deepfake Detection Challenge, an academic contest aimed at advancing anti-Deepfake technology. (This technology is so new that ethics hasn't caught up yet, but I think Facebook's decision to use only paid actors is a symbolic line in the sand and a step in the right direction toward industry best practices.)

A problem, however, arises immediately. Experts are already decrying the Google dataset as out of date: according to some, Deepfake techniques have already surpassed what the Google and Facebook videos represent. If that's the case, then using these videos to train detection models would be largely moot. This cycle will likely continue until we've exhausted all our resources, a technological race to the deepest levels of "deep learning".

To make matters worse, Deepfake technology will soon work for audio as well. Ironically, much of this audio technology is being pioneered by Facebook AI, the same group that is preparing to fight Deepfake video. It lets a machine learn a person's vocal patterns from recordings of that person, such as speeches, TED Talks, or even recorded conversations. The implications of this technology paired with Deepfake video should send a shiver down all of our spines. (If you thought the CGI Princess Leia and CGI Grand Moff Tarkin in Rogue One were bad... well, you're right. But that kind of stuff is about to get a whole lot worse.)

In short, the white hats are going to have a hard time keeping up with the black hats in the Deepfake arms race. There are simply too many opportunities for exploitation for any Deepfake antivirus to cover, and the technology is advancing rapidly, with few signs of slowing down. Machine learning presents us with so many fantastic opportunities, but Deepfakes may be an unfortunate side effect of embracing these technologies. Perhaps surprisingly, one of the biggest problems Deepfakes introduce is an analog one: the ability to dismiss tangible evidence as fake no matter how overwhelming it is (the Donald Trump/R. Kelly Defense, as it were). When any video might be synthesized, the "Fake News" argument becomes more plausible with every passing day.

https://www.engadget.com/2019/09/25/google-deepfake-database/

https://www.engadget.com/2019/09/05/facebook-microsoft-mit-fight-deepfakes/

https://www.wired.com/story/ai-deepfakes-cant-save-us-duped/

https://www.washingtonpost.com/technology/2019/06/12/top-ai-researchers-race-detect-deepfake-videos-we-are-outgunned/

https://thenextweb.com/facebook/2019/09/06/facebook-wants-to-combat-deepfakes-by-making-its-own/
