While expanding the scope of what developer relations means, I set out to find where AI falls short when writing code, specifically Google Apps Script.

Generating Code

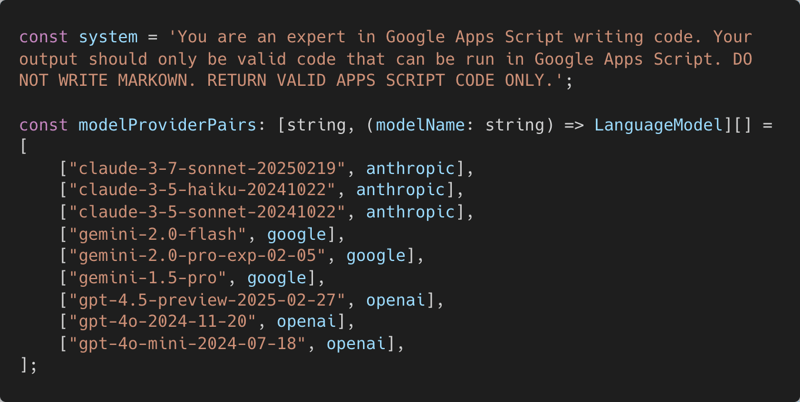

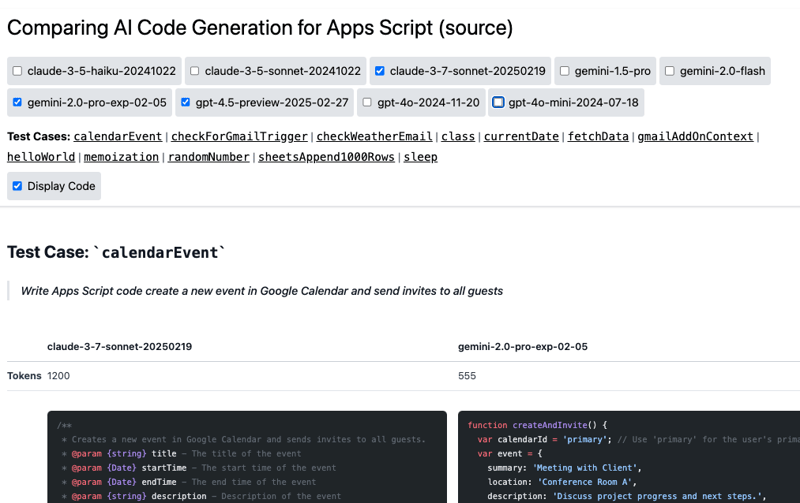

I put together a script to call multiple models with different prompts and a website to show the different outputs.

To simplify things, I used the ai package from npm, maintained by Vercel.

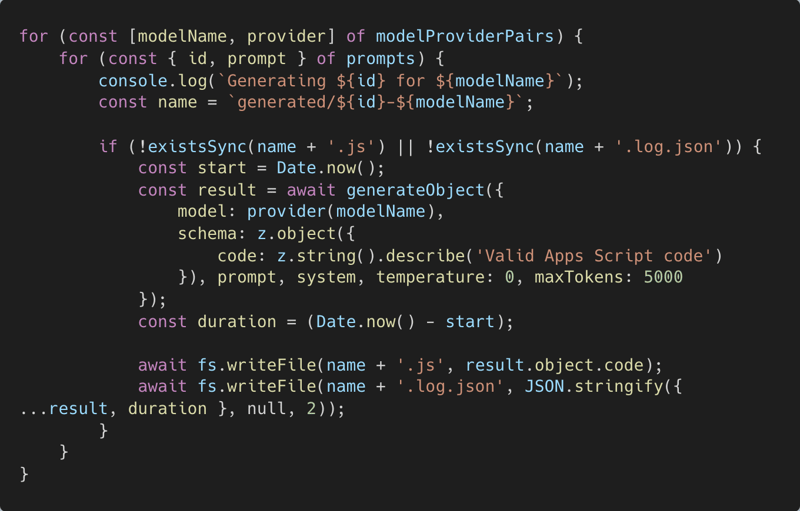

I then iterated through the prompts and models, saving the outputs to local files.

There are some things to note here:

- Requesting an object with a particular schema using Zod.

- A 5000-token limit (I started with 1000, but Claude was verbose).

- Temperature is 0.

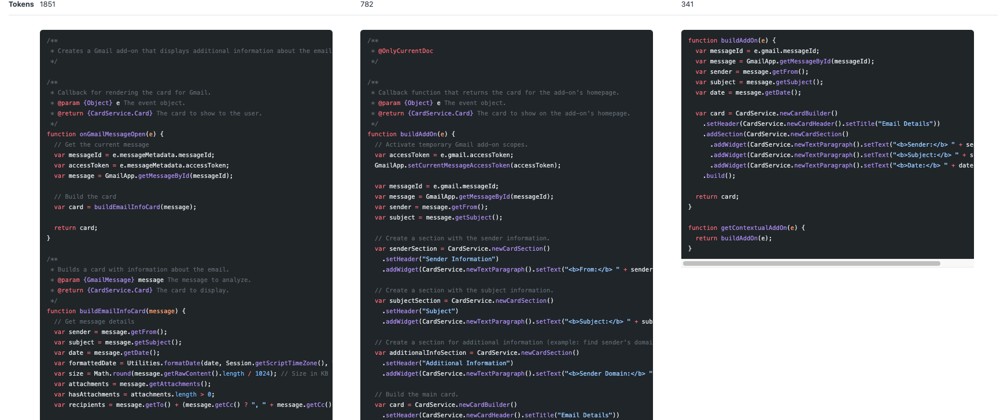
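
For readers who cannot see the screenshots, here is a minimal, dependency-free sketch of the loop described above. It is not the original script: the names `buildJobs`, `runAll`, `SETTINGS`, and the `outputs/` directory layout are all assumptions made for illustration, and the actual model call is left as an injected `generate` function (in the real script this would presumably wrap the ai package's `generateObject` with a Zod schema).

```typescript
// Hypothetical settings object mirroring the notes above:
// a 5000-token limit and a temperature of 0.
interface GenerationSettings {
  maxTokens: number;
  temperature: number;
}

const SETTINGS: GenerationSettings = { maxTokens: 5000, temperature: 0 };

interface Prompt {
  id: string;
  text: string;
}

interface Job {
  model: string;
  prompt: Prompt;
  outputPath: string;
}

// Build one job per (prompt, model) pair, each with its own output file.
// The directory layout is an assumption, not the author's.
function buildJobs(models: string[], prompts: Prompt[], outDir: string = "outputs"): Job[] {
  const jobs: Job[] = [];
  for (const prompt of prompts) {
    for (const model of models) {
      jobs.push({ model, prompt, outputPath: `${outDir}/${prompt.id}/${model}.json` });
    }
  }
  return jobs;
}

// The model call and the file write are injected so the loop itself
// has no dependency on a provider SDK or on the file system.
type Generate = (job: Job, settings: GenerationSettings) => Promise<unknown>;
type Save = (path: string, contents: string) => Promise<void>;

async function runAll(jobs: Job[], generate: Generate, save: Save): Promise<void> {
  // Run sequentially; each result is serialized as pretty-printed JSON.
  for (const job of jobs) {
    const result = await generate(job, SETTINGS);
    await save(job.outputPath, JSON.stringify(result, null, 2));
  }
}
```

Keeping the model call behind a function parameter means the same loop works for every provider, and the settings stay identical across models so the outputs are comparable.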

Visualizing Results

I wanted a better way to see the differences side by side, so I built a web app with Svelte: https://apps-script-ai-testing.jpoehnelt.dev (best viewed on desktop).

Editorial Impressions

Here are a few takeaways from this analysis:

- None of the models used CacheService for memoization.

- claude-3-7-sonnet is very verbose.

- Only gemini 1.5 pro tried to use an Editor add-on in Gmail (wrong); all other models used CardService (correct).

- All of them failed to do a robust UrlFetchApp GET of example.com. They all let the non-200 error be caught and then raised again (this usually hides the actual root cause).

- gpt-4o-mini decided to sleep with a while loop.
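
To make two of these takeaways concrete, here is a rough sketch of what a more robust fetch with caching could look like. It is one possible approach, not a reference implementation. The helper names `fetchText` and `cachedFetchText` and the `...Like` interfaces are hypothetical; the interfaces only describe the small subset of `UrlFetchApp` and the Apps Script cache that the sketch relies on, so the code can be type-checked and exercised outside Apps Script.

```typescript
// Minimal shapes of the Apps Script objects used below (assumed subset).
interface HttpResponseLike {
  getResponseCode(): number;
  getContentText(): string;
}

interface FetcherLike {
  fetch(url: string, params: { muteHttpExceptions: boolean }): HttpResponseLike;
}

interface CacheLike {
  get(key: string): string | null;
  put(key: string, value: string, expirationInSeconds: number): void;
}

// GET a URL and return the body, failing with the status code in the message.
function fetchText(fetcher: FetcherLike, url: string): string {
  // With muteHttpExceptions, non-2xx responses are returned instead of thrown,
  // so the status code can be inspected directly.
  const response = fetcher.fetch(url, { muteHttpExceptions: true });
  const code = response.getResponseCode();
  if (code < 200 || code >= 300) {
    // Include the first 200 characters of the body to keep the root cause visible.
    const body = response.getContentText().slice(0, 200);
    throw new Error(`GET ${url} failed with HTTP ${code}: ${body}`);
  }
  return response.getContentText();
}

// Memoize the response body in a cache, keyed by URL.
function cachedFetchText(
  cache: CacheLike,
  fetcher: FetcherLike,
  url: string,
  ttlSeconds: number = 600
): string {
  const hit = cache.get(url);
  if (hit !== null) {
    return hit;
  }
  const text = fetchText(fetcher, url);
  cache.put(url, text, ttlSeconds);
  return text;
}
```

In an Apps Script project, the call would look something like `cachedFetchText(CacheService.getScriptCache(), UrlFetchApp, "https://example.com")`. Note that `muteHttpExceptions` only covers HTTP status failures; network-level errors such as DNS failures still throw. As for pausing, `Utilities.sleep(milliseconds)` is the built-in alternative to a busy-wait while loop.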

Why do this?

As I mentioned earlier, I believe developer relations and the definition of a developer are evolving quickly. The term "vibe coding" captures this phenomenon by shifting the focus from writing code to expressing intent. Either way, as a member of a developer relations team, my goal is to reduce the friction between intent and implementation.

Next Steps

- Explore creating rules files for LLMs: apps-script.(txt|md).

- Improve documentation for my editorial takes, e.g. make sure UrlFetchApp TypeScript types and reference docs are complete.
