🚀 What I Built (and What it Does)

Riffr helps you make awesome, awesome montages!

Okay, that's an oversimplification. Let me clarify how.

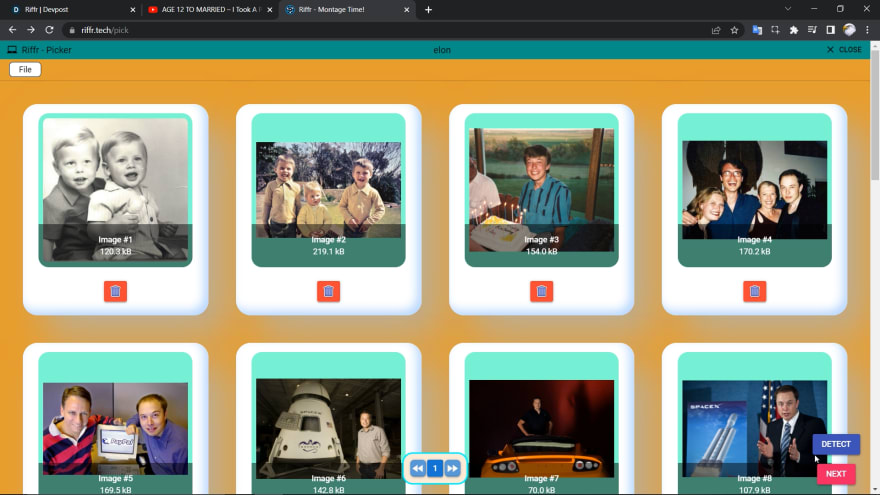

- You can choose up to 120 images (nice, right?) and run face detection models on them.

- Photos with detected faces are then positioned around a specific face, and you set the zoom level and a frame rate, that is, how fast the images go by.

- When you're done, the tool compiles these cached images and their configs and wraps it all up in a neat little webm or gif media file.

Take this gif, for example. (in-app link)

It uses 26 images, cycled at 9 frames per second and positioned around a specific face (Elon's, in this case).
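To make those numbers concrete, here is a minimal sketch of the arithmetic involved. The function names and the face-box shape (`x`, `y`, `width`, `height` in source-image pixels) are assumptions for illustration, not Riffr's actual code.

```javascript
// Hypothetical helpers: names and the face-box shape are assumptions.

// Total playback time in seconds for a montage.
function montageDuration(imageCount, fps) {
  if (fps <= 0) throw new RangeError("fps must be positive");
  return imageCount / fps;
}

// Compute where to draw an image so that the chosen face lands in the
// center of the output canvas at a given zoom level.
// face: { x, y, width, height } in source-image pixels (top-left origin).
function centerOnFace(face, canvas, zoom = 1) {
  const faceCenterX = face.x + face.width / 2;
  const faceCenterY = face.y + face.height / 2;
  return {
    scale: zoom,
    // Offsets usable as dx, dy in
    // ctx.drawImage(img, dx, dy, img.width * zoom, img.height * zoom)
    dx: canvas.width / 2 - faceCenterX * zoom,
    dy: canvas.height / 2 - faceCenterY * zoom,
  };
}

console.log(montageDuration(26, 9).toFixed(2)); // "2.89"
```

So the example gif, with 26 images at 9 frames per second, plays for just under 3 seconds.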

🐱👤 Category Submission:

Choose Your Own Adventure

🐱🏍 App Link

😏 Background: Some Context

(What made you decide to build this particular app? What inspired you?)

Time for a trip down memory lane.

Remember this 🔽 video from wayyy back, in 2017?

For a long time, I had been captivated by the dedicated YouTuber Hugo Cornellier, who took a photo of himself every day for 9 years and ended up making a time-lapse of over 2500 photos, each aligned perfectly around himself. This video spoke to me, as it probably did to you. It showed a person's journey - of growth, of being a kid, of going through phases, and finally, finding love.

However, it turns out that putting this together was a painstakingly slow and difficult process: aligning every image along a specific face, resizing the images, and compiling them into a meaningful video. It took Hugo 50+ hours just to make this!

Being inspired by his pain, I decided to lend a hand and put out a tool that can automate all this configuration, detect and cycle between faces, and generate a video with it all!

Psst...With this app, a user doesn't even have to click photos repeatedly at the same place or in the same position - the ML model adjusts all sorts of photos together 🤭

Sounds easy enough to implement, right?

Spoiler Alert: It was not easy at all.

🛠 How I Built It

(How did you utilize MongoDB Atlas? Did you learn something new along the way? Pick up a new skill?)

Since my first implementation (near the beginning of the Atlas hackathon) was focused on preserving user privacy, I went for a pre-trained ML model loaded right into the browser, and compiled the output using the native MediaRecorder Web API. Additionally, I learned how to cache images in the browser using Web Workers and draw them blazing-fast onto the canvas as ImageBitmap objects.

Note - For more details, check out my write-up on that earlier implementation that I published on Devpost (here) & submitted to another hackathon.

Nevertheless, the limitations caught up. Various bug fixes ensured good quality (using the VP8 media codec) and speed, but on mobile devices it was sometimes just too much: phones would freeze under the large memory & computing requirements and start heating up!
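For readers curious what in-browser compilation with MediaRecorder and VP8 can look like, here is a rough sketch. It is not Riffr's actual code: `pickMimeType`, `recordCanvas` and the `drawFrames` callback are hypothetical names, and only standard browser APIs (`MediaRecorder.isTypeSupported`, `canvas.captureStream`, `Blob`) are assumed.

```javascript
// Pick the first container/codec string the browser can record.
// `isSupported` defaults to the real MediaRecorder.isTypeSupported check.
function pickMimeType(isSupported = (t) => MediaRecorder.isTypeSupported(t)) {
  const candidates = ["video/webm;codecs=vp8", "video/webm"];
  return candidates.find((t) => isSupported(t)) ?? null;
}

// Record whatever is drawn on `canvas` and resolve with a webm Blob.
// `drawFrames` is a hypothetical async callback that paints each image in turn.
async function recordCanvas(canvas, fps, drawFrames) {
  const mimeType = pickMimeType();
  if (!mimeType) throw new Error("No supported webm recording format");

  const stream = canvas.captureStream(fps);
  const recorder = new MediaRecorder(stream, { mimeType });
  const chunks = [];
  recorder.ondataavailable = (e) => {
    if (e.data.size > 0) chunks.push(e.data);
  };
  const stopped = new Promise((resolve) => {
    recorder.onstop = resolve;
  });

  recorder.start();
  await drawFrames(); // paint frames while the recorder is running
  recorder.stop();
  await stopped; // wait until the final chunk has been delivered

  return new Blob(chunks, { type: mimeType });
}
```

Recording runs in real time, so the whole canvas pipeline has to keep up on the device, which is one way the memory and compute pressure on phones shows up.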

After a while, I decided to overcome this by using the power of Tensorflow-Node in a custom Node.js backend, one that I could fully manage and that lets users share, upload and detect without worrying about their phones. The compilation is still done on the front end, though, in both gif & webm formats, since it is easier there. Even though this might seem like a privacy-performance trade-off, I have structured the application with MongoDB in such a way that it's really easy to maintain privacy.

Plus, now it's as easy as toggling a button to switch between in-browser and on-server ML detection!
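One way such a toggle could be wired up is a small dispatcher that picks a detector per call. This is only a sketch: `createDetector` and both injected detector functions are hypothetical placeholders, not the app's real API.

```javascript
// Hypothetical dispatcher: both detector functions are injected placeholders.
// inBrowser(image) would run the in-browser model,
// onServer(image) would upload the image and await the server's result.
function createDetector({ inBrowser, onServer }) {
  return async function detectFaces(image, { useServer = false } = {}) {
    const detect = useServer ? onServer : inBrowser;
    return detect(image);
  };
}
```

The rest of the editor then only calls `detectFaces(image, { useServer })` and never needs to know where detection actually ran.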

Lastly, the VueUse library made it so much easier to tackle memory heap allocation debugging, QR code generation, color picking, web workers, etc.

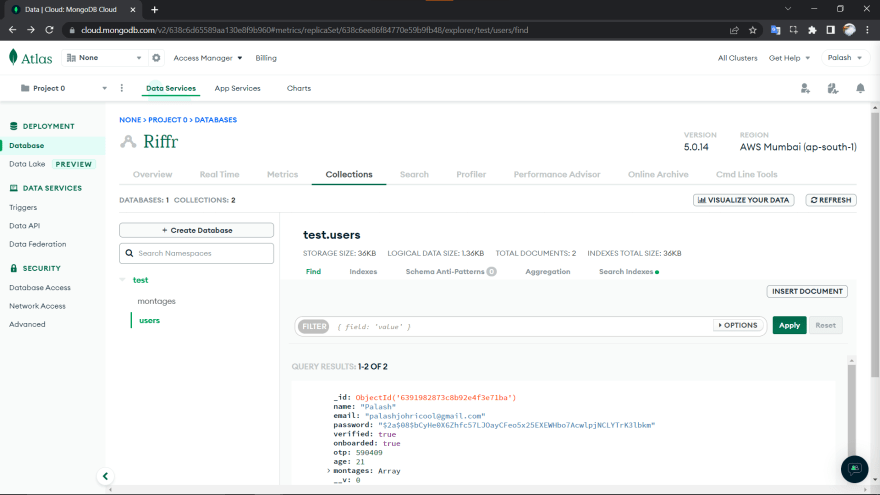

💪 Using MongoDB Atlas

I learned the Mongoose ODM to integrate with Atlas, which serves as the back-end database (with possible future search functionality) and holds 2 collections, Montages & Users.

The server uses the nanoid module to generate unique, secret URLs that are inherently public but as secure & private as a UUID, and stores them in both the database and the storage solution. This way, fetching is easier, data can be aggregated in one place or distributed accordingly, and indexes for search purposes can be generated, too!
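The idea of an unguessable link can be sketched without any dependencies. Note the swap: this uses Node's built-in `crypto` as a stand-in for nanoid, and the record shape (`owner`, `slug`, `fps`, `url`) and the `/view/` path are assumptions for illustration, not the actual Montages schema.

```javascript
const { randomBytes } = require("node:crypto");

// Stand-in for nanoid: 16 random bytes (128 bits, the size of a UUID)
// encoded as a 22-character URL-safe string.
function makeSecretSlug() {
  return randomBytes(16).toString("base64url");
}

// Hypothetical shape of a montage record; field names are assumptions.
function newMontageRecord(ownerId, fps) {
  const slug = makeSecretSlug();
  return {
    owner: ownerId,
    slug,
    fps,
    url: `/view/${slug}`, // anyone with the link can view, but it is hard to guess
    createdAt: new Date(),
  };
}
```

Because the same slug can name both the database document and the stored file, a single lookup key is enough to fetch either.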

📸 Screenshots & Details

Signup/Signin & Onboarding Processes

| Landing Page with Signup/Signin | Onboarding Intro |
| --- | --- |
| Onboarding Avatar & Email Verification | Onboarding Details |

Pick, Edit & Compile

| User Aggregated Dashboard | Add your pictures, choose output & model |
| --- | --- |
| Select the right faces, frame rate, zoom, background | Compile, and Voila! Your montage is ready |

Viewer Page (with Publicly Accessible Links)

👨💻 Tech Stack

Basically a MEVN stack web application, plus a large number of additional APIs.

- Front End - Quasar Framework built on top of Vue 3.

- Back End - Node.js, Express.js & MongoDB Atlas

- Important APIs - ml5 for in-browser detection, face-api that uses tensorflow-node to accelerate on-server detection, VueUse for a bunch of useful component tools like the QR Code generator, Yahoo's Gifshot for creating gif files in-browser, etc.

- Storage Vendor - Google Cloud Storage

- Hosting - Azure Virtual Machine for the Express server, and Vercel for the Quasar build.

- Transactional - Mailgun to send verification emails

🔗 Link to Source Code

holy-script / riffr


Riffr is a great tool to literally 'riff' through a bunch of images and create photo montages centered around a face

Riffr - Montage Time! (riffr)

Riffr helps you literally 'riff' through images, centering them around a face and creating a photo montage.

A bit more detail about Riffr

You can choose up to 120 images (nice, right?) and run the detection on them; then you edit the photos with detected faces and size them up accordingly. You also choose a frame rate, that is, how fast the images go by, and you can play and pause the editor to preview the result with those settings. When you're done, the tool compiles these cached images and their configs and wraps it all up in a neat little video.

The final product will be something like this

Do you want to spin this up locally and experiment with it?

Installation Guide

Install the dependencies

yarn

# or…

📃 Permissive License

MIT License

🤫 Additional Resources/Info

Riffr's original submission page (on Devpost) that won 1st spot 🏆 in an MLH Weekend Hackathon.
