are built around it.

This time we continue examining how slab memory is allocated.

First, we look at the two arguments for slabs_init that were passed over in the previous article. The first one is settings.maxbytes, which limits the overall memory that the memcached instance can use. In slabs_init, the value of settings.maxbytes is assigned to the global variable mem_limit, which will be used very soon.

void slabs_init(const size_t limit, const double factor, const bool prealloc, const uint32_t *slab_sizes) {

...

mem_limit = limit; // scr: here

...

...

settings.maxbytes = 64 * 1024 * 1024; /* default is 64MB */

...

case 'm':

settings.maxbytes = ((size_t)atoi(optarg)) * 1024 * 1024;

break;

...

static size_t mem_limit = 0;
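The -m value is given in megabytes and scaled to bytes before it reaches slabs_init. The conversion can be sketched as follows, assuming a hypothetical helper parse_megabytes that is not part of memcached and that uses strtoull rather than atoi so that malformed input is rejected:

```c
#include <errno.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical helper, not part of memcached: convert a "-m"-style
 * megabyte string into bytes. Returns 0 on success, -1 on bad input. */
static int parse_megabytes(const char *arg, size_t *out_bytes) {
    char *end = NULL;
    errno = 0;
    unsigned long long mb = strtoull(arg, &end, 10);
    if (errno != 0 || end == arg || *end != '\0') {
        return -1;                      /* not a clean decimal number */
    }
    if (mb > SIZE_MAX / (1024ULL * 1024ULL)) {
        return -1;                      /* mb * 1 MB would overflow size_t */
    }
    *out_bytes = (size_t)mb * 1024 * 1024;
    return 0;
}
```

Calling parse_megabytes("64", &bytes) yields the same 64 * 1024 * 1024 bytes as the default shown above.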

The other argument is preallocate. It determines whether to preallocate a slab for each slab class. This argument is toggled with the -L command-line argument.

...

bool preallocate = false;

...

case 'L' :

if (enable_large_pages() == 0) {

preallocate = true;

} else {

fprintf(stderr, "Cannot enable large pages on this system\n"

"(There is no Linux support as of this version)\n");

return 1;

}

break;

...

Next, we look at the method that allocates slab memory.

New slab

do_slabs_newslab

More specifically, this method allocates one 1M slab for the slab class indicated by the parameter id.

static int do_slabs_newslab(const unsigned int id) {

slabclass_t *p = &slabclass[id]; // scr: ----------------------------> 1)

slabclass_t *g = &slabclass[SLAB_GLOBAL_PAGE_POOL]; // scr: ---------> *)

int len = settings.slab_reassign ? settings.item_size_max // scr: ---> 2)

: p->size * p->perslab;

char *ptr;

if ((mem_limit && mem_malloced + len > mem_limit && p->slabs > 0 // -> 3)

&& g->slabs == 0)) {

mem_limit_reached = true;

MEMCACHED_SLABS_SLABCLASS_ALLOCATE_FAILED(id);

return 0;

}

if ((grow_slab_list(id) == 0) || // scr: ----------------------------> 4)

(((ptr = get_page_from_global_pool()) == NULL) && // scr: -------> *)

((ptr = memory_allocate((size_t)len)) == 0))) { // scr: ---------> 5)

MEMCACHED_SLABS_SLABCLASS_ALLOCATE_FAILED(id);

return 0;

}

memset(ptr, 0, (size_t)len);

split_slab_page_into_freelist(ptr, id); // scr: ---------------------> 6)

p->slab_list[p->slabs++] = ptr; // scr: -----------------------------> 7)

MEMCACHED_SLABS_SLABCLASS_ALLOCATE(id);

return 1;

}

1) slabclass[id] is one of the slab classes, whose initialization was discussed in the last article.

2) settings.slab_reassign determines whether to enable a rebalancing mechanism, which recycles unused slabs and redistributes them across slab classes. This requires the slabs in all slab classes to be the same size, so this setting also decides whether to use uniform (i.e., settings.item_size_max, or 1M as mentioned before) or heterogeneous (i.e., p->size * p->perslab) slabs. Besides its associated command-line argument "slab_reassign", the value can be controlled by another argument, "modern". Given the positivity that the name "modern" implies, 1M will be used throughout the text.

...

settings.slab_reassign = false;

...

case SLAB_REASSIGN:

settings.slab_reassign = true;

break;

...

case MODERN:

/* Modernized defaults. Need to add equivalent no_* flags

* before making truly default. */

settings.slab_reassign = true;

settings.slab_automove = 1;

...

break;

N.b., the lines marked *) belong to the rebalancing mechanism, which will be discussed later when we have a better understanding of the LRU module.
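The choice of slab length in step 2 can be sketched in isolation. The struct toy_class and the function toy_slab_len below are simplified stand-ins invented for this example; only the ternary itself comes from do_slabs_newslab:

```c
#include <stdbool.h>
#include <stddef.h>

/* Simplified stand-in for the slabclass_t fields used in step 2. */
struct toy_class {
    size_t size;     /* bytes per item chunk */
    size_t perslab;  /* chunks that fit in one slab */
};

/* Mirrors the ternary in do_slabs_newslab: uniform pages when
 * reassignment is on, a class-specific length otherwise. */
static size_t toy_slab_len(bool slab_reassign, size_t item_size_max,
                           const struct toy_class *p) {
    return slab_reassign ? item_size_max : p->size * p->perslab;
}
```

For a class with 96-byte chunks and, as an assumption, perslab = 1048576 / 96 = 10922, the heterogeneous length is 1048512 bytes, slightly under the uniform 1048576.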

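3) Check whether allocating this slab would exceed the upper limit, mem_limit. The allocation is refused only when the slab class already owns at least one slab (p->slabs > 0) and the global page pool is empty (g->slabs == 0).

4) grow_slab_list checks whether slabclass_t.slab_list is full and, if so, grows it: the capacity starts at 16 entries and doubles each time.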
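The guard in step 3 combines four conditions, which is easier to see as a standalone predicate. This is a sketch only: toy_limit_reached is a hypothetical name, and the values memcached reads from globals are passed in as parameters here.

```c
#include <stdbool.h>
#include <stddef.h>

/* Toy version of the guard in step 3; all inputs are passed explicitly
 * instead of being read from memcached's globals. */
static bool toy_limit_reached(size_t mem_limit, size_t mem_malloced,
                              size_t len, unsigned int class_slabs,
                              unsigned int pool_slabs) {
    return mem_limit != 0                  /* a limit of 0 disables the check */
        && mem_malloced + len > mem_limit  /* this slab would not fit */
        && class_slabs > 0                 /* class already owns a slab */
        && pool_slabs == 0;                /* nothing to reuse from the pool */
}
```

Note that a class with no slabs yet passes the guard even when the limit is already reached, so every class can obtain its first slab.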

static int grow_slab_list (const unsigned int id) {

slabclass_t *p = &slabclass[id];

if (p->slabs == p->list_size) {

size_t new_size = (p->list_size != 0) ? p->list_size * 2 : 16;

void *new_list = realloc(p->slab_list, new_size * sizeof(void *));

if (new_list == 0) return 0;

p->list_size = new_size;

p->slab_list = new_list;

}

return 1;

}
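To see the doubling policy in action, here is a minimal sketch with a toy structure. The names toy_list, toy_grow and toy_append are invented for this example; the growth logic follows grow_slab_list, and the append mirrors step 7.

```c
#include <stddef.h>
#include <stdlib.h>

/* Toy slab list: same doubling policy as grow_slab_list. */
struct toy_list {
    void **slab_list;   /* array of slab pointers */
    size_t list_size;   /* capacity of slab_list */
    size_t slabs;       /* entries in use */
};

/* Returns 1 on success, 0 if realloc fails (list left untouched). */
static int toy_grow(struct toy_list *p) {
    if (p->slabs == p->list_size) {
        size_t new_size = (p->list_size != 0) ? p->list_size * 2 : 16;
        void *new_list = realloc(p->slab_list, new_size * sizeof(void *));
        if (new_list == NULL) return 0;
        p->list_size = new_size;
        p->slab_list = new_list;
    }
    return 1;
}

/* Append one slab pointer, growing the array first if it is full. */
static int toy_append(struct toy_list *p, void *slab) {
    if (!toy_grow(p)) return 0;
    p->slab_list[p->slabs++] = slab;
    return 1;
}
```

Starting from an empty list, the first append sets the capacity to 16, and the 17th append doubles it to 32.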

5) memory_allocate allocates the actual memory for the slab. As discussed, here the value of len is 1M.

static void *memory_allocate(size_t size) {

void *ret;

if (mem_base == NULL) {

/* We are not using a preallocated large memory chunk */

ret = malloc(size);

} else { // scr: when preallocate is set to true

...

6) split_slab_page_into_freelist initializes (frees) the newly allocated slab to prepare it for storing objects. This method will be discussed in the next section.

7) Add the newly allocated slab to slabclass_t.slab_list.

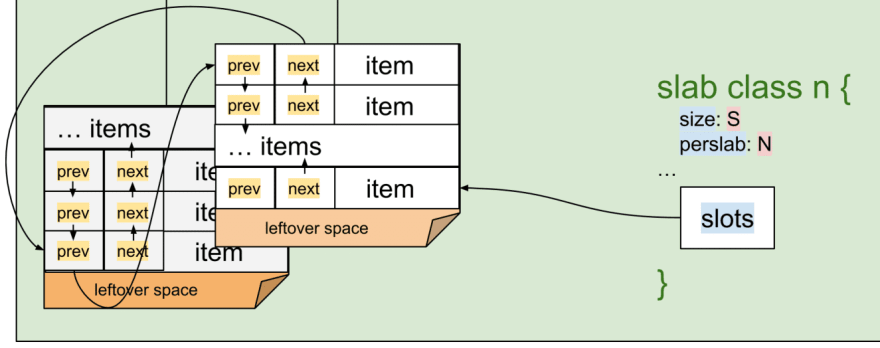

What has happened so far can be summarized with the following figure, (we assume do_slabs_newslab(n) is called two times)

Now we look inside the 1M slab in step 6).

split_slab_page_into_freelist

static void split_slab_page_into_freelist(char *ptr, const unsigned int id) {

slabclass_t *p = &slabclass[id];

int x;

for (x = 0; x < p->perslab; x++) {

do_slabs_free(ptr, 0, id);

ptr += p->size;

}

}

This method goes through all the _item chunks_ (each of size slabclass_t.size) within a slab and, for each of them, initializes its metadata by calling do_slabs_free. Another way to interpret this process is “split a slab into an item free list”. As you might have already figured out, this “free list” will be used by item allocation in the future.
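The pointer arithmetic behind the split can be sketched on its own. In this hypothetical toy_split, the call to do_slabs_free is replaced by recording each chunk's start address, so only the stepping through the page is shown:

```c
#include <stddef.h>

/* Toy split: record where each chunk starts instead of building a
 * free list. Returns the number of chunk pointers written to out. */
static size_t toy_split(char *page, size_t chunk_size, size_t perslab,
                        char **out) {
    size_t x;
    for (x = 0; x < perslab; x++) {
        out[x] = page;        /* chunk x starts here */
        page += chunk_size;   /* advance by one chunk, like ptr += p->size */
    }
    return perslab;
}
```

With a 1024-byte page and 256-byte chunks, the four chunks start at offsets 0, 256, 512 and 768.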

do_slabs_free

static void do_slabs_free(void *ptr, const size_t size, unsigned int id) {

slabclass_t *p;

item *it;

...

p = &slabclass[id];

it = (item *)ptr;

it->it_flags = ITEM_SLABBED; // scr: ---------------> 1)

it->slabs_clsid = 0;

it->prev = 0; // scr: ------------------------------> 2)

it->next = p->slots;

if (it->next) it->next->prev = it;

p->slots = it;

p->sl_curr++; // scr: ------------------------------> 3)

p->requested -= size;

return;

}

This method works on the item metadata stored at the beginning of an item chunk.

typedef struct _stritem {

/* Protected by LRU locks */

struct _stritem *next;

struct _stritem *prev;

...

uint8_t it_flags; /* ITEM_* above */

uint8_t slabs_clsid;/* which slab class we're in */

...

} item;

1) Initialize some fields. item is another core data structure; we will come back to it later.

2) Add the item to the front of the linked list (a.k.a. the free list) and update the list head, slabclass_t.slots.

3) Update the count of available (free list) slots, slabclass_t.sl_curr, and update slabclass_t.requested for statistics. Note that here we are not actually releasing an item, so the passed size is 0.
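The list manipulation in steps 2) and 3) can be tried out with a stripped-down model. The types toy_item and toy_freelist and the function toy_free are invented for this sketch; they keep only the next/prev links and the slot counter, and omit the flags and statistics.

```c
#include <stddef.h>

/* Toy header placed at the start of each chunk (stand-in for item). */
struct toy_item {
    struct toy_item *next;
    struct toy_item *prev;
};

struct toy_freelist {
    struct toy_item *slots;   /* head of the free list */
    unsigned int sl_curr;     /* number of free chunks */
};

/* Push a chunk on the front of the list, as do_slabs_free does. */
static void toy_free(struct toy_freelist *p, void *ptr) {
    struct toy_item *it = (struct toy_item *)ptr;
    it->prev = NULL;
    it->next = p->slots;
    if (it->next) it->next->prev = it;
    p->slots = it;
    p->sl_curr++;
}
```

Because each chunk is pushed at the front, the chunk freed last ends up as the list head, so the free list holds the chunks of a slab in reverse address order.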

Slab preallocate

Next we look at how do_slabs_newslab is used. One place it gets called from is the slabs_init discussed above, when preallocate is set to true:

void slabs_init(const size_t limit, const double factor, const bool prealloc, const uint32_t *slab_sizes) {

...

if (prealloc) {

slabs_preallocate(power_largest);

}

}

static void slabs_preallocate (const unsigned int maxslabs) {

int i;

unsigned int prealloc = 0;

/* pre-allocate a 1MB slab in every size class so people don't get

confused by non-intuitive "SERVER_ERROR out of memory"

messages. this is the most common question on the mailing

list. if you really don't want this, you can rebuild without

these three lines. */

for (i = POWER_SMALLEST /* scr: 1 */; i < MAX_NUMBER_OF_SLAB_CLASSES; i++) {

if (++prealloc > maxslabs)

return;

if (do_slabs_newslab(i) == 0) {

fprintf(stderr, "Error while preallocating slab memory!\n"

"If using -L or other prealloc options, max memory must be "

"at least %d megabytes.\n", power_largest);

exit(1);

}

}

}

This method simply goes through slabclass starting from POWER_SMALLEST, i.e., the 1st entry, and allocates one slab for each class, stopping once maxslabs classes have been handled. Note that the 0th entry is a special slab class used by the rebalancing mechanism mentioned above.
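The loop's stopping rule, and the reason the error message asks for a minimum amount of memory, can be illustrated with a small model. Everything prefixed with toy_ or TOY_ is hypothetical, including the class count of 64, which is assumed for this sketch only; the real allocation is replaced by adding up one page per class.

```c
#include <stddef.h>

#define TOY_POWER_SMALLEST 1
#define TOY_MAX_CLASSES 64   /* assumed value for this sketch only */

/* Count how many classes the preallocation loop would touch and how
 * many bytes that costs at page_size bytes per class. */
static unsigned int toy_preallocate(unsigned int maxslabs, size_t page_size,
                                    size_t *bytes_needed) {
    unsigned int prealloc = 0;
    int i;
    *bytes_needed = 0;
    for (i = TOY_POWER_SMALLEST; i < TOY_MAX_CLASSES; i++) {
        if (++prealloc > maxslabs)
            return prealloc - 1;       /* stopped by the maxslabs cap */
        *bytes_needed += page_size;    /* one slab per class */
    }
    return prealloc;
}
```

With 42 classes and 1M pages, for example, the model reports that 42M is needed, which is why the memory limit must be at least as many megabytes as there are slab classes.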

#define POWER_SMALLEST 1

#define POWER_LARGEST 256 /* actual cap is 255 */

#define SLAB_GLOBAL_PAGE_POOL 0 /* magic slab class for storing pages for reassignment */

References

Same as in the last article.
