Concurrency means that a program makes progress on multiple tasks during overlapping time periods. The tasks need not run at the same instant; that stricter case is parallelism. There are three main ways to introduce concurrency in Python: multithreading, multiprocessing and asyncio. Each approach has its advantages and disadvantages.

Choosing the right concurrency model for your Python program depends on your specific requirements and use cases. This guide gives an overview of all three approaches, with code examples, to help you decide when to use multithreading, multiprocessing or asyncio in Python.

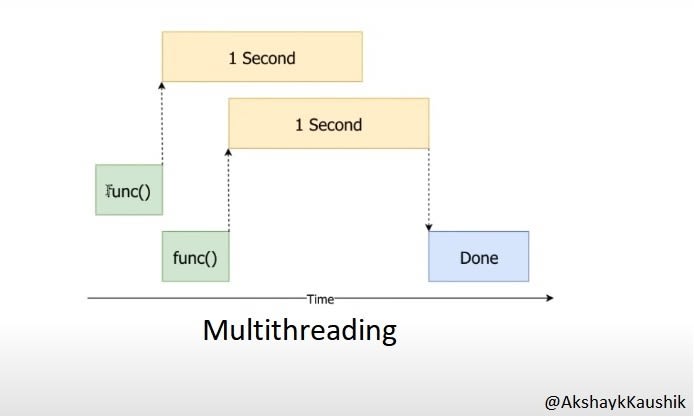

Multithreading in Python

Multithreading refers to concurrently executing multiple threads within a single process. Python's threading module allows you to spawn multiple threads in your program.

Here is an example that illustrates multithreading in Python:

    import threading


    def print_cube(num):
        """Print the cube of the given number."""
        print("Cube: {}".format(num * num * num))


    def print_square(num):
        """Print the square of the given number."""
        print("Square: {}".format(num * num))


    if __name__ == "__main__":
        # create the threads
        t1 = threading.Thread(target=print_square, args=(10,))
        t2 = threading.Thread(target=print_cube, args=(10,))

        # start both threads
        t1.start()
        t2.start()

        # wait until both threads have finished
        t1.join()
        t2.join()

        print("Done!")

In the above code, we create two threads, t1 and t2. t1 calls the print_square() function while t2 calls print_cube().

We start both threads using the start() method. Calling join() on each thread makes the main thread wait for it to complete before continuing.

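Threads are lightweight: they all share the memory and other resources of their process, and switching between threads is cheaper than switching between processes. In CPython, however, the GIL lets only one thread execute Python bytecode at a time, so the main benefit of multithreading is overlapping waits: while one thread is blocked on I/O, another can run.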
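To make the effect of concurrent threads visible, here is a minimal sketch that compares running a few slow calls one after another with running them in a thread pool. The function fake_download() is a hypothetical stand-in for a network call (it only sleeps), and the names run_sequential() and run_threaded() are made up for this illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(name, delay=0.2):
    """Hypothetical stand-in for a network call: sleeps, then returns."""
    time.sleep(delay)  # sleeping releases the GIL, much like real blocking I/O
    return f"{name} done"


def run_sequential(names):
    # Each call waits for the previous one to finish.
    return [fake_download(name) for name in names]


def run_threaded(names):
    # One worker thread per item, so the waits overlap.
    # Executor.map() returns results in input order.
    with ThreadPoolExecutor(max_workers=len(names)) as pool:
        return list(pool.map(fake_download, names))


if __name__ == "__main__":
    names = ["a", "b", "c", "d"]
    for runner in (run_sequential, run_threaded):
        start = time.perf_counter()
        results = runner(names)
        elapsed = time.perf_counter() - start
        print(f"{runner.__name__}: {results} in {elapsed:.2f}s")
```

The threaded run should take roughly as long as one call rather than the sum of all calls, because the threads spend their time waiting rather than computing.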

However, multithreading also comes with challenges like race conditions, deadlocks etc. when multiple threads try to access shared resources. Careful synchronization is needed to avoid these issues.
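As an illustration of the synchronization mentioned above, the following sketch protects a shared counter with threading.Lock. The Counter class and the worker() and run() helpers are hypothetical names chosen for this example; without the lock, the read-modify-write in increment() could interleave between threads and lose updates.

```python
import threading


class Counter:
    """Shared counter guarded by a lock (illustrative helper, not a library class)."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Only one thread at a time may execute the read-modify-write below.
        with self._lock:
            self.value += 1


def worker(counter, n):
    for _ in range(n):
        counter.increment()


def run(num_threads=4, n=10_000):
    counter = Counter()
    threads = [
        threading.Thread(target=worker, args=(counter, n))
        for _ in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(run())  # 4 threads x 10,000 increments each
```

Using the lock as a context manager (with self._lock) releases it even if the body raises, which helps avoid one common source of deadlocks.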

Multiprocessing in Python

Multiprocessing refers to executing multiple processes concurrently. The multiprocessing module in Python allows spawning processes using an API similar to threading.

Here is an example:

    import multiprocessing


    def print_cube(num):
        """Print the cube of the given number."""
        print("Cube: {}".format(num * num * num))


    def print_square(num):
        """Print the square of the given number."""
        print("Square: {}".format(num * num))


    if __name__ == "__main__":
        # create the processes
        p1 = multiprocessing.Process(target=print_square, args=(10,))
        p2 = multiprocessing.Process(target=print_cube, args=(10,))

        # start both processes
        p1.start()
        p2.start()

        # wait until both processes have finished
        p1.join()
        p2.join()

        print("Done!")

Here, we create two processes p1 and p2 to execute the print_square() and print_cube() functions concurrently.

The main difference between multithreading and multiprocessing is that threads run within a single process while processes run independently in separate memory spaces.

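Multiprocessing sidesteps the GIL because each process runs its own interpreter with its own GIL, which allows full utilization of multicore CPUs. However, processes have higher memory and startup overhead than threads. Interprocess communication is also more involved than thread synchronization: data is not shared by default, so it has to be passed through queues, pipes or explicitly shared memory.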
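The following sketch shows one way processes can hand results back to the parent, using multiprocessing.Queue. The functions chunk_sum(), worker() and parallel_sum_of_squares() are hypothetical names for this illustration; the work is split into ranges, each process computes a partial sum, and the parent adds the partial results.

```python
import multiprocessing


def chunk_sum(start, stop):
    """CPU-bound piece of work: sum of squares over [start, stop)."""
    return sum(i * i for i in range(start, stop))


def worker(start, stop, results):
    # The child process cannot return a value directly,
    # so it sends its partial result through the queue.
    results.put(chunk_sum(start, stop))


def parallel_sum_of_squares(n, workers=2):
    results = multiprocessing.Queue()
    step = n // workers
    procs = []
    for w in range(workers):
        start = w * step
        # The last worker picks up any remainder.
        stop = n if w == workers - 1 else start + step
        p = multiprocessing.Process(target=worker, args=(start, stop, results))
        p.start()
        procs.append(p)

    # Read one result per process before joining, so no child
    # blocks while trying to flush data into the queue.
    partials = [results.get() for _ in procs]
    for p in procs:
        p.join()
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(1_000_000, workers=4))
```

Everything put on the queue is pickled and copied between processes, which is part of the communication cost described above. For simple map-style jobs, multiprocessing.Pool hides most of this plumbing.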

Asyncio in Python

Asyncio provides a single-threaded, non-blocking concurrency model in Python. It uses cooperative multitasking and an event loop to execute coroutines concurrently.

Here is a simple asyncio example:

    import asyncio


    async def print_square(num):
        print("Square: {}".format(num * num))


    async def print_cube(num):
        print("Cube: {}".format(num * num * num))


    async def main():
        # Schedule coroutines to run concurrently
        await asyncio.gather(
            print_square(10),
            print_cube(10),
        )


    asyncio.run(main())

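We define coroutines with async def and hand control back to the event loop with await. The event loop schedules the coroutines and switches between them only at await points. Because the two coroutines in this example never await anything, each one runs to completion before the next starts; the benefit of asyncio appears once coroutines await I/O.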

Asyncio is best suited for IO-bound tasks and use cases where execution consists of waiting on network responses, database queries etc. It provides high throughput and minimizes blocking.
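To show coroutines overlapping their waits, here is a small sketch in which asyncio.sleep() stands in for a network response. The coroutine fetch() and its arguments are hypothetical; a real program would await a network or database call at that point instead.

```python
import asyncio
import time


async def fetch(name, delay):
    """Hypothetical stand-in for a network request."""
    # While this coroutine waits, the event loop runs the others.
    await asyncio.sleep(delay)
    return f"{name} finished after {delay}s"


async def main():
    start = time.perf_counter()
    # gather() runs the coroutines concurrently and returns
    # their results in the order they were passed in.
    results = await asyncio.gather(
        fetch("first", 0.3),
        fetch("second", 0.2),
        fetch("third", 0.1),
    )
    elapsed = time.perf_counter() - start
    return results, elapsed


if __name__ == "__main__":
    results, elapsed = asyncio.run(main())
    for line in results:
        print(line)
    print(f"Total: {elapsed:.2f}s")
```

The total time should be close to the longest single delay rather than the sum of all three, since the waits overlap on a single thread.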

However, asyncio doesn't allow true parallelism on multicore systems. A CPU-bound coroutine holds the only thread until it finishes, so every other coroutine stalls in the meantime. Asyncio also has a steeper learning curve than threads and processes.
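One common way to keep the event loop responsive is to move a blocking call off the loop's thread. The sketch below uses asyncio.to_thread(), available from Python 3.9; blocking_work() and ticker() are hypothetical helpers for this illustration. For genuinely CPU-bound work, loop.run_in_executor() with a concurrent.futures.ProcessPoolExecutor is the analogous option, since a worker thread would still compete for the GIL.

```python
import asyncio
import time


def blocking_work(seconds):
    """Hypothetical blocking call, e.g. a driver with no async API."""
    time.sleep(seconds)
    return "work done"


async def ticker(log, ticks=3, interval=0.05):
    """Records a message per tick to show the loop is still running."""
    for i in range(ticks):
        log.append(f"tick {i}")
        await asyncio.sleep(interval)


async def main():
    log = []
    tick_task = asyncio.create_task(ticker(log))
    # Run the blocking function in a worker thread; awaiting it
    # lets the event loop keep servicing the ticker meanwhile.
    result = await asyncio.to_thread(blocking_work, 0.3)
    log.append(result)
    await tick_task
    return log


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Calling blocking_work(0.3) directly inside main() instead would freeze the loop for the whole duration, and the ticks after the first would only be recorded once the call had returned.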

Threading is about workers; asynchrony is about tasks.

Key Differences

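Here is a quick comparison of the three concurrency models:

Multithreading: multiple threads in one process, shared memory, limited by the GIL, best for I/O-bound work

Multiprocessing: separate processes with separate memory, true parallelism across cores, higher memory overhead, best for CPU-bound work

Asyncio: coroutines on a single thread driven by an event loop, cooperative multitasking, best for I/O-bound work with many waiting tasks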

Use Cases

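Use multithreading when you need to run I/O-bound jobs concurrently in a single process, for example serving concurrent requests in a web server or downloading many files at once. Because of the GIL, threads speed up CPU-bound work only when the heavy lifting happens in code that releases the GIL, as many NumPy operations used in data science apps do.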

Leverage multiprocessing for CPU-bound jobs that require truly parallel execution across multiple cores. Examples - multimedia processing, scientific computations etc.

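Asyncio suits network applications such as web servers, API clients and database clients, where many connections spend most of their time waiting on I/O. Because a waiting coroutine yields to the event loop instead of blocking a thread, a single thread can sustain high throughput.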

So in summary:

Multithreading provides concurrent execution in a single process, limited by the GIL

Multiprocessing provides full parallelism by executing separate processes

Asyncio uses an event loop for cooperative multitasking and minimized blocking

Choose the right concurrency model based on your application requirements and use cases.

Conclusion

Multithreading, multiprocessing and asyncio provide different approaches to concurrency and parallelism in Python.

Multithreading uses threads in a single process, multiprocessing spawns separate processes while asyncio leverages an event loop and coroutines for cooperative multitasking.

Each model has its strengths and limitations. Understanding the key differences is important to make an informed decision based on the specific problem you are trying to solve.

The code examples and comparisons in this guide should help you pick the appropriate concurrency model for your Python programs.

FAQs

Q: What is the Global Interpreter Lock (GIL) in Python?

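A: The GIL is a mutex in CPython that allows only one thread to execute Python bytecode at a time, even in multi-threaded programs. This prevents threads from running Python code simultaneously on multiple CPU cores. Threads that are blocked on I/O release the GIL, which is why threading still helps with I/O-bound work.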

Q: How can I achieve true parallelism in Python?

A: The multiprocessing module allows spawning multiple processes which can leverage multiple CPUs and cores for parallel execution. It avoids GIL limitations.

Q: When should I use multithreading vs multiprocessing?

A: Use multithreading for I/O-bound tasks. Multiprocessing is best for CPU-intensive work that needs parallel speedup across multiple CPU cores.

Q: What are the differences between multiprocessing and multithreading?

A: The key differences are memory (threads share it, processes each have their own), the synchronization and communication primitives they use, and their parallelism capabilities. Multiprocessing provides full parallelism while multithreading is limited by the GIL.

Q: How does asyncio work and when should it be used?

A: Asyncio uses an event loop and coroutines in a cooperative multitasking model suited to I/O-bound apps. It minimizes blocking and provides high throughput.
