Published as part of The Python Scrapy Playbook.

Scrapyd is the de facto spider management tool for developers who want a free and effective way to manage their Scrapy spiders on multiple servers without having to configure cron jobs or use paid tools like Scrapy Cloud.

The one major drawback with Scrapyd, however, is that its default dashboard is basic, to say the least.

Because of this, numerous web scraping teams have had to build their own Scrapyd dashboards to get the functionality that they need.

In this guide, we're going to go through the 5 Best Scrapyd Dashboards that these developers have decided to share with the community so you don't have to build your own.

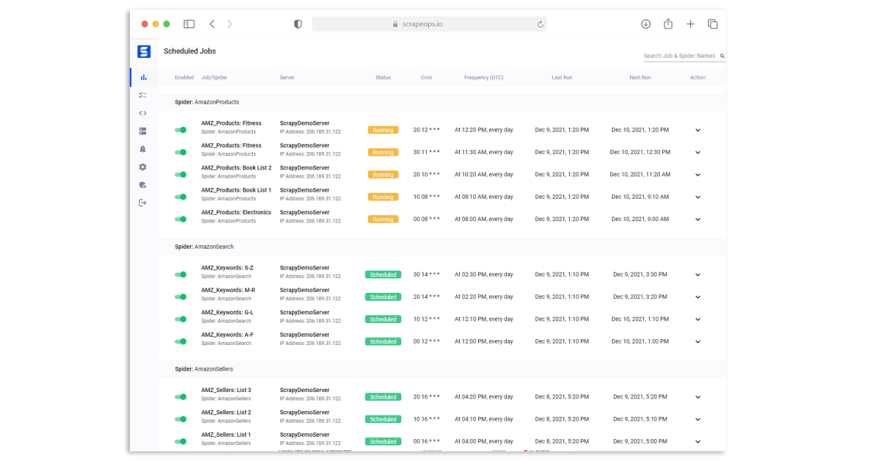

#1 ScrapeOps

ScrapeOps is a new Scrapyd dashboard and monitoring tool for Scrapy.

With a simple 30-second install, ScrapeOps gives you all the monitoring, alerting, scheduling and data validation functionality you need for web scraping straight out of the box.

Live demo here: ScrapeOps Demo

The primary goal with ScrapeOps is to give every developer the same level of scraping monitoring capabilities as the most sophisticated web scrapers, without any of the hassle of setting up your own custom solution.

Unlike the other options on this list, ScrapeOps is a full end-to-end monitoring and management tool dedicated to web scraping that automatically sets up all the monitors, health checks and alerts for you.

If you have an issue integrating ScrapeOps or need advice on setting up your scrapers, they have a support team on hand to assist you.

Features

Once you have completed the simple install (3 lines in your scraper), ScrapeOps will:

- 🕵️♂️ Monitor - Automatically monitor all your scrapers.

- 📈 Dashboards - Visualise your job data in dashboards, so you see real-time & historical stats.

- 💯 Data Quality - Validate the field coverage in each of your jobs, so broken parsers can be detected straight away.

- 📉 Auto Health Checks - Automatically check every job's performance data against its 7-day moving average to see if it's healthy or not.

- ✔️ Custom Health Checks - Check each job with any custom health checks you have enabled for it.

- ⏰ Alerts - Alert you via email, Slack, etc. if any of your jobs are unhealthy.

- 📑 Reports - Generate daily (periodic) reports that check all jobs against your criteria and let you know if everything is healthy or not.
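To make the auto health check idea more concrete, here is a minimal sketch of comparing a job stat against its 7-day moving average. This is an illustration of the general technique, not ScrapeOps' actual algorithm: the function name, the input format (one value per previous daily run, oldest first) and the 30% tolerance are all hypothetical choices.

```python
from statistics import mean


def is_job_healthy(history, current, tolerance=0.3, window=7):
    """Compare a job stat (e.g. items scraped) to its moving average.

    history:   values from previous daily runs, oldest first (hypothetical format)
    current:   the value from the job being checked
    tolerance: allowed fractional drop below the average (assumed 30%)
    window:    number of most recent runs to average (7 for a 7-day window)
    """
    recent = history[-window:]
    if not recent:
        # No baseline yet, so there is nothing to compare against.
        return True
    baseline = mean(recent)
    # Flag the job only if it falls more than `tolerance` below the baseline.
    return current >= baseline * (1 - tolerance)
```

For example, with seven previous runs of 1,000 items each, a run that scrapes 950 items passes, while a run that scrapes 400 items would be flagged. A real health check would typically also look for spikes (e.g. in error counts), which this sketch ignores.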

Job stats tracked include:

- ✅ Pages Scraped & Missed

- ✅ Items Parsed & Missed

- ✅ Item Field Coverage

- ✅ Runtimes

- ✅ Response Status Codes

- ✅ Success Rates

- ✅ Latencies

- ✅ Errors & Warnings

- ✅ Bandwidth
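Item field coverage can be thought of as the share of scraped items in which each field is actually populated. A minimal sketch, assuming items are plain dicts and treating a missing key, `None` or an empty string as "not covered" (an assumption, not necessarily how ScrapeOps defines coverage):

```python
def field_coverage(items, fields):
    """Return {field: fraction of items where the field has a value}."""
    if not items:
        return {field: 0.0 for field in fields}
    coverage = {}
    for field in fields:
        # Count an item as covered when the field exists and is not None or "".
        filled = sum(1 for item in items if item.get(field) not in (None, ""))
        coverage[field] = filled / len(items)
    return coverage


items = [
    {"name": "A", "price": "9.99"},
    {"name": "B", "price": None},
    {"name": "", "price": "5.00"},
    {"name": "D"},
]
print(field_coverage(items, ["name", "price"]))
```

A sudden drop in coverage for one field (say, `price` falling from close to 100% to 50%) is usually a sign that the selector for that field has broken.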

Integration

There are two steps to integrate ScrapeOps with your Scrapyd servers:

- Install ScrapeOps Logger Extension

- Connect ScrapeOps to Your Scrapyd Servers

Note: You can't connect ScrapeOps to a Scrapyd server that is running locally and doesn't expose a public IP address for it to connect to.
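Before adding a server, you can sanity-check that the address you plan to enter is publicly routable. A minimal sketch using Python's standard `ipaddress` module; the helper name is hypothetical, and a global address alone does not guarantee that Scrapyd's port (6800 by default) is open to the internet.

```python
import ipaddress


def is_publicly_routable(ip):
    """Return True if `ip` is a globally routable IPv4/IPv6 address.

    Loopback (127.0.0.1), private LAN (e.g. 192.168.x.x, 10.x.x.x) and
    link-local addresses return False, so a hosted dashboard couldn't reach them.
    """
    try:
        return ipaddress.ip_address(ip).is_global
    except ValueError:
        # Not a valid IP literal (e.g. a hostname or a typo).
        return False
```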

Once set up, you will be able to schedule, run and manage all your Scrapyd servers from one dashboard.

Step 1: Install ScrapeOps Logger Extension

For ScrapeOps to monitor your scrapers, create dashboards and trigger alerts, you need to install the ScrapeOps logger extension in each of your Scrapy projects.

Simply install the Python package:

```bash
pip install scrapeops-scrapy
```

And add 3 lines to your settings.py file:

```python
# settings.py

# Add Your ScrapeOps API key
SCRAPEOPS_API_KEY = 'YOUR_API_KEY'

# Add In The ScrapeOps Extension
EXTENSIONS = {
    'scrapeops_scrapy.extension.ScrapeOpsMonitor': 500,
}

# Update The Downloader Middlewares
DOWNLOADER_MIDDLEWARES = {
    'scrapeops_scrapy.middleware.retry.RetryMiddleware': 550,
    # Setting a middleware to None disables Scrapy's built-in retry middleware
    'scrapy.downloadermiddlewares.retry.RetryMiddleware': None,
}
```

From there, your scraping stats will be automatically logged and shipped to your dashboard.

Step 2: Connect ScrapeOps to Your Scrapyd Servers

The next step is giving ScrapeOps the connection details of your Scrapyd servers so that you can manage them from the dashboard.

Within your dashboard, go to the Servers page and click the Add Scrapyd Server button at the top of the page.

In the dropdown section, enter your connection details:

- Server Name

- Server Domain Name (optional)

- Server IP Address
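Before entering these details, it can help to confirm that the Scrapyd server actually answers on that address. A minimal standard-library sketch that calls Scrapyd's `daemonstatus.json` endpoint; the function name is hypothetical, and it assumes Scrapyd's default port 6800 with no authentication or proxy in front of it.

```python
import json
import urllib.request


def scrapyd_is_reachable(host, port=6800, timeout=5.0):
    """Return True if Scrapyd's daemonstatus.json endpoint answers with status "ok"."""
    url = f"http://{host}:{port}/daemonstatus.json"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            payload = json.load(response)
    except (OSError, ValueError):
        # OSError covers URLError, refused connections and timeouts;
        # ValueError covers a response body that isn't valid JSON.
        return False
    return isinstance(payload, dict) and payload.get("status") == "ok"
```

Running `scrapyd_is_reachable("203.0.113.10")` (substitute your server's IP) from a machine outside your network is a quick way to spot firewall or binding issues, such as Scrapyd only listening on `127.0.0.1`.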

Once set up, you can schedule your scraping jobs to run periodically using the ScrapeOps scheduler and monitor your scraping results in your dashboards.

Summary

ScrapeOps is a powerful web scraping monitoring tool that gives you all the monitoring, alerting, scheduling and data validation functionality you need for web scraping straight out of the box.

Live demo here: ScrapeOps Demo

Pros

- Free unlimited community plan.

- Simple 30 second install.

- Hosted solution, so you don't need to spin up a server.

- Full Scrapyd JSON API support.

- Includes the most fully featured scraping monitoring, health checks and alerts straight out of the box.

- Customer support team, available to help you get set up and add new features.

Cons

- Not open source, if that is your preference.

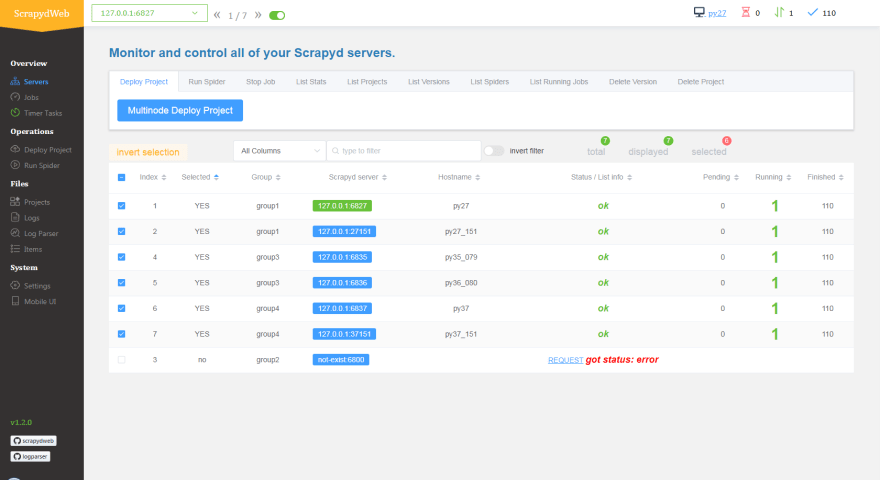

#2 ScrapydWeb

The most popular open source Scrapyd dashboard, ScrapydWeb is a great solution for anyone looking for a robust spider management tool that can be integrated with their Scrapyd servers.

With ScrapydWeb, you can schedule, run and see the stats from all your jobs across all your servers on a single dashboard. ScrapydWeb supports all the Scrapyd JSON API endpoints, so you can also stop jobs mid-crawl and delete projects without having to log into your Scrapyd server.
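Actions like these ultimately map onto Scrapyd's own JSON API, which any dashboard has to call. As a rough sketch, the helpers below build (but don't send) POST requests for Scrapyd's `cancel.json` and `delproject.json` endpoints using only the standard library. The helper names, base URL and project/job values are hypothetical, and this isn't ScrapydWeb's actual code.

```python
import urllib.parse
import urllib.request


def scrapyd_post(base_url, endpoint, **params):
    """Build (but don't send) a POST request for a Scrapyd JSON API endpoint."""
    data = urllib.parse.urlencode(params).encode()
    url = f"{base_url.rstrip('/')}/{endpoint}"
    return urllib.request.Request(url, data=data, method="POST")


def cancel_job(base_url, project, job_id):
    # cancel.json stops a running job (or removes a pending one).
    return scrapyd_post(base_url, "cancel.json", project=project, job=job_id)


def delete_project(base_url, project):
    # delproject.json deletes a project and all of its uploaded versions.
    return scrapyd_post(base_url, "delproject.json", project=project)
```

To actually send a request, pass it to `urllib.request.urlopen(req)` and decode the JSON response; Scrapyd replies with a `"status"` field you can check.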

When combined with LogParser, ScrapydWeb will also extract your Scrapy logs from your server and parse them into an easier-to-understand format.
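To give a feel for what log parsing involves: when a crawl finishes, Scrapy's stats collector logs a `Dumping Scrapy stats:` line followed by the stats dict. The sketch below pulls the integer counters out of that block with a regular expression. It is a simplified illustration, not LogParser's actual implementation; float and datetime values are deliberately skipped.

```python
import re

# Matches integer entries such as  'item_scraped_count': 100,
# The lookahead rejects floats like 12.345 (the digits must end at , } or whitespace).
STAT_PATTERN = re.compile(r"'([\w/.\-]+)': (\d+)(?=[,}\s])")


def parse_scrapy_stats(log_text):
    """Extract integer counters from the last 'Dumping Scrapy stats' block of a Scrapy log."""
    start = log_text.rfind("Dumping Scrapy stats:")
    if start == -1:
        return {}
    # The stats dict is printed right after the marker; keep up to its closing brace.
    end = log_text.find("}", start)
    block = log_text[start:] if end == -1 else log_text[start:end + 1]
    return {key: int(value) for key, value in STAT_PATTERN.findall(block)}
```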

A powerful feature that ScrapydWeb has that many of the other open source Scrapyd dashboards don’t have is the ability to easily connect multiple Scrapyd servers to your dashboard, execute actions on multiple nodes with the same command and autopackage your spiders on the Scrapyd server.
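Running the same action on several nodes boils down to sending the same Scrapyd API call to each server. A minimal standard-library sketch for Scrapyd's `schedule.json` endpoint, which accepts `project` and `spider` and passes any other parameters to the spider as arguments. The node URLs, project, spider and the `category` argument are hypothetical, and this is not how ScrapydWeb itself is implemented.

```python
import urllib.parse
import urllib.request


def build_schedule_requests(nodes, project, spider, **spider_args):
    """Build one schedule.json POST request per Scrapyd node.

    nodes:       base URLs such as "http://10.0.0.5:6800" (hypothetical)
    spider_args: extra parameters, forwarded by Scrapyd to the spider as arguments
    """
    data = urllib.parse.urlencode({"project": project, "spider": spider, **spider_args}).encode()
    return [
        urllib.request.Request(f"{node.rstrip('/')}/schedule.json", data=data, method="POST")
        for node in nodes
    ]


requests = build_schedule_requests(
    ["http://node-a:6800", "http://node-b:6800/"], "shop", "products", category="books"
)
```

Each request can then be sent with `urllib.request.urlopen(req, timeout=10)`; Scrapyd returns a `jobid` for every job it queues, which you would keep if you later want to cancel those jobs.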

Although ScrapydWeb has a lot of spider management functionality, its monitoring/job visualisation capabilities are quite limited, and a number of user experience issues make it less than ideal if you plan to rely on it completely as your main spider monitoring solution.

Summary

If you want an easy-to-use open-source Scrapyd dashboard, then ScrapydWeb is a great choice. It is the most popular open-source Scrapyd dashboard at the moment, and has a lot of functionality built in.

Pros

- Open source.

- Robust and battle tested Scrapyd management tool.

- Lots of Spider management functionality.

- Best multi-node server management functionality.

Cons

- Limited job monitoring and data visualisation functionality.

- No customer support

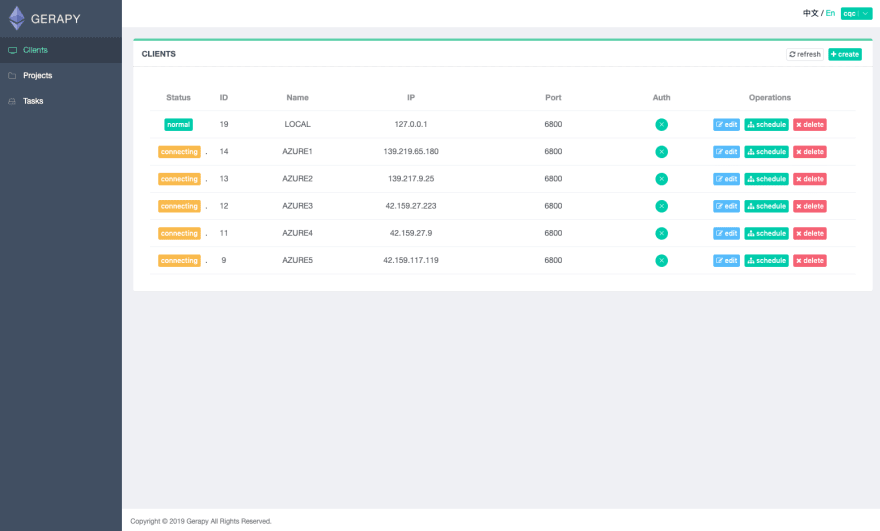

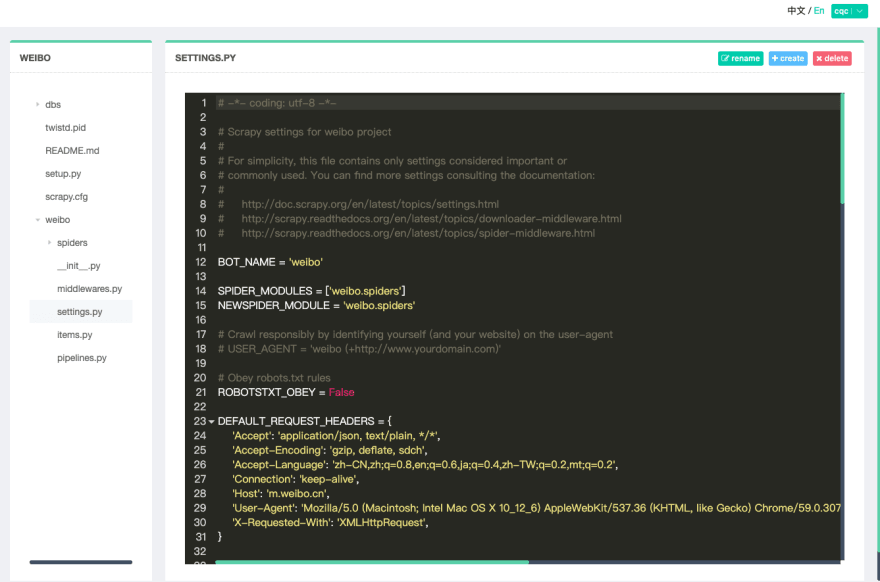

#3 Gerapy

Next on our list is Gerapy. With 2.6k stars on GitHub, it is another very popular open-source Scrapyd dashboard.

Gerapy enables you to schedule, run and control all your Scrapy scrapers from a single dashboard. Like others on this list, its goal is to make managing distributed crawler projects easier and less time-consuming.

Gerapy boasts the following features and functionality:

- More convenient control of crawler runs.

- View crawl results in more real time.

- Easier timing tasks.

- Easier project deployment.

- More unified host management.

- Write crawler code more easily.

Unlike ScrapydWeb, Gerapy also has a visual code editor built in, so you can edit your project's code right from the Gerapy dashboard if you want to make a quick change.

Summary

Gerapy is a great alternative to the open-source ScrapydWeb. It will allow you to manage multiple Scrapyd servers with a single dashboard.

However, it doesn't extract job stats from your log files, so you can't view all your jobs' scraping results in a single view as you can with ScrapydWeb.

Pros

- Open source, and very active maintainers.

- Robust Scrapyd management tool.

- Full Spider management functionality.

- Ability to edit spiders within dashboard.

Cons

- Limited job monitoring and data visualisation functionality.

- No log parsing functionality equivalent to LogParser with ScrapydWeb.

- No customer support

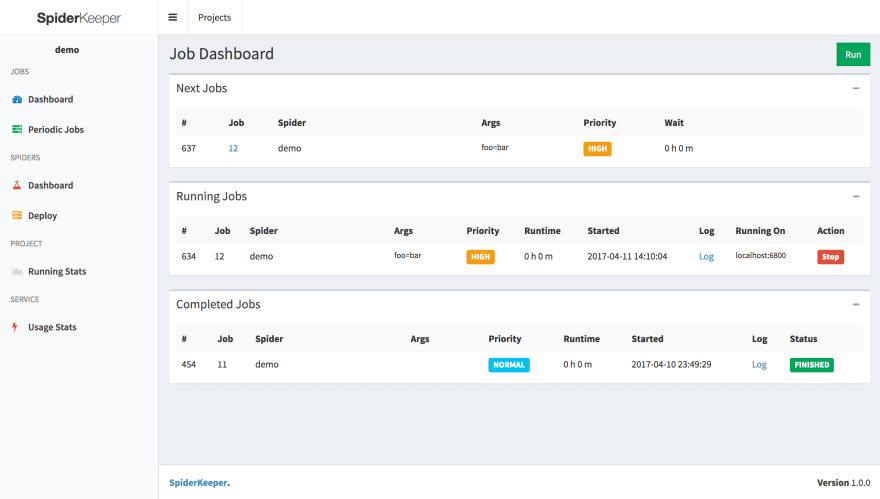

#4 SpiderKeeper

SpiderKeeper is another open-source Scrapyd dashboard based on the old Scrapinghub Scrapy Cloud dashboard.

SpiderKeeper was once a very popular Scrapyd dashboard because it had robust functionality and looked good.

However, it has fallen out of favour due to the launch of other dashboard projects and the fact that it isn't maintained anymore (the last update was in 2018, and numerous pull requests remain open).

SpiderKeeper is a simpler implementation of the functionality that ScrapeOps, ScrapydWeb or Gerapy provide; however, it still covers all the basics:

- Manage your Scrapy spiders from a dashboard.

- Schedule periodic jobs to run automatically.

- Deploy spiders to Scrapyd with a single click.

- Basic spider stats.

- Full Scrapyd API support.

Summary

SpiderKeeper was a great open-source Scrapyd dashboard; however, since it hasn't been actively maintained in years, we would recommend using one of the other options on the list.

Pros

- Open source.

- Good functionality that covers all the basics.

- Ability to deploy spiders within dashboard.

Cons

- Not actively maintained, last update 2018.

- Limited job monitoring and data visualisation functionality.

- No customer support

#5 Crawlab

Whilst Crawlab isn’t a Scrapyd dashboard per se, it is definitely an interesting tool if you are looking for a way to manage all your spiders from one central admin dashboard.

Crawlab is a Golang-based distributed web crawler admin platform for managing spiders regardless of language or framework. This means you can use it with any type of spider, be it Python Requests, NodeJS, Golang, etc.

Because Crawlab isn't Scrapy-specific, it gives you huge flexibility: if you decide to move away from Scrapy in the future, or need to create a Puppeteer scraper for a particularly difficult site, you can easily add that scraper to your Crawlab setup.

Of the open-source tools on the list, Crawlab is by far the most comprehensive solution with a whole range of features and functionality:

- Naturally supports distributed spiders out of the box.

- Schedule cron jobs

- Task management

- Results exporting

- Online code editor

- Configurable spiders.

- Notifications

One of its few downsides is that there is a bit of a learning curve to getting it set up on your own server.

At the time of writing, it is the most active open-source project on this list.

Summary

Crawlab is a very powerful scraper management solution with a huge range of functionality, and is a great option for anyone who is running multiple types of scrapers.

Pros

- Open source, and actively maintained.

- Very powerful functionality.

- Ability to deploy any type of scraper (Python, Scrapy, NodeJS, Golang, etc.).

- Very good documentation.

Cons

- Job monitoring and data visualisation functionality could be better.

- No customer support
