Welcome to Tutorial Tuesday!

We'll pick up where we left off last week with our Think Lab 2124 Tutorial A: Build & Deploy a Data Join Experiment!

AutoAI Overview

AutoAI in Cloud Pak for Data automates ETL(Extract, Transform, and Load) and feature engineering process for relational data, saves data scientists months of manual data prep time and acheives results comparable to top performing data scientists.

The AutoAI graphical tool in Watson Studio automatically analyzes your data and generates candidate model pipelines customized for your predictive modeling problem. These model pipelines are created iteratively as AutoAI analyzes your dataset and discovers data transformations, algorithms, and parameter settings that work best for your problem setting. Results are displayed on a leaderboard, showing the automatically generated model pipelines ranked according to your problem optimization objective.

Collect your input data in a CSV file or files. Where possible, AutoAI will transform the data and impute missing values.

Notes:

- Your data source must contain a minimum of 100 records (rows).

- You can use the IBM Watson Studio Data Refinery tool to prepare and shape your data.

- Data can be a file added as connected data from a networked file system (NFS). Follow the instructions for adding a data connection of the type Mounted Volume. Choose the CSV file to add to the project so you can select it for training data.

AutoAI Process

Using AutoAI, you can build and deploy a machine learning model with sophisticated training features and no coding. The tool does most of the work for you.

AutoAI automatically runs the following tasks to build and evaluate candidate model pipelines:

- Data pre-processing

- Automated model selection

- Automated feature engineering

- Hyperparameter optimization

In this Think Lab, you will see how to join several data sources and then build an AutoAI experiment from the joined data. The scenario we’ll explore in Part B of the Lab is for a mobile company that wants to understand the key factors that have an impact on user experience in their call center. You will use IBM AutoAI to automate data analysis for a dataset collected from a fictional call center. The objective of the analysis is to gain more insight on factors that impact customer experience so that the company can improve customer service. The data consists of historical information about customer interaction with call agents, call type, customer wireless plans and call type resolution.

Project Requirements

IBM Cloud (Free) Lite Tier Account

Project Setup Steps

- Create an IBM Cloud Lite Tier Account

- Create a Watson Studio Instance

- Provision Watson Machine Learning & Cloud Object Storage Instances

- Create a New Project

- Download the Call Center Dataset from the Gallery

- Unzip the Call Center Dataset's .zip File

- Add the Call Center Datasets to the Project

Project Setup

See Tutorial A for Project Setup

Think Lab Overview

In Tutorial B of this Think Lab, you will use IBM AutoAI to automate data analysis for a dataset collected from a fictional call center. The objective of the analysis is to gain more insight on factors that impact customer experience so that the company can improve customer service. The data consists of historical information about customer interaction with call agents, call type, customer wireless plans and call type resolution. Each source of information is kept in a separate table (a CSV file).

Using the data join capabilities of AutoAI, you will connect the tables using common coloumns, or keys, to create a single data source, without needing to write SQL-like queries. Additionally, AutoAI will do some automated data preparation, or feature engineering on the combined data before using the data to train the model.

About the Data

The data is divided as follows:

- User_experience: User experience reflects the satisfactory feedback from customers to each call agent daily.

- Call_log: Records historical information about the calls from customers to the call center in the last 3 years.

- Call_Type: Records call type information.

- Wireless_Plans: Records the kind of wireless plans customers are subscribed to.

- Call_Resolution_Type: Records type of call resolution.

Steps Overview

This tutorial presents the basic steps for joining data sets then training a machine learning model using AutoAI:

- Add and join the data

- Train the experiment

- Deploy the trained model

- Test the deployed model

Think Lab - Tutorial B Steps

- Create a New AutoAI Experiment

- Build the Data Join Schema

- Run the AutoAI Experiment

- Explore the Holdout & Training Data Insights

- Deploy the Trained Model

- Score the Model

- View the Prediction Results

Think Lab - Tutorial B: AutoAI Data Join Multi-Classification

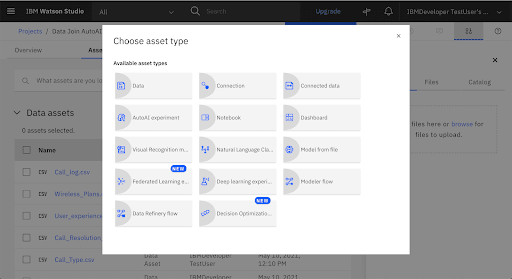

1. Create a New AutoAI Experiment

Add a New AutoAI Experiment to the Project

Associate a Machine Learning Service Instance

Select a Watson Machine Learning Service Instance from the dropdown menu. Click Create

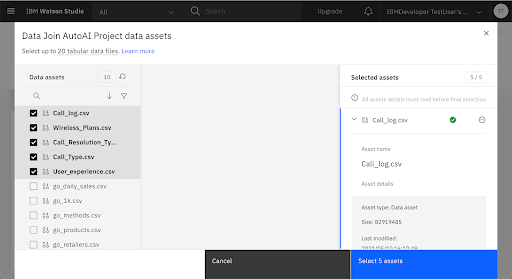

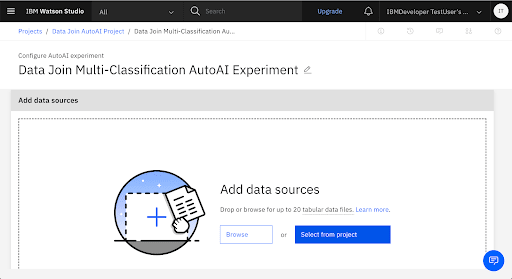

Add the Call Center Datasets

Select the call center data assets. Click Select 5 Assets

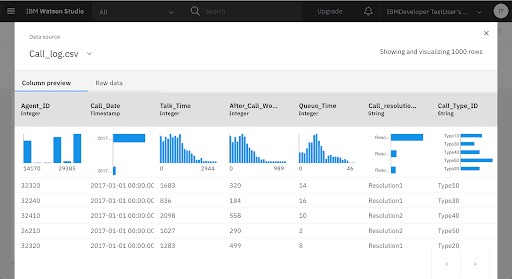

Optional: You can view metrics on each data source by clicking on it in the Data Source window.

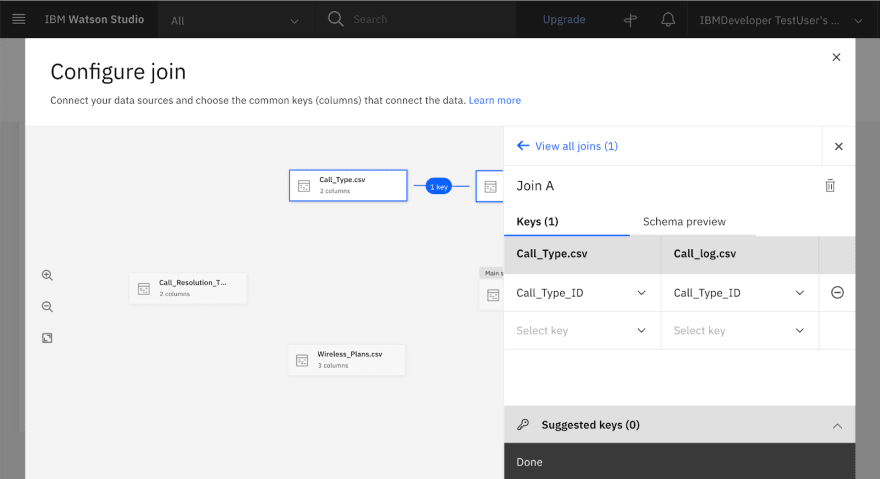

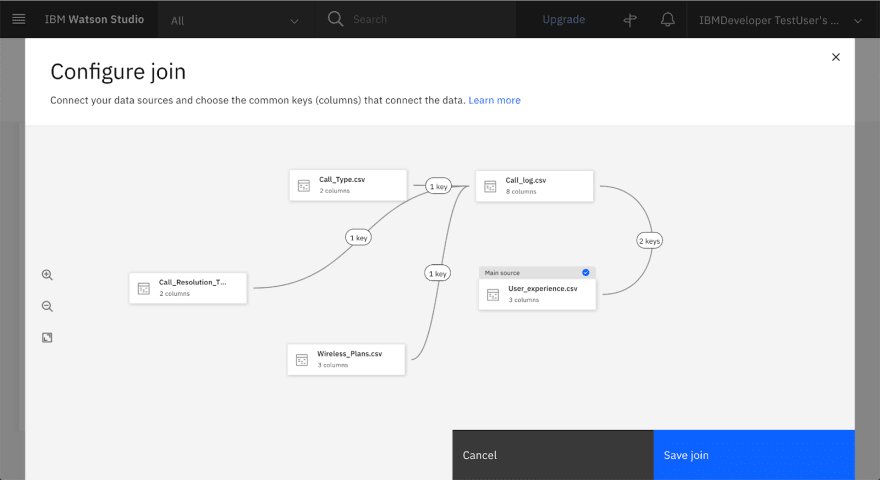

2. Build the Data Join Schema

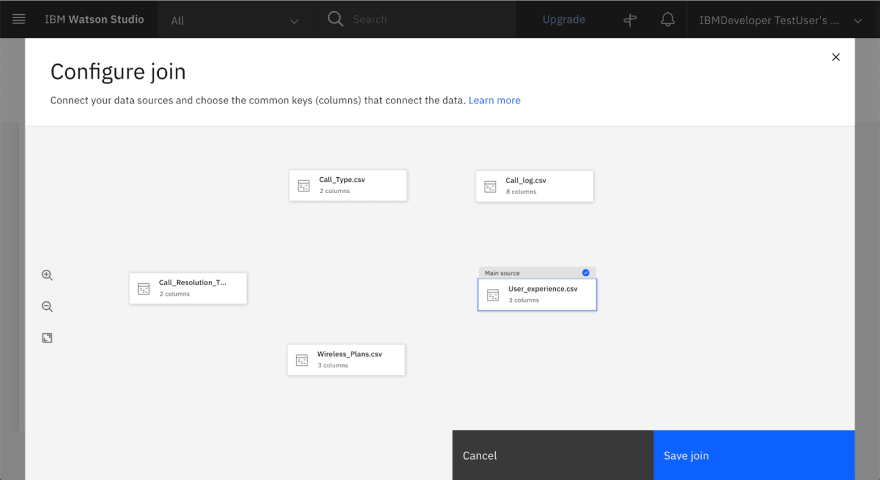

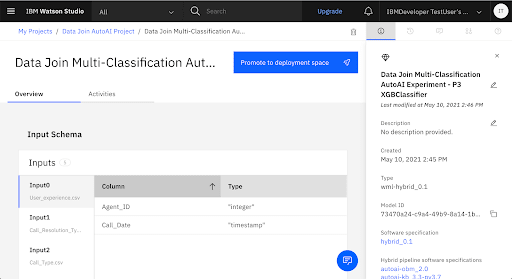

The main source contains the prediction target for the experiment. Select User_experience.csv as the main source, then click Configure join.

In the data join canvas you will create a left join that connects all of the data sources to the main source.

Use the Data Join Table to Build the Schema

Setup the nodes to match the following image to make connecting the nodes easier.

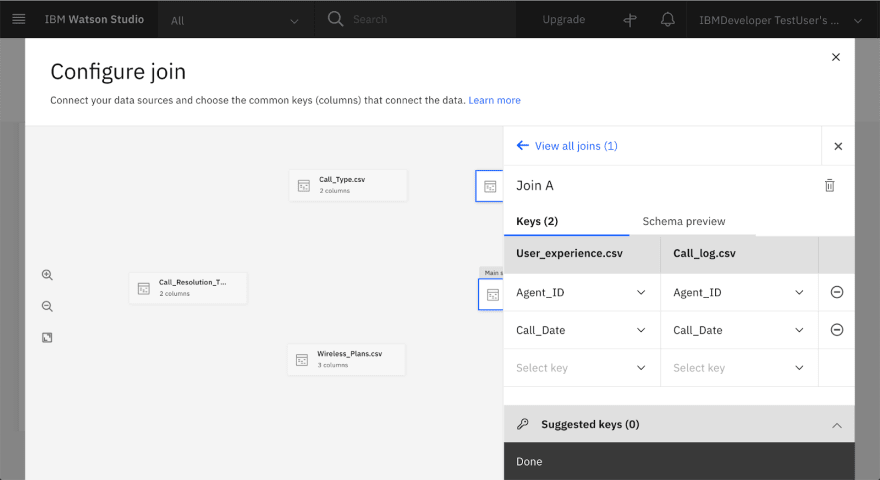

Drag from the node on one end of the User_experience.csv box to the node on the end of Call_log.csv.

In the panel for configuring the join, click (+) to add the suggested keys Agent_ID and Call_Date as the join keys.

Repeat the data join process until you have joined all the data tables.

The Completed Data Join Schema Should Look Like This:

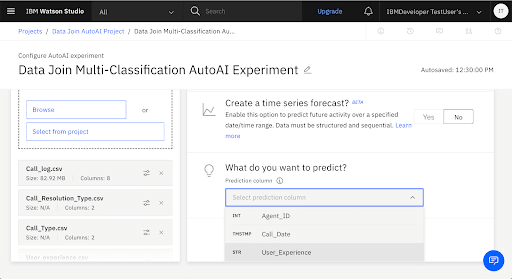

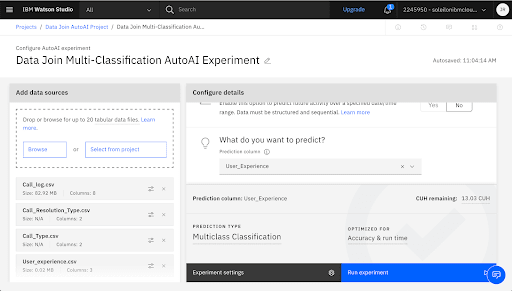

Choose User_Experience as the column to predict.

AutoAI analyzes your data and determines that the User_Experience column contains information making the data suitable for a Multiclass Classification model. The default metric for a Multiclass Classification model is optimized for Accuracy & run time automatically by AutoAI.

Note:

- Based on analyzing a subset of the data set, AutoAI chooses a default model type: binary classification, multiclass classification, or regression. Binary is selected if the target column has two possible values, multiclass if it has a discrete set of 3 or more values, and regression if the target column is a continuous numeric variable. You can override this selection.

- AutoAI chooses a default metric for optimizing. For example, the default metric for a binary classification model is Accuracy.

- By default, ten percent of the training data is held out to test the performance of the model.

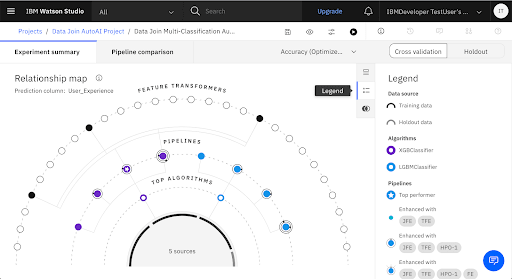

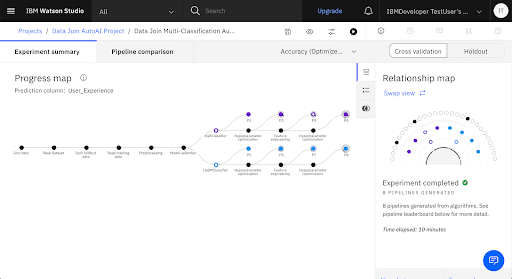

3. Run the AutoAI Experiment

4. Explore the Holdout & Training Data Insights

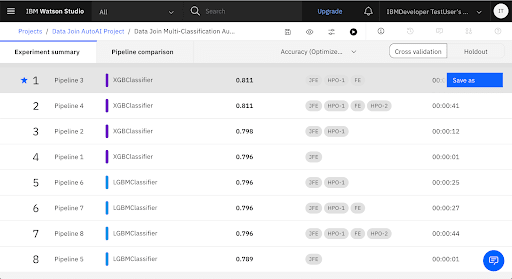

Pipeline Comparison

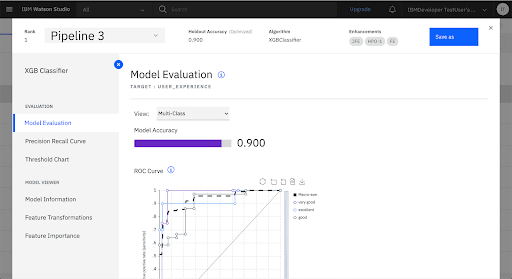

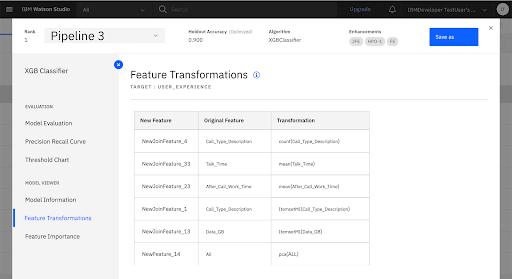

Eplore the Leading Pipeline

Model Evaluation

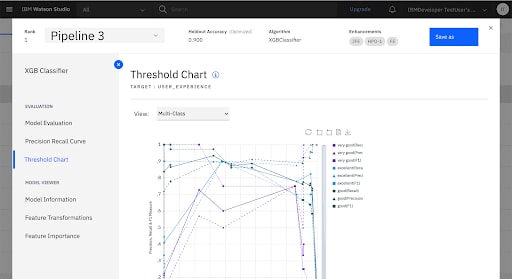

Threshold Chart

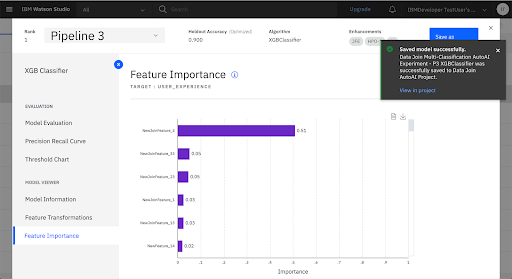

Feature Importance

From the feature importance chart, the most important feature is count (Call_Type_Description) which is the total calls in a day.

Other important features are from the After_Call_Work_Time, which are talk time and queue time. These features affect users experience the most.

The call center management team should pay attention to these features and try to figure out how to improve user’s experience by adjusting these features.

5. Deploy the Trained Model

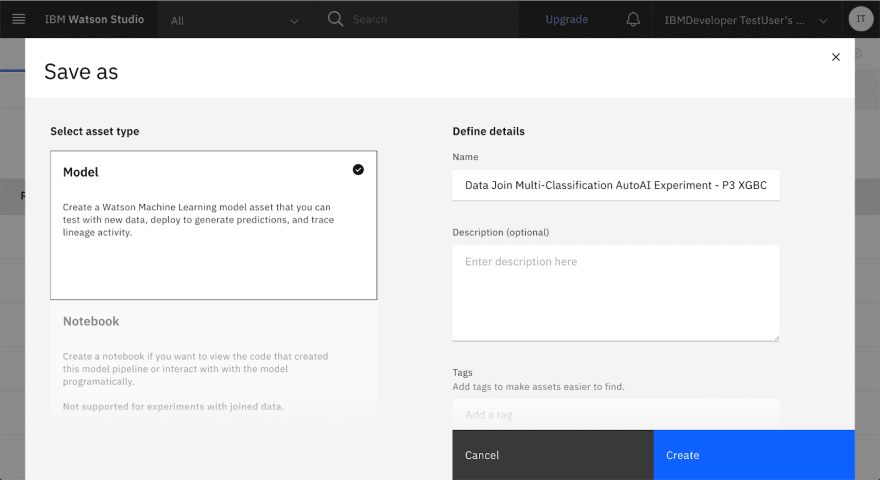

Click Save as and Select Model

Click Create

Click View in project

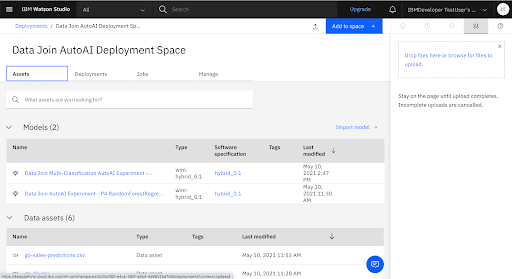

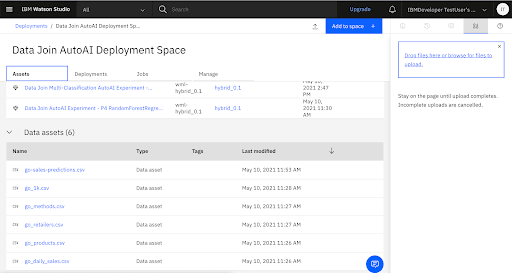

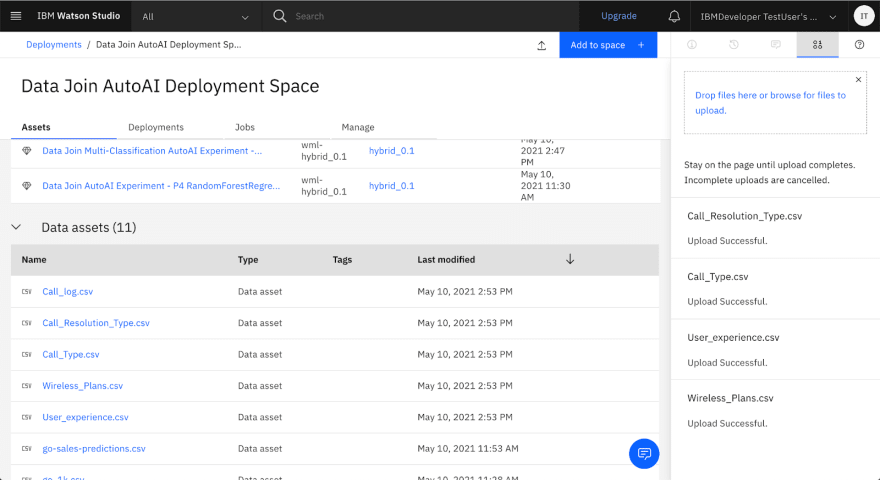

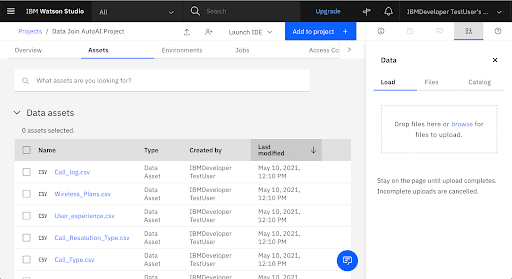

Add the Call Center Datasets to the Deployment Space

Navigate to the Deployment Space

Click browse for files to upload

Select Call Center Data Sources

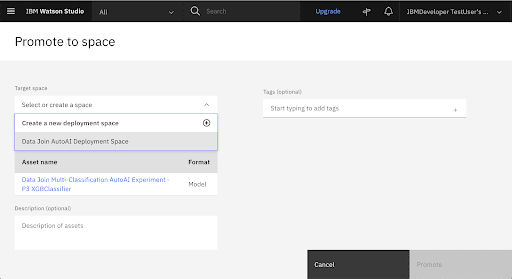

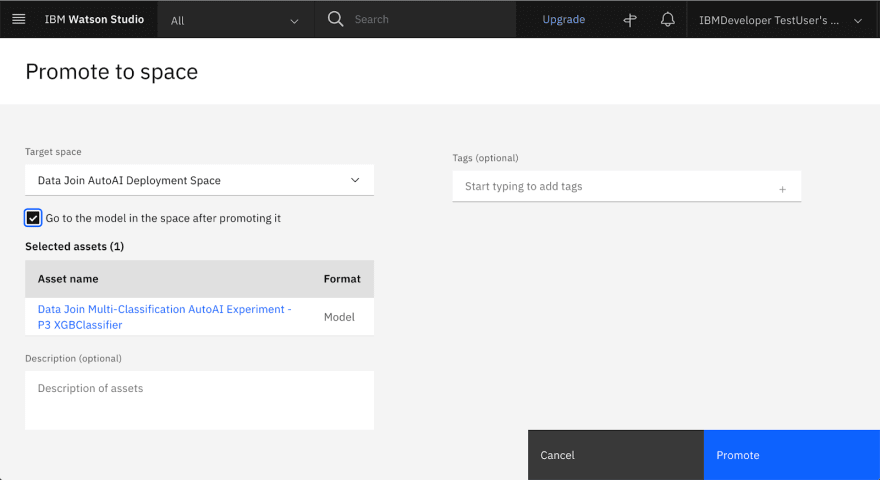

Promote the Trained Model to the Deployment Space

Click Promote to deployment space

Select the Deployment Space from the dropdown menu.

Check the Go to the model in the space after promoting it box.

Click Promote

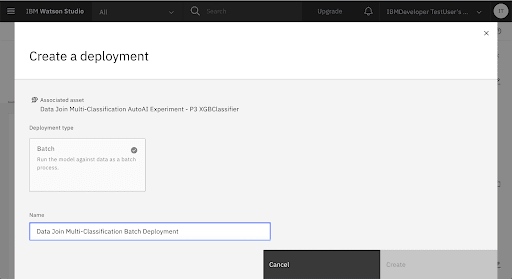

Deploy the Trained Model

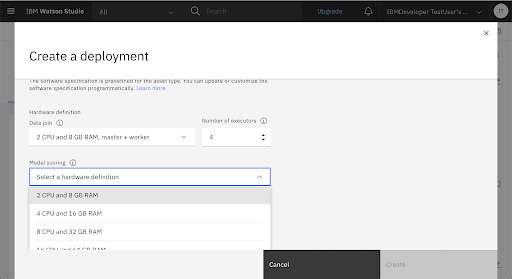

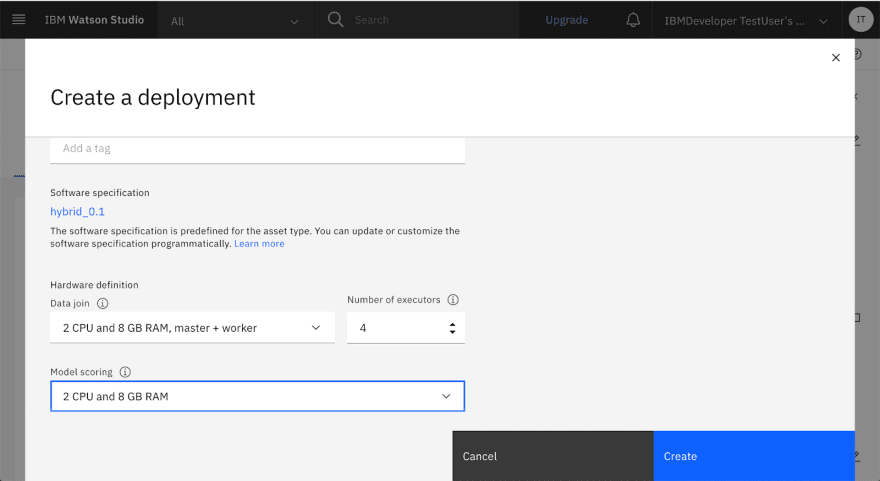

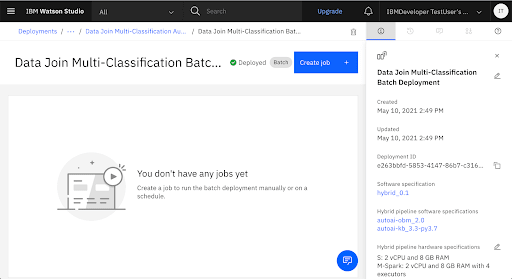

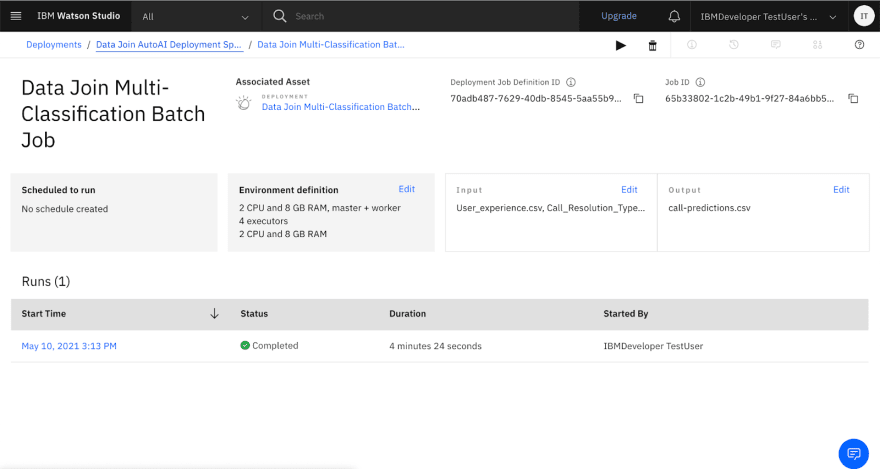

Create a New Batch Deployment

Add a name for the new Batch deployment.

Configure the Hardware Definition

Select the Data Join hardware.

Select the Model Scoring hardware.

6. Score the Model

To score the model, you create a batch job that will pass new data to the model for processing, then output the predictions to a file. Note: For this tutorial, you will submit the training files as the scoring files as a way to demonstrate the process and view results.

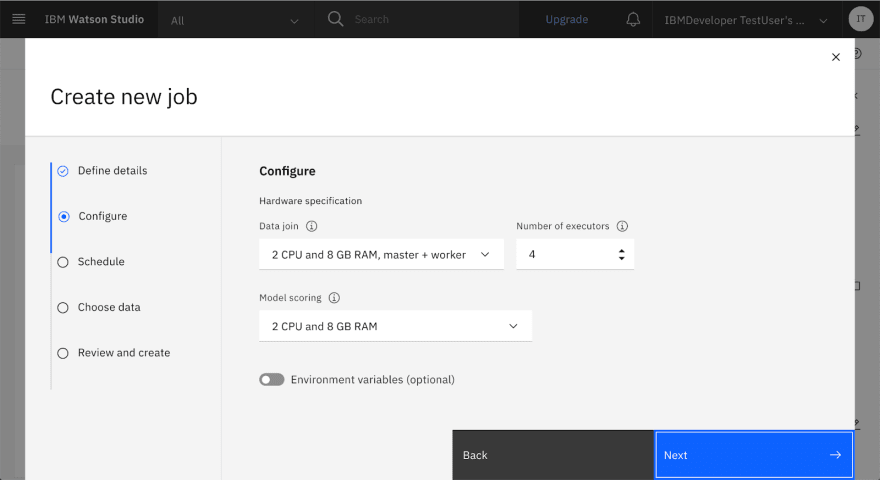

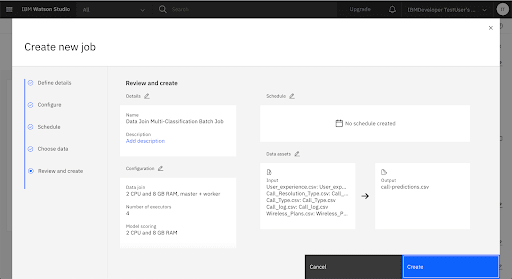

Create a New Batch Job

Add a name for the new Batch job. Click Next

Keep the current Hardware configuration. Click Next

Keep the Schedule off. Click Next

Add the Scoring Files

You will see the training files listed. For each training file, click the Select data source button, and choose the corresponding scoring file.

WARNING : Schema mismatch. The column types in this data asset do not match the column types in the Model Schema. Click Continue to select anyway.

Add call-predictions.csv as the Ouput file name.

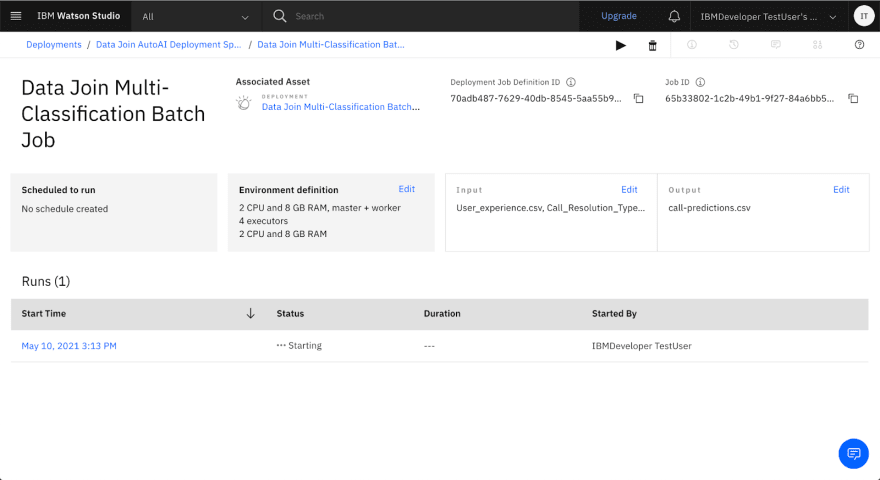

Run the Batch Job

View the Batch Job

Wait for the Batch Job to Complete

7. View the Prediction Results

Download call-predictions.csv, and view the prediction results in Excel.

CONGRATULATIONS!

You've completed Think Lab 2124: Build & Deploy AI/ML Models w Multiple Datasets w AutoAI!

Tune in next week for our next Tutorial Tuesday post.

Follow for more Cloud Native & Watson AI/ML content:

https://linktr.ee/jritten

Top comments (0)