What is Web Scraping?

Ans: Try Google Search lol.

As the name suggests, it's just scraping data off websites using the HTTP request-response cycle.

The request-response cycle is just two-way communication between computers: a client asks and a server answers. The browser is just an interface, like a messenger app, and the servers are like the users you chat with.
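To see one full cycle without touching the internet, here is a minimal sketch using only Python's standard library. It starts a toy server on your own machine and then plays the client. The `HelloHandler` class and the page it serves are made up for this demo.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Toy server side: answers every GET request with a small HTML page."""

    def do_GET(self):
        body = b"<html><body><h1>Hello, scraper!</h1></body></html>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


# Port 0 asks the OS for any free port; the server runs in a background thread.
server = HTTPServer(("127.0.0.1", 0), HelloHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

# Client side: send one request, read one response.
connection = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
connection.request("GET", "/")
response = connection.getresponse()
status = response.status
content_type = response.getheader("Content-Type")
html = response.read().decode("utf-8")
connection.close()

server.shutdown()
server.server_close()

print(status, content_type)
print(html)
```

The client half is all a scraper really does: send a request, then read the status, headers and body that come back.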

Fun tip!!!

Try writing

curl https://www.example.com

in your native terminal.

That's also web scraping of sorts.

But let's just cut to the chase.

So today we are gonna be scraping all the images from udemy.com, sounds fun :o!!!

To get started, first you need to install a few Python modules:

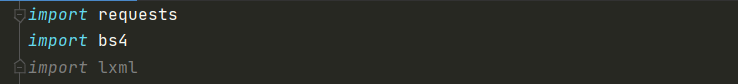

requests, bs4 and lxml

So the requests package is used to make requests to the server,

bs4 is a tool that turns the raw HTML into objects that are pretty and easy to work with,

and lxml, well, that's a parser that parses the HTML response markup and goes along with bs4.BeautifulSoup() like a sauce.
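Here is a small sketch of how bs4 and lxml play together, assuming the modules were installed with something like `pip install requests beautifulsoup4 lxml`. The HTML string is hand-written for the demo, not a real server response.

```python
from bs4 import BeautifulSoup

# A tiny hand-written page standing in for a real server response.
html = """
<html>
  <head><title>Demo page</title></head>
  <body>
    <p class="intro">Hello <b>soup</b>!</p>
  </body>
</html>
"""

# "lxml" tells BeautifulSoup which parser to use (the lxml package must be installed).
soup = BeautifulSoup(html, "lxml")

# Text inside the <title> tag.
title = soup.title.string
# Text of the paragraph with the inner tags stripped.
intro = soup.find("p", class_="intro").get_text()

print(title)
print(intro)
```

The soup object lets you walk the page by tag name and attribute instead of slicing strings by hand.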

We're ready and good to go.

Now just follow along.

Step 1: Import all the necessary modules.

Step 2: Declare the variables name and url to make stuff easy (totally optional).

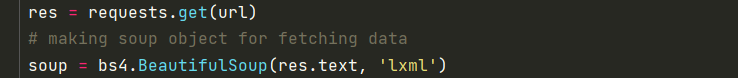

Step 3: Request the HTML code from the server via url, then create a soup object from the retrieved data via the parser and the bs4.BeautifulSoup() method.
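In case the screenshots don't load, Steps 1 to 3 could be sketched roughly like this. The value of `name` and the helper `fetch_soup` are my own illustrative choices, so treat this as one possible version rather than the exact code from the images.

```python
# Step 1: import the modules.
import requests
from bs4 import BeautifulSoup

# Step 2: keep the target in variables (the values here are illustrative).
name = "udemy"
url = "https://www.udemy.com"


def fetch_soup(page_url):
    """Step 3: request the page and parse the returned HTML with lxml."""
    response = requests.get(page_url, timeout=10)
    response.raise_for_status()  # fail loudly on 4xx/5xx responses
    return BeautifulSoup(response.text, "lxml")
```

Calling `soup = fetch_soup(url)` performs the actual request. Some sites may refuse requests coming from scripts, so an error at this point does not necessarily mean your code is wrong.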

Step 4: Select and fetch all the image objects or nodes.

Step 5: Parse all the image nodes one after the other to get the image source, which will help us make requests for the image data.

Step 6: Select the source (value of the src attribute) of the image.

At this point, if you try to print an image object, it will look exactly like the corresponding HTML code.
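Steps 4 to 6 can be tried on a hand-written snippet first. In this sketch the HTML, the `base_url` and the image paths are all made up, and the `urljoin` call is an extra I added so relative paths become full URLs.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Stand-in for the HTML a server would return; the paths are made up.
base_url = "https://www.example.com/"
html = """
<body>
  <img src="/static/logo.png" alt="logo">
  <img src="https://cdn.example.com/banner.jpg">
  <img alt="no source here">
</body>
"""
soup = BeautifulSoup(html, "lxml")

# Step 4: select every <img> node.
images = soup.find_all("img")

sources = []
# Step 5: go through the nodes one by one.
for image in images:
    # Step 6: read the src attribute; .get() returns None when it is missing.
    src = image.get("src")
    if src:
        # Relative paths need the page URL in front before they can be requested.
        sources.append(urljoin(base_url, src))

print(images[0])  # prints the tag as HTML
print(sources)
```

Using `.get("src")` instead of `image["src"]` avoids a KeyError on images that have no src attribute at all.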


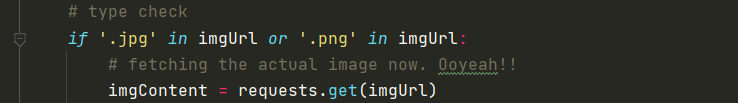

Step 7: Make a request to the corresponding server to receive image data.

So now we have the image source, which can be used in requests.get() to ask some other server for "The Image Data". But we've got to check the type first, because in HTML5 an SVG can also be the source of an image, and it may be represented differently than other images. In short, we can surely save SVGs via this method too, but I just haven't added that functionality yet!

In fact you can fetch any type of data: GIFs, PNGs, JPGs, you name it.
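One way the type check and the request could look is sketched below. The helper names `image_extension`, `is_svg` and `fetch_image` are hypothetical, and checking the file extension is just my assumption about a reasonable test, since the real check lives in the screenshot.

```python
import os
from urllib.parse import urlparse

import requests


def image_extension(src):
    """Return the lowercase file extension of an image URL, e.g. '.png'."""
    path = urlparse(src).path  # drops the query string and fragment
    return os.path.splitext(path)[1].lower()


def is_svg(src):
    """True when the source points at an .svg file or is an inline SVG data URI."""
    return image_extension(src) == ".svg" or src.startswith("data:image/svg+xml")


def fetch_image(src):
    """Step 7: ask the server for the image; returns None for SVG sources."""
    if is_svg(src):
        return None  # SVG handling is left out, as in the article
    response = requests.get(src, timeout=10)
    response.raise_for_status()
    return response
```

The raw bytes of the image are then available as `response.content`. An extension check is cheap but not bulletproof, because a server can send any kind of data from any URL.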

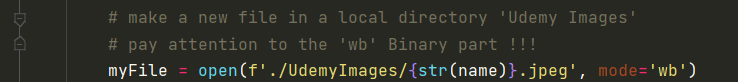

Step 8: Open a file in binary format to save data.

Step 9: Write all the received data, which lives in the imageNode.content attribute, to the file.

Step 10: Bruh, you did it! Congrats!!!
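Steps 8 and 9 boil down to opening a file in "wb" mode and writing bytes into it. Here is a minimal sketch; the `save_image` helper and the default `images` folder are names I picked for illustration.

```python
from pathlib import Path


def save_image(data, filename, folder="images"):
    """Write raw bytes to folder/filename and return the resulting path."""
    target_dir = Path(folder)
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / filename
    # Step 8: "wb" = write + binary, so the bytes reach the disk untouched.
    with open(target, "wb") as file:
        # Step 9: write everything that came back from the server.
        file.write(data)
    return target
```

With a response from requests.get() in hand, a call like `save_image(response.content, "logo.png")` would store the picture. Opening the file in text mode instead would fail, because image data is bytes and not a string.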

Let the loop complete its job.

Now you have a new script to show off.

You did a great job. Now you can scrape any type of data you like from a normal website.

What to do Now???

Try reading the documentation of beautifulsoup4, requests, lxml or any other parser if you liked this stuff.

Before that, there is one more thing.

You can also fetch image file names from those source URLs.

I prefer to do that with regex, and believe me, it's weird to see those funny-looking patterns at first, so please don't judge.
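Here is one possible pattern for pulling the file name out of a source URL. It is a sketch, not necessarily the exact regex I use, and `filename_from_url` is a hypothetical helper name.

```python
import re

# Grab the last path segment that looks like "name.ext", stopping before any
# "?query" or "#fragment". The (?<!/) part skips the "//" after the scheme,
# so a bare host such as "example.com" is not mistaken for a file name.
FILENAME_PATTERN = re.compile(
    r"(?:^|(?<!/)/)([^/?#]+\.[A-Za-z0-9]+)(?:[?#].*)?$"
)


def filename_from_url(src):
    """Return the file name at the end of a URL, or None if there is none."""
    match = FILENAME_PATTERN.search(src)
    return match.group(1) if match else None


print(filename_from_url("https://cdn.example.com/img/course-480x270.jpg?v=3"))
print(filename_from_url("/static/logo.png"))
print(filename_from_url("https://example.com"))
```

The three calls print `course-480x270.jpg`, `logo.png` and `None`. URLs that end in a slash or have no extension also give `None`, so you would need a fallback name for those.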

Exercise

example.com ! What's that?

Atulit Anand ・ Feb 23 '21 ・ 1 min read

Go to some site like the one displayed above and find out the number of words in the page.

I'd love to hear about your progress in the comments section.

Hope you liked it.

Embrace its power

and make something beautiful.

Have a beautiful day. ✨

Resources:

Cover image
