It is indeed true that faster root cause detection is one of the benefits an APM deployment can unlock. That said, using the root cause detection feature in isolation leads to poor decisions: a root cause deserves attention only when its impact justifies the intervention. To illustrate this, let me walk you through a typical situation that we see in our daily lives:

- Customers are complaining that opening monthly reports is “too slow”.

- The product owner reacts to the recurring complaints and assigns the engineers the task of “let’s make the reports faster”.

- The engineer charged with the request digs into the data exposed by the APM, finds a slow database call and spends a week optimizing the query, making the reports twice as fast.

- The patched version is released and the complaints about reporting disappear.

What is wrong with using APM only for root cause detection?

What was wrong with the approach above? The problem was resolved, so what could have been improved? As it happens, the approach has multiple flaws, so let me walk you through them:

First and foremost, the team does not seem to have any clear performance requirements set. A symptom of this is the missing objective: instead, vague tasks containing phrases such as “too slow” or “make it faster” keep popping up in the backlog.

Performance happens to be only one of the non-functional requirements an engineering team needs to deal with. Usability, security and availability are examples of other such requirements. To have a clear understanding of priorities, the team should understand whether or not to even focus on performance issues. It could very well be that the application is performing well enough and the time should instead be spent on improving other aspects of the product.

Second, the engineering team became aware of the problem only after a number of customer complaints had reached the product manager. As a result of this delay, days or even weeks passed before the task was made a priority for the engineers. During all this time, more and more users got frustrated with the product.

Last but not least, the engineering team focused on improving the aspect the customers were complaining about, which does not always correlate with spending the time on the bottlenecks with the most impact. There might be other aspects of the product that are even slower than the monthly reporting functionality the team was focusing on. As a result, the team might not be spending its performance budget efficiently.

How to improve the situation?

Understanding the problems with the described approach sets the scene for improving. After all, the first step to recovery is admitting that you have a problem, isn’t it? So let me walk you through how to really benefit from an APM in situations where performance issues occur.

Start by setting the performance objective(s) for the service. This builds the foundation for success and removes the vague “make it faster” and “too slow” tickets from the issue tracker. Having a clear requirement such as “The median response time must be under 800ms and 99% of the responses must complete in under 5,000ms” builds a clear and measurable target towards which to optimize.

Knowing where to set the latency thresholds might sound complex. Indeed, the ultimate knowledge in this field can be derived via understanding the correlation of performance metrics and business metrics. This is specific to the business the company is running. For example:

- For digital media, improving the median latency by 20% increases the engagement with content by 6%

- For e-commerce, the conversion rate in a funnel step increases by 17% if the first contentful paint of the step is reduced from 2,500ms to 1,300ms
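As a rough illustration of what such a correlation analysis could look like, the sketch below groups sessions into latency buckets and computes the conversion rate for each bucket. The session records, the `conversion_by_latency_bucket` helper and the 1,000ms bucket size are all made up for this example; real numbers would come from joining your APM or RUM data with your business data.

```python
from collections import defaultdict

# Hypothetical sessions: (first contentful paint in ms, did the user convert?).
# The values are invented purely for illustration.
sessions = [
    (900, True), (1200, True), (1300, False), (1800, True),
    (2100, False), (2400, False), (2600, False), (3200, False),
]


def conversion_by_latency_bucket(sessions, bucket_ms=1000):
    """Group sessions into latency buckets and return the conversion rate per bucket."""
    totals = defaultdict(int)
    converted = defaultdict(int)
    for latency_ms, did_convert in sessions:
        # Bucket key is the lower bound of the bucket, e.g. 1300 -> 1000.
        bucket = (latency_ms // bucket_ms) * bucket_ms
        totals[bucket] += 1
        if did_convert:
            converted[bucket] += 1
    return {bucket: converted[bucket] / totals[bucket] for bucket in sorted(totals)}


rates = conversion_by_latency_bucket(sessions)
for bucket, rate in rates.items():
    print(f"{bucket}-{bucket + 999} ms: {rate:.0%} converted")
```

With enough real sessions per bucket, a table like this hints at where a latency threshold starts to cost conversions.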

Gaining this knowledge might not be possible initially, as it requires correlating domain-specific business data with performance metrics. For most companies, however, the starting objectives can be set using a simple three-step approach:

- Understand the status quo by analyzing the current baseline. As a result, you will understand the performance your users are currently experiencing. This performance should be expressed as a latency distribution in percentiles, for example: the median latency is 900ms and the 99th percentile is 6,200ms.

- Pick a few low-hanging and juicy improvements from the root causes detected by the APM. This typically requires allocating a few man-weeks of engineering time to tackle 2-3 bottlenecks that have a big impact and are easy to mitigate.

- After the improvements are released, measure the performance again using the very same latency distribution. You should see better performance: for example, the same two latency-describing metrics could now be 800ms for the median and 4,500ms for the 99th percentile.
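The measuring part of these three steps can be sketched in a few lines. This is a minimal example, assuming you can export raw response times from your APM as a list of milliseconds; the `percentile`, `latency_summary` and `compare` helpers are hypothetical names, and the tiny sample lists are made up so that they reproduce the numbers used above. Most APMs calculate these percentiles for you.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def latency_summary(samples_ms):
    """Describe a latency distribution with the two metrics used in this post."""
    return {"median": percentile(samples_ms, 50), "p99": percentile(samples_ms, 99)}


def compare(before, after):
    """Difference per metric; negative numbers mean the service got faster."""
    return {name: after[name] - before[name] for name in before}


# Made-up response times in ms, before and after the improvements.
baseline = latency_summary([400, 700, 900, 1100, 6200])
improved = latency_summary([350, 600, 800, 1000, 4500])

print("before:", baseline)
print("after: ", improved)
print("change:", compare(baseline, improved))
```

The important part is using the same calculation before and after the release, so that the two baselines are comparable.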

Use this new baseline to formalize the objective. Using the numbers from the examples above again, the team might agree that:

- Median response time of the API must be under 850ms for 99% of the time in any given month.

- 99th percentile of the response time of the API must be under 5,000ms for 90% of the time in any given month.
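One way to read “for 99% of the time” is to split the month into equally sized time windows and count the share of windows in which the metric stayed under the threshold. The sketch below follows that interpretation, which is an assumption on my part, as are the `compliance` and `slo_met` helper names and the made-up per-window values. Your APM may define compliance differently.

```python
def compliance(window_values_ms, threshold_ms):
    """Fraction of time windows in which the metric stayed under the threshold."""
    if not window_values_ms:
        raise ValueError("no windows")
    good = sum(1 for value in window_values_ms if value < threshold_ms)
    return good / len(window_values_ms)


def slo_met(window_values_ms, threshold_ms, target):
    """True if the share of good windows reaches the agreed target."""
    return compliance(window_values_ms, threshold_ms) >= target


# Made-up data: one value per time window, already aggregated by the APM.
window_medians = [700] * 199 + [900]       # median per window, in ms
window_p99s = [4000] * 170 + [5500] * 30   # 99th percentile per window, in ms

median_ok = slo_met(window_medians, threshold_ms=850, target=0.99)
p99_ok = slo_met(window_p99s, threshold_ms=5000, target=0.90)

print("median objective met:", median_ok)
print("p99 objective met:", p99_ok)
```

In this made-up month the median objective holds, while the 99th percentile objective is breached because too many windows exceeded 5,000ms.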

After going through this exercise, you now have a clear objective to meet and you can continue with the next steps to reap the full benefits of an APM deployment.

Set up alerting. The objective here is to use the APM data to be aware immediately whenever the performance objectives are no longer met. This removes the lag in waiting for users to complain and significantly cuts down the time it takes to mitigate the issues when the performance drops.

The key to successful alerting lies in accepting that alerts should be based on symptoms and not on root causes or technical metrics. What this means in practice is that instead of alerting on increased memory usage or network traffic, you should alert your team based on what the real users experience. APMs (and RUMs) are a great source for such metrics.

Google, with its Site Reliability Engineering movement, has done a lot to educate the market in this regard, but there is still significant resistance in the field. I can only encourage you to give it a try: have a week where you disable the dozens of different alerts based on technical metrics and replace them with APM or RUM based alerts. You will then be alerted only in situations where the throughput is abnormal, the error rate exceeds a particular threshold or the performance experienced by users degrades. I am willing to bet that you will be pleasantly surprised by the quality of the signal. Brace yourself for significantly fewer false positives and negatives, and gain confidence in your alerts!

Setting up the alerts in practice is simple. Pick the underlying metric, set the SLO-based thresholds and stream the alerts to a channel already in use (Slack, PagerDuty, email, …). For performance alerts, expanding on the example used in the previous section, the team can set up alerts that fire when the median response time has exceeded 850ms or the 99th percentile of the response times has exceeded 5,000ms.
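In most APMs this is a matter of configuration, but the logic behind such a rule is easy to sketch. The example below is only an illustration: `AlertRule`, `evaluate` and `notify` are hypothetical names, the response times are made up, and `notify` merely prints where a real setup would post to Slack, PagerDuty or email.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    name: str
    pct: float           # which percentile of the response times to watch
    threshold_ms: float  # alert when that percentile exceeds this value


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def evaluate(rules, recent_samples_ms):
    """Return one message for every rule whose threshold is exceeded."""
    messages = []
    for rule in rules:
        observed = percentile(recent_samples_ms, rule.pct)
        if observed > rule.threshold_ms:
            messages.append(f"{rule.name}: {observed} ms exceeds {rule.threshold_ms} ms")
    return messages


def notify(message):
    # Placeholder: a real setup would send this to the team's alert channel.
    print("ALERT", message)


# Thresholds taken from the objectives used in this post.
rules = [
    AlertRule("median response time", pct=50, threshold_ms=850),
    AlertRule("p99 response time", pct=99, threshold_ms=5000),
]

# Made-up response times (ms) from the most recent evaluation window.
recent = [300, 500, 700, 900, 1200, 5200]

alerts = evaluate(rules, recent)
for message in alerts:
    notify(message)
```

Note that both rules look only at what users experience. Nothing in them refers to memory, CPU or network metrics.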

Work on the root causes with the most impact. In situations where the performance SLO is breached and the alert has been triggered, it is crucial to make sure the engineering time is spent on investigating and improving the bottlenecks that have contributed the most towards the breach.

Ironically, this is often not the case. Time and time again I have seen teams stubbornly shaving off milliseconds from a particular feature in situations where the real problems are several orders of magnitude larger and located in completely unrelated sections of the code. Use the power of your APM and, before improving anything, make sure that the improvements are carried out in the most problematic areas of the source code.
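To make “impact” more tangible, here is a minimal sketch that ranks root causes by the total time they added to slow requests. The record format, the root cause labels and the `rank_by_impact` helper are assumptions made for this example, and total added time is only one possible definition of impact.

```python
from collections import defaultdict

# Hypothetical export: (root cause, time it added to one slow request in ms).
slow_requests = [
    ("slow SQL query", 4200),
    ("slow SQL query", 3900),
    ("lock contention", 150),
    ("lock contention", 150),
    ("lock contention", 150),
    ("remote HTTP call", 1200),
    ("remote HTTP call", 900),
]


def rank_by_impact(records):
    """Return (root cause, occurrences, total ms added), largest total first."""
    total_ms = defaultdict(int)
    count = defaultdict(int)
    for cause, added_ms in records:
        total_ms[cause] += added_ms
        count[cause] += 1
    ranked = sorted(total_ms, key=total_ms.get, reverse=True)
    return [(cause, count[cause], total_ms[cause]) for cause in ranked]


ranking = rank_by_impact(slow_requests)
for cause, occurrences, added_ms in ranking:
    print(f"{cause}: {occurrences} requests, {added_ms} ms in total")
```

In this made-up data set the most frequent root cause, the lock contention, has the smallest impact, which is exactly the kind of bottleneck a team can lose days on.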

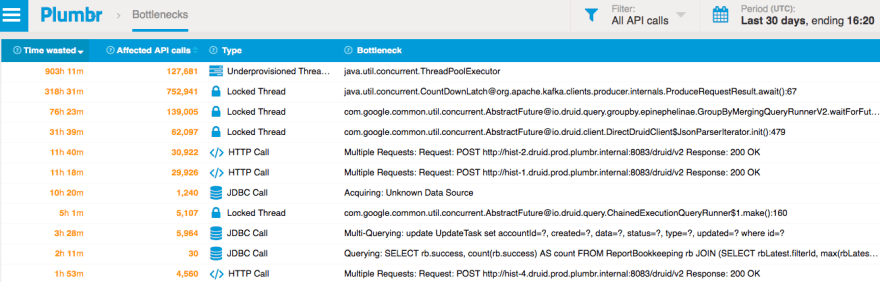

The best APM vendors make your job in this field trivial by ranking the different bottlenecks based on their impact. As a result, you would have information similar to following at your fingertips, giving you confidence that the improvements must focus on mitigating the first bottleneck:

Take-away

Any APM worth adopting will simplify root cause resolution by locating the bottlenecks and errors in your application via distributed traces and additional technology-specific instrumentation. However, using the APM only for the root cause resolution process is handicapping you. Expand how you are using the APM to really stay in control of the performance and availability of the digital services you are monitoring.

Being a strong believer in this, I can encourage you to take Plumbr APM out for a test run. Grab your 13-day free trial to enjoy the benefits described above.
