This article was originally posted by Derrick Mwiti on neptune.ml/blog where you can find more in-depth articles for machine learning practitioners.

In this piece, we’ll take a plunge into the world of image segmentation using deep learning. We’ll talk about:

- What image segmentation is and its two main types

- Image segmentation architectures

- Loss functions used in image segmentation

- Datasets you can use to get started

- Frameworks that you can use for your image segmentation projects

Let's dive in.

What is Image Segmentation?

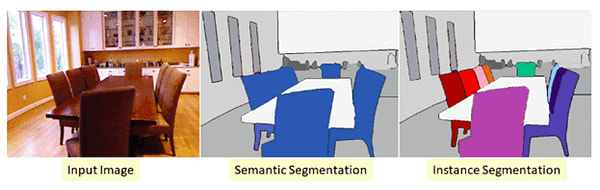

As the term suggests, image segmentation is the process of dividing an image into multiple segments. In this process, every pixel in the image is associated with a class label (an object type). There are two major types of image segmentation: semantic segmentation and instance segmentation.

In semantic segmentation, all objects of the same type are marked with one class label, while in instance segmentation each individual object of that type gets its own separate label.
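To make the difference concrete, here is a toy sketch (Python with NumPy, an assumption; the 4x6 scene and all names are hypothetical) of how the two kinds of label maps might look for the same image containing two objects of one class.

```python
import numpy as np

# Toy 4x6 scene: background (0) and two separate objects of the same class.
# Semantic mask: every pixel of that class gets the same label, 1.
semantic_mask = np.array([
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 1, 1],
    [0, 0, 0, 0, 1, 1],
    [0, 0, 0, 0, 0, 0],
])

# Instance mask: the same pixels, but each object gets its own ID (1 and 2).
instance_mask = np.array([
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 2, 2],
    [0, 0, 0, 0, 2, 2],
    [0, 0, 0, 0, 0, 0],
])

def count_labels(mask):
    """Number of distinct non-background labels in a mask."""
    return len(np.unique(mask[mask > 0]))

print(count_labels(semantic_mask))  # -> 1
print(count_labels(instance_mask))  # -> 2
```

Both masks mark exactly the same foreground pixels; only the labeling of those pixels differs.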

Image Segmentation Architectures

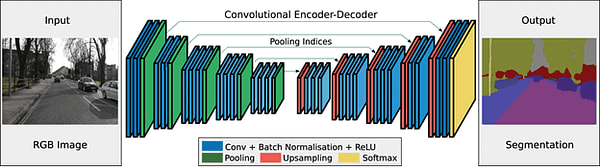

The basic architecture in image segmentation consists of an encoder and a decoder.

The encoder extracts features from the image through filters (convolutions), typically reducing the spatial resolution as it goes. The decoder is responsible for generating the final output, which is usually a segmentation mask that assigns a class to every pixel and thereby outlines each object. Most segmentation architectures follow this design or a variant of it.
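The sketch below shows the encoder-decoder idea in terms of tensor shapes. It is a toy under stated assumptions: Python with NumPy, random untrained weights, 1x1 convolutions standing in for the larger filters real encoders use, 2x2 max pooling to downsample, and nearest-neighbour upsampling in the decoder. All function names are hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def pointwise_conv(x, weight):
    """1x1 convolution: mixes channels at every pixel. x: (C_in, H, W), weight: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)

def max_pool_2x2(x):
    """Halve height and width by taking the max over each 2x2 window."""
    c, h, w = x.shape
    return x[:, : h - h % 2, : w - w % 2].reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample_2x(x):
    """Nearest-neighbour upsampling: repeat every pixel twice along H and W."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def toy_encoder_decoder(image, num_classes, rng):
    c_in = image.shape[0]
    w1 = rng.standard_normal((16, c_in))
    w2 = rng.standard_normal((32, 16))
    w_out = rng.standard_normal((num_classes, 32))

    # Encoder: extract features while shrinking the spatial resolution.
    f1 = max_pool_2x2(relu(pointwise_conv(image, w1)))  # (16, H/2, W/2)
    f2 = max_pool_2x2(relu(pointwise_conv(f1, w2)))     # (32, H/4, W/4)

    # Decoder: restore the input resolution and score each class per pixel.
    up = upsample_2x(upsample_2x(f2))                   # (32, H, W)
    logits = pointwise_conv(up, w_out)                  # (num_classes, H, W)
    return logits.argmax(axis=0)                        # (H, W) segmentation mask

rng = np.random.default_rng(0)
image = rng.standard_normal((3, 64, 64))
mask = toy_encoder_decoder(image, num_classes=5, rng=rng)
print(mask.shape)  # (64, 64)
```

The key point is the shape contract: whatever happens inside, the decoder must return one class index per input pixel.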

Let's look at a couple.

U-Net

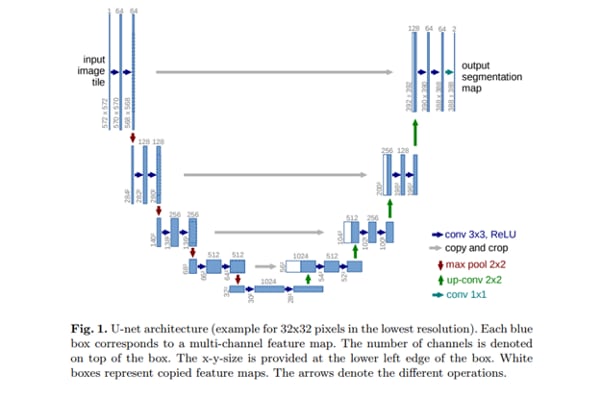

U-Net is a convolutional neural network originally developed for segmenting biomedical images. When visualized, its architecture looks like the letter U, hence the name U-Net. It is made up of two parts: the contracting path on the left and the expansive path on the right. The purpose of the contracting path is to capture context, while the role of the expansive path is to aid in precise localization.

The contracting path is built from repeated blocks of two three-by-three convolutions, each followed by a rectified linear unit (ReLU), and a two-by-two max-pooling operation for downsampling. The expansive path mirrors it: each step upsamples the feature map and concatenates it with the corresponding feature map from the contracting path before applying two more three-by-three convolutions.

U-Net's full implementation can be found here.
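Because the convolutions in the original U-Net use no padding, each three-by-three convolution trims one pixel from every border. The helper below (plain Python; the function name is hypothetical) traces the spatial size through four contracting steps, the bottom of the U, and four expansive steps, assuming each expansive step doubles the size with a 2x2 up-convolution before its two convolutions. For the 572x572 input used in the U-Net paper, it reproduces the 388x388 output map.

```python
def unet_output_size(input_size, depth=4):
    """Trace the spatial size of an unpadded U-Net with `depth` pooling steps."""
    size = input_size
    for _ in range(depth):          # contracting path
        size -= 4                   # two 3x3 'valid' convolutions, each trims 2
        if size % 2:
            raise ValueError(f"size {size} cannot be max-pooled 2x2 evenly")
        size //= 2                  # 2x2 max pooling
    size -= 4                       # two 3x3 convolutions at the bottom of the U
    for _ in range(depth):          # expansive path
        size *= 2                   # 2x2 up-convolution doubles the size
        size -= 4                   # two 3x3 'valid' convolutions
    return size

print(unet_output_size(572))  # 388
```

This is also why U-Net crops the contracting-path feature maps before concatenating them: they are larger than the upsampled maps they are joined with.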

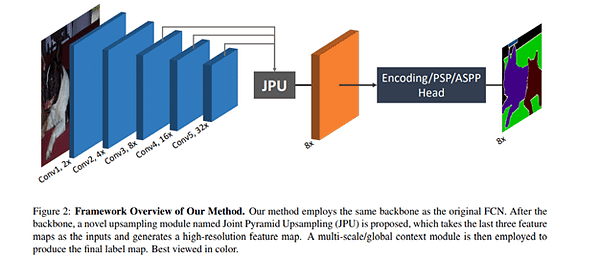

FastFCN - Fast Fully Convolutional Network

In this architecture, a Joint Pyramid Upsampling (JPU) module replaces dilated convolutions, which consume a lot of memory and time. It uses a fully convolutional network (FCN) at its core and applies JPU for upsampling: JPU upsamples the low-resolution feature maps to high-resolution feature maps.
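A rough way to see why dilated convolutions are expensive: backbones that use them typically keep an output stride of 8 (feature maps at 1/8 of the input resolution) instead of the usual 32, so their later layers process many more spatial positions. The arithmetic below, for an assumed 512x512 input and a hypothetical helper name, shows a 16x difference; the idea behind FastFCN is to keep the cheaper stride-32 backbone and let JPU produce the high-resolution maps.

```python
def feature_map_positions(height, width, output_stride):
    """Number of spatial positions in a feature map at the given output stride."""
    return (height // output_stride) * (width // output_stride)

dilated = feature_map_positions(512, 512, output_stride=8)   # 64 * 64 = 4096
plain = feature_map_positions(512, 512, output_stride=32)    # 16 * 16 = 256
print(dilated / plain)  # 16.0
```

Every convolution in those later stages does per-position work, so both memory and compute scale roughly with this ratio.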

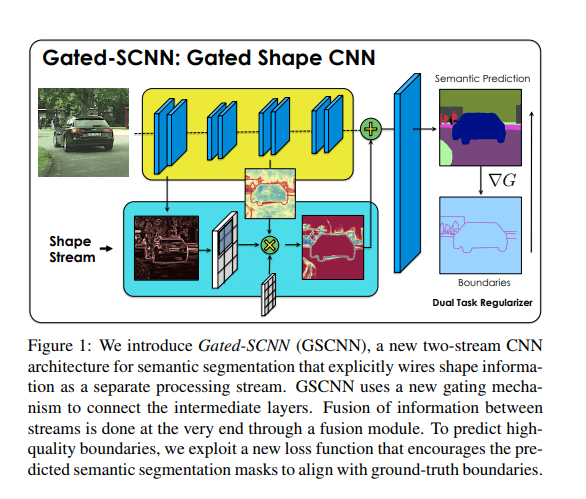

Gated-SCNN

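Gated-SCNN (Gated Shape CNN) is a two-stream CNN architecture. Alongside the regular stream, a separate branch, the shape stream, processes image shape information with a focus on object boundaries. Gates driven by the regular stream's higher-level activations control what information flows through the shape stream.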

You can implement it by checking out the code here.
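The gating idea can be sketched as follows. This is a simplified illustration, not the paper's exact layer: we assume Python with NumPy, concatenate the shape-stream and regular-stream features, reduce them with a 1x1 convolution to a single-channel map, squash it with a sigmoid into an attention map alpha in (0, 1), and multiply the shape features by alpha. The function name, shapes, and random weights are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gate_shape_features(shape_feat, regular_feat, weight, bias=0.0):
    """Simplified gate. shape_feat: (Cs, H, W), regular_feat: (Cr, H, W), weight: (Cs + Cr,)."""
    stacked = np.concatenate([shape_feat, regular_feat], axis=0)     # (Cs + Cr, H, W)
    alpha = sigmoid(np.einsum("c,chw->hw", weight, stacked) + bias)  # (H, W) attention map
    return shape_feat * alpha[None, :, :], alpha                     # suppressed where alpha ~ 0

rng = np.random.default_rng(0)
shape_feat = rng.standard_normal((4, 8, 8))
regular_feat = rng.standard_normal((16, 8, 8))
gated, alpha = gate_shape_features(shape_feat, regular_feat, rng.standard_normal(20))
print(gated.shape, alpha.shape)
```

Because alpha is computed per pixel, the gate can pass shape features near boundaries while suppressing them elsewhere.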

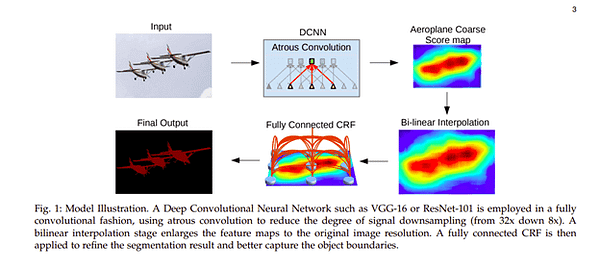

DeepLab

In this architecture, convolutions with upsampled filters, called atrous convolutions, are used for tasks that involve dense prediction. Objects are segmented at multiple scales via atrous spatial pyramid pooling (ASPP). Finally, the localization of object boundaries is improved by combining the DCNN outputs with a fully connected conditional random field (CRF). Atrous convolution is achieved either by upsampling the filters through the insertion of zeros or, equivalently, by sparsely sampling the input feature maps.

You can try its implementation on either PyTorch or TensorFlow.
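Both descriptions of atrous convolution can be checked numerically. The sketch below (Python with NumPy, an assumption; function names are hypothetical) inserts rate - 1 zeros between the taps of a 3x3 filter, runs an ordinary unpadded convolution with the enlarged filter, and confirms that the result equals applying the original filter to every rate-th input pixel.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dilate_kernel(kernel, rate):
    """Insert (rate - 1) zeros between neighbouring filter taps."""
    k_h, k_w = kernel.shape
    dilated = np.zeros(((k_h - 1) * rate + 1, (k_w - 1) * rate + 1), dtype=kernel.dtype)
    dilated[::rate, ::rate] = kernel
    return dilated

def conv2d_valid(x, kernel):
    """Plain 2D cross-correlation without padding (what most DL frameworks call convolution)."""
    windows = sliding_window_view(x, kernel.shape)   # (H-kh+1, W-kw+1, kh, kw)
    return np.einsum("ijkl,kl->ij", windows, kernel)

x = np.arange(49, dtype=float).reshape(7, 7)
kernel = np.arange(9, dtype=float).reshape(3, 3)

big = dilate_kernel(kernel, rate=2)   # 3x3 filter becomes 5x5: larger field of view, same 9 weights
out = conv2d_valid(x, big)            # shape (3, 3)

# Same value via sparse sampling: take every 2nd pixel of a 5x5 input patch.
i, j = 1, 1
sparse = np.sum(kernel * x[i:i + 5:2, j:j + 5:2])
print(big.shape, out.shape, np.isclose(out[i, j], sparse))
```

The receptive field grows from 3x3 to 5x5 without adding parameters, which is what lets DeepLab enlarge context cheaply.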

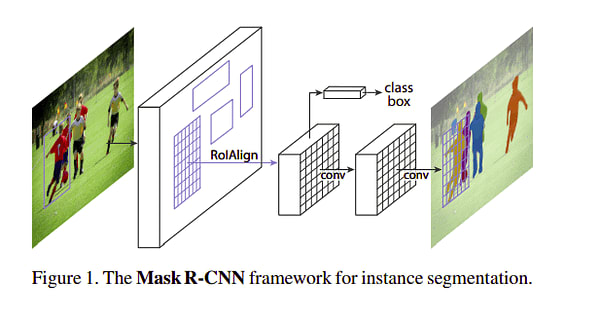

Mask R-CNN

Mask R-CNN performs instance segmentation: it classifies and localizes each object with a bounding box and also predicts a segmentation mask that classifies each pixel inside that region. Every region of interest gets a segmentation mask, so the final output is a class label, a bounding box, and a mask for each object. The architecture is an extension of Faster R-CNN, which is made up of a deep convolutional network that proposes regions (the Region Proposal Network) and a detector that uses those regions. Mask R-CNN adds a mask-prediction branch in parallel with the existing branch for classification and bounding-box regression.

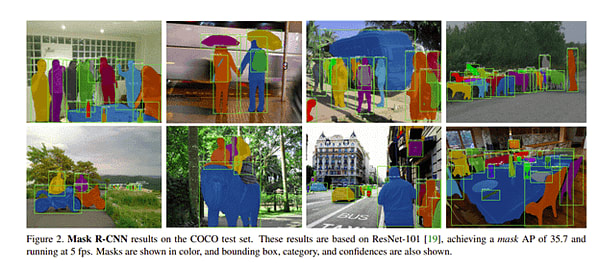

Here is an image of the result obtained on the COCO test set.
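One design detail worth knowing: in the Mask R-CNN paper, the mask branch predicts one binary mask per class for each region of interest, and the mask loss is an average per-pixel binary cross-entropy computed only on the mask of the region's ground-truth class, so classes do not compete for pixels. A minimal sketch of that loss for a single region follows, assuming Python with NumPy; the function name, the 28x28 mask size, and the 3 classes are illustrative.

```python
import numpy as np

def mask_loss_for_roi(mask_logits, gt_class, gt_mask):
    """Average per-pixel binary cross-entropy on the ground-truth class's mask only.

    mask_logits: (K, m, m) one logit map per class for a single region of interest.
    gt_class:    integer class index of the region.
    gt_mask:     (m, m) binary target mask.
    """
    logits = mask_logits[gt_class]
    # Numerically stable BCE with logits: log(1 + e^x) - y * x
    bce = np.logaddexp(0.0, logits) - gt_mask * logits
    return float(bce.mean())

rng = np.random.default_rng(0)
logits = rng.standard_normal((3, 28, 28))
target = (rng.random((28, 28)) > 0.5).astype(float)

before = mask_loss_for_roi(logits, gt_class=1, gt_mask=target)
logits[0] += 10.0   # changing another class's mask does not affect the loss
after = mask_loss_for_roi(logits, gt_class=1, gt_mask=target)
print(before == after)  # True
```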

Image Segmentation Loss functions

Semantic segmentation models usually use a simple categorical cross-entropy loss during training. However, if you are interested in getting granular information from an image, you will need to turn to slightly more advanced loss functions.
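As a baseline for the losses discussed in this section, here is per-pixel categorical cross-entropy written out in Python with NumPy (an assumption; the function name is hypothetical). It is simply the classification loss applied independently at every pixel and averaged.

```python
import numpy as np

def pixelwise_cross_entropy(logits, target):
    """Mean categorical cross-entropy over all pixels.

    logits: (C, H, W) unnormalized class scores; target: (H, W) integer class map.
    """
    # Log-softmax over the class axis, shifted by the max for numerical stability.
    shifted = logits - logits.max(axis=0, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    h, w = target.shape
    rows, cols = np.arange(h)[:, None], np.arange(w)[None, :]
    # Pick the log-probability of the correct class at every pixel.
    return float(-log_probs[target, rows, cols].mean())

# With all-zero logits every class gets probability 1/C, so the loss is log(C).
print(pixelwise_cross_entropy(np.zeros((4, 8, 8)), np.zeros((8, 8), dtype=int)))  # ~1.386 (log 4)
```

Because every pixel counts equally, large background regions can dominate this loss, which is the weakness the following losses address.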

Let's go through a couple of these more advanced losses.

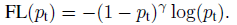

Focal Loss

This loss is an improvement on the standard cross-entropy criterion. It reshapes the loss so that well-classified examples are down-weighted, which reduces the impact of class imbalance. Concretely, the cross-entropy loss is scaled by a factor that decays to zero as the confidence in the correct class increases. This scaling factor automatically down-weights the contribution of easy examples at training time and focuses training on the hard ones.
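For a binary mask, the focal loss can be written FL(p_t) = -alpha_t (1 - p_t)^gamma log(p_t), where p_t is the predicted probability of the true class and (1 - p_t)^gamma is the scaling factor described above. A minimal Python/NumPy sketch follows (the function name is hypothetical); gamma = 2 and alpha = 0.25 are the defaults reported in the original focal loss paper, but treat them as starting points for your own data.

```python
import numpy as np

def binary_focal_loss(probs, target, gamma=2.0, alpha=0.25, eps=1e-7):
    """Mean focal loss for per-pixel foreground probabilities and a {0, 1} target mask."""
    probs = np.clip(probs, eps, 1.0 - eps)
    p_t = np.where(target == 1, probs, 1.0 - probs)        # probability of the true class
    alpha_t = np.where(target == 1, alpha, 1.0 - alpha)    # class-balancing weight
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))

# An easy, well-classified pixel (p_t = 0.9): the (1 - p_t)^2 = 0.01 factor
# shrinks its loss to about 1% of the plain cross-entropy -log(0.9).
easy = binary_focal_loss(np.array([0.9]), np.array([1]), alpha=1.0)
print(easy, -np.log(0.9))
```

Setting gamma = 0 removes the modulating factor and recovers (alpha-weighted) cross-entropy.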

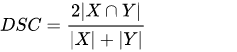

Dice loss

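This loss is computed from the smooth Dice coefficient, which measures the overlap between the predicted and ground-truth masks; the loss is typically one minus that coefficient. It is one of the most commonly used losses in segmentation problems.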
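A minimal Python/NumPy sketch of a soft Dice loss for a binary mask follows. The function name is hypothetical, and smooth = 1 is a common but arbitrary choice (an assumption here); the smoothing term keeps the ratio defined when both masks are empty.

```python
import numpy as np

def soft_dice_loss(probs, target, smooth=1.0):
    """1 - smoothed Dice coefficient between predicted probabilities and a binary mask."""
    probs = probs.ravel().astype(float)
    target = target.ravel().astype(float)
    intersection = np.sum(probs * target)
    dice = (2.0 * intersection + smooth) / (probs.sum() + target.sum() + smooth)
    return 1.0 - dice

target = np.array([[1, 1], [0, 0]])
print(soft_dice_loss(target.astype(float), target))            # 0.0 for a perfect match
print(soft_dice_loss(np.array([[0., 0.], [1., 1.]]), target))  # ~0.8: no overlap, only smoothing remains
```

Because the score is a ratio of overlap to total mask size, a small object counts as much as a large one, which is why Dice is popular for imbalanced foreground/background problems.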

Intersection over Union (IoU)-balanced Loss

The IoU-balanced classification loss aims to increase the gradient of samples with high IoU and decrease the gradient of samples with low IoU. In this way, the localization accuracy of object detection models is improved.
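As we understand the IoU-balanced classification loss, each positive sample's cross-entropy is multiplied by a weight proportional to IoU^eta, and the weights are rescaled so the total loss equals the unweighted sum; high-IoU samples therefore receive larger gradients and low-IoU samples smaller ones. The sketch below assumes Python with NumPy, precomputed per-sample cross-entropy values and IoUs, a hypothetical function name, and an illustrative eta = 1.5; check the original paper for the exact formulation.

```python
import numpy as np

def iou_balanced_weights(ce_per_sample, ious, eta=1.5):
    """Per-sample weights proportional to IoU**eta, normalized to keep the total loss unchanged."""
    raw = ious ** eta
    return raw * ce_per_sample.sum() / np.sum(raw * ce_per_sample)

ce = np.array([0.7, 0.7, 0.7])
ious = np.array([0.5, 0.7, 0.9])
w = iou_balanced_weights(ce, ious)
print(w)                # grows with IoU
print(np.sum(w * ce))   # equals ce.sum() = 2.1 (up to rounding)
```

Keeping the total unchanged means the overall loss scale, and hence the learning-rate tuning, stays the same; only the balance between samples shifts.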

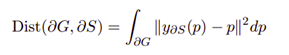

Boundary loss

One variant of the boundary loss is designed for tasks with highly unbalanced segmentations. This loss takes the form of a distance metric on the space of contours, not regions. In this manner, it tackles the problems that regional losses face in highly imbalanced segmentation tasks.
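A toy sketch of the boundary loss in the form we understand it: precompute a signed distance map of the ground-truth boundary (negative inside the object, positive outside) and take the mean of that map weighted by the predicted foreground probabilities, so mass placed far outside the object is penalized and mass deep inside is rewarded. Assumptions: Python with NumPy, a brute-force distance that measures to the nearest pixel of the other region (an approximation of the distance to the contour), and hypothetical function names. In practice this loss is usually combined with a regional loss such as Dice.

```python
import numpy as np

def signed_distance_map(gt):
    """Approximate signed distance to the object boundary: negative inside, positive outside.

    Brute force (O(N^2) in the number of pixels), so only suitable for small toy masks.
    """
    gt = gt.astype(bool)
    coords = np.indices(gt.shape).reshape(2, -1).T   # (N, 2) pixel coordinates
    inside = gt.ravel()
    if inside.all() or not inside.any():
        return np.zeros(gt.shape)                    # no boundary to measure from

    def dist_to(points):
        diff = coords[:, None, :] - points[None, :, :]
        return np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)

    d_to_fg = dist_to(coords[inside])                # distance to nearest object pixel
    d_to_bg = dist_to(coords[~inside])               # distance to nearest background pixel
    return np.where(inside, -d_to_bg, d_to_fg).reshape(gt.shape)

def boundary_loss(probs, gt):
    """Mean of predicted foreground probability weighted by the signed distance map."""
    return float(np.mean(signed_distance_map(gt) * probs))

gt = np.zeros((8, 8))
gt[2:6, 2:6] = 1
shifted = np.zeros((8, 8))
shifted[2:6, 4:8] = 1
print(boundary_loss(gt, gt), boundary_loss(shifted, gt))  # the perfect mask scores lower
```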

Weighted cross-entropy

In one variant of cross-entropy, all positive examples are weighted by a certain coefficient. It is used in scenarios that involve class imbalance.
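A minimal Python/NumPy sketch of weighted binary cross-entropy follows; the function name is hypothetical and `pos_weight` plays the role of the coefficient. A value above 1 penalizes missed positives (false negatives) more heavily, which helps when the foreground is rare.

```python
import numpy as np

def weighted_binary_cross_entropy(probs, target, pos_weight, eps=1e-7):
    """Binary cross-entropy where every positive (target == 1) pixel is scaled by pos_weight."""
    probs = np.clip(probs, eps, 1.0 - eps)
    loss = -(pos_weight * target * np.log(probs) + (1.0 - target) * np.log(1.0 - probs))
    return float(loss.mean())

probs = np.array([0.3, 0.8, 0.1])
target = np.array([1.0, 1.0, 0.0])
plain = weighted_binary_cross_entropy(probs, target, pos_weight=1.0)
weighted = weighted_binary_cross_entropy(probs, target, pos_weight=5.0)
print(plain, weighted)  # the poorly predicted positive now dominates the loss
```

With `pos_weight=1.0` this reduces to ordinary binary cross-entropy.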

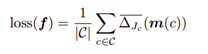

Lovász-Softmax loss

This loss directly optimizes the mean intersection-over-union (IoU) in neural networks, based on the convex Lovász extension of submodular losses.
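The core of the Lovász-Softmax loss fits in a few lines. The sketch below is our own Python/NumPy translation of the idea (function names are hypothetical; it is not the authors' reference code): for each class present in the labels, sort the per-pixel errors in decreasing order and take their dot product with the gradient of the Lovász extension of the Jaccard (IoU) loss, then average over classes.

```python
import numpy as np

def lovasz_grad(gt_sorted):
    """Gradient of the Lovász extension of the Jaccard loss w.r.t. sorted errors."""
    gts = gt_sorted.sum()
    intersection = gts - np.cumsum(gt_sorted)
    union = gts + np.cumsum(1.0 - gt_sorted)
    jaccard = 1.0 - intersection / union
    jaccard[1:] = jaccard[1:] - jaccard[:-1]   # successive differences
    return jaccard

def lovasz_softmax(probs, labels):
    """probs: (C, N) softmax outputs for N pixels; labels: (N,) integer classes."""
    losses = []
    for c in range(probs.shape[0]):
        fg = (labels == c).astype(float)
        if fg.sum() == 0:
            continue                           # skip classes absent from the labels
        errors = np.abs(fg - probs[c])
        order = np.argsort(-errors)            # largest errors first
        losses.append(np.dot(errors[order], lovasz_grad(fg[order])))
    return float(np.mean(losses)) if losses else 0.0

labels = np.array([0, 0, 1, 1])
perfect = np.eye(2)[labels].T                  # (2, 4) one-hot probabilities
wrong = np.eye(2)[1 - labels].T
print(lovasz_softmax(perfect, labels), lovasz_softmax(wrong, labels))  # 0.0 and (close to) 1.0
```

For hard 0/1 predictions the value equals one minus the per-class IoU, averaged; for soft probabilities it interpolates smoothly, which is what makes it trainable.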

Other losses worth mentioning are:

- TopK loss whose aim is to ensure that networks concentrate on hard samples during the training process.

- Distance penalized CE loss that directs the network to boundary regions that are hard to segment.

- Sensitivity-Specificity (SS) loss that computes the weighted sum of the mean squared difference of specificity and sensitivity.

- Hausdorff distance (HD) loss that estimates the Hausdorff distance from a convolutional neural network's output.
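Of these, the TopK loss is the easiest to sketch: compute the usual per-pixel cross-entropy, then average only the largest k% of the values so that easy pixels contribute nothing. Assumptions: Python with NumPy, precomputed per-pixel losses, a hypothetical function name, and an illustrative k = 10%.

```python
import numpy as np

def topk_loss(pixel_losses, k_percent=10.0):
    """Average only the k% largest per-pixel losses (the hardest pixels)."""
    flat = np.sort(pixel_losses.ravel())[::-1]              # largest first
    k = max(1, int(round(flat.size * k_percent / 100.0)))
    return float(flat[:k].mean())

losses = np.arange(100, dtype=float)     # stand-in per-pixel cross-entropy values
print(topk_loss(losses, k_percent=10))   # 94.5, the mean of the 10 largest values (90..99)
```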

These are just a few of the loss functions used in image segmentation. To explore many more, check out this repo.

Image Segmentation Datasets

If you are still here, you might be asking yourself where you can find datasets to get started.

Let's look at a few.

Common Objects in COntext - COCO Dataset

COCO is a large-scale object detection, segmentation, and captioning dataset. It defines 91 object categories, 80 of which are annotated for detection and segmentation, and it includes 250,000 people annotated with keypoints. Its download size is 37.57 GiB. It is available under the Apache 2.0 License and can be downloaded from here.

PASCAL Visual Object Classes (PASCAL VOC)

PASCAL has 9963 images with 20 different classes. The training/validation set is a 2GB tar file. The dataset can be downloaded from the official website.

The Cityscapes Dataset

This dataset contains images of city scenes. It can be used to evaluate the performance of vision algorithms in urban scenarios. The dataset can be downloaded from here.

The Cambridge-driving Labeled Video Database - CamVid

This is a motion-based segmentation and recognition dataset. It contains 32 semantic classes. This link contains further explanations and download links to the dataset.

Image Segmentation Frameworks

Now that you are armed with possible datasets, let's mention a few tools/frameworks that you can use to get started.

- FastAI library - given an image, this library can create a mask of the objects in it.

- Sefexa Image Segmentation Tool - Sefexa is a free tool that can be used for semi-automatic image segmentation, image analysis, and the creation of ground truth.

- Deepmask - Deepmask by Facebook Research is a Torch implementation of DeepMask and SharpMask

- MultiPath - This is a Torch implementation of the object detection network from "A MultiPath Network for Object Detection".

- OpenCV - This is an open-source computer vision library with over 2500 optimized algorithms.

- MIScnn - This is an open-source medical image segmentation library. It allows setting up pipelines with state-of-the-art convolutional neural networks and deep learning models in a few lines of code.

- Fritz - Fritz offers several computer vision tools, including image segmentation tools for mobile devices.

Final Thoughts

Hopefully, this article has given you some background on image segmentation, along with some tools and frameworks that you can use to get started.

We’ve covered:

- what image segmentation is,

- a couple of image segmentation architectures,

- some image segmentation losses,

- some image segmentation datasets, and

- image segmentation tools and frameworks.

For more information, check out the links attached to each of the architectures and frameworks.

Happy segmenting!
