In a world where artificial intelligence (AI) transforms how we interact with machines, prompt engineering is a crucial skill. Imagine having a conversation with a robot. The way you frame your questions or inputs – that's the essence of prompt engineering. It's not just about what you ask, but how you ask it. This determines the response you get and the AI's effectiveness in understanding and fulfilling your request.

In this article you will learn:

- Basic Techniques: The importance of chain-of-thought, tree-of-thought, and complexity-based prompting.

- Advanced Techniques: Utilizing least-to-most and self-refine prompting for sophisticated AI interactions.

- Crafting Effective Prompts: Balancing clarity with complexity and providing contextual details.

- Best Practices: Emphasizing specificity, contextuality, and using examples in prompts.

- Mitigating Challenges: Strategies to address prompt injection attacks and data biases.

Prompt engineering guides AI tools to generate accurate and relevant results based on inputs. AI tools, especially LLM-based ones, are getting as common as smartphones. Using these intelligent systems efficiently and effectively depends on how we communicate with them. Human intentions are translated into a language that AI is able to not only understand but effectively respond to through prompt engineering.

Why does this matter? Because as AI becomes more integrated into our daily lives, the clarity and structure of our interactions with it become critical. Just as we had to learn how to "Google things" when it was new, now we have to know how to craft prompts. Whether you're a developer fine-tuning a chatbot, a researcher probing the depths of machine learning, or just a curious user exploring the capabilities of AI, understanding prompt engineering is key to unlocking the full potential of these tools.

Let's dive into the what, why, and how of prompt engineering. From its basic principles to advanced techniques and from its vital importance to practical tips for better interactions, we will unpack the world of prompt engineering in a way that's insightful, practical, and, most importantly, accessible to all. So, let's begin this journey into the heart of AI communication – where every word matters and every prompt is a step towards a more intelligent future.

Input Prompts are what drives Generative AI

In the world of generative AI, the magic starts with input prompts. These tools, whether crafting art, writing code, or generating text, rely on the inputs we provide to make sense of what we're asking for. Think of them as sophisticated but somewhat literal-minded assistants; they need clear and well-structured instructions to deliver the goods. The more precise the prompt, the better the AI understands and responds. It's like programming, but we use natural language instead of code. This reliance on user input is what makes prompt engineering a crucial skill.

The Role of Prompt Engineering in Optimizing AI Outputs

Here's where prompt engineering shines. We can significantly enhance their outputs by fine-tuning the prompts we feed these AI tools. It's not just about getting an answer; it's about getting the right answer, or the most creative solution, or the most relevant piece of information. Effective prompt engineering can mean the difference between an AI delivering a generic response and one that's tailored and insightful. In essence, it's about bridging the gap between human intention and AI capabilities, ensuring that the conversation between humans and machines is as productive and seamless as possible.

Understanding Prompt Engineering

Prompt engineering is a unique blend of creativity and technical skill. It's like being a director in a play where AI is your lead actor. You provide the script – the prompts – and AI brings it to life. The better your script, the better the performance. This discipline is crucial because it steers AI to deliver what we want.

It's not just about getting any response; it's about getting the right one. With prompt engineering, we're not just asking questions; we're crafting a path for AI to follow, ensuring that the result aligns with our goals and expectations. It's a vital tool in our AI toolbox, merging human creativity with machine intelligence to achieve remarkable outcomes.

Mechanics of Prompt Engineering

Prompt engineering is like guiding AI through a maze with precise, detailed directions. For example, asking an AI to "write a story" is like telling a chef to "make dinner." The result could be anything. But if you specify, "write a sci-fi story about a robot learning to paint," it's akin to asking for "a vegetarian lasagna with spinach and mushrooms." You've given the AI a clear path to follow.

This process taps directly into the AI's neural networks, akin to programming a GPS with specific coordinates. The more detailed the prompt, the more accurately the AI can navigate its vast data network to reach the desired outcome. Providing context is like adding landmarks to the route. For instance, if the prompt includes, "The robot should struggle with understanding colors," it gives the AI a thematic landmark to focus on, enriching the story's depth, much like specifying "use fresh herbs" refines the dish's flavor profile.

Applications and Impact

Prompt engineering is very versatile and can be used across various fields. In healthcare, it's like giving doctors a super-tool. By precisely tailoring prompts, AI can assist in diagnosing diseases or suggesting treatments, much like a highly specialized consultant. It's about getting AI to sift through medical data and emerging with valuable, life-saving insights.

In creative industries, prompt engineering is the unseen artist behind the curtain. It generates novel ideas, writes scripts, or even composes music. By directing AI with specific, imaginative prompts, creators can explore new artistic territories, pushing the boundaries of what's possible.

With well-crafted prompts, AI becomes more than just a tool; it becomes a collaborator, capable of understanding and augmenting human intentions. This leads to a more intuitive, effective, and enjoyable experience for users, transforming our interaction with technology from mere transactions to meaningful exchanges.

It's your super smart virtual assistant.

Prompt Engineering - Basic Techniques

Chain-of-Thought Prompting

Example: Asking AI, "How can we reduce carbon emissions in urban areas?" The AI then breaks this down into steps like public transportation, green energy, and urban planning.

Tree-of-Thought Prompting

Example: Asking AI, "What are the solutions to climate change?" The AI explores multiple paths like technology, policy changes, and individual actions.

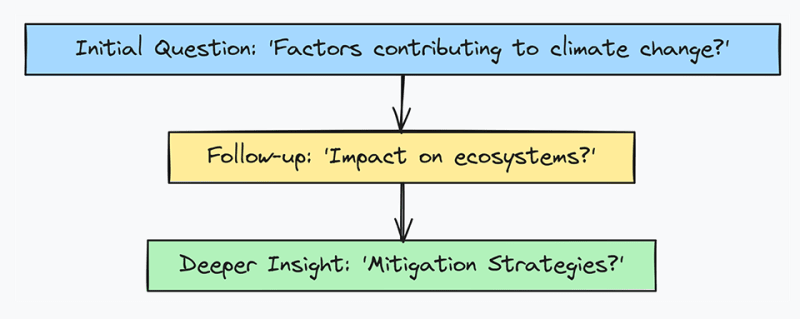

Maieutic Prompting

Example: Leading AI through a series of questions like, "What factors contribute to climate change?" followed by, "How do these factors affect different ecosystems?"

Complexity-Based Prompting

Example: For a complex topic like quantum computing, the prompt would include detailed aspects of quantum theory, whereas a simpler topic would have a more straightforward prompt.

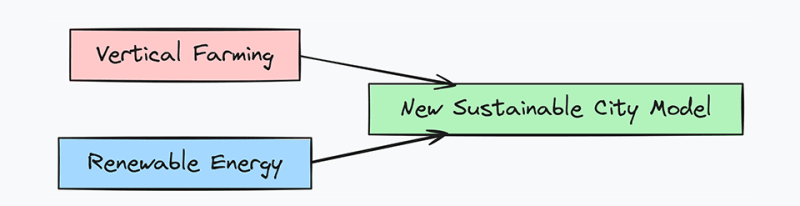

Generated Knowledge Prompting

Example: Asking AI to create a new concept by combining existing ideas, like "Design a sustainable city model that integrates vertical farming and renewable energy."

Prompt Engineering - Advanced Techniques

In advanced prompt engineering, techniques like least-to-most and self-refine prompting take AI interaction to new heights.

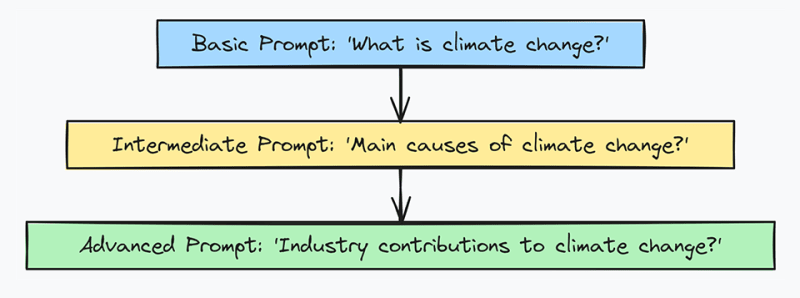

Least-to-Most Prompting

Least-to-most prompting is about guiding AI from simpler concepts to more complex ones. It's like teaching someone to swim, starting in the shallow end before venturing into deeper waters. You begin with a basic query and gradually introduce more complexity, allowing the AI to build upon its initial responses. This approach is particularly effective for tackling intricate problems or concepts, ensuring the AI doesn't get overwhelmed and stays on track.

Example: Starting with a basic prompt like "What is climate change?" and progressively asking more detailed questions like "What are the main causes of climate change?" and then "How do specific industries contribute to climate change?"

Self-Refine Prompting

Self-refine prompting is akin to an artist refining a sketch into a masterpiece. Here, the AI is initially given a general prompt, and then it's asked to refine or elaborate on its response based on specific criteria or feedback. This iterative process leads to more precise, tailored, and sophisticated outputs. It's like having a conversation with the AI, nudging it towards a more nuanced understanding or a more creative solution.

Example: Initially asking AI to "Describe a sustainable city," and then refining with "Focus on energy solutions in the sustainable city," followed by "Detail solar energy use in the city."

Both techniques require a deep understanding of how AI processes information and a creative approach to iterative questioning, making them advanced yet powerful tools in the prompt engineer's toolkit.

Crafting Effective Prompts

Crafting effective prompts is an art that balances clarity and complexity. You must avoid ambiguity and provide enough context to guide AI. Think of it as giving directions; being clear and precise ensures that AI doesn't take a wrong turn. But there's also a delicate balance to maintain. Over-simplify, and the AI might not grasp the full scope of your request. Over-complicate, and it might get lost in the details. You must find the sweet spot where the prompt is simple enough to be clear but complex enough to convey your full intent. This balancing act is crucial.

Examples of crafting effective prompts:

-

Unambiguity and Context:

- Ambiguous: "Tell me about energy."

- Clear and Contextual: "Explain the role of renewable energy in reducing carbon emissions."

- The second prompt provides specific context, guiding AI to a focused and relevant response.

-

Balancing Simplicity and Complexity:

- Too Simple: "Describe New York."

- Too Complex: "Describe New York's history, culture, economy, major landmarks, and its role in American politics."

- Balanced: "Describe the cultural significance of New York City in American history."

- The balanced prompt is specific enough to guide AI while not overwhelming it with too many facets.

Tips for Better Prompt Engineering

- Experimentation and Testing

Experimentation and testing in prompt engineering are all about embracing trial and error for continuous improvement. It's like a chef tweaking recipes: each iteration brings new insights. For instance, if an AI-generated summary of a news article misses key points, refine the prompt to specify the need for "major events and impacts." This iterative process, testing different wordings and structures, hones the AI's understanding and response quality. It's not just about getting it right the first time; it's about learning from each attempt to perfect the communication between human and machine. This approach ensures that AI tools are not just used, but also evolved and adapted to meet changing needs and complexities.

- Best Practices

Specificity and contextuality are key. For instance, instead of a broad prompt like "Write about technology," specify with "Write about the impact of AI in healthcare." This guides the AI to generate targeted content. Similarly, using examples in prompts can further clarify the intent. For example, "Describe a company like Apple in terms of innovation."

Chain-of-thought prompting is another best practice for deeper understanding. This involves leading the AI through a logical progression of thoughts or steps. For instance, in solving a math problem, you might prompt, "First, identify the variables, then apply the quadratic formula." This approach helps the AI break down complex tasks into manageable steps, leading to more insightful and accurate responses.

- Mitigating Challenges

There are two issues that present challenges with LLMs and generative AI: prompt injection attacks and data biases. Prompt injection attacks occur when users input prompts that manipulate AI to produce unintended or harmful outputs. To counter this, we need robust filtering systems that detect and neutralize such malicious inputs.

Data biases are another significant challenge. AI models can inherit biases present in their training data, leading to skewed or unfair responses. Combating this requires a two-pronged approach: diversifying training data and refining prompts to explicitly direct AI away from biased interpretations. For example, if an AI tends to associate medical professions with one gender, prompts can be structured to encourage gender-neutral responses, thus actively working towards fairer, more balanced AI outputs.

The Future of Prompt Engineering

The term "Prompt Engineering" will soon evaporate as we all become experts at it, and our queries become second nature. It's very similar to sending queries to a search engine. However, as AI becomes more sophisticated, prompt engineering will increasingly shape how we interact with these technologies. We must learn it well and avoid developing bad habits from the start.

Imagine a future where prompts are used to personalize educational tools, tailor medical advice, or even create interactive, AI-driven entertainment. The role of prompt engineering will expand beyond current applications, becoming a critical skill in designing and refining AI interactions for a wide range of personal, professional, and societal applications. This evolution will not only enhance AI's effectiveness but also make it more accessible and intuitive for everyone.

Top comments (1)

Really cool guide! Inspiring! Thanks for sharing friend!