Deploying an AWS PrivateLink for a Kafka Cluster

Kafka is a massively scalable way of delivering events to many systems. In the cloud, however, a Kafka cluster is not always reachable from every network that needs it. This is a problem for any kind of application: connecting privately between cloud networks, without exposing anything to the internet, has historically been difficult. A relatively recent AWS feature, PrivateLink, addresses this by letting a service in one AWS Virtual Private Cloud (VPC) be reached privately from another VPC. The provider account puts its service behind a Network Load Balancer (NLB), and the consumer account creates a VPC endpoint that binds to it. The endpoint has IP addresses in the consumer's VPC that clients can route to, and AWS handles the address translation to the NLB in the provider account.

This works well for stateless applications, where it does not matter which instance behind the NLB serves a request. For stateful applications, however, it often matters which host processes the request, especially in a distributed system.

Kafka is one such service. Each partition of a topic has a leader broker that handles all produce requests for that partition, while the other brokers hold follower replicas of it. Consumers traditionally read from the leader too, although since Kafka 2.4 they can be configured to fetch from followers. Either way, clients must be able to reach specific brokers. A client is configured with a list of bootstrap servers, which can be any brokers in the cluster, and uses them only for discovery: a bootstrap server responds with metadata describing every broker (its advertised host and port) and which broker leads each partition. The client then connects directly to the brokers it needs. This is true for both producing and consuming.
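The bootstrap flow can be modeled in a few lines of Python. This is a toy model of the metadata a client receives, not the Kafka client API; the topic name orders and the partition leaders are hypothetical, and the kafka.vpce.dev addresses mirror the scheme used later in this article. It shows the point that matters for PrivateLink: after bootstrapping, the client dials whatever host and port the partition leader advertised, not the bootstrap address it started with.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BrokerInfo:
    """A broker as it appears in a metadata response: id plus advertised address."""
    broker_id: int
    host: str
    port: int


@dataclass(frozen=True)
class ClusterMetadata:
    brokers: dict[int, BrokerInfo]  # broker id -> advertised host/port
    leaders: dict[str, int]         # "topic-partition" -> id of the leader broker


def leader_address(metadata: ClusterMetadata, topic: str, partition: int) -> tuple[str, int]:
    """Return the (host, port) a producer must dial for this partition.

    The bootstrap server only supplied `metadata`; the actual connection goes
    to whatever address the partition leader advertised.
    """
    leader_id = metadata.leaders[f"{topic}-{partition}"]
    broker = metadata.brokers[leader_id]
    return broker.host, broker.port


# Hypothetical metadata a client might receive after bootstrapping through
# PrivateLink: every broker advertises the same hostname on a distinct port.
metadata = ClusterMetadata(
    brokers={
        1: BrokerInfo(1, "kafka.vpce.dev", 6000),
        2: BrokerInfo(2, "kafka.vpce.dev", 6001),
        3: BrokerInfo(3, "kafka.vpce.dev", 6002),
    },
    leaders={"orders-0": 2, "orders-1": 3, "orders-2": 1},
)

print(leader_address(metadata, "orders", 0))  # ('kafka.vpce.dev', 6001)
```

If a leader's advertised address is not reachable from the client's network, producing to that partition fails even though bootstrapping itself succeeded.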

To get around this stateful load-balancing problem, we must craft the load balancer rules so that every request is always delivered to the specific broker the client meant to reach.

Approach

The producer and consumer use cases are exactly the same in this solution.

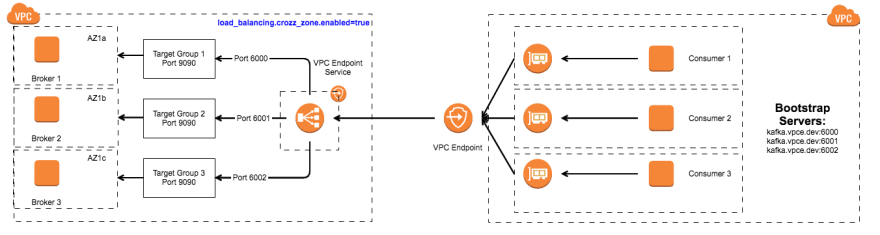

The solution relies on the Network Load Balancer routing traffic by port number, with cross-zone load balancing enabled. The NLB has one listener per broker instance, each on a unique port, and each listener forwards to its own target group containing only that broker. As a result, traffic arriving on a given NLB port is always routed to the same instance in the Kafka cluster. Cross-zone load balancing is needed because a broker may sit in a different Availability Zone than the NLB node that receives the connection; without it, an NLB node only forwards to targets in its own zone.
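As a sketch of the NLB side, the following Python function uses boto3's elbv2 client to enable cross-zone load balancing and create one TCP listener and one single-target group per broker. It assumes the NLB already exists, the brokers are EC2 instances whose VPCE listener is on port 9090, and the target group names (kafka-broker-N) are hypothetical; error handling and idempotency are left out. The client is passed in so the function can be exercised without AWS credentials.

```python
"""Sketch: one NLB listener + one single-target group per Kafka broker.

Assumptions (hypothetical): the NLB already exists, brokers are EC2 instances,
and each broker's VPCE listener is on port 9090. `elbv2` is a boto3 client,
e.g. boto3.client("elbv2").
"""


def configure_kafka_nlb(elbv2, nlb_arn, vpc_id, broker_instance_ids,
                        base_port=6000, broker_port=9090):
    # Cross-zone load balancing lets an NLB node in one AZ forward to a broker
    # in another AZ, which matters because each listener has a single target.
    elbv2.modify_load_balancer_attributes(
        LoadBalancerArn=nlb_arn,
        Attributes=[{"Key": "load_balancing.cross_zone.enabled", "Value": "true"}],
    )
    port_map = {}
    for index, instance_id in enumerate(broker_instance_ids):
        nlb_port = base_port + index
        # Plain TCP passthrough: TLS for the VPCE listener terminates on the broker.
        tg = elbv2.create_target_group(
            Name=f"kafka-broker-{index + 1}",
            Protocol="TCP",
            Port=broker_port,
            VpcId=vpc_id,
            TargetType="instance",
        )
        tg_arn = tg["TargetGroups"][0]["TargetGroupArn"]
        # Exactly one target per group, so routing by port is deterministic.
        elbv2.register_targets(
            TargetGroupArn=tg_arn,
            Targets=[{"Id": instance_id, "Port": broker_port}],
        )
        elbv2.create_listener(
            LoadBalancerArn=nlb_arn,
            Protocol="TCP",
            Port=nlb_port,
            DefaultActions=[{"Type": "forward", "TargetGroupArn": tg_arn}],
        )
        port_map[nlb_port] = (instance_id, broker_port)
    return port_map
```

In practice you would call it as configure_kafka_nlb(boto3.client("elbv2"), nlb_arn, vpc_id, [broker1_id, broker2_id, broker3_id]). The returned dictionary maps each NLB port to the broker instance behind it, which is the mapping the broker configuration has to mirror.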

In the diagram above, the important piece is the port mapping: port 6000 maps to port 9090 on Broker 1, port 6001 to port 9090 on Broker 2, and port 6002 to port 9090 on Broker 3. This mapping scheme is the key to making Kafka connectivity work across PrivateLink. Now we just have to configure each Kafka broker to respond with the matching connection information.

```properties
# Broker 1
advertised.listeners=INTERNAL_PLAINTEXT://:9092,VPCE://kafka.vpce.dev:6000
listeners=INTERNAL_PLAINTEXT://:9092,VPCE://:9090
inter.broker.listener.name=INTERNAL_PLAINTEXT
listener.security.protocol.map=VPCE:SSL,INTERNAL_PLAINTEXT:PLAINTEXT

# Broker 2
advertised.listeners=INTERNAL_PLAINTEXT://:9092,VPCE://kafka.vpce.dev:6001
listeners=INTERNAL_PLAINTEXT://:9092,VPCE://:9090
inter.broker.listener.name=INTERNAL_PLAINTEXT
listener.security.protocol.map=VPCE:SSL,INTERNAL_PLAINTEXT:PLAINTEXT

# Broker 3
advertised.listeners=INTERNAL_PLAINTEXT://:9092,VPCE://kafka.vpce.dev:6002
listeners=INTERNAL_PLAINTEXT://:9092,VPCE://:9090
inter.broker.listener.name=INTERNAL_PLAINTEXT
listener.security.protocol.map=VPCE:SSL,INTERNAL_PLAINTEXT:PLAINTEXT
```
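Because the three broker configurations differ only in the advertised NLB port, they can be generated. The Python sketch below renders the same listener settings shown above for broker N; the function name and its defaults are illustrative, and a real server.properties would also contain the rest of the broker configuration (broker id, log directories, SSL keystore settings, and so on).

```python
def broker_listener_properties(broker_index, vpce_host="kafka.vpce.dev",
                               base_port=6000, vpce_port=9090,
                               internal_port=9092):
    """Render the listener settings for one broker (1-based index).

    Follows the scheme above: broker N advertises vpce_host:(base_port + N - 1),
    which must match the NLB listener port that routes to that broker.
    """
    nlb_port = base_port + broker_index - 1
    lines = [
        f"# Broker {broker_index}",
        f"advertised.listeners=INTERNAL_PLAINTEXT://:{internal_port},"
        f"VPCE://{vpce_host}:{nlb_port}",
        f"listeners=INTERNAL_PLAINTEXT://:{internal_port},VPCE://:{vpce_port}",
        "inter.broker.listener.name=INTERNAL_PLAINTEXT",
        "listener.security.protocol.map=VPCE:SSL,INTERNAL_PLAINTEXT:PLAINTEXT",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(broker_listener_properties(n))
        print()
```

Because VPCE is mapped to SSL, each broker also needs keystore settings for that listener. Since Kafka 2.0, clients also verify the broker hostname by default (ssl.endpoint.identification.algorithm=https), so the certificate served on the VPCE listener should be valid for kafka.vpce.dev.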

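The Kafka broker configuration documentation is worth consulting for the details, but in overview: listeners tells each broker which ports to bind (9092 for internal traffic, 9090 for traffic arriving through the VPC endpoint), advertised.listeners tells it which addresses to hand back to clients in metadata, listener.security.protocol.map makes the VPCE listener use SSL and the internal one plaintext, and inter.broker.listener.name keeps broker-to-broker replication on the internal listener. The key line is the VPCE advertised listener: each broker advertises itself as kafka.vpce.dev:600[0-2] during the bootstrap phase, so clients connecting through the PrivateLink are told to reconnect on the NLB port that routes to that exact broker.

This setup has an implication: kafka.vpce.dev must resolve, from the consumer's VPC, to the IPs of the VPC endpoint. In other words, the consumer side of the VPC endpoint must own, or at least agree upon, the DNS names used in the advertised listeners. This can be as simple as creating a Route 53 private hosted zone, vpce.dev, associated with the consumer VPC, and adding each VPC endpoint to it as an Alias A record. In this case, kafka.vpce.dev is an Alias A record pointing to the Kafka VPC endpoint.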
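The Route 53 step can be scripted as well. This Python sketch assumes boto3 ec2 and route53 clients for the consumer account, an existing private hosted zone for vpce.dev associated with the consumer VPC, and placeholder endpoint and zone IDs. It also assumes the endpoint's first DNS entry is its regional name, which you should verify for your endpoint; the function name is hypothetical.

```python
def alias_record_for_endpoint(ec2, route53, endpoint_id, zone_id,
                              record_name="kafka.vpce.dev"):
    """UPSERT an Alias A record in a private hosted zone pointing at an
    interface VPC endpoint. `ec2` and `route53` are boto3 clients
    (boto3.client("ec2"), boto3.client("route53")); ids are placeholders."""
    resp = ec2.describe_vpc_endpoints(VpcEndpointIds=[endpoint_id])
    # Assumption: the first DNS entry is the regional endpoint name (it spans
    # every AZ the endpoint has an interface in); later entries are zonal.
    entry = resp["VpcEndpoints"][0]["DnsEntries"][0]
    change = {
        "Action": "UPSERT",
        "ResourceRecordSet": {
            "Name": record_name,
            "Type": "A",
            "AliasTarget": {
                "HostedZoneId": entry["HostedZoneId"],
                "DNSName": entry["DnsName"],
                "EvaluateTargetHealth": False,
            },
        },
    }
    route53.change_resource_record_sets(
        HostedZoneId=zone_id,
        ChangeBatch={"Comment": "Kafka PrivateLink alias", "Changes": [change]},
    )
    return change
```

Using UPSERT keeps the call safe to rerun if the endpoint is ever recreated and the record needs to point at its new DNS name.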

Conclusion

Connecting Kafka across VPCs can be overly complex and not straightforward, but, as with all complex problems, it can be fun! That said, the setup will certainly be easier to debug if you avoid running Kafka over PrivateLink altogether. AWS Transit Gateway or good ol' VPC peering would definitely be preferable.

Comments

Hi Jonathan, I read your article and found it very helpful! Thank you for posting this method. I am a newbie to AWS and Kafka, and I still have a few questions I hope you can answer.

1. I assume you set listeners, advertised.listeners, etc. in server.properties; please correct me if I am wrong. In AWS, where is server.properties located? On the EC2 instance, or somewhere else?

Thank you in advance and looking forward to your reply.

-hom

The properties file lives on the EC2 instance. It's one of the Kafka configuration files.

VPCE stands for VPC (Virtual Private Cloud) endpoint, the consumer side of a PrivateLink connection. Sorry, I should avoid acronyms unless I explain them. AWS uses the terms PrivateLink and VPCE more or less interchangeably.

kafka.vpce.dev is a record in vpce.dev, a Route 53 private hosted zone I made up. Because of how Kafka works, both the provider (the VPC hosting Kafka) and the consumer (the VPC using Kafka) must agree on a hostname pattern so that DNS resolution returns the right IPs. The agreed-upon zone is the one where you put the endpoint IPs in the consumer VPC, and that is the zone Kafka must use in advertised.listeners.

Thank you for the swift reply! I have a few follow-ups.

I installed Kafka on my EC2 instance, and there is a server.properties file located in /home/kafka_2.12-2.2.1/config. Is this the properties file to change? If so, how do I connect this server.properties to the Kafka cluster I want to change, since the file does not specify which Kafka cluster it corresponds to? By the way, I created the Kafka cluster through the console, not the command line.

As for kafka.vpce.dev, can I put "DNS of endpoint:9092" in place of "kafka.vpce.dev:9092" in server.properties, so that I can skip setting up Route 53?

-hom

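Yes, that is the file you would edit. I am not entirely sure what you mean by "which kafka" it corresponds to, though. You can start multiple Kafka processes on a server; the start script takes the properties file as an argument (for example, bin/kafka-server-start.sh config/server.properties), so processes started with the same file share its settings, and you would point a process at a different file at startup to give it a different configuration. I'm not sure about your setup, though.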

You COULD advertise endpoint:9092 as the listener address; however, your clients will receive "endpoint:9092" and will try to resolve "endpoint" in their own VPCs. Unless "endpoint" is configured in their DNS to resolve to an IP of the PrivateLink, they will fail with an unknown host exception.
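A quick way to catch this failure before starting a client is to resolve every advertised host from inside the consumer VPC. The Python sketch below uses only the standard library's socket.getaddrinfo; the function name is hypothetical, and it checks an advertised.listeners value as written in server.properties, not the metadata a live broker returns.

```python
import socket


def unresolvable_advertised_hosts(advertised_listeners: str) -> list[str]:
    """Return hosts in an advertised.listeners value that this machine cannot resolve.

    Run it from a client host in the consumer VPC: any host returned here would
    make a Kafka client fail with an unknown-host error after bootstrapping.
    """
    failures = []
    for entry in advertised_listeners.split(","):
        # Each entry has the form NAME://host:port.
        _, _, address = entry.strip().partition("://")
        host, _, port = address.rpartition(":")
        if not host:
            # Empty host (as in the INTERNAL_PLAINTEXT entry): nothing to
            # resolve client-side. IPv6 literals are not handled in this sketch.
            continue
        try:
            socket.getaddrinfo(host, int(port))
        except socket.gaierror:
            failures.append(host)
    return failures
```

For example, passing a broker's value such as "INTERNAL_PLAINTEXT://:9092,VPCE://kafka.vpce.dev:6000" returns an empty list only if kafka.vpce.dev resolves from where the check runs.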

Hi Jonathan, sorry for not making my problem clear. On my EC2 instance I have only one server.properties, but there are many MSK clusters in this VPC. If I change server.properties, which cluster's properties am I changing, given that we cannot specify a cluster in server.properties?

Also, in the advertised.listeners=xxxx line, I have seen names like PLAINTEXT, INTERNAL_PLAINTEXT, VPCE, CLIENT, and CLIENT_SECURE. I'm confused by these listener names: where can I set them? Or is there a fixed list of names for different purposes that we should stick to?

Not many people have tried this approach, so I really appreciate your help, Jonathan!