Accessing the Spark UI for live Spark applications is easy, but having access to it for terminated applications requires persisting logs to a cloud storage and running a server called the Spark History Server. While some commercial Spark platform provides this automatically (e.g. Databricks, EMR) ; for many Spark users (e.g. on Dataproc, Spark-on-Kubernetes) getting this requires a bit of work.

Today, we’re releasing a free, hosted, partly open sourced, Spark UI and Spark History Server that work on top of any Spark platform (whether it’s on-premise or in the cloud, over Kubernetes or over YARN, using a commercial platform or not).

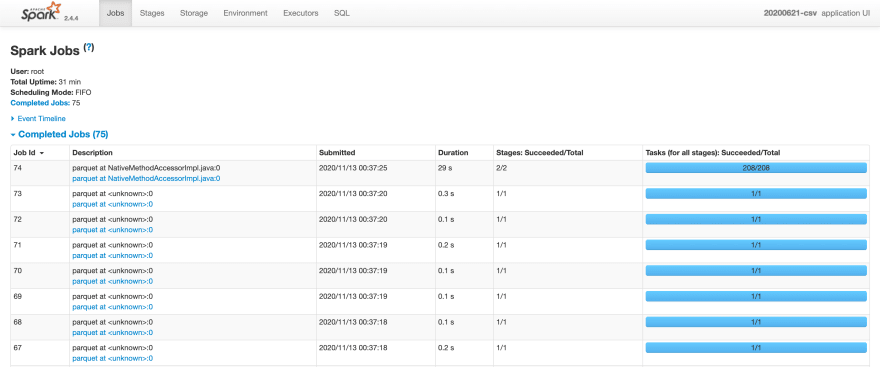

Spark UI Screenshot. Image by Author.

Spark UI Screenshot. Image by Author.

It consists of a dashboard listing your Spark applications, and a hosted Spark History Server that will give you access to the Spark UI for your recently finished applications at the click of a button.

How Can I Use it?

- Create an account on Data Mechanics Delight

You should use your company’s Google account if you want to share a single dashboard with your colleagues, or your personal Google account if you want the dashboard to be private to you.

Once your account is created, go under Settings and create a personal access token. This will be needed in the next step.

2. Attach our open-source agent to your Spark applications.

Follow the instructions on our Github page. We have instructions available for the most common setups of Spark:

Spark-submit (on any platform)

Spark-on-Kubernetes using the spark-operator

If you’re not sure how to install it on your infrastructure, let us know and we’ll add instructions that fit your needs.

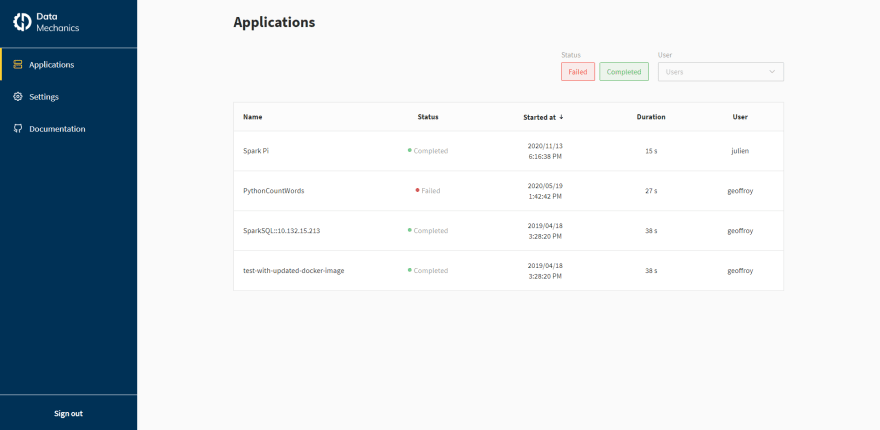

This is the dashboard that you’ll see once logged in. Image by Author.

This is the dashboard that you’ll see once logged in. Image by Author.

Your applications will automatically appear on the web dashboard once they complete. Clicking on an application opens up the corresponding Spark UI. That’s it!

How Does It Work? Is It Secure?

This project consists of two parts:

An open-source Spark agent which runs inside your Spark applications. This agent will stream non-sensitive Spark event logs from your Spark application to our backend.

A closed-source backend consisting of a real-time logs ingestion pipeline, storage services, a web application, and an authentication layer to make this secure.

.](https://res.cloudinary.com/practicaldev/image/fetch/s--ooKIWJtM--/c_limit%2Cf_auto%2Cfl_progressive%2Cq_auto%2Cw_880/https://cdn-images-1.medium.com/max/3840/0%2AZQ8fS6d0VfDbwdGz.png) Architecture of Data Mechanics Delight.

Architecture of Data Mechanics Delight.

The agent collects your Spark applications event logs. This is non-sensitive information about the metadata of your Spark application. For example, for each Spark task there is metadata on memory usage, CPU usage, network traffic (view a sample event log). The agent does not record sensitive information such as the data that your Spark applications actually work on. The agent does not collect your application logs either — as typically they may contain sensitive information.

This data is encrypted using your personal access token and sent over the internet using the HTTPS protocol. This information is then stored securely inside the Data Mechanics control plane behind an authentication layer. Only you and your colleagues will be able to see your application in our dashboard. The collected data will automatically be deleted 30 days after your Spark application completion.

What’s Next?

The release of this hosted Spark History Server is a first step towards a Spark UI replacement tool called Data Mechanics Delight. Our announcement in June 2020 to build a Spark UI replacement with new metrics and visualizations generated a lot of interest from the Spark community.

Design prototype for the next releases of Data Mechanics Delight. Image by Author.

Design prototype for the next releases of Data Mechanics Delight. Image by Author.

The current release does not yet replace the Spark UI, but we hope it will still be valuable to some of you. The next release of Delight, scheduled in January 2021, will consist of an overview screen giving a bird’s-eye view of your applications’ performance. Links to specific jobs, stages or executor pages will still take you to the corresponding Spark UI pages until we gradually replace these pages too.

Our mission at Data Mechanics is to make Apache Spark much more developer-friendly and cost-effective. We hope this tool will contribute to this goal and prove useful to the Spark community!

Top comments (0)