So I have a Hugo website now, but deploying the generated HTML files to my AWS S3 bucket and invalidating my AWS CloudFront distribution is very time-consuming.

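Therefore I planned to set up an AWS CodePipeline to help me with this process. The following steps are needed:

- get the source files from my GitHub repo
- generate the static HTML files with Hugo
- copy the HTML files to the S3 bucket
- invalidate the CloudFront distribution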

Reading guides from other bloggers (alimac.io, YagoYns, Symphonia) gave me a good starting point for building my own solution based on AWS CodePipeline.

I will publish a complete CloudFormation script in an upcoming blog post, but today I will only talk about the AWS CodeBuild part.

During the build process I need something that generates the static HTML files from the Hugo and AsciiDoc files in my GitHub repo. The easiest way to get this is to use a Docker container that has Hugo and asciidoctor installed.

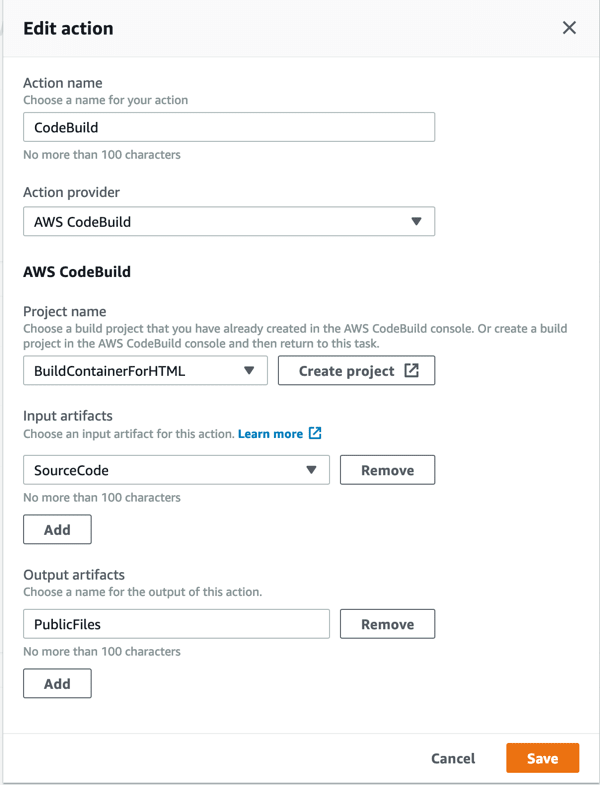

So let’s start by creating a build project in AWS CodeBuild: click on Create project and set the project name to BuildContainerForHTML.

When creating your build project, you can choose between an AWS managed Docker image and a custom image.

Here we will choose Managed image. This means we will use the standard AWS Ubuntu 14.04 base Docker image, so neither Hugo nor asciidoctor is installed by default.
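For reference, the managed-image choice described above could also be expressed as a CloudFormation resource. This is only a minimal sketch and not the complete script announced for a later post: the logical IDs BuildContainerForHTMLProject and CodeBuildServiceRole, the compute type, and the image identifier aws/codebuild/ubuntu-base:14.04 are assumptions, so check which managed images are currently available before using it.

```yaml
# Hypothetical CloudFormation sketch of the CodeBuild project (not the author's final script)
Resources:
  BuildContainerForHTMLProject:
    Type: AWS::CodeBuild::Project
    Properties:
      Name: BuildContainerForHTML
      # Assumed IAM role resource, defined elsewhere in the same template
      ServiceRole: !GetAtt CodeBuildServiceRole.Arn
      Source:
        # The source is handed over by CodePipeline; without a BuildSpec
        # property, CodeBuild looks for buildspec.yml in the source root
        Type: CODEPIPELINE
      Artifacts:
        Type: CODEPIPELINE
      Environment:
        Type: LINUX_CONTAINER
        ComputeType: BUILD_GENERAL1_SMALL
        # Assumed identifier of the managed Ubuntu 14.04 base image
        Image: aws/codebuild/ubuntu-base:14.04
```

Leaving out the BuildSpec property keeps the behaviour described in this post, where the buildspec.yml lives in the root of the GitHub repo.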

Now we need to install the required software. This is defined in a file called buildspec.yml, which should be placed in the root directory of the source code (i.e. the root of the GitHub repo).

The hardest part for me was figuring out what the buildspec.yml has to look like. The buildspec file defines the build commands that CodeBuild runs inside the Docker container.

As you can see, I’m only using two phases (install and build) in this example, plus the definition of the output artifact:

buildspec.yml

```yaml
version: 0.2

phases:
  install:
    commands:
      - echo Entered the install phase...
      - apt-get -qq update && apt-get -qq install curl
      - apt-get -qq install asciidoctor
      - curl -s -L https://github.com/gohugoio/hugo/releases/download/v0.53/hugo_0.53_Linux-64bit.deb -o hugo.deb
      - dpkg -i hugo.deb
    finally:
      - echo Installation done
  build:
    commands:
      - echo Entered the build phase ...
      - echo Build started on `date`
      - cd $CODEBUILD_SRC_DIR
      # .git is a directory (if it exists), so it needs rm -rf instead of rm -f
      - rm -f buildspec.yml && rm -rf .git && rm -f README.md
      - hugo --quiet
    finally:
      - echo Building the HTML files finished

artifacts:
  files:
    - '**/*'
  base-directory: $CODEBUILD_SRC_DIR/public/
  discard-paths: no
```

- install: here we have all commands to install Hugo and asciidoctor.
- build: here we switch to the directory with the source files from GitHub ($CODEBUILD_SRC_DIR) and execute the Hugo build command, which creates all static pages in the public directory.
- artifacts: here we define $CODEBUILD_SRC_DIR/public/ as the base directory and add all files in this directory to the output artifact.

We also have to define a policy for the CodeBuild project. In this example we only need access to the input (kbil-artifacts) and output (kbild-yourwebsite) S3 buckets and to CloudWatch Logs. The following policy is used:

CodeBuild Policy

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::kbild-yourwebsite",
        "arn:aws:s3:::kbild-yourwebsite/*",
        "arn:aws:s3:::kbil-artifacts",
        "arn:aws:s3:::kbil-artifacts/*"
      ],
      "Effect": "Allow",
      "Sid": "VisualEditor0"
    },
    {
      "Action": "logs:*",
      "Resource": "*",
      "Effect": "Allow",
      "Sid": "VisualEditor1"
    }
  ]
}
```
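The policy above allows s3:* and logs:*, which is more than the build strictly needs. As a hedged alternative, here is a sketch of a narrower role written as a CloudFormation resource. The action list is an assumption based on what CodeBuild typically needs (reading input artifacts, writing output artifacts, writing logs); some setups also need bucket-level actions such as s3:GetBucketLocation, so verify it against the AWS documentation and widen it again if the build fails with AccessDenied. The logical ID CodeBuildServiceRole and the policy name CodeBuildHugoPolicy are hypothetical.

```yaml
# Hypothetical least-privilege variant of the CodeBuild policy above
Resources:
  CodeBuildServiceRole:
    Type: AWS::IAM::Role
    Properties:
      # Allow the CodeBuild service to assume this role
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service: codebuild.amazonaws.com
            Action: sts:AssumeRole
      Policies:
        - PolicyName: CodeBuildHugoPolicy
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              # Read input artifacts and write output artifacts (object-level only)
              - Effect: Allow
                Action:
                  - s3:GetObject
                  - s3:GetObjectVersion
                  - s3:PutObject
                Resource:
                  - arn:aws:s3:::kbild-yourwebsite/*
                  - arn:aws:s3:::kbil-artifacts/*
              # Write the build output to CloudWatch Logs
              - Effect: Allow
                Action:
                  - logs:CreateLogGroup
                  - logs:CreateLogStream
                  - logs:PutLogEvents
                Resource: "*"
```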

This build project can now be added to the CodePipeline as a build stage.

The SourceCode artifact from GitHub is used as input, and the public HTML files are exported as the PublicFiles artifact, which will be sent to S3 later on.
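In CloudFormation, such a build stage could look roughly like the following fragment of the Stages list of an AWS::CodePipeline::Pipeline resource. It is a sketch only: the stage and action names (Build, BuildHTML) are hypothetical, while SourceCode, PublicFiles and BuildContainerForHTML are the names used in this post.

```yaml
# Hypothetical fragment of the Stages list of an AWS::CodePipeline::Pipeline resource
- Name: Build
  Actions:
    - Name: BuildHTML
      ActionTypeId:
        Category: Build
        Owner: AWS
        Provider: CodeBuild
        Version: "1"
      Configuration:
        # The CodeBuild project created in this post
        ProjectName: BuildContainerForHTML
      # Source files checked out from GitHub in the previous stage
      InputArtifacts:
        - Name: SourceCode
      # Generated HTML from public/, to be deployed to S3 in a later stage
      OutputArtifacts:
        - Name: PublicFiles
      RunOrder: 1
```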

After pushing a new blog post to the GitHub repo, we can see our CodePipeline in full action.

Nice!

Adding more stages and steps is pretty easy, and I guess I will use CodePipeline a lot in the future.

Stay tuned for a CloudFormation script which will create the whole pipeline.
