In this article I’ll explain the rendering pipeline of the Spring Flowers WebGL Demo and its corresponding Android app. I will also describe the problems we encountered during development and testing of the Android live wallpaper app, and the solutions we used to overcome them.

You can check out the live demo page and play with various configuration options in the top right controls section.

Implementation

The scene is composed of the following main objects: sky, ground, and 3 types of grass: flowers (each containing individual instances of leaves, petals and stems), small round grass, and tall animated grass. To make the scene more alive, a sphere for the sun glare and moving ants and butterflies are also drawn.

The draw order is as follows: first the objects that are closer to the camera and larger, to use the z-buffer efficiently; then the objects closer to the ground; then the sky and the ground. The ground plane has transparent edges that blend with the background sky sphere, so it is drawn after the sky.

For the sun glare effect we draw a sphere with a specular highlight. It is drawn last, over all other geometry, without depth testing. This way everything is slightly over-brightened when viewed against the sun, and the glare is less prominent when the camera is not facing the sun.
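The ordering rules above can be summarized as a fixed list of passes. The sketch below is hypothetical: the pass names, which grass types count as "larger" versus "closer to the ground", and the `GlState` interface are assumptions made for illustration (ants and butterflies are left out), not the demo's renderer. It only encodes the rules stated here: the ground is drawn after the sky, and the glare sphere comes last with depth testing disabled.

```typescript
// Hypothetical description of one draw pass; not the demo's actual renderer types.
interface DrawPass {
  name: string;
  depthTest: boolean;
}

// Assumed grouping: larger objects first (best early depth rejection), then
// ground-level objects, then sky, then ground (its transparent edges blend
// over the sky), and finally the glare sphere without depth test.
const FRAME_PASSES: readonly DrawPass[] = [
  { name: "flowers", depthTest: true },
  { name: "tallGrass", depthTest: true },
  { name: "smallGrass", depthTest: true },
  { name: "sky", depthTest: true },
  { name: "ground", depthTest: true },
  { name: "sunGlare", depthTest: false },
];

// Minimal stand-in for the GL state changes and draw calls used by the pass loop.
interface GlState {
  setDepthTest(enabled: boolean): void;
  draw(name: string): void;
}

function renderFrame(passes: readonly DrawPass[], gl: GlState): void {
  for (const pass of passes) {
    gl.setDepthTest(pass.depthTest);
    gl.draw(pass.name);
  }
  gl.setDepthTest(true); // restore the default state for the next frame
}

// A recording GlState, useful for checking the order without a GPU.
function recordFrame(passes: readonly DrawPass[]): string[] {
  const log: string[] = [];
  renderFrame(passes, {
    setDepthTest: (enabled) => log.push(enabled ? "depth:on" : "depth:off"),
    draw: (name) => log.push(`draw:${name}`),
  });
  return log;
}
```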

Tiled culling of instances

Grass and flowers are drawn with similar shaders that share a common instanced-positioning part. These instanced objects read their transformations from an FP32 RGB texture.

All instanced shaders use the same COMMON_TRANSFORMS include, which takes 2 texture samples to retrieve the translation in the XY plane, the scale, and the rotation. Note that the rotation is stored as the sine and cosine of the angle to save on rotation math in the shader.
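To illustrate why storing the sine and cosine pays off, here is a CPU-side TypeScript sketch of what such an include computes per vertex. The texel layout is an assumption (first texel = translation X, translation Y, scale; second texel = sine, cosine, unused), as is rotation around the vertical Z axis; the real COMMON_TRANSFORMS include may pack the data differently.

```typescript
type Vec3 = [number, number, number];

// Assumed layout of one instance in the FP32 RGB texture (two texels):
// texel0 = (translateX, translateY, scale), texel1 = (sin(angle), cos(angle), unused).
interface InstanceTexels {
  texel0: Vec3;
  texel1: Vec3;
}

// Mirrors what a vertex shader include could do: scale, rotate around Z with the
// stored sine/cosine (no sin()/cos() calls per vertex), then translate in XY.
function transformVertex(v: Vec3, inst: InstanceTexels): Vec3 {
  const [tx, ty, scale] = inst.texel0;
  const [s, c] = inst.texel1;
  const x = v[0] * scale;
  const y = v[1] * scale;
  const z = v[2] * scale;
  return [x * c - y * s + tx, x * s + y * c + ty, z];
}

// Packing side: the trigonometry is evaluated once per instance, when the texture is built.
function packInstance(tx: number, ty: number, scale: number, angle: number): InstanceTexels {
  return { texel0: [tx, ty, scale], texel1: [Math.sin(angle), Math.cos(angle), 0] };
}
```

On the GPU the same arithmetic is a handful of multiply-adds per vertex, while the angle itself never has to be evaluated in the shader.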

The original transformations are stored in the FLOWERS, GRASS1 and GRASS2 arrays declared in GrassPositions.ts. However, these arrays contain coordinates for all instances of the objects; they are not split into tiles yet. To split them, they are processed with the sortInstancesByTiles function. It creates a new FP32 array with rearranged positions and rotations, plus an array of tiles that specify the instance count and start offset within the final texture used by the shader. The result is stored in a TiledInstances object. This function can split instances spread across a square ground area into an arbitrary NxN grid of tiles. Both the web demo and the Android app use a reasonable 4x4 grid of 16 tiles. Tiles have a small padding that lets them overlap slightly. The padding equals the size of the grass model, so instances placed at the very edge of a tile won’t disappear abruptly when the tile gets culled.

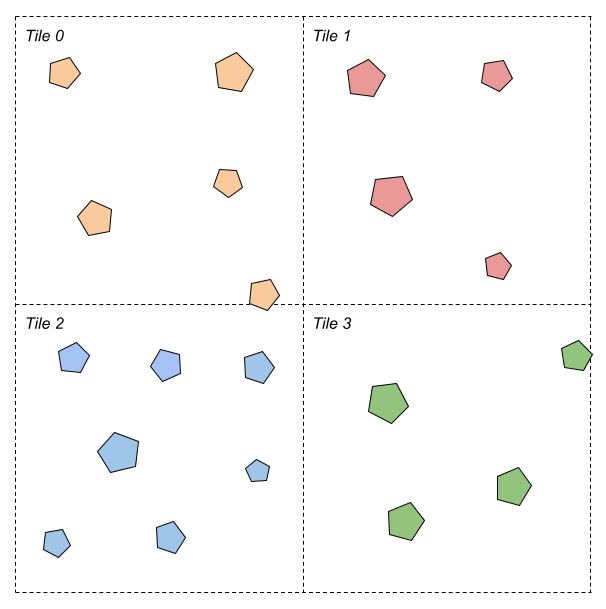
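A simplified version of the binning step performed by sortInstancesByTiles might look like the following. It is a sketch, not the demo's code: the function name `binInstancesByTiles`, the 6-float record layout, the ground area centered at the origin, and the choice to assign each instance to exactly one tile while only enlarging the tile's bounds by the padding are all assumptions.

```typescript
// Hypothetical record layout: x, y, scale, sin, cos, unused -> two RGB texels per instance.
const STRIDE = 6;

interface Tile {
  offset: number; // index of the first instance of this tile in the rearranged data
  count: number;  // number of instances in this tile
  minX: number;   // padded bounds used for culling
  minY: number;
  maxX: number;
  maxY: number;
}

interface TiledInstancesSketch {
  data: Float32Array; // instance records rearranged tile by tile
  tiles: Tile[];
}

// Splits instances spread over the square [-halfSize, halfSize]^2 into n*n tiles.
// Each instance goes to exactly one tile; padding only enlarges the tile bounds.
function binInstancesByTiles(
  src: Float32Array,
  n: number,
  halfSize: number,
  padding: number,
): TiledInstancesSketch {
  const total = src.length / STRIDE;
  const tileSize = (2 * halfSize) / n;
  const buckets: number[][] = Array.from({ length: n * n }, (): number[] => []);

  for (let i = 0; i < total; i++) {
    const x = src[i * STRIDE];
    const y = src[i * STRIDE + 1];
    // Clamp so instances lying exactly on the outer edge land in the last row/column.
    const col = Math.min(n - 1, Math.max(0, Math.floor((x + halfSize) / tileSize)));
    const row = Math.min(n - 1, Math.max(0, Math.floor((y + halfSize) / tileSize)));
    buckets[row * n + col].push(i);
  }

  const data = new Float32Array(src.length);
  const tiles: Tile[] = [];
  let offset = 0;
  for (let t = 0; t < buckets.length; t++) {
    const bucket = buckets[t];
    const col = t % n;
    const row = Math.floor(t / n);
    for (let k = 0; k < bucket.length; k++) {
      const i = bucket[k];
      // Copy the whole record of instance i into its new, tile-sorted slot.
      data.set(src.subarray(i * STRIDE, (i + 1) * STRIDE), (offset + k) * STRIDE);
    }
    tiles.push({
      offset,
      count: bucket.length,
      minX: -halfSize + col * tileSize - padding,
      minY: -halfSize + row * tileSize - padding,
      maxX: -halfSize + (col + 1) * tileSize + padding,
      maxY: -halfSize + (row + 1) * tileSize + padding,
    });
    offset += bucket.length;
  }
  return { data, tiles };
}
```

Because the records are copied tile by tile, each tile ends up as one contiguous range described by `offset` and `count`, which is exactly what a per-tile draw call needs.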

To visualize how instances are split into tiles, let’s imagine a sample area with 20 randomly placed objects that we would like to cull per tile. Let’s rearrange these instances into a 2x2 grid of 4 tiles:

Here is the structure of the texture containing these objects, showing the tiling and the data stored in each component per instance:

Here instances for tile 0 have offset=0 and count=5, for tile 1 offset=5 and count=4, and so on.

This structure allows us to draw all 20 instances in 4 draw calls and cull them in batches per tile without updating any data on the GPU.

Culling the tiles’ bounding boxes on the CPU is also relatively cheap. It is done every frame, and you can see how many tiles and individual instances are currently rendered in the “Stats” section of the controls.
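Per-tile culling can use a standard bounding-box-versus-frustum-planes test. The sketch below assumes the frustum is given as six planes (a, b, c, d) with normals pointing inward, and that each tile's 2D bounds have been extended to a 3D box whose height range covers the tallest grass; the representation and all names are assumptions, not the demo's code.

```typescript
// A plane a*x + b*y + c*z + d = 0 with an inward-facing normal (a, b, c).
type Plane = [number, number, number, number];

interface Box {
  min: [number, number, number];
  max: [number, number, number];
}

// Returns false only when the box lies entirely outside at least one plane.
// For each plane it tests the box corner furthest along the plane normal
// (the "positive vertex"); if even that corner is behind the plane, the box is culled.
// The test is conservative: some boxes near frustum corners are kept although invisible.
function isBoxVisible(box: Box, frustum: readonly Plane[]): boolean {
  for (const [a, b, c, d] of frustum) {
    const px = a >= 0 ? box.max[0] : box.min[0];
    const py = b >= 0 ? box.max[1] : box.min[1];
    const pz = c >= 0 ? box.max[2] : box.min[2];
    if (a * px + b * py + c * pz + d < 0) {
      return false;
    }
  }
  return true;
}

// Collects indices of visible tiles: 6 plane tests per tile, i.e. 96 for a 4x4 grid.
function visibleTiles(boxes: readonly Box[], frustum: readonly Plane[]): number[] {
  const result: number[] = [];
  boxes.forEach((box, i) => {
    if (isBoxVisible(box, frustum)) result.push(i);
  });
  return result;
}
```

With only 16 boxes per object type, this loop is tiny compared to testing thousands of individual instances.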

Reducing grass density to scale performance is also easy with this approach, because instances are in random order within each tile. All we have to do is proportionally reduce the number of instances per draw call (you can use the density slider in the controls to test it):
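Combining the visible tile list with the density setting, the per-frame draw list takes only a few lines to build. This is a sketch under assumptions: `DrawCall`, `buildDrawCalls` and the `density` range [0, 1] are hypothetical, and since core WebGL 2 has no base-instance parameter for instanced draws, the tile offset is assumed to be passed to the shader as a uniform and added to gl_InstanceID when sampling the transform texture.

```typescript
interface TileRange {
  offset: number;
  count: number;
}

interface DrawCall {
  firstInstance: number; // passed to the shader, e.g. as a uniform (assumption)
  instanceCount: number; // instance count for gl.drawArraysInstanced / drawElementsInstanced
}

// Because instances inside each tile are in random order, drawing only the first
// `density * count` of them thins the grass uniformly across the tile.
function buildDrawCalls(
  tiles: readonly TileRange[],
  visible: readonly number[],
  density: number,
): DrawCall[] {
  const d = Math.min(1, Math.max(0, density));
  const calls: DrawCall[] = [];
  for (const t of visible) {
    const instanceCount = Math.round(tiles[t].count * d);
    if (instanceCount > 0) {
      calls.push({ firstInstance: tiles[t].offset, instanceCount });
    }
  }
  return calls;
}
```

Nothing is re-uploaded to the GPU when density or visibility changes; only the per-call ranges differ from frame to frame.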

Different objects are drawn with different instanced shaders. Small grass and flower petals use the simplest ones, with plain diffuse colored shading. Dandelion stems and leaves add specular highlights, and the shader for tall grass blades also uses vertex animation to simulate wind.

Random ants

To make the ground more alive, we draw some ants on it. They are also instanced: a total of 68 ants are rendered in 2 draw calls.

They move along circles with random radii and centers. The two draw calls correspond to clockwise and counterclockwise movement along these circular paths. You can examine the math for positioning the vertices in the shader’s source code.

Any animation would be almost impossible to notice on such small, fast-moving objects, so we don’t animate them at all.
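The positioning can be pictured with a sketch like the following, which is not the demo's shader math: each ant has a random center, radius and starting phase, plus a direction sign that would be constant per draw call. The parameter names, the use of an angular speed, and the heading convention are assumptions.

```typescript
interface AntParams {
  cx: number;
  cy: number;
  radius: number;
  phase: number; // random starting angle, radians
}

interface AntPose {
  x: number;
  y: number;
  heading: number; // angle of the movement direction in the XY plane, radians
}

// direction = +1 for counterclockwise, -1 for clockwise; it is what distinguishes
// the two draw calls, so in a shader it would be a per-draw uniform.
// `speed` is an angular speed in radians per second.
function antPose(ant: AntParams, time: number, speed: number, direction: 1 | -1): AntPose {
  const angle = ant.phase + direction * speed * time;
  const x = ant.cx + ant.radius * Math.cos(angle);
  const y = ant.cy + ant.radius * Math.sin(angle);
  // The velocity is tangent to the circle: the radius vector rotated by +/-90 degrees.
  const heading = angle + direction * (Math.PI / 2);
  return { x, y, heading };
}
```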

Butterflies

No summer can be imagined without butterflies, so we added them too. They are positioned similarly to the ants, but with a sine wave added to their height. Each instance gets its color from a texture atlas with 4 different variants.
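A sketch of the extra height term and of picking one of the four atlas variants could look like this. The amplitude and frequency parameters, and the assumption that the atlas is a 2x2 grid of color variants selected by instance index modulo 4, are illustrative choices; the text only says that a sine wave is added to the height and that the atlas has 4 variants.

```typescript
// Height above the ground: a base altitude plus a sine wave with a per-instance phase.
function butterflyHeight(
  baseHeight: number,
  amplitude: number,
  frequency: number,
  phase: number,
  time: number,
): number {
  return baseHeight + amplitude * Math.sin(frequency * time + phase);
}

// Maps an instance to one of 4 variants in an assumed 2x2 atlas and returns the
// UV rectangle (u0, v0) .. (u0 + size, v0 + size) of that variant.
function atlasCell(instanceIndex: number): { u0: number; v0: number; size: number } {
  const variant = instanceIndex % 4;
  return { u0: (variant % 2) * 0.5, v0: Math.floor(variant / 2) * 0.5, size: 0.5 };
}
```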

Unlike ants, butterflies must be animated. We don’t use any kind of baked animation for them; instead, a really cheap trick in the vertex shader simply moves the wing tips up and down. Wing tips are identified as vertices with large absolute values of the X coordinate. Of course, this is not a correct circular movement of the wings around the butterfly’s body (the wings elongate noticeably at higher amplitudes), but it simplifies the shader math and looks convincing enough in motion, as can be seen in this image:
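The wing trick can be expressed in a few lines. Below is a CPU-side TypeScript version of what such a vertex shader could do, assuming Z is up (consistent with the XY ground plane used for instance positions); the body half-width threshold, the linear weighting of the displacement by |x|, and the flap frequency are assumptions rather than values from the demo. Because the tips only move vertically while keeping their X coordinate, a wing stretches instead of rotating, which is the elongation artifact described above.

```typescript
type Vec3 = [number, number, number];

// Moves wing-tip vertices (large |x|) up and down; body vertices near x = 0 stay put.
// The vertical offset grows linearly with |x| beyond `bodyHalfWidth`, so the wing
// bends smoothly instead of tearing at the threshold.
function flapWing(
  v: Vec3,
  time: number,
  bodyHalfWidth: number,
  amplitude: number,
  frequency: number,
): Vec3 {
  const reach = Math.max(0, Math.abs(v[0]) - bodyHalfWidth);
  const lift = amplitude * reach * Math.sin(frequency * time);
  // Only Z changes: the tip does not follow a circular arc, so wings stretch slightly.
  return [v[0], v[1], v[2] + lift];
}
```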

Android-specific optimizations

As always with our web demos, this one is optimized for the smallest possible network data size and the fastest loading times, so it doesn’t use compressed or supercompressed textures. The Android app is optimized for power efficiency, so it uses compressed textures (ASTC or ETC2), depending on hardware capabilities.

To improve efficiency further, it uses variable rate shading (VRS) on supported hardware.
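The format choice boils down to a capability check. The sketch uses TypeScript for consistency with the other examples, although the Android app would run this logic natively. It assumes an OpenGL ES 3.0 context, where ASTC support is reported by the GL_KHR_texture_compression_astc_ldr extension while ETC2 needs no check because it is a mandatory format in OpenGL ES 3.0; the function name is hypothetical.

```typescript
type CompressedFormat = "astc" | "etc2";

// `extensions` is either the space-separated string from glGetString(GL_EXTENSIONS)
// or the list collected with glGetStringi on an OpenGL ES 3.0+ context.
function pickTextureFormat(extensions: string | readonly string[]): CompressedFormat {
  const list: readonly string[] =
    typeof extensions === "string" ? extensions.split(/\s+/) : extensions;
  // ASTC: better quality per bit, but optional in OpenGL ES 3.0.
  if (list.includes("GL_KHR_texture_compression_astc_ldr")) {
    return "astc";
  }
  // ETC2 is mandatory in OpenGL ES 3.0, so it is a safe fallback.
  return "etc2";
}
```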

When the app detects that the device is in energy saving mode (enabled manually or triggered by a low battery), it reduces the FPS and switches to a simplified grass shader without animation to significantly reduce power draw. In this mode the app also uses more aggressive VRS.

We encountered general performance issues when rendering lots of instanced geometry on low-end Android phones; the bottleneck turned out to be the vertex shaders. So when the app detects that it is running on a low-end device, it renders grass at slightly reduced density and without wind animation.
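Taken together, these rules amount to choosing a rendering profile from a few device flags. In the sketch below the flags are plain inputs (on Android, energy saving mode can be queried with PowerManager.isPowerSaveMode(); how the app classifies a device as low-end is not described here), and every value, such as the FPS cap, the density factor and the shading-rate labels, is a hypothetical placeholder rather than a setting used by the app.

```typescript
interface DeviceState {
  powerSaveMode: boolean; // e.g. from PowerManager.isPowerSaveMode() on Android
  lowEndDevice: boolean;  // detection heuristic not specified in the article
  vrsSupported: boolean;
}

interface RenderProfile {
  maxFps: number;
  grassDensity: number;   // fraction of instances drawn per tile
  windAnimation: boolean; // false selects the simplified, non-animated grass shader
  shadingRate: "off" | "1x2" | "2x2"; // placeholder VRS levels
}

// All numeric values and shading rates are placeholders chosen for illustration.
function chooseProfile(s: DeviceState): RenderProfile {
  const profile: RenderProfile = {
    maxFps: 60,
    grassDensity: 1.0,
    windAnimation: true,
    shadingRate: s.vrsSupported ? "1x2" : "off",
  };
  if (s.lowEndDevice) {
    // Vertex shaders are the bottleneck: fewer instances and no wind animation.
    profile.grassDensity = 0.8;
    profile.windAnimation = false;
  }
  if (s.powerSaveMode) {
    profile.maxFps = 30;
    profile.windAnimation = false;
    if (s.vrsSupported) profile.shadingRate = "2x2"; // more aggressive VRS
  }
  return profile;
}
```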

Failed implementations

Before arriving at this tiled rendering pipeline, we tried and tested a couple of more naive, less performant implementations.

Fully randomized

The very first version of the grass used fully randomized positioning of instances. Instead of storing pre-calculated random transformations in a texture, it calculated them in the shader. This added complexity to the vertex shaders (random and noise functions involve quite a bit of math). Additionally, the random values differed between GPUs, which made it impossible to hand-pick camera paths precisely. Take a look at this photo of this version running on different devices. The code is identical, yet the placement of instances differs:

This version also had no visibility calculation or frustum culling, which hurt performance as well.

Per-instance culling

The first, naive implementation of culling worked per instance. This version already used a texture to reduce vertex shader math, but each instance was tested for visibility, and the texture was then updated with only the visible instances.

This worked just fine on PC and high-end Android devices but proved way too slow on low-end phones: the CPU took about 10 ms to calculate the visibility of instances, and updating the texture on the fly with glTexSubImage2D() was also unacceptably slow at ~20 ms. For comparison, tiled culling takes ~1 ms of CPU time on low-end devices.

Final result

The total size of the demo page is just 741 kB, so it would fit on a floppy disk.

You can play around with different parameters on the live demo page: time of day, grass density, and other settings. Double-clicking toggles free camera mode, with WASD movement and rotation while holding the right mouse button (similar to viewport navigation in Unreal Engine).

As usual, you can get the source code, which is licensed under the MIT license, so feel free to play around with it.
