For Android live wallpapers it is very important to be lightweight. To get the best possible performance and the lowest memory and power usage, we constantly improve our live wallpapers by reducing the size of app resources and using various compression formats supported by hardware.

The latest update of 3D Buddha Live Wallpaper introduced a more compact way of storing 3D objects to save memory and improve performance. We’ve updated its WebGL demo counterpart in the same way, and in this article we will describe the process of this optimization.

Compact data types in OpenGL ES / WebGL

Previously in our apps we used only floats to store all per-vertex information — position, normal, colors, etc. These are standard 32-bit IEEE-754 floating-point values which are versatile enough to keep any type of information ranging from vertex coordinates to colors.

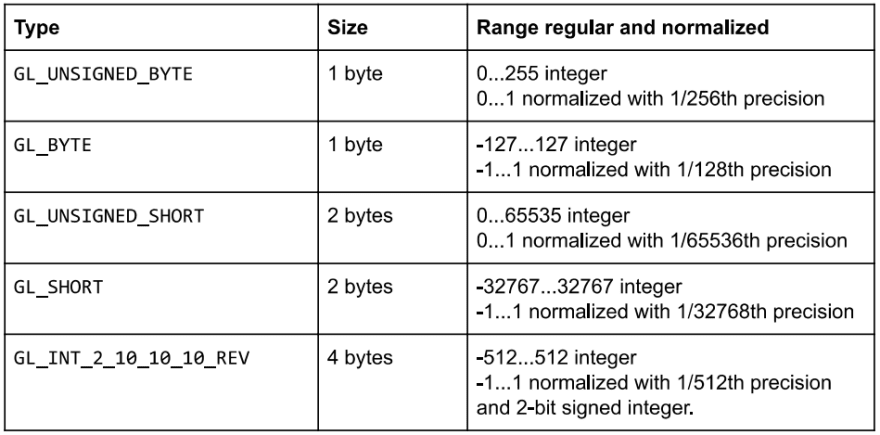

However, not all types of data require the precision of 32-bit floats. OpenGL ES 2.0 and WebGL offer other, less precise but more compact data types that can be used instead.

First, OpenGL supports 16-bit and 8-bit signed and unsigned integers. So how can an integer value substitute for a float? There are two options: use the integer values in the shader as is and cast them to floats, or normalize them. Normalization means that the driver/GPU converts the integer to a float, so the vertex shader receives a ready-to-use float value. Normalization maps integer values to the range [0, 1] or [-1, 1], depending on whether they are unsigned or signed. The precision of a normalized value is determined by the range of the source integer: the more bits in the source integer, the better the precision.

So, for example, the unsigned byte value 128 will be normalized to approximately 0.5 (128/255), and the signed short -16383 will be normalized to approximately -0.5 (-16383/32767). You can read more about conversions of normalized integers on this OpenGL wiki page.
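The conversion can be sketched in a few lines of JavaScript. This is only an illustration of the arithmetic, assuming the OpenGL ES 3.0 / WebGL 2 rules for normalized integers; the function names are made up for this example, and the real conversion happens in the driver or GPU.

```javascript
// Converts an unsigned normalized integer of the given bit width to a float in [0, 1].
function unormToFloat(value, bits) {
  return value / (2 ** bits - 1);
}

// Converts a signed normalized integer of the given bit width to a float in [-1, 1].
// The most negative integer is clamped so that it maps to exactly -1.
function snormToFloat(value, bits) {
  return Math.max(value / (2 ** (bits - 1) - 1), -1);
}

console.log(unormToFloat(128, 8));     // 128 / 255, close to 0.5
console.log(snormToFloat(-16383, 16)); // -16383 / 32767, close to -0.5
console.log(snormToFloat(-32768, 16)); // clamped to -1
```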

To use normalized integers, you must set the normalized parameter of glVertexAttribPointer to true, and a shader will receive normalized floats.
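In WebGL the same call is gl.vertexAttribPointer. Below is a minimal sketch for a tightly packed RGBA color attribute stored as 4 unsigned bytes per vertex. The function name and its parameters are hypothetical; the buffer and attribute location are assumed to be created elsewhere.

```javascript
// Binds a buffer of RGBA colors (4 unsigned bytes per vertex) to a vertex attribute.
// `gl` is a WebGL rendering context, `buffer` a WebGLBuffer, `location` an attribute location.
function bindColorAttribute(gl, buffer, location) {
  gl.bindBuffer(gl.ARRAY_BUFFER, buffer);
  gl.enableVertexAttribArray(location);
  // size = 4 components, type = UNSIGNED_BYTE, normalized = true,
  // stride = 4 bytes (tightly packed), offset = 0.
  // With normalized = true the shader sees each byte as a float in [0, 1].
  gl.vertexAttribPointer(location, 4, gl.UNSIGNED_BYTE, true, 4, 0);
}
```

In the vertex shader the attribute is still declared as a regular vec4.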

Typical values stored in unsigned bytes are colors, because there’s no need for more than 1/256th precision for color components: 3 or 4 unsigned bytes are perfect for storing RGB or RGBA colors, respectively. Two shorts can be used to store UV coordinates of a typical 3D model, assuming they are within the [0, 1] range and repeating textures are not used on meshes. They provide enough precision for these needs. For example, an unsigned short provides sub-texel precision even for a texture with a dimension of 4096, since its precision is 1/65536.
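On the encoding side, quantizing floats into these types is straightforward. The sketch below is an assumption about how such a conversion could look, not the code of our tool; all function names are illustrative.

```javascript
// Quantizes a float in [0, 1] to an unsigned normalized integer of the given bit width.
// Out-of-range input is clamped.
function floatToUnorm(value, bits) {
  const max = 2 ** bits - 1;
  const clamped = Math.min(Math.max(value, 0), 1);
  return Math.round(clamped * max);
}

// Packs an RGBA color into 4 unsigned bytes.
function packColor(r, g, b, a) {
  return Uint8Array.of(
    floatToUnorm(r, 8), floatToUnorm(g, 8), floatToUnorm(b, 8), floatToUnorm(a, 8)
  );
}

// Packs UV coordinates into 2 unsigned shorts.
function packUV(u, v) {
  return Uint16Array.of(floatToUnorm(u, 16), floatToUnorm(v, 16));
}

console.log(packColor(1, 0.5, 0, 1)); // 4 bytes instead of 16
console.log(packUV(0.25, 0.75));      // 4 bytes instead of 8
```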

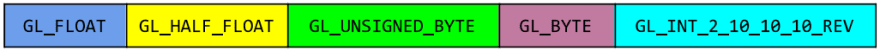

Newer OpenGL ES 3.0 (and WebGL 2 which is based on it) introduces new compact data types:

- Half floats for vertex data: these are 16-bit IEEE-754 floating point numbers. They use 2 bytes, similar to GL_SHORT, but their range and precision are not as limited as normalized values.

- 4-byte packed format INT_2_10_10_10_REV, which contains 4 integer values that can be normalized to floats. Three of these integers have 10 bits of precision and one has only 2 bits. This format is described in section 2.9.2 of the OpenGL ES 3.0 specs.
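JavaScript typed arrays have no widely available 16-bit float type, so half floats are usually written as raw 16-bit patterns into a Uint16Array. The following is a sketch of one possible conversion, not the code of our tool. It assumes simple round-half-up rounding rather than IEEE round-to-nearest-even, and the function names are illustrative.

```javascript
const scratchFloat = new Float32Array(1);
const scratchBits = new Uint32Array(scratchFloat.buffer);

// Converts a JS number to the 16-bit pattern of an IEEE-754 half float.
function toHalf(value) {
  scratchFloat[0] = value;
  const bits = scratchBits[0];
  const sign = (bits >>> 16) & 0x8000;
  const exponent = (bits >>> 23) & 0xff;
  let mantissa = bits & 0x7fffff;

  if (exponent === 0xff) return sign | 0x7c00 | (mantissa ? 0x200 : 0); // Inf or NaN
  const halfExponent = exponent - 127 + 15; // re-bias from float32 to float16
  if (halfExponent >= 0x1f) return sign | 0x7c00; // too large: becomes infinity
  if (halfExponent <= 0) {
    if (halfExponent < -10) return sign; // too small: becomes signed zero
    mantissa |= 0x800000; // restore the implicit leading 1 for a subnormal result
    const shift = 14 - halfExponent;
    return sign | ((mantissa >>> shift) + ((mantissa >>> (shift - 1)) & 1));
  }
  // Normal number: keep the top 10 mantissa bits, round using the first dropped bit.
  // A carry out of the mantissa correctly increments the exponent.
  return (sign | (halfExponent << 10) | (mantissa >>> 13)) + ((mantissa >>> 12) & 1);
}

// Decodes a half float bit pattern back to a JS number (useful for checking precision).
function fromHalf(half) {
  const sign = half & 0x8000 ? -1 : 1;
  const exponent = (half >> 10) & 0x1f;
  const mantissa = half & 0x3ff;
  if (exponent === 0) return sign * mantissa * 2 ** -24;
  if (exponent === 0x1f) return mantissa ? NaN : sign * Infinity;
  return sign * (1 + mantissa / 1024) * 2 ** (exponent - 15);
}

console.log(toHalf(1).toString(16));  // bit pattern of 1.0
console.log(fromHalf(toHalf(0.333))); // close to 0.333, with a small rounding error
```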

On some hardware, using normalized integer types may not be free and could require a couple of extra GPU cycles to convert values to floats before feeding them into the shader. However, the memory savings outweigh this conversion overhead, since the conversion is performed only per vertex.

Stride size, offsets and paddings

In our pipeline we use a two-step approach: first generate and then compress vertex data. First, source OBJ and FBX files are converted into GPU-ready arrays: vertex indices and interleaved vertex attribute data (strides). The next step is converting float values to more compact data types. This is done with a command-line utility written in JavaScript running on Node.js. You can get it from GitHub.

To achieve the best cache coherency of reading vertex data it is recommended to create strides of a certain size. However, this depends on a GPU type so there are quite different recommendations regarding optimal total stride size:

- According to official Apple iOS OpenGL ES documentation, stride size must be a multiple of 4 bytes to achieve best performance and reduce driver overhead. Apparently this is caused by the architecture of Apple chips, and they use Imagination Technologies PowerVR GPUs.

- Official PowerVR Performance Recommendations document vaguely states that some hardware may benefit from strides aligned by 16 byte boundaries.

- ARM in their Application Optimization Guide recommends aligning data to 8 bytes for optimal performance on Mali GPUs.

- There are no official recommendations for vertex data alignment for Qualcomm Adreno GPUs.

Our tool aligns data by 4 bytes to save more memory (in our applications we don’t use models with an excessive amount of vertices so accessing vertex data is not the bottleneck).

Next, when you use mixed data types in interleaved vertex data, each attribute must be properly aligned within the stride. This is stated in section 2.10.2 of the OpenGL ES 3.0 specs: attribute offsets must be a multiple of the corresponding data type size. If you don’t fulfill this requirement, OpenGL ES on Android and WebGL behave differently. OpenGL ES doesn’t produce any errors and the result depends on hardware (and probably drivers): Adreno GPUs seem to process such malformed data without generating any errors, while Mali GPUs fail to draw anything. WebGL implementations, on the other hand, detect misaligned interleaved attributes, and you will find either an error or a warning about this in the console.

Chrome gives the following error:

GL_INVALID_OPERATION: Offset must be a multiple of the passed in datatype.

Firefox generates this warning:

WebGL warning: vertexAttribI?Pointer: `stride` and `byteOffset` must satisfy the alignment requirement of `type`.
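Because OpenGL ES on Android may fail silently, it can be useful to run the same check offline before uploading data. Here is a minimal sketch of such a validation, assuming the WebGL rule that both offset and stride must be multiples of the data type size. The attribute description format and the function name are made up for this example.

```javascript
// Size in bytes of one element of each vertex data type.
const TYPE_SIZES = {
  BYTE: 1, UNSIGNED_BYTE: 1,
  SHORT: 2, UNSIGNED_SHORT: 2, HALF_FLOAT: 2,
  FLOAT: 4, INT_2_10_10_10_REV: 4,
};

// Returns the names of attributes whose offset or stride violates
// the alignment requirement of their data type.
function findMisalignedAttributes(attributes, stride) {
  return attributes
    .filter(({ type, offset }) => {
      const size = TYPE_SIZES[type];
      return offset % size !== 0 || stride % size !== 0;
    })
    .map(({ name }) => name);
}

// Packed normals placed right after 6 bytes of half floats and 4 bytes of UVs,
// without padding, end up at offset 10, which is not a multiple of 4.
const layoutWithoutPadding = [
  { name: "position", type: "HALF_FLOAT", offset: 0 },
  { name: "uv", type: "UNSIGNED_BYTE", offset: 6 },
  { name: "normal", type: "INT_2_10_10_10_REV", offset: 10 },
];
console.log(findMisalignedAttributes(layoutWithoutPadding, 16)); // [ 'normal' ]
```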

Our tool can add empty padding bytes to properly align any data types.

As mentioned before, OpenGL ES 3.0 and WebGL 2 support the special packed INT_2_10_10_10_REV structure, which contains three 10-bit and one 2-bit signed integers. This data type provides somewhat better precision than bytes while taking only 1 byte more than 3 separate bytes. Our tool can convert 3 floats to this packed data type. Please note that even if you use only 3 components from this structure, you should specify size 4 for glVertexAttribPointer when using it (in the shader you may still declare the attribute as vec3; the w component will be ignored).
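Packing three floats into this format could look like the sketch below. It is an illustration under stated assumptions, not the code of our tool: x occupies the lowest 10 bits, followed by y, z and the 2-bit w, and inputs are expected in the [-1, 1] range. All function names are hypothetical.

```javascript
// Quantizes a float in [-1, 1] to a 10-bit two's complement signed integer.
function floatToSnorm10(value) {
  const clamped = Math.min(Math.max(value, -1), 1);
  return Math.round(clamped * 511) & 0x3ff;
}

// Packs x, y, z (floats) and w (2-bit integer) into one unsigned 32-bit word.
function packInt2101010Rev(x, y, z, w = 0) {
  const word = ((w & 0x3) << 30) | (floatToSnorm10(z) << 20) |
    (floatToSnorm10(y) << 10) | floatToSnorm10(x);
  return word >>> 0; // reinterpret as unsigned
}

// Decodes x, y, z the way normalization does (for checking precision only).
function unpackInt2101010Rev(packed) {
  const component = (shift) => {
    const raw = (packed >>> shift) & 0x3ff;
    const signed = (raw << 22) >> 22; // sign-extend from 10 bits
    return Math.max(signed / 511, -1);
  };
  return [component(0), component(10), component(20)];
}

// WebGL 2 only: note size 4 even though the shader uses just x, y, z.
function bindPackedNormals(gl, location, stride, offset) {
  gl.enableVertexAttribArray(location);
  gl.vertexAttribPointer(location, 4, gl.INT_2_10_10_10_REV, true, stride, offset);
}

const packedNormal = packInt2101010Rev(0.0, 0.7071, -0.7071);
console.log(unpackInt2101010Rev(packedNormal)); // each component within 1/511 of the input
```

The packed words can be written into the vertex buffer through a Uint32Array or DataView.setUint32 with little-endian byte order.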

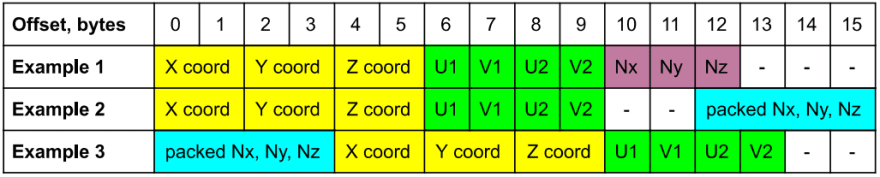

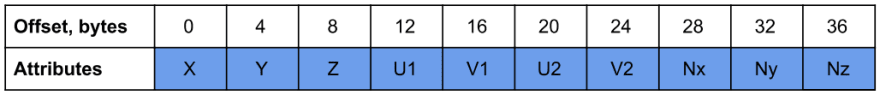

Here are three different examples of compressed and aligned strides. Original size of each stride composed of 32-bit floats is 40 bytes (10 floats) — 3 floats for vertex coordinates, 4 for two sets of UV coordinates (diffuse and lightmap), and 3 for normals. Here are examples of the same data compressed in three different ways down to 16 bytes (60% smaller than original) per vertex without visually perceivable quality loss.

Different variants of compressed strides:

In the first case normals don’t require alignment because they use the normalized GL_UNSIGNED_BYTE type. The second case packs all normal values into a single INT_2_10_10_10_REV structure for better precision. Please note that this requires it to be aligned on a 4-byte boundary. For this alignment 2 unused padding bytes are added, shifting normals to offset 12. The useful data size of the first case is 13 bytes with 3 padding bytes to align the total stride size, and the second case uses 14 bytes with 2 unused bytes for internal alignment. Both of them fit into 16 bytes (the closest multiple of 4), so GPUs can fetch whole strides more efficiently.

You may want to swap certain attributes to fit data tightly and eliminate the necessity of using internal empty paddings. In general, placing the largest data types first will make it easier to align smaller data types after them. For example, in the third case packed normals are stored at offset 0 and since this doesn’t cause misaligned half-floats and bytes after it, there’s no need to add internal padding bytes.
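The offsets of the three variants can be reproduced with a small layout calculation. The sketch below is an assumption about how such a calculation might be written, not the code of our tool. It aligns each attribute to its own type size and then rounds the stride up to a multiple of 4 bytes; the attribute description format is made up for this example.

```javascript
// Size in bytes of one element of each vertex data type.
const TYPE_SIZES = {
  BYTE: 1, UNSIGNED_BYTE: 1,
  SHORT: 2, UNSIGNED_SHORT: 2, HALF_FLOAT: 2,
  FLOAT: 4, INT_2_10_10_10_REV: 4,
};

// Rounds value up to the nearest multiple of alignment.
function alignUp(value, alignment) {
  return Math.ceil(value / alignment) * alignment;
}

// Computes attribute offsets (inserting internal padding where needed)
// and the total stride rounded up to strideAlignment.
function computeLayout(attributes, strideAlignment = 4) {
  const offsets = {};
  let cursor = 0;
  for (const { name, type, count } of attributes) {
    const size = TYPE_SIZES[type];
    cursor = alignUp(cursor, size); // internal padding, if any
    offsets[name] = cursor;
    // The packed type stores all of its components in a single 4-byte word.
    cursor += type === "INT_2_10_10_10_REV" ? size : size * count;
  }
  return { offsets, end: cursor, stride: alignUp(cursor, strideAlignment) };
}

const position = { name: "position", type: "HALF_FLOAT", count: 3 };
const uv = { name: "uv", type: "UNSIGNED_BYTE", count: 4 };
const byteNormal = { name: "normal", type: "UNSIGNED_BYTE", count: 3 };
const packedNormal = { name: "normal", type: "INT_2_10_10_10_REV", count: 4 };

console.log(computeLayout([position, uv, byteNormal]));   // normal at 10, 13 bytes used, stride 16
console.log(computeLayout([position, uv, packedNormal])); // normal padded to offset 12, stride 16
console.log(computeLayout([packedNormal, position, uv])); // no internal padding, 14 bytes used, stride 16
```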

Size, performance and quality difference

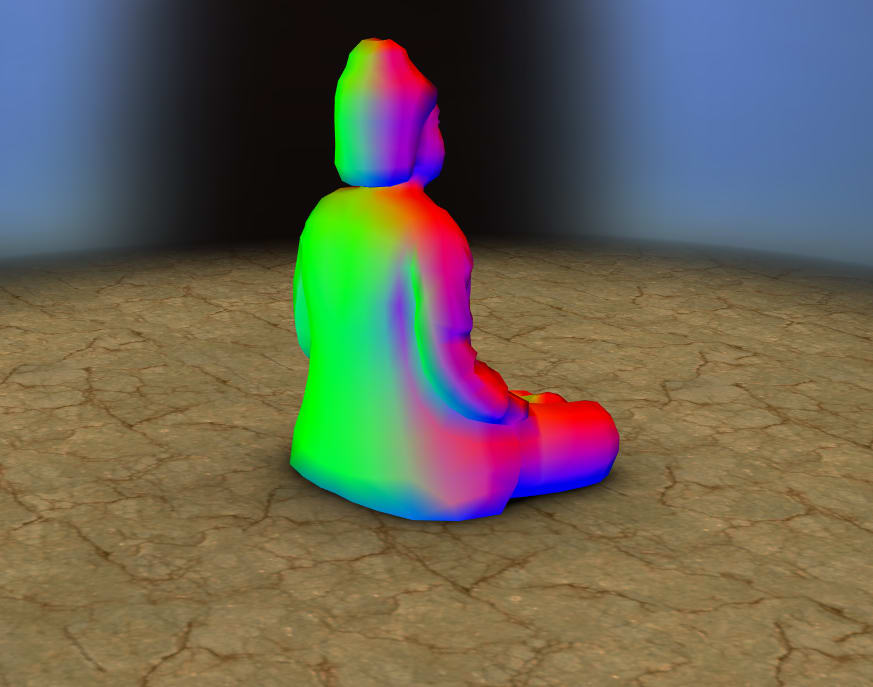

We have compressed vertex data for the Buddha statue model by using half floats for positions, unsigned bytes for diffuse and lightmap UV coordinates, and signed bytes for normals. This reduced the uncompressed (before gzip) size of the strides data from 47 kB to 18 kB.

Even though we used the least precise type for UV coordinates, it is just enough because in this model we don’t use textures larger than 256x256. Normalized signed bytes are also enough for normals. A test visualization of normals shows no visual difference between the various data types; only a perceptual diff can spot minuscule differences between certain pixels. Unfortunately dev.to doesn’t support WebP images, so you can use this Google Drive link to view an animation which shows that the difference between the various data types is visually unnoticeable.

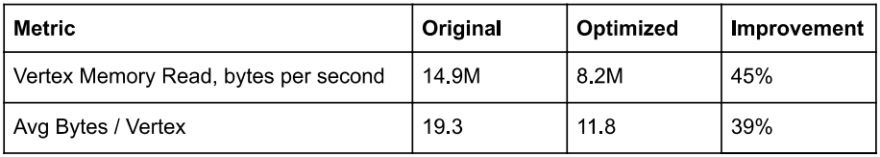

To accurately measure how optimization affected memory usage we used Snapdragon Profiler to capture average values for two real-time vertex data metrics. On Google Pixel 3 we have the following results:

This is a significant change which decreases app’s total RAM consumption and also reduces total memory bandwidth. Reduced GPU load allows for smoother system UI drawn over live wallpaper and improves battery usage.

Result

You can get the updated Android live wallpaper from Google Play, watch the updated live WebGL demo here, and examine its sources here.
