Before getting into what a container is and how containerization improves modern application deployment, it helps to look at what came before. The default way to deploy an application used to be on its own physical computer. To set one up, you would need to find:

• Physical space,

• Power,

• Cooling, and

• Network connectivity.

Then you would install:

• An operating system,

• Any software dependencies, and

• The application itself.

If you need more processing power, redundancy, security, or scalability, what would you do?

Well, you would have to add more computers. It was very common for each computer to have a single purpose, for example a database, a web server, or content delivery.

This practice, as you might imagine, wasted resources and took a lot of time to deploy, maintain, and scale. It was also not very portable. Applications were built for a specific operating system, and sometimes even for specific hardware.

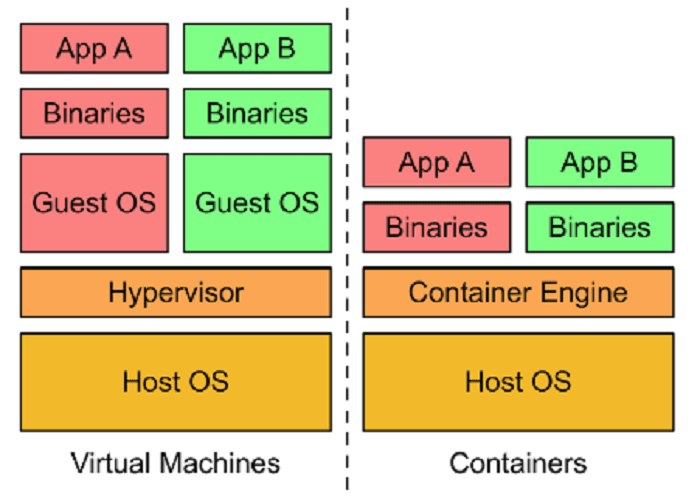

Then came virtualization. Virtualization made it possible to run many virtual servers and operating systems on the same physical computer. A hypervisor is the software layer that breaks the dependency of an operating system on its underlying hardware and allows several virtual machines to share that same hardware. KVM is one well-known hypervisor.

Today, you can use virtualization to deploy a new service quickly. Adopting virtualization means that it takes less time to deploy new solutions and that fewer of the resources on the physical computers go to waste. Portability also improves, because virtual machines can be imaged and then moved around.

However, the application, all its dependencies, and the operating system are still bundled together. It is not easy to move a VM from one hypervisor product to another. And every time you start a VM, its operating system still takes time to boot.

Running multiple applications within a single VM creates another tricky problem. Applications that share dependencies are not isolated from each other. The resource demands of one application can starve other applications of the resources they need.

A dependency upgrade for one application might cause another to stop working. You can try to solve this problem with rigorous software engineering policies. For example, you could lock down the dependencies so that no application can change them. But this leads to new problems, because dependencies do need to be upgraded occasionally.
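The shared-dependency problem can be made concrete with a small sketch. The Python below is a toy model only: the application names, library names, and version strings are hypothetical, and `find_conflicts` is an illustrative helper, not part of any real packaging tool. It reports every library that two applications on the same VM pin to different versions.

```python
# Toy model: each application pins the library versions it needs.
# All application names, library names, and versions are hypothetical.
from collections import defaultdict


def find_conflicts(requirements):
    """Return libraries that applications on one shared VM pin to different versions."""
    versions_by_lib = defaultdict(dict)
    for app, pins in requirements.items():
        for lib, version in pins.items():
            versions_by_lib[lib][app] = version
    # A library is in conflict when more than one distinct version is requested.
    return {
        lib: by_app
        for lib, by_app in versions_by_lib.items()
        if len(set(by_app.values())) > 1
    }


shared_vm = {
    "billing": {"libssl": "1.1", "libxml": "2.9"},
    "reports": {"libssl": "3.0", "libxml": "2.9"},
}

conflicts = find_conflicts(shared_vm)
print(conflicts)
```

In this model only one copy of each library can be installed on the VM, so any entry in `conflicts` means that upgrading the library for one application risks breaking the other.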

You can add integration tests to ensure that applications work. Integration tests are great, but dependency problems can cause new failure modes that are hard to troubleshoot. It really slows down development if you must rely on integration tests to just perform basic integrity checks of your application environment.

The VM-centric way to solve this problem is to run a dedicated virtual machine for each application. Each application maintains its own dependencies and runs on its own isolated kernel, so one application will not affect the performance of another. With two applications, for example, you end up with two complete copies of the kernel running. But this approach has its own problem, as you have probably guessed. Scale it to hundreds of thousands of applications, and you can quickly see the limitation.

Just imagine trying to do a simple kernel update. So for large systems, dedicated VMs are redundant and wasteful. VMs are also relatively slow to start up because the entire operating system must boot.

A more efficient way to resolve the dependency problem is to implement abstraction at the level of the application and its dependencies. You don't have to virtualize the entire machine or even the entire operating system, just the user space. The user space is all the code that resides above the kernel, and it includes the applications and their dependencies. This is what it means to create containers. Containers are isolated user spaces for running application code.

Containers are lightweight because they don't carry a full operating system. They can be scheduled or packed tightly onto the underlying system, which is very efficient. They can be created and shut down very quickly because you're just starting and stopping the processes that make up the application, not booting an entire VM and initializing an operating system for each application. Developers appreciate this level of abstraction because they don't want to worry about the rest of the system.
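To get a feel for how cheap it is to start and stop a process, here is a minimal Python sketch. It does not create a container. It only launches an ordinary child process (a second Python interpreter that exits immediately) and times it. The helper name `time_process_start` is made up for this example, and the measured time will vary from machine to machine.

```python
import subprocess
import sys
import time


def time_process_start(command):
    """Run a command to completion and return (exit code, elapsed seconds)."""
    started = time.perf_counter()
    completed = subprocess.run(command, check=False)
    return completed.returncode, time.perf_counter() - started


# Launch a trivial child process: a new Python interpreter that exits at once.
exit_code, elapsed = time_process_start([sys.executable, "-c", "pass"])
print(f"exit code {exit_code}, took {elapsed:.3f} s")
```

Starting a container involves more setup than this, but the core of the work is the same kind of process start. No operating system has to boot first.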

Containerization is the next step in the evolution of managing code. You now understand containers as delivery vehicles for application code. They're lightweight, standalone, resource-efficient, portable execution packages. You develop application code in the usual way, on desktops, laptops, and servers. The container allows you to execute your final code on VMs without worrying about software dependencies such as:

• Application runtime,

• System tools,

• System libraries, and

• Other settings.

You package your code with all the dependencies it needs, and the engine that executes your container is responsible for making them available at runtime.

Containers appeal to developers because they're an application-centric way to deliver high‑performing and scalable applications. Containers also allow developers to safely make assumptions about the underlying hardware and software. With the Linux kernel underneath, you no longer have code that works on your laptop but doesn't work in production. The container is the same and runs the same anywhere. If you make incremental changes to a container based on a production image, you can deploy it very quickly with a single file copy. This speeds up your development process.
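One reason an incremental change can be deployed quickly is that common image formats store an image as a stack of layers, so a host that already has the production image only needs the layers that changed. The Python below is a toy model of that idea under stated assumptions: the layer contents are hypothetical, and `layer_digest` and `layers_to_copy` are illustrative helpers, not the API of any container engine.

```python
import hashlib


def layer_digest(content: bytes) -> str:
    """Identify a layer by the SHA-256 hash of its content."""
    return hashlib.sha256(content).hexdigest()


def layers_to_copy(new_image, layers_on_host):
    """Return the digests in new_image that the host does not already hold."""
    return [digest for digest in new_image if digest not in layers_on_host]


# Hypothetical layer contents, stacked from base to application code.
base = layer_digest(b"system libraries and tools")
runtime = layer_digest(b"application runtime")
app_v1 = layer_digest(b"application code v1")
app_v2 = layer_digest(b"application code v2")

production_image = [base, runtime, app_v1]
updated_image = [base, runtime, app_v2]

# The host already runs the production image, so it holds those layers.
missing = layers_to_copy(updated_image, set(production_image))
print(len(missing))
```

Only the application layer differs between the two images, so that is the only layer the host needs to receive.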

Finally, containers make it easier to build applications that use the microservices design pattern, that is, loosely coupled, fine-grained components. This modular design lets you scale and upgrade individual components of an application without affecting the application as a whole.
