I've set up a local Git LFS server using rudolfs, an open-source LFS server by jasonwhite.

https://github.com/jasonwhite/rudolfs

Background

I want to manage my Unity project on GitHub, but its Git LFS storage limit is 1 GB by default. So I looked for an LFS server that I could run locally to store large files on a local disk.

Git LFS's wiki has a list of Git LFS implementations.

https://github.com/git-lfs/git-lfs/wiki/Implementations

I chose rudolfs, which supports both Amazon S3 and local disk storage.

How to use

$ git clone https://github.com/jasonwhite/rudolfs.git

$ cd rudolfs

# Generate a random key to encrypt files.

# Save this key to your password manager.

$ openssl rand -hex 32

# Assign the key to a variable for the test run

$ KEY=********************************

# Test run with cargo

# Cache options are unnecessary when using a local disk

# "local" selects the local disk backend

# --path is the directory where large files are stored

$ cargo run -- --host localhost:8080 --key $KEY local --path data

The rudolfs server is now running at localhost:8080.
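As a small variation on the key step above, the generated key can be captured straight into the variable instead of being pasted by hand. This is only a sketch: it assumes `openssl` is on the PATH, and `key_len` is a name introduced here for illustration. The key never appears on screen this way, so it still has to be saved to a password manager separately.

```shell
# Generate 32 random bytes, hex-encoded, and keep them in KEY.
KEY=$(openssl rand -hex 32)

# Sanity check: 32 bytes in hex should be 64 lowercase hex characters.
case "$KEY" in
  *[!0-9a-f]*) echo "unexpected characters in key" >&2 ;;
esac
key_len=${#KEY}
echo "key length: $key_len"
```

The same `$KEY` can then be passed to the `cargo run` command shown earlier.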

Open localhost:8080 in a web browser and follow the instructions to set up Git LFS on your cloned local repository.

In my case, .lfsconfig looks like this.

[lfs]

url = "http://localhost:8080/api/kurohuku7/git-hub-reponame"
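If you would rather not edit the file by hand, the same entry can be written with `git config`. The sketch below runs in a scratch directory, and `my-org/my-project` is a placeholder, not a real repository; it follows the `/api/<owner>/<repository>` shape of the URL above.

```shell
# Work in a scratch directory so no real repository is touched.
workdir=$(mktemp -d)
cd "$workdir"

# Placeholder owner/repository names; replace them with your own.
git config -f .lfsconfig lfs.url "http://localhost:8080/api/my-org/my-project"

# Read the value back to confirm what Git LFS will pick up.
lfs_url=$(git config -f .lfsconfig --get lfs.url)
echo "$lfs_url"
cat .lfsconfig
```

In a real repository, run the `git config -f .lfsconfig ...` line from the repository root and commit the resulting `.lfsconfig`.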

For testing, I added the .mp4 extension to .gitattributes.

$ git lfs track "*.mp4"
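To see what that command changes, here is a minimal sketch that writes the `.gitattributes` line `git lfs track "*.mp4"` produces and asks Git which filter applies. It uses a throwaway repository, and `demo.mp4` and `notes.txt` are made-up file names; plain Git is enough for this check.

```shell
# Throwaway repository for the check.
repo=$(mktemp -d)
git init -q "$repo"
cd "$repo"

# The line that `git lfs track "*.mp4"` appends to .gitattributes.
printf '%s\n' '*.mp4 filter=lfs diff=lfs merge=lfs -text' > .gitattributes

# Ask Git which filter applies to each (hypothetical) file name.
mp4_filter=$(git check-attr filter -- demo.mp4)
txt_filter=$(git check-attr filter -- notes.txt)
echo "$mp4_filter"   # demo.mp4: filter: lfs
echo "$txt_filter"   # notes.txt: filter: unspecified
```

Only files matching the tracked pattern go through the LFS filter; everything else is stored in Git as usual.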

Then I added an mp4 file to my repo. When I pushed it to GitHub, the video was saved in the rudolfs data folder specified by the --path option.

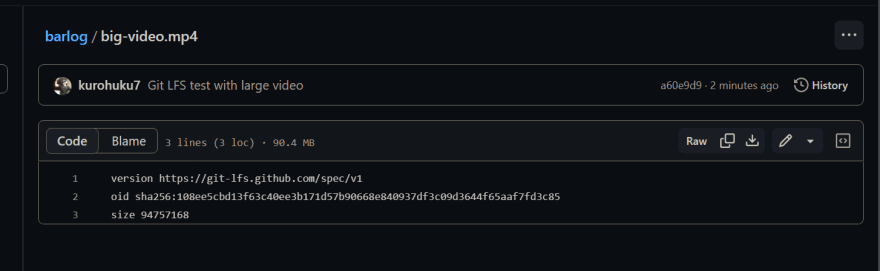

On GitHub, the video file content is just a text pointer to the LFS server instead of the original video.
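For reference, a Git LFS pointer is a three-line text file holding a spec version, the SHA-256 of the real content, and its size in bytes. The sketch below rebuilds one by hand for a tiny stand-in file. It assumes `sha256sum` is available (on macOS, `shasum -a 256` is the usual substitute), and the file name and content are made up.

```shell
# Stand-in for a large file: five bytes of text.
tmp=$(mktemp -d)
printf 'hello' > "$tmp/video.mp4"

# The object ID is the SHA-256 of the file content; size is in bytes.
oid=$(sha256sum "$tmp/video.mp4" | cut -d' ' -f1)
size=$(wc -c < "$tmp/video.mp4" | tr -d ' ')

# Assemble the pointer text in the Git LFS v1 format.
pointer=$(printf 'version https://git-lfs.github.com/spec/v1\noid sha256:%s\nsize %s\n' "$oid" "$size")
echo "$pointer"
```

This is what GitHub stores and displays in place of the video; the actual bytes live on the LFS server under that object ID.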

I deleted the local repository and re-cloned it from the remote. The large video file was fetched from the local disk through rudolfs. Perfect.

This is enough for my personal use case for now. I can manage code on GitHub and save large files to the local LFS server. All I have to do is launch rudolfs at startup or with some dev command.

Tried with Amazon S3

I also tried using Amazon S3 as an online Git LFS server with rudolfs.

- Create an IAM user with permissions to access S3.

- Generate an access key for the IAM user.

- Create a new bucket for the LFS server.

- Follow the rudolfs README.

Pass the AWS access key to rudolfs as environment variables and run the docker compose command. When you push large files as above, they should be saved in the S3 bucket instead of on a local disk.

That works fine, though a local disk is enough for me. I'm happy to manage code on GitHub without worrying about large file size limits.

Top comments (1)

How and where did you create the repository? I created an empty repository on GitHub, cloned it locally, and created the .lfsconfig file with the content below, but I still get an error when pushing.

[lfs]

url = "http://localhost:8080/api/test_lfs"

I cloned my repo into my home directory and am trying to push after tracking and adding a large .zip file.