There's a lot written about how the way developers structure their daily work can hurt productivity. One example is unnecessary meetings scattered across the day, so that nobody can get into deep focus. Today I want to look at the biggest killers of developer productivity: the way you configure and set up your DevOps workflow. In almost every situation I've come across, there were quick wins that helped avoid most of the problems.

Killer #1: Go all in on microservices without the proper tooling

When teams work in a monolithic setup, everything sort of works. The toolchain is built to handle this one monolith well. But changing one small thing requires deploying the whole monolith, and end-to-end tests need to run to verify that everything still works. The bigger the monolith, the less efficient this becomes. So the team goes ahead and adopts microservices. The first experience is great: colleagues can work on individual services independently, deployment frequency goes up and everybody is happy.

The problems start when teams get carried away and take the "micro" a little too seriously. From a tooling perspective, you now have to deal with many more YAML files and Dockerfiles, dependencies between the variables of these services, routing issues, etc. All of these need to be maintained, updated and cared for. Your CI/CD setup, your organizational structure and probably your headcount need a revamp.

If you move to microservices, for whatever reason, make sure you plan enough time to restructure your tooling setup and workflow. Just count the number of scripts scattered across various places that you will need to maintain. Think about how long this will take, who is responsible and what tools might help you keep it under control. When you choose tools, make sure they have a community of users running them in microservice setups.
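One way to act on "just count the scripts" is a small inventory script. The following is a minimal Python sketch under two assumptions. First, it assumes a repository where each top-level directory is one service. Second, it treats Dockerfiles, YAML files, shell scripts and Makefiles as "tooling". Both the layout and the file patterns are assumptions to adapt to your own setup.

```python
from collections import Counter
from pathlib import Path

# Assumed definition of "tooling" files; adjust these patterns to your own setup.
TOOLING_PATTERNS = ("Dockerfile", "*.Dockerfile", "*.yml", "*.yaml", "*.sh", "Makefile")
# Directories whose contents are not yours to maintain.
EXCLUDED_DIRS = {".git", "node_modules"}


def count_tooling_files(repo_root: str) -> Counter:
    """Count tooling files per top-level directory (assumed: one directory per service)."""
    root = Path(repo_root)
    counts: Counter = Counter()
    seen = set()  # guard against counting a file twice if patterns ever overlap
    for pattern in TOOLING_PATTERNS:
        for path in root.rglob(pattern):
            rel = path.relative_to(root)
            if not path.is_file() or path in seen:
                continue
            if any(part in EXCLUDED_DIRS for part in rel.parts):
                continue
            seen.add(path)
            # Files directly in the repository root are grouped under "<root>".
            service = rel.parts[0] if len(rel.parts) > 1 else "<root>"
            counts[service] += 1
    return counts
```

Calling `count_tooling_files(".")` from the repository root, and repeating it every few sprints, gives a rough sense of how much glue code each service is accumulating.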

Killer #2: Adopting Containers without a plan for externalising configuration

Containerization is an awesome technology for a lot of situations. However, it comes with a price tag and can affect your productivity. Containers add overhead from a security perspective and through the configuration and environment management they require. They can also hurt your productivity and developer experience if you don't agree on certain conventions as a team.

The most common mistake I see is building your config files or environment variables into your container image. The core idea of containerization is portability: the same image should run in every environment. By hard-coding configuration, you end up writing files and pipelines for every single environment. Want to change a URL? Go ahead and change it in 20 different places, then rebuild everything.

Before you start using containers at scale and in production, sit down as a team and agree on the configuration conventions that matter to you. Cover them consistently in code reviews and retros. Refactoring this after the fact is a pain.
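To make the "don't bake config into the image" convention concrete, here is a minimal Python sketch of the application side. It assumes the service receives its settings as environment variables at start-up. The variable names (`DATABASE_URL`, `PAYMENT_API_URL`, `LOG_LEVEL`) and the `load_config` helper are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Hypothetical settings this service cannot start without.
REQUIRED_VARS = ("DATABASE_URL", "PAYMENT_API_URL")


@dataclass(frozen=True)
class AppConfig:
    database_url: str
    payment_api_url: str
    log_level: str = "INFO"


def load_config(env: Optional[Mapping[str, str]] = None) -> AppConfig:
    """Read configuration at container start-up instead of baking it into the image."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if name not in env]
    if missing:
        # Fail fast rather than start a half-configured container.
        raise RuntimeError(f"missing required environment variables: {', '.join(missing)}")
    return AppConfig(
        database_url=env["DATABASE_URL"],
        payment_api_url=env["PAYMENT_API_URL"],
        log_level=env.get("LOG_LEVEL", "INFO"),
    )
```

With this pattern, the same image can be started in every environment, for example with `docker run -e DATABASE_URL=... -e PAYMENT_API_URL=... <image>`. Changing a URL then means changing one variable instead of rebuilding images.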

Killer #3: Adopt Kubernetes the wrong way

All the cool kids are hyped about an open source project called Kubernetes. However, Kubernetes is hard to keep running. It is also hard to integrate into your developer workflow while keeping productivity and experience high. A lot can go wrong:

Kubernetes worst case: Colleague XY really wanted to get their hands dirty and found a starter guide online. They set up a cluster on bare metal, and it worked great with the test app. They then started migrating the first application and asked their colleagues to start interacting with the cluster using kubectl. Half of the team is now preoccupied with learning this new technology. The poor person maintaining the cluster will be busy with it full-time the moment the first production workload goes live. The CI/CD setup is completely unprepared for this, and overall productivity drops as the entire team tries to juggle Kubernetes.

What can be done to prevent this: Kubernetes is an awesome technology and, if done right, can help achieve a PaaS-like developer experience. After all, it descends from Borg, the platform Google built to make it easy for its software engineers to build massively scalable applications. In that sense, it's an open source interpretation of Google's internal platform.

Best practices:

- Wherever possible, teams should not set up and run bare-bones clusters themselves but use a managed Kubernetes service instead. Read the reviews to find the managed Kubernetes offering that best suits your needs. At the time of writing, Google Kubernetes Engine (GKE) is by far the best from a pure tech perspective (though its permission schema is still a pain; what is your problem with permissions, Google?), closely followed by Azure Kubernetes Service (AKS). Amazon Elastic Kubernetes Service (Amazon EKS) is racing to catch up.

- Use automation platforms or a Continuous Delivery API such as the one offered by Humanitec. They let you run your workloads on K8s out of sight of your developers. There is almost zero value in exposing everyone to the complexity of the entire setup. I know the argument that "everybody should be able to do everything". But the pace of change is so fast and the degree of managed automation so high that it really doesn't make sense.

- If teams really want developers to manage the Kubernetes cluster themselves, they should give them adequate time to understand the architecture, design patterns, kubectl, etc., and to focus on it properly.

Killer #4: Forget to deal with Continuous Delivery

"Wait, I already have a CI tool." There is a common misconception that the job is done once there is a Continuous Integration setup. You are still missing Continuous Delivery! The confusion isn't helped by many vendors coining the term "CI/CD tool". That label gives the impression that you've nailed Continuous Delivery if you have Jenkins, CircleCI, etc., and that's not the case.

A well-tuned Continuous Delivery setup, either self-scripted or "as-a-Service", is the real "glue" in a team's toolchain:

- It allows all the different components, from source control system to CI-Pipeline, from database to cluster and from DNS setup to IaC to be integrated into a streamlined and convenient developer experience.

- It's a way to structure, maintain and manage the growing amount of YAML and configuration scripts. Done well, it allows your developers to dynamically spin up environments that use the artefacts built by the CI pipeline. Those environments come fully configured, with databases provisioned and everything set up.

- It can act as a version control system for configuration states, with an auditable record of what is deployed where and in which configuration. It lets you roll back and forth and manage blue/green and canary deployments.

- Well-thought-through CD setups have a game-changing effect on developer productivity. They let developers self-serve, with fewer dependencies within the team, while making your setup more maintainable.

- Teams using these practices ship more frequently and faster, and show higher overall performance and happiness.
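To illustrate the "version control for configuration states" idea, here is a deliberately small, in-memory Python sketch. `DeploymentLedger` and its methods are hypothetical, not the API of any CD product. A real setup would persist this record and actually trigger the deployments.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass(frozen=True)
class Deployment:
    environment: str
    image: str              # artefact built by the CI pipeline, e.g. an image tag
    config: Dict[str, str]  # configuration the artefact was deployed with
    deployed_at: datetime


@dataclass
class DeploymentLedger:
    """Append-only record of what was deployed where, and in which configuration."""
    history: List[Deployment] = field(default_factory=list)

    def deploy(self, environment: str, image: str, config: Dict[str, str]) -> Deployment:
        # Copy the config so later edits by the caller can't rewrite history.
        record = Deployment(environment, image, dict(config), datetime.now(timezone.utc))
        self.history.append(record)
        return record

    def current(self, environment: str) -> Deployment:
        """Return the most recent deployment to an environment."""
        for record in reversed(self.history):
            if record.environment == environment:
                return record
        raise LookupError(f"nothing deployed to {environment!r}")

    def rollback(self, environment: str) -> Deployment:
        """Redeploy the (image, config) pair that ran before the current one.

        The rollback is recorded as a new entry, so calling this twice in a row
        returns to where you started (rolling back and forth).
        """
        records = [r for r in self.history if r.environment == environment]
        if len(records) < 2:
            raise LookupError(f"no earlier deployment to roll back to in {environment!r}")
        previous = records[-2]
        return self.deploy(environment, previous.image, previous.config)
```

Recording a rollback as a new entry, rather than deleting history, is what keeps the trail auditable.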

Killer #5: Unmaintainable test automation in a limited test setup

Efficient testing is not possible without automation. With continuous delivery comes a continuous responsibility not to break anything.

You need to make sure you don't fall into the trap of inverting your test pyramid, i.e., relying mostly on slow, brittle end-to-end tests instead of many fast unit tests. To avoid this, you need to be able to run the right kind of tests at the right point in your development lifecycle.

Good CI tooling helps you put your unit and integration tests in the right place. CD tooling with configuration and environment management helps you run your automated end-to-end tests reliably.

Well-designed setups allow developers or testers to dynamically spin up preconfigured environments. Strictly externalize your configuration and make sure your configuration management injects these variables at deployment time. This leads to a number of improvements:

- You run the right tests at the right time while giving the development team fast feedback.

- Developers gain autonomy, and you reduce key-person dependencies.

- QA can test subsets through feature environments.

- QA can parallelize testing, which saves time, while testing on subsets of your data.
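The "inject configuration at deployment time" step for feature environments might look roughly like the following Python sketch. Several details are hypothetical: the branch-to-environment naming scheme, the `*.preview.example.com` domain, the per-environment database URL and the helper names. The point is that the variables are computed per environment and handed to an unchanged image.

```python
import re
from typing import Dict, Mapping, Optional

# Hypothetical values shared by every feature environment.
BASE_CONFIG: Dict[str, str] = {
    "LOG_LEVEL": "INFO",
    "PAYMENT_API_URL": "https://payments.staging.internal",
}


def slugify(branch: str) -> str:
    """Turn a branch name like 'feature/JIRA-12_login' into a DNS-safe label."""
    slug = re.sub(r"[^a-z0-9]+", "-", branch.lower()).strip("-")
    return slug[:40].rstrip("-") or "env"


def feature_environment_config(
    branch: str, overrides: Optional[Mapping[str, str]] = None
) -> Dict[str, str]:
    """Resolve the variables to inject into a feature environment at deployment time."""
    name = slugify(branch)
    config = dict(BASE_CONFIG)  # copy so the shared defaults are never mutated
    # Each environment gets its own URL and its own database (hypothetical naming scheme).
    config["ENVIRONMENT"] = name
    config["PUBLIC_URL"] = f"https://{name}.preview.example.com"
    config["DATABASE_URL"] = f"postgres://db.staging.internal:5432/app_{name.replace('-', '_')}"
    # Explicit overrides (e.g. LOG_LEVEL=DEBUG for a QA run) take precedence.
    config.update(overrides or {})
    return config
```

Because every environment is derived from the same function, QA can spin up several isolated environments in parallel without anyone hand-editing configuration files.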

Killer #6: Manage your databases yourself

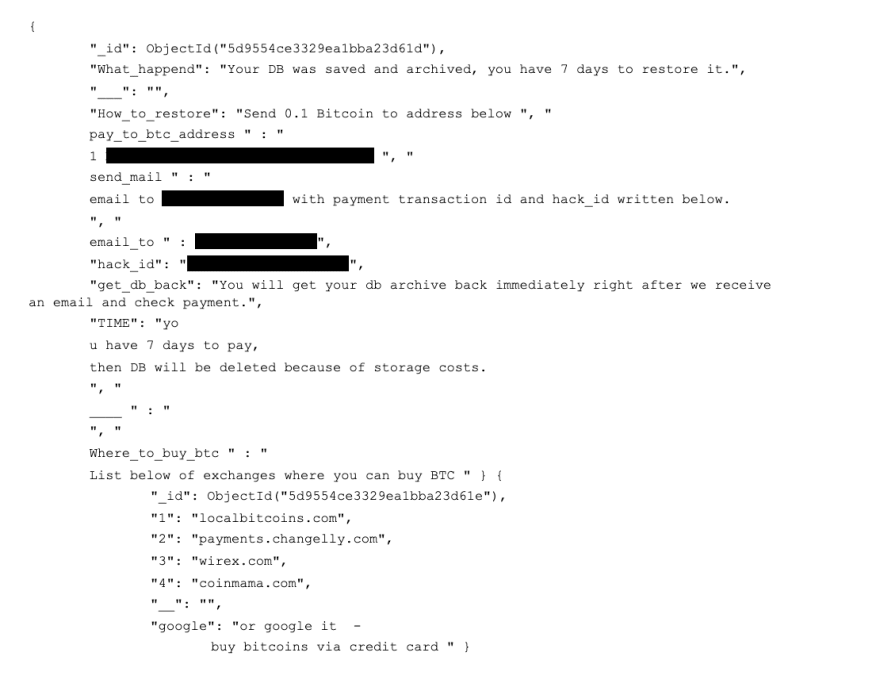

The teammate who just left was responsible for setting up MongoDB for a client project and of course used the open source project to run it themselves. And of course the handover was 'flawless' and of course the database wasn't protected properly and one evening this shows up where the data was supposed to be:

And of course:

You check the backups.

There was a syntax error.

You now have to reverse-engineer all the data.

This is a real-life example, and it happens frequently.

Self-managed databases are an operational and security risk. They are distracting, boring and unnecessary. Use Cloud SQL or another managed offering and sleep well. We commonly see teams using managed offerings from companies such as Aiven.io. These companies offer most databases and can run them for you on all the big cloud providers. Their offerings are more feature-rich, mature and sophisticated. They are also often cheaper and avoid lock-in while offering more developer convenience. When those benefits go hand in hand, I'd always take that option.

Killer #7: Go multi-cloud for no reason

There is a difference between simply going multi-cloud and designing your systems to be cloud-agnostic and portable. The latter has many advantages, such as dynamic environments, and makes more sense than going multi-cloud. Sure, there are reasons teams end up multi-cloud. One is historical legacy: one department had been using GCP, another started with AWS, and here you are. Another is specialization: one might argue that GPU workloads run more efficiently on GCP than on AWS, or point to cost. But for these effects to really surface, you need sufficient scale. Keeping a multi-cloud setup manageable requires a high degree of automation and shielding developers from provisioning and setup tasks. Otherwise, you end up in scripting hell.

As a general rule: don't go multi-cloud unless it's absolutely necessary.
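Designing for portability, as opposed to running on several clouds at once, often comes down to keeping provider-specific code behind narrow interfaces. Here is a minimal Python sketch with hypothetical names (`BlobStore`, `InMemoryBlobStore`, `archive_invoice`). Business logic depends only on the interface. A provider-specific adapter (not shown) would implement the same two methods on top of that provider's SDK.

```python
from typing import Dict, Protocol


class BlobStore(Protocol):
    """The only storage interface application code is allowed to depend on."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryBlobStore:
    """Local/test implementation; a cloud-specific adapter would implement the same methods."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def archive_invoice(store: BlobStore, invoice_id: str, pdf: bytes) -> str:
    """Business logic: knows nothing about which cloud it runs on."""
    key = f"invoices/{invoice_id}.pdf"
    store.put(key, pdf)
    return key
```

The in-memory implementation can also back short-lived test environments. Moving to another provider then means writing one new adapter rather than touching every service.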

Conclusion

I hope these points help you avoid the biggest mistakes in this field. Remember what Nicole Forsgren, Jez Humble and Gene Kim write in their book "Accelerate": "the top 1% of teams ship 10x more often".

This is because they get the most out of what is possible today. I spend one hour a month looking at my personal workflows, my to-do lists and the way I organize my apps. Why? Because inefficient flows really add up over the weeks. Tiny things, such as searching for your photo app, distract your brain. Stop and spend an afternoon a month making sure your productivity is streamlined. It will help you focus on innovation rather than configuration and will make for a happier team.

Reach out to me directly if you have ideas, comments or suggestions, or register for one of our free webinars to get in touch with us.
