Maybe you've had this problem: someone asks you to build a service that can export a bunch of data from a system. Recently I was working on a side project, a Shopify integration that needed to export a bunch of Shopify API metadata from one store and import it into another store.

It needed to work something like this:

That seems pretty simple, right? I thought so too. "This should take me a few days," I said. "I can knock it out over the weekend." 🤦♂️

Here's what I quickly discovered:

Challenge: Exporting big datasets won't work using only HTTP

HTTP is a synchronous, request/response protocol: when a client sends a request, it waits for a response. If it doesn't get one within a certain amount of time, it gives up and throws an error. But my export needed to accommodate pages and pages of Shopify metadata, each bit of metadata needing to be built with multiple API calls. There was no way I'd be able to build the export file in time to respond to a single HTTP request.

Even if I were able to put together the raw computing power to do a big export in a few seconds, there was no reason to do so. Important as they are, exports happen infrequently enough that users are fine waiting for them. A single HTTP request was just the wrong tool for the task.

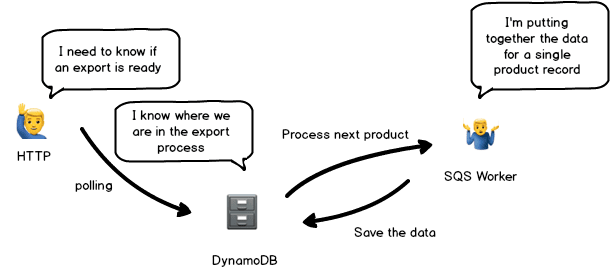

The first thing I did was switch to AWS SQS and wire up a DynamoDB table. So instead of my HTTP request trying to build an entire export, it just started an export, and the client would then poll regularly to ask whether it was done. So the new system looked like:

Much better. This model didn't care how big or small the Shopify data set was. It just issued SQS messages and Lambda workers would assemble the data. DynamoDB kept track of state and would just keep issuing batches of SQS messages until nothing was left to be exported. It would scale.
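To make the start-then-poll idea concrete, here is a minimal sketch of the two HTTP-facing operations. It is not the code from my project: the function names, the `export_id` key, and the `PENDING` status value are all made up for illustration, and it assumes `table` is a boto3 DynamoDB Table resource and `sqs` is a boto3 SQS client.

```python
import json
import uuid


def start_export(table, sqs, queue_url, store_id):
    """Record a new export job and enqueue the first unit of work.

    Assumes `table` is a boto3 DynamoDB Table resource and `sqs` is a
    boto3 SQS client. Both are passed in so the sketch is easy to test.
    """
    export_id = str(uuid.uuid4())
    # Persist the job state so later polling requests can be answered.
    table.put_item(
        Item={"export_id": export_id, "store_id": store_id, "status": "PENDING"}
    )
    # Hand the real work to a queue-triggered worker instead of doing it inline.
    sqs.send_message(
        QueueUrl=queue_url,
        MessageBody=json.dumps({"export_id": export_id, "page": 1}),
    )
    return export_id


def get_export_status(table, export_id):
    """Answer a polling request with the job's current status."""
    response = table.get_item(Key={"export_id": export_id})
    item = response.get("Item")
    return item["status"] if item else "NOT_FOUND"
```

The HTTP handler that starts an export returns the `export_id` right away, and the client keeps calling the status endpoint until the workers have marked the job as finished.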

Or so I thought, but I forgot one thing...

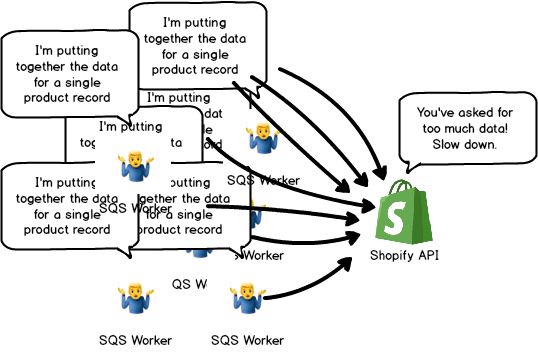

Challenge: Shopify's API is rate limited

The problem with this lovely scalable, asynchronous system was that it was too scalable. It performed like gangbusters, so much so that it immediately started hitting the Shopify API rate limit as soon as I tried it with a decent-sized store.

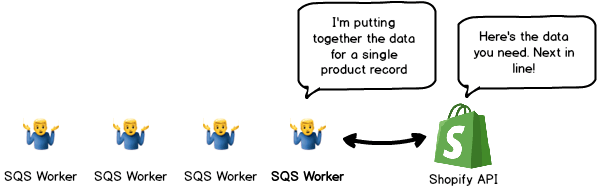

The underlying problem was that my process was asynchronous and nothing limited how much of it ran at once. It would just issue loads of SQS messages, generating loads of parallel Lambda workers, themselves generating loads of API requests. It was the equivalent of a hungry mob swarming a single food truck. The answer in both cases is "form an orderly line! We will serve you in order." In the AWS world this is a FIFO queue.

FIFO (first in, first out) queues are special SQS queues that make sure the messages in a message group form an orderly line and nobody cuts. For me this meant that I could post as many SQS messages as I wanted, as quickly as I wanted, but my workers would only trigger in sequence, never all at once. The best part of this is that I didn't have to significantly refactor any of my code. I only needed to change the queue type to FIFO, add a few extra parameters to my message, and AWS did the rest of the work. No more rate limit failures.
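For the curious, the extra parameters on a FIFO message look roughly like the sketch below. `MessageGroupId` and `MessageDeduplicationId` are real SQS parameters, but everything else here is an assumption for illustration: the function name, the message body shape, and the choice of one message group per store.

```python
import hashlib
import json


def build_fifo_message(queue_url, store_id, export_id, page):
    """Build keyword arguments for an SQS `send_message` call on a FIFO queue."""
    if not queue_url.endswith(".fifo"):
        # FIFO queue names, and therefore their URLs, end in ".fifo".
        raise ValueError("expected a FIFO queue URL ending in .fifo")
    body = json.dumps({"export_id": export_id, "page": page}, sort_keys=True)
    return {
        "QueueUrl": queue_url,
        "MessageBody": body,
        # Messages in one group are delivered in order, and SQS holds back the
        # rest of the group while earlier messages are still being processed.
        # One group per store is an assumption made for this sketch.
        "MessageGroupId": f"export-{store_id}",
        # Lets SQS drop an accidental re-send of the same unit of work.
        "MessageDeduplicationId": hashlib.sha256(body.encode("utf-8")).hexdigest(),
    }
```

With a boto3 SQS client this would be sent as `sqs.send_message(**build_fifo_message(...))`. Because every message for a store shares one group, the workers for that store run one after another instead of all at once.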

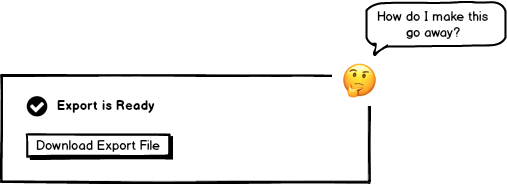

Challenge: Exports should expire

The last part of this is that I wanted exports to expire within 24 hours after they were ready. But the more I thought about this little feature, the more complicated it seemed. Should I build a scheduled cron function to query the DB for old records and delete them? That seemed like a lot of work and potentially brittle. That's when DynamoDB TTL came to the rescue.

TTL (time to live) is a lovely little feature of DynamoDB that lets you set an expiration date for a record. Just add an attribute that holds the time at which the record should expire, stored as a Unix timestamp in seconds, and tell the table which attribute to watch. In this case, instead of writing a bunch of logic to do this myself, I let DynamoDB handle the complexity. What could have been hours of development turned into minutes.
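Here's a rough sketch of what that looks like in practice. The attribute name `expires_at`, the item shape, and the function names are assumptions for illustration. `update_time_to_live` is the boto3 DynamoDB client call that turns TTL on for a table.

```python
import time

EXPORT_LIFETIME_SECONDS = 24 * 60 * 60  # the 24-hour window described above


def build_export_item(export_id, file_url, now=None):
    """Build the record for a finished export, stamped with its expiry time."""
    now = int(time.time()) if now is None else int(now)
    return {
        "export_id": export_id,
        "status": "READY",
        "file_url": file_url,
        # DynamoDB TTL expects a Unix epoch timestamp in seconds.
        "expires_at": now + EXPORT_LIFETIME_SECONDS,
    }


def enable_ttl(dynamodb_client, table_name):
    """One-time setup: tell the table which attribute holds the expiry time.

    Assumes `dynamodb_client` is a boto3 DynamoDB client.
    """
    dynamodb_client.update_time_to_live(
        TableName=table_name,
        TimeToLiveSpecification={"Enabled": True, "AttributeName": "expires_at"},
    )
```

One thing to keep in mind: DynamoDB removes expired items in the background, not at the exact second they expire. If an export must never be served past its deadline, the code that reads the record can also compare `expires_at` against the current time.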

Conclusion: Read the AWS docs

AWS is an incredibly broad ecosystem with a lot of features that are easy to miss. Frankly, while the docs are thorough, they don't exactly hold your hand when you're trying to figure out how something works. But if you spend the time to do the research up front, you'll save yourself hours and potentially days of dev work.

In this situation, two simple AWS features, FIFO and TTL, shaved days off a schedule that had already grown more than I wanted.

===

🤓 Hey, if you want to follow along with me as I build a static web host for designers, sign up for the Wunderbucket beta. Let me know that you're from the dev.to community and I'll be sure to put you to the front of the line.
