TLDR: Win trivia night with more than you may ever need to know about USPS deliverability data…but when that data is key to driving decisions, you’ll be glad you went on the following ride with us.

Our customers send millions of mailpieces through our direct mail automation software every single day, and doing so requires a great deal of trust. But what happens when we lose confidence in the data driving our product? (Spoiler alert: We level up.)

Houston Lob, we have a problem

Lob has a large indirect marketing customer that sends direct mail campaigns via our Print & Mail APIs. They own their address data but verify it against our Address Verification API. Month over month, they see a return to sender (RTS) rate of about 2.2% across all campaigns. But a few months ago, they reported individual campaigns in which as much as 97% of submitted mail came back to sender. Eek.

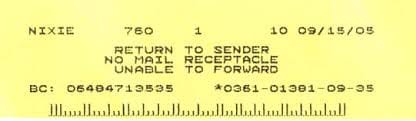

They sent us pictures of piles of postcards, each with the dreaded “nixie” (Return to Sender) label slapped on it. Mail can be returned to sender for a variety of reasons, such as an insufficient address, an inability to forward, or a vacant address. But these labels cited a different reason: “No Mail Receptacle.”

As the wording suggests, this means there was no mailbox or USPS-approved container to receive mail.

This customer facilitates direct mail campaigns for their own customers by letting them geographically select an area to send mail to. Some of the high RTS volume may come from users targeting a rural area, a specific complex that rejects marketing mail, or entire neighborhoods without mail receptacles. That answer is insufficient, though, because it leaves room to doubt the quality of our Address Verification (AV) product. Whatever the reason, numerous failed campaigns and irritated end users erode confidence in our solution. At this point, improving the product's efficacy became critical, and one solution came from re-evaluating the finer data points of our Address Verification API.

Digging into the data

The thing is, the data we had led us to believe these were clean addresses. Our API response would have looked something like the example in our API documentation. Notable fields include “deliverability”, “valid_address”, “deliverability_analysis”, and “lob_confidence_score”.
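The Python sketch below shows how a client might pull those four fields out of a single response. The nesting under “deliverability_analysis” and “lob_confidence_score”, the sample values, and the summarize helper are illustrative assumptions; the API documentation remains the authoritative schema. Every signal in this sample points to a deliverable address, which is what the data for the returned postcards looked like.

```python
# Hypothetical, trimmed-down verification response. The four top-level field
# names come from this post; the nesting and all values are assumptions.
sample_response = {
    "deliverability": "deliverable",
    "valid_address": True,
    "deliverability_analysis": {"dpv_footnotes": ["AA", "BB"]},
    "lob_confidence_score": {"score": 100, "level": "high"},
}


def summarize(resp: dict) -> dict:
    """Collect the deliverability signals discussed in this post from one response."""
    analysis = resp.get("deliverability_analysis", {})
    confidence = resp.get("lob_confidence_score", {})
    return {
        "deliverability": resp.get("deliverability"),
        "valid_address": resp.get("valid_address"),
        "dpv_footnotes": list(analysis.get("dpv_footnotes", [])),
        "confidence_score": confidence.get("score"),
    }


if __name__ == "__main__":
    print(summarize(sample_response))
```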

Lob’s Address Verification product is CASS-certified, and we include the USPS deliverability prediction in the API response. Upon investigation, we found that these postcards had been returned to sender even though the USPS predicted they would be “deliverable.”

The “valid_address” field indicates whether an address was found in a more comprehensive address dataset that includes sources from the USPS, open source mapping data, and our proprietary mail delivery data. These postcards were being returned despite a value of “true.”

Speaking of our proprietary data: in 2020, our team developed the Lob Confidence Score. Because we process addresses and ingest USPS tracking events from the mailpieces we send, we have an enormous amount of data that we can combine to calculate a Confidence Score predicting the likelihood of delivery. Yet these postcards were returned to sender even though Lob had 100% confidence they were deliverable.
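This post doesn't describe Lob’s actual scoring model. The sketch below is therefore only a toy illustration of the underlying idea, which is to join past tracking outcomes to addresses and estimate how likely the next piece is to arrive. The event format and the naive_scores function are hypothetical, and the simple delivered-share ratio stands in for the real model rather than reproducing it.

```python
from collections import defaultdict

# Hypothetical tracking history as (address_id, outcome) pairs. Real USPS
# tracking events are far richer; "delivered" / "returned" is a simplification.
events = [
    ("addr-1", "delivered"),
    ("addr-1", "delivered"),
    ("addr-2", "returned"),
    ("addr-2", "delivered"),
    ("addr-3", "returned"),
]


def naive_scores(events):
    """Score each address 0-100 as the share of its past pieces that were delivered."""
    delivered = defaultdict(int)
    total = defaultdict(int)
    for address_id, outcome in events:
        total[address_id] += 1
        if outcome == "delivered":
            delivered[address_id] += 1
    return {a: round(100 * delivered[a] / total[a]) for a in total}


if __name__ == "__main__":
    print(naive_scores(events))  # {'addr-1': 100, 'addr-2': 50, 'addr-3': 0}
```

A history-based score like this can only be as good as its history. An address whose past pieces all arrived scores 100, even if something about it has since changed.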

Digging (deeper) into the data

To get to the bottom of this, we had to dive into the USPS deliverability analysis. The USPS metadata includes Delivery Point Validation (DPV) information for each address, including “dpv_footnotes”: 2-character codes that provide more specific information or context. (For example, one footnote code indicates that the input address matches a military address, and another indicates a valid address that is missing a secondary number.) DPV is intended both to confirm USPS addresses and to identify potential addressing issues that may hinder delivery. These codes are one of many variables the USPS weighs when determining deliverability.
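Footnotes arrive as bare codes, so a client usually needs a lookup table to make them readable. The Python sketch below covers only a handful of codes, including the military (F1) and missing-secondary (N1) cases mentioned above. The descriptions are paraphrases rather than official USPS wording, and the explain_footnotes helper is hypothetical.

```python
# A small, paraphrased subset of USPS DPV footnote codes. Descriptions are our
# own summaries, not official wording; consult USPS / CASS documentation.
DPV_FOOTNOTES = {
    "AA": "Input address matched to the ZIP+4 file",
    "A1": "Input address not matched to the ZIP+4 file",
    "BB": "Input address matched to DPV (all components)",
    "F1": "Military address",
    "N1": "Primary number confirmed, but high-rise address is missing its secondary number",
}


def explain_footnotes(codes):
    """Map each 2-character footnote code to a readable description."""
    return [f"{code}: {DPV_FOOTNOTES.get(code, 'not in this lookup table')}" for code in codes]


if __name__ == "__main__":
    for line in explain_footnotes(["AA", "N1", "ZZ"]):
        print(line)
```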

Though not shown in our sample address, one of the DPV footnotes present was “R7”, which is short for Carrier Route R777, also known as Phantom Route. If you get asked on trivia night, this is the code for an “address confirmed but assigned to phantom route R777 or R779 and USPS delivery is not provided” (source). In practice, this means the mailpiece is not eligible for “street delivery”. The USPS still deems these addresses deliverable, however, because they can technically receive mail at the door. In other words, these addresses are technically deliverable but practically undeliverable.

Historically, our AV team followed suit: when an address carried the R7 footnote, we also reported it as deliverable.

But when our customer became buried in returned postcards, we decided Lob needed to change its response whenever we see the R7 DPV footnote. If any part of the USPS data indicates that an address is undeliverable, we should err on the side of caution and mark it undeliverable. The customer still receives all of the data in the response and can choose whether to proceed.
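The rule change itself is small. As a hedged illustration (not Lob’s production code), it amounts to an override like the one below. The function name and the plain-string deliverability values are assumptions made for the sketch. Because only the top-level verdict changes, the original USPS fields can still be returned alongside it.

```python
from collections.abc import Sequence


def adjusted_deliverability(usps_deliverability: str, dpv_footnotes: Sequence[str]) -> str:
    """Treat the R7 phantom-route footnote as undeliverable; pass everything else through."""
    if "R7" in dpv_footnotes:
        # Phantom route R777/R779: USPS delivery is not provided, so err on the side of caution.
        return "undeliverable"
    # No R7 footnote: keep the USPS prediction unchanged.
    return usps_deliverability


if __name__ == "__main__":
    print(adjusted_deliverability("deliverable", ["AA", "BB", "R7"]))  # undeliverable
    print(adjusted_deliverability("deliverable", ["AA", "BB"]))        # deliverable
```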

Problem solved, right?

Not entirely. Mail was still being returned to sender for addresses without the R7 footnote!?

Digging (deepest) into the data

We took the investigation to our contacts at the USPS to find out why these addresses were still being returned. The USPS indicated they were deliverable, they had received mail before (that is, they had a 100% Lob Confidence Score), and they lacked the R7 code.

The USPS informed us they were returning these to the sender based on a different identifier on the mailpiece:

“The Service Type Identifier (STID) came back as a Full-Service First Class Mail Piece using Informed Visibility with No Address Corrections. In this case, we return the pieces if they are undeliverable based on the address on the mail piece. That could be due to the customer moving, the address could be missing a suite or apartment number, etc.”
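For readers who haven't met the STID: on USPS automation mail, it is a 3-digit field inside the Intelligent Mail barcode (IMb), and it tells the USPS which mail class and services the mailer requested, such as Full-Service tracking or address correction. The Python sketch below shows where that field sits among the barcode's digits. It does not decode what any particular STID value means or validate every field, and parse_imb_digits is a hypothetical helper rather than a USPS API.

```python
def parse_imb_digits(digits: str) -> dict:
    """Split the numeric content of an Intelligent Mail barcode (IMb) into fields.

    Layout: 2-digit Barcode ID, 3-digit Service Type ID (STID), a 6- or 9-digit
    Mailer ID (9 digits when it starts with "9"), a serial number that fills out
    the 20-digit tracking code, then an optional 0/5/9/11-digit routing code.
    """
    if not digits.isdigit() or len(digits) not in (20, 25, 29, 31):
        raise ValueError("expected 20, 25, 29, or 31 digits")
    mid_len = 9 if digits[5] == "9" else 6
    return {
        "barcode_id": digits[0:2],
        "service_type_id": digits[2:5],
        "mailer_id": digits[5:5 + mid_len],
        "serial_number": digits[5 + mid_len:20],
        "routing_code": digits[20:],
    }


if __name__ == "__main__":
    # Made-up digits: every field value here is arbitrary, including the STID.
    sample = "00" + "300" + "123456" + "123456789" + "98947"
    print(parse_imb_digits(sample)["service_type_id"])  # 300
```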

Sure, these reasons could be valid, but since all data prior to the send predicted a successful delivery, were they?

Our team did not let up.

We sent 10 specific addresses to the USPS for a deeper dive, and we eventually got details from individual mail carriers. For our samples in Burlington, WI, “neither address has a mailbox.” For addresses in ZIP Code 98947, they reported “no street delivery for any address in the city of Tieton, WA.” This makes sense: in any area, there may be a whole cluster of houses without mailboxes. Ideally, the USPS would update each of these addresses to reflect its R7 status, but understandably it does not have the resources to tackle such a problem. And as we have learned, even with the correct DPV code, the USPS would still return a prediction of “deliverable” if queried.

That leads us to the unfortunate truth, which is that USPS data on deliverability is prone to imperfection.

Light at the end of the tunnel

But wait. We're not just surfacing USPS data alone, are we?

Our Address Verification team is back in the ring, and look who has a chair? Let’s not forget about the Lob Confidence Score!

Now, when we evaluate the same set of “problem” addresses, our Confidence Score is significantly more accurate. For example, when we re-analyzed the addresses from the 97% campaign, the Confidence Score shifted to reflect reality more closely: the majority of these addresses now have a Confidence Score of 0. The lower the score, the lower the confidence in successful delivery.
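A sender could act on this by screening a batch before mailing. The Python sketch below skips any address that is marked undeliverable or that scores below a threshold. The MIN_CONFIDENCE_SCORE value of 50 and the should_send helper are hypothetical. The sketch also assumes the score sits under “lob_confidence_score” → “score” in each verified record.

```python
# Hypothetical cutoff for illustration only; the post does not recommend one.
MIN_CONFIDENCE_SCORE = 50


def should_send(verified: dict, min_score: int = MIN_CONFIDENCE_SCORE) -> bool:
    """Decide whether to mail a verified address, erring on the side of caution."""
    if verified.get("deliverability") == "undeliverable":
        return False
    score = verified.get("lob_confidence_score", {}).get("score")
    # Treat a missing score as "not confident enough" rather than as a pass.
    return score is not None and score >= min_score


if __name__ == "__main__":
    batch = [
        {"deliverability": "deliverable", "lob_confidence_score": {"score": 0}},
        {"deliverability": "deliverable", "lob_confidence_score": {"score": 92}},
        {"deliverability": "undeliverable", "lob_confidence_score": {"score": 80}},
    ]
    print([should_send(a) for a in batch])  # [False, True, False]
```

Treating a missing score as a failure is a conservative default. A sender with a higher risk tolerance could flip it.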

Confidence Scores have improved and will continue to improve as we collect more delivery data. The more mail we send, the more tracking data we get, and the more valuable our Confidence Score becomes for our customers.

Oh, and NBD, but this customer is now seeing a 0.8% RTS rate across sends, down from 2.2%. That is a relative reduction of about 64%, or nearly two thirds! Needless to say, both the customer and our team are thrilled with this outcome.

Wrap up

And that is how we gained even more confidence in the Lob Confidence Score. The fact is, address data is prone to faults. It is manually entered, it is subject to clerical error and imperfection, and many of its elements are outside our control. It would be easy to simply roll over and accept this. But here at Lob, we own the outcome. To stand behind having the most comprehensive deliverability data available in the market, we will never stop improving our product.

Content adapted from a presentation by Solution Engineer Michael Morgan.
