Performance is the heartbeat of any JavaScript application, wielding a profound impact on user experience and overall success. It's not just about ...


You should distinguish between application performance and JavaScript performance. They are NOT the same:

Do you think cutting the time of the smallest part (the JavaScript routines) will cause any measurable performance boost?

Actually, continuously updating the VDOM (rerendering) is the biggest performance bottleneck for React apps (other than fetching data). The proposed optimizations help to decrease the number of rerenders as well as reduce the cost of rerendering.

If you look at React's history, the VDOM was invented to free developers from the burden of writing performant code. So it's really an interesting question whether streamlining your JavaScript will have much impact at all. If it does, React did a bad job.

It was invented to save time on browser DOM search and updating because DOM search methods are slower than usual JS tree/array search.

And VDOM's updating is based on the basic and fast comparison `===` operator instead of using deep equality, which is way slower, and it's easier to delegate reference updating to devs instead of inventing fast deep equality.

VDOM was invented to make DOM updates more efficient and it did. How often you update the VDOM and how long the VDOM update takes is still up to the developer. If you loop over a million table rows on every render, obviously it's going to cause problems.
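To illustrate the `===` point made above, here is a minimal sketch of why a reference check is cheap and why it depends on developers replacing objects instead of mutating them. The `prev` and `next` objects are made up for illustration, and this is not React's actual diffing code.

```javascript
// Hypothetical state object; the field names are illustrative only.
const prev = { user: { name: "Ada" }, rows: [1, 2, 3] };

// Immutable update: copy only the parts that change, reuse the rest.
const next = { ...prev, user: { ...prev.user, name: "Grace" } };

// A reference check is a single comparison, regardless of how big the data is.
console.log(prev.user === next.user); // false: user was replaced
console.log(prev.rows === next.rows); // true: rows was reused, so it can be skipped

// Mutating in place keeps the same reference, so a === check cannot see the change.
const sameRows = prev.rows;
prev.rows.push(4);
console.log(sameRows === prev.rows); // true, although the content is now different
```

The last line is the trade-off mentioned in the comment: the comparison stays fast, but keeping references up to date becomes the developer's job.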

You are totally right. But that means you should avoid looping unnecessarily over millions of table rows, not just loop faster!

Well yes, but sometimes it cannot be avoided and then it's better to make it as fast as possible

with HMR my rebuilds are usually really quick (like 4-5 seconds for initial build then < 1s most changes to modules not too high up the dependency tree)

Let me give you an example:

The DOM of this page is created completely dynamically with JavaScript. The main content is generated by a custom markdown parser, which is not optimized in any way. Building the DOM takes about 90 ms on the first run; rebuilding the whole DOM takes about 15 ms on my laptop with Chrome. So we are below 0.1 s in any case.

If you do some measurements with Chrome DevTools, the page load takes about 2.1 s (OK, I'm on a train now), but even on a faster network this will be more than 10 times slower than all JS actions. So even if I could speed up my code by 50%, this would not make any difference for the user.

Yeah I don't think there is any debate about this on this thread. Minuscule optimisations like that proposed in the post are never gonna yield any measurable improvement besides making the code hard to read for some people.

I'm not sure everybody is aware of the facts. It's the same with bundlers. The number of files and the order in which they are loaded is often much more important than the total file size. Nevertheless, there is a whole faction of developers squeezing every possible byte out of their library to win the contest "who has the smallest library". I have spent days decoding this highly optimized code to find some hidden bugs or simply to understand what the code does. And what good is it to have the world's smallest framework with less than 1 kB of code if your hero image is 20 times larger...

Couldn't agree more, and I cannot but partly recognize my younger self in your description.

Long ago for instance I had tasked myself with writing the shortest possible Promise library that passes the A+ test suite... I think my entry is quite brief indeed, but god, it's worse than regexps in its write-only nature, totally unmaintainable.

Rebuild !== rerender

I could only agree with some of your points when working on very simple projects on the front-end.

Yeah, when you run very simple code and you don't handle a lot of data, making another loop is not a bottleneck. But when working with millions of data entries on the front-end or the back-end (node/bun) it is very important to consider loop optimization.

That is why I still think @mainarthur 's post has value.

Whatever the number of loops, you'll do the exact same operations!

This code:

Is almost exactly the same as

Which again is the same as (assuming order of operations doesn't matter):

The only thing you're saving is a counter. It's peanuts.
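The snippets this comment refers to did not survive in this copy of the thread. As a hypothetical stand-in (the array and the two operations are invented for illustration), the sketch below counts how many times each operation runs in a two-loop version and in a one-loop version.

```javascript
const items = [1, 2, 3, 4];
let calls = 0;

// Two hypothetical operations; each call is counted.
const double = (x) => { calls += 1; return x * 2; };
const square = (x) => { calls += 1; return x * x; };

// Variant 1: two loops, one operation per iteration.
calls = 0;
const doubledA = [];
const squaredA = [];
for (const x of items) doubledA.push(double(x));
for (const x of items) squaredA.push(square(x));
const callsTwoLoops = calls;

// Variant 2: one loop, two operations per iteration.
calls = 0;
const doubledB = [];
const squaredB = [];
for (const x of items) {
  doubledB.push(double(x));
  squaredB.push(square(x));
}
const callsOneLoop = calls;

console.log(callsTwoLoops, callsOneLoop); // 8 8
```

Both variants call the operations eight times; the only thing the second variant skips is one extra pass of loop bookkeeping.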

They're not the same, but you cannot have good application performance with bad JS performance. When you deal with performance issues, the reason could be everywhere, and it also could be from antipatterns I've described in my post. It's beneficial to proactively avoid these issues before delving into debugging, freeing up your time to check other performance factors.

Thank you for your insights and the article regarding page performance. I'm planning to craft more comprehensive articles, both general and specific, targeting the performance pain points that affect most developers.

I was gonna say I couldn't remember a website where the JS seems ludicrously slow, then I remembered EC2 Console and Google Developer Console: they both suck so much.

Hmm, I like this idea, since API is taking 3s to load and I can't do anything to optimize it, let's do some extra iterations on the frontend just for fun 😊

Hi Ediur,

Not a bad idea. Call it AI (Artificial Iterations) and it will be the next big thing!

Agreeing with Eckehard. Optimisation and priorities are very very different in backend and in frontend. Backend and frontend are two very different beasts.

It is not only a question of priority. The reasons for a delay may be very different.

If an application needs to wait for some data, we can try to use some kind of cache if the result is used more than once. Or we can change the way in which data is requested. If you need to request a database schema via SQL before requesting the data, you get a lot of traffic back and forth, each round trip causing an additional delay. If you run the same operation on your database server and ask only for the result, you will get the result much faster.

But if an operation takes the same time to finish a million loop iterations, you would probably need a very different strategy. In that case, optimizing your code might help, but it is probably better to find a strategy that does not require so many operations at all.

To know whether an optimization helps at all, you need to know what causes the delay. Without this knowledge, you can waste a lot of time optimizing things that are fast anyway.
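A minimal sketch of the caching idea, assuming a slow data source that is asked for the same result more than once. `fetchReport` is a hypothetical stand-in for a real network or database call, and `cachedReport` is an illustrative name.

```javascript
// Hypothetical slow data source standing in for a real network or database call.
let requestCount = 0;
function fetchReport(id) {
  requestCount += 1;
  return new Promise((resolve) => {
    setTimeout(() => resolve({ id, total: id * 10 }), 50);
  });
}

// Cache the promise itself, so concurrent callers share one in-flight request.
const reportCache = new Map();
function cachedReport(id) {
  if (!reportCache.has(id)) {
    reportCache.set(id, fetchReport(id));
  }
  return reportCache.get(id);
}

// Two requests for the same id trigger only one call to the slow source.
const first = cachedReport(7);
const second = cachedReport(7);
console.log(first === second, requestCount); // true 1
```

This sketch never evicts entries and would also keep a rejected promise in the cache, so a real application would need an invalidation and error strategy on top.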
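One way to find out what causes a delay is simply to time the candidates before changing anything. The helper below is a rough sketch using the standard `performance.now()` timer; `timeIt` and the sample data are illustrative names, and single runs like this are noisy, so treat the numbers only as a first hint.

```javascript
// Minimal timing helper; `label` and `fn` are illustrative parameter names.
function timeIt(label, fn) {
  const start = performance.now();
  const result = fn();
  const elapsedMs = performance.now() - start;
  console.log(`${label}: ${elapsedMs.toFixed(2)} ms`);
  return { result, elapsedMs };
}

const data = Array.from({ length: 100000 }, (_, i) => i);

// Candidate 1: chained array methods.
const chainedRun = timeIt("map + filter", () =>
  data.map((x) => x * 2).filter((x) => x % 3 === 0)
);

// Candidate 2: a single explicit loop producing the same output.
const loopRun = timeIt("single loop", () => {
  const out = [];
  for (const x of data) {
    const doubled = x * 2;
    if (doubled % 3 === 0) out.push(doubled);
  }
  return out;
});
```

If both timings are tiny compared with the network time shown in the browser's dev tools, the loop is probably not where the delay comes from.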

In theory. But typically one shouldn't have a million loop iterations in the frontend; that's normally the backend's job. And that's my entire point. If one finds themselves having to optimise that kind of thing on the frontend, then I think they should take a step back and re-evaluate; they've probably got their frontend/backend division wrong.

imho... me thinks ;o)

But things are shifting. There are a lot of SPAs out there that just require some data and have no backend.

Aah, a lot of SPA's with a lot of data and million-loops, and no backend. I really know nothing about those, I don't even know an example case (feel free to share a url), but I'll take your word for it ;o)

See here, here, here, here, here, here, here or here.

This is from the Vue documentation:

If you just have a database running on a server, I would not call this a "backend". React or Vue can run completely in the browser, so you just need a web server and some API endpoints to get your data. An application like this would not run much faster if you tried to put the image processing on the server, but it's worth seeing what causes the delay.

Thanks a lot, i will read👍

dev.to/efpage/comment/2b22f

Realistically, they're getting the data from some backend somewhere, just not necessarily maintained by the maker of SPA. There are a lot of public APIs out there that can be consumed (which is how an SPA could have no backend but still huge data sets), and theoretically filters can be passed in requests to limit or prevent large data sets in the response.

Great article with simple tips to implement.

JS is notorious for abusing loops! Thanks for sharing.

Thank you for your feedback, @lnahrf ! Are there any JavaScript antipatterns you're familiar with and dislike?

Might be controversial, but I really dislike Promise.then - it creates badly formatted code (nesting hell), but for some reason I see a lot of developers using it instead of coding in a synchronous style with async/await.
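A small sketch of the difference described here, using two made-up helpers (`getUser` and `getTeam` are hypothetical and just resolve immediately). Both functions do the same work; only the shape of the code differs.

```javascript
// Hypothetical async helpers; they stand in for real API calls.
const getUser = (id) => Promise.resolve({ id, teamId: 42 });
const getTeam = (teamId) => Promise.resolve({ teamId, name: "Core" });

// Nested .then callbacks: each dependent step adds a level of indentation.
function teamLabelWithThen(userId) {
  return getUser(userId).then((user) => {
    return getTeam(user.teamId).then((team) => {
      return `${user.id}: ${team.name}`;
    });
  });
}

// The same flow with async/await reads top to bottom.
async function teamLabelWithAwait(userId) {
  const user = await getUser(userId);
  const team = await getTeam(user.teamId);
  return `${user.id}: ${team.name}`;
}

teamLabelWithThen(1).then((label) => console.log(label)); // 1: Core
teamLabelWithAwait(1).then((label) => console.log(label)); // 1: Core
```

The nesting in the first version comes from needing `user` in the inner callback; that is the typical case where `async`/`await` tends to be easier to read.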

Another architectural anti-pattern I notice quite a lot is the fact that developers name their components based on location and not functionality. For example, naming a generic tooltip component "homepageTooltip" (because we use it on the homepage) instead of "customTooltip".

But I am a backend engineer so I don't know anything about any of this 😁

I completely agree with the Promise.then point: async/await syntax is fantastic and widely supported across browsers and Node environments, so it should be prioritized more.

When it comes to naming and organizing components, I'm a fan of atomic design. Its structure is exceptional, clear, and easily shareable within a team.

This gave me an idea for a library - a library that implements all the common array utils, but using the Builder pattern, so you can chain the util functions together and they will be run only once at the same time.
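A rough sketch of what such a library could look like. The `Pipeline` class and its methods are hypothetical, not an existing package: the chained calls only record steps, and `toArray()` runs all of them in one pass.

```javascript
// Hypothetical builder; the class name and API are invented for illustration.
class Pipeline {
  constructor(source) {
    this.source = source;
    this.steps = [];
  }

  // Record a transformation step and return the builder for chaining.
  map(fn) {
    this.steps.push({ type: "map", fn });
    return this;
  }

  // Record a filtering step and return the builder for chaining.
  filter(fn) {
    this.steps.push({ type: "filter", fn });
    return this;
  }

  // Run every recorded step in a single pass over the source.
  toArray() {
    const out = [];
    outer: for (let value of this.source) {
      for (const step of this.steps) {
        if (step.type === "map") {
          value = step.fn(value);
        } else if (!step.fn(value)) {
          continue outer; // element rejected by a filter, skip remaining steps
        }
      }
      out.push(value);
    }
    return out;
  }
}

const result = new Pipeline([1, 2, 3, 4, 5, 6])
  .map((x) => x * 3)
  .filter((x) => x % 2 === 0)
  .toArray();

console.log(result); // [6, 12, 18]
```

The call site keeps the readable chained style, while the source array is walked only once and no intermediate array is created.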

Anyhow great article and thanks for the tip!

Hey! Great tips! There are other methods I like too, suchs as

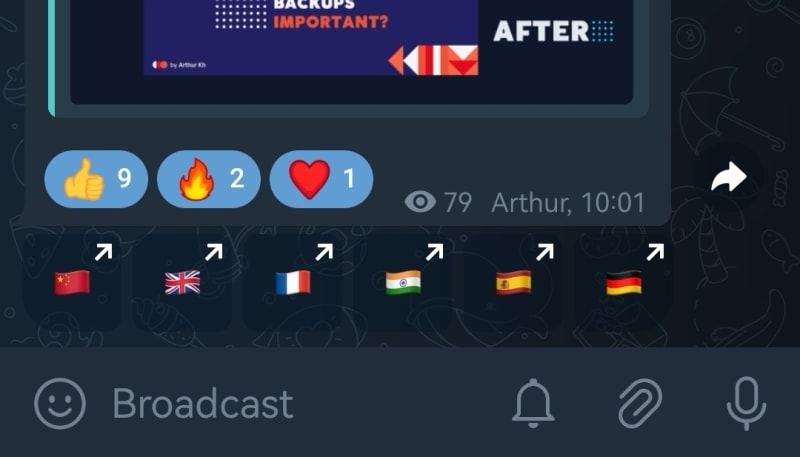

.trim()and.some()your Telegram channel is Russian! So I can't understand, unfortunately.

Hey!

I've developed a bot, which automatically translates my posts to popular languages so anyone can read

It's sometimes hard to choose which language to use when you're trilingual :D

Great tips, great article, thanks!

Personally I steer clear of using `reduce` for all but the simplest cases because it gets unreadable pretty quickly.

Using `.reduce` might lead to unreadable code, particularly when the reducer function grows larger. However, the reducer function can be refactored into a more readable form.

Compare `reduce` with `for of`, and you would realize that `for of` performs faster compared to `reduce`. I would give least preference to `reduce` over `for of`; `filter`, `map`, `reduce` all can be done in a single `for of`.

The choice between using array methods like `map`, `filter`, and `reduce` versus explicit loops often depends on your code style. While both approaches achieve similar results, the array methods offer clearer readability: map modifies the data structure, filter removes specific elements from an array, and reduce accumulates values. However, using loops, like 'for', might require more effort to grasp since they can perform a variety of tasks, making it necessary to spend time understanding the logic within the loop's body.

I don’t believe this to be accurate.

For starters, just because you're looping once doesn't mean the total number of operations changed. In your reduce function, you have two operations per iteration. In the chained methods there are two loops with one operation per iteration each.

In both cases the time complexity is O(2N) which is O(N). Which means their performance will be the same except for absolutely minor overhead from having two array methods instead of one.
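The operation count can be checked with a small experiment. This is an illustrative sketch with made-up data, not a benchmark: it counts one "operation" for every transform and every test, in both styles.

```javascript
const numbers = Array.from({ length: 1000 }, (_, i) => i);
let chainedOps = 0;
let fusedOps = 0;

// Chained: two passes over N elements, one operation per element per pass.
const chained = numbers
  .map((n) => { chainedOps += 1; return n * 2; })
  .filter((n) => { chainedOps += 1; return n % 3 === 0; });

// Fused: one pass over N elements, two operations per element.
const fused = numbers.reduce((acc, n) => {
  fusedOps += 1; // the transform
  const doubled = n * 2;
  fusedOps += 1; // the test
  if (doubled % 3 === 0) acc.push(doubled);
  return acc;
}, []);

console.log(chainedOps, fusedOps); // 2000 2000
```

Both versions perform 2N operations. What the chained version adds is a second pass of loop bookkeeping and one intermediate array, which is the "minor overhead" the comment refers to.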

Even if we assume you managed to reduce the number of operations, you can't reduce it a full order of magnitude. At best (if at all possible) you can make your optimization be O(N) while the chained version is O(xN), and for any constant x the difference will be quite minimal and only observable in extreme cases.

This is just a theoretical analysis of the proposal here. I haven't done any benchmarks, but my educated guess is that they will be in line with this logic.

I made a very similar comment, it pleases me to see it here already :)

I'm baffled by the number of people who seem to think that if they cram more operations into one loop they are somehow gonna reduce overall complexity in any meaningful way... But no: it'll still be O(n) where n is the length of the array.

Many people don't understand what "reduce" does, it can thus make more sense if you're in a team to use a map and a filter, which is extremely straightforward to understand and not a performance penalty.

I love obscure one-liners as much as any TypeScript geek with a love for functional programming, but I know I have to moderate myself when working within a team.

Shit, is big O complexity still studied in computer science?

The code you start off with is readable, but as you optimize it, it becomes less readable since you're fusing many operations into a single loop. Consider using a lazy collections or streaming library that applies these loop fusion optimizations for you, so you don't have to worry about intermediate collections being generated and can keep your focus on more readable single-purpose code. Libraries like lazy.js and Effect.ts help you do this.
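The idea behind lazy collections can be sketched with plain generator functions. The helpers below (`lazyMap`, `lazyFilter`, `take`) are illustrative names and are not the API of lazy.js or Effect; they only show how single-purpose steps can be composed without building intermediate arrays.

```javascript
// Generic lazy helpers built on generators; names are illustrative only.
function* lazyMap(iterable, fn) {
  for (const x of iterable) yield fn(x);
}

function* lazyFilter(iterable, predicate) {
  for (const x of iterable) {
    if (predicate(x)) yield x;
  }
}

function* take(iterable, n) {
  if (n <= 0) return;
  let taken = 0;
  for (const x of iterable) {
    yield x;
    taken += 1;
    if (taken >= n) return;
  }
}

const source = Array.from({ length: 100000 }, (_, i) => i);
let squaresComputed = 0;

// Each element flows through all steps before the next one is read.
const squares = lazyMap(source, (x) => { squaresComputed += 1; return x * x; });
const multiplesOfSeven = lazyFilter(squares, (x) => x % 7 === 0);
const firstThree = [...take(multiplesOfSeven, 3)];

console.log(firstThree); // [0, 49, 196]
console.log(squaresComputed); // 15
```

Only 15 of the 100000 elements are ever squared, because the pipeline stops as soon as three results have been produced, and each step stays a readable single-purpose function.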

While doing more operations in one loop is gonna save you incrementing a counter multiple times, you'll still have O(n) complexity where n is the size of your array, so you will most likely not save any measurable amount of resources at all.

Sometimes it is more clear to write map then filter just for readability, cuz not everyone understands what reduce does unfortunately.

Code that cannot be read fluently by the whole team is in my experience way more costly than a few sub-optimal parts in an algorithm that takes nanoseconds to complete.

You should study the subject of algorithmic complexity if you don't know much about this concept.