In large-scale systems, terms like Load Balancer, Reverse Proxy, and API Gateway come up often. They are frequently used interchangeably, but it is important to understand the differences between them. Once you do, it becomes much easier to choose the one that fits your problem.

Real life analogies

Let us take three examples, one for each component.

Picture a very busy restaurant. When you enter, you are greeted by a host or hostess, who is responsible for seating guests evenly across the waiters so that every guest gets the best possible experience. In this case, the host or hostess is the *Load Balancer*.

Now look at the mailroom in an office, where a receptionist takes care of packages and letters. They do a lot of work: sorting mail, delivering it to employees and departments, taking inventory of mailing supplies, forwarding misdirected mail, signing for certified mail, and so on. They do more than just sort and deliver mail (which you can think of as load balancing), and that receptionist is the Reverse Proxy.

If you go to a library, you will meet librarians who do many jobs: helping people find books and resources, verifying library cards, managing staff, responding to enquiries, making sure no one borrows too many books, and so on. They do more than the receptionist in the previous example, and in this case the librarian is the API Gateway.

Hopefully the examples above give you enough context to understand the differences between the three. Put simply, both a Reverse Proxy and an API Gateway provide load balancing along with other functionality. Also, an API Gateway is a specific type of reverse proxy designed for managing APIs. We will dig deeper into each of them.

Load Balancer

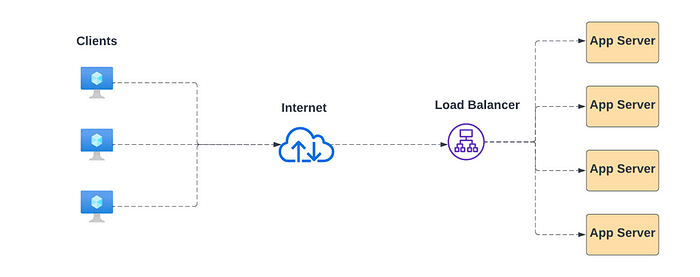

In this section we will look at what a load balancer is, the different types of load balancers, and various load balancing algorithms. Modern high-traffic websites face the challenge of handling concurrent requests from a large number of users. Whether it’s serving text, images, video, or application data, meeting these demands requires a substantial increase in processing power, often achieved by adding more servers. To make good use of all the servers, incoming requests need to be distributed among them. This process of distributing network traffic across a group of backend servers is known as Load Balancing. The accompanying diagram illustrates a typical load balancer environment.

The Load Balancer, a hardware device or software component dedicated to load balancing, sits in front of the servers. It is often described as a reverse proxy because it acts on behalf of the servers by receiving and routing requests. By spreading requests across the servers, it keeps any single server from becoming a bottleneck. If a server crashes, the load balancer redirects traffic to the remaining active servers. When a new server joins the group, the load balancer automatically starts routing requests to it.

Moreover, the load balancer can be configured to perform health checks on the registered backend servers. These health checks act as tests to verify the availability of the backend servers.
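To make the health-check idea concrete, here is a minimal sketch in Python. The class name `HealthCheckedPool`, the `probe` callable, and the IP addresses are all assumptions made for illustration; a real load balancer would typically probe an HTTP or TCP endpoint on a timer.

```python
from typing import Callable, Dict, List


class HealthCheckedPool:
    """Tracks which backends passed their most recent health check."""

    def __init__(self, backends: List[str], probe: Callable[[str], bool]) -> None:
        self._backends = list(backends)
        # Hypothetical probe: returns True when the backend answers correctly.
        self._probe = probe
        self._healthy: Dict[str, bool] = {b: True for b in self._backends}

    def run_health_checks(self) -> None:
        """Probe every registered backend and record the result."""
        for backend in self._backends:
            try:
                self._healthy[backend] = bool(self._probe(backend))
            except Exception:
                # A probe that raises (timeout, refused connection) is a failure.
                self._healthy[backend] = False

    def healthy_backends(self) -> List[str]:
        """Backends that are currently eligible to receive traffic."""
        return [b for b in self._backends if self._healthy[b]]


# A fake probe stands in for a real request to a health endpoint.
down = {"10.0.0.2"}
pool = HealthCheckedPool(["10.0.0.1", "10.0.0.2", "10.0.0.3"], lambda b: b not in down)
pool.run_health_checks()
print(pool.healthy_backends())
```

Only the backends returned by `healthy_backends()` would be handed to the routing algorithm, which is how traffic stops flowing to a crashed server and resumes once it recovers.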

Load Balancer Types

There are several types of load balancers; the two most important are:

- Network Load Balancer / Layer 4 (L4) Load Balancer — The Network Load Balancer functions at Layer 4, handling transport-level traffic such as TCP and UDP. It makes routing decisions based on network variables such as IP addresses and destination ports, and it does not inspect the content of the requests it forwards.

- Application Load Balancer / Layer 7 (L7) Load Balancer — Operating at Layer 7 of the OSI model, the Application Load Balancer distributes load based on application-level parameters such as the URL path, HTTP headers, or cookies. Because it typically terminates SSL/TLS itself, cipher and protocol policy can be managed in one place rather than on every backend, which simplifies the application and can improve security.
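The difference between the two types comes down to what information the balancer looks at. The sketch below is illustrative only: the `Request` type, the pool names, and the path prefixes are assumptions, and a real Layer 4 balancer works on packets or connections rather than Python objects.

```python
import zlib
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    client_ip: str
    dest_port: int
    path: str = "/"  # only visible to a Layer 7 balancer


def l4_pick(request: Request, pools_by_port: Dict[int, List[str]]) -> str:
    """Layer 4 style: decide using only the IP address and destination port."""
    pool = pools_by_port[request.dest_port]
    return pool[zlib.crc32(request.client_ip.encode("utf-8")) % len(pool)]


def l7_pick(request: Request, pools_by_prefix: Dict[str, List[str]],
            default_pool: List[str]) -> str:
    """Layer 7 style: decide using application data, here the URL path."""
    for prefix, pool in pools_by_prefix.items():
        if request.path.startswith(prefix):
            return pool[0]
    return default_pool[0]


req = Request(client_ip="198.51.100.7", dest_port=443, path="/images/cat.png")
print(l4_pick(req, {443: ["web-1", "web-2"]}))
print(l7_pick(req, {"/images/": ["img-1"], "/api/": ["api-1"]}, ["web-1"]))
```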

Load Balancer Algorithms

Static Algorithms

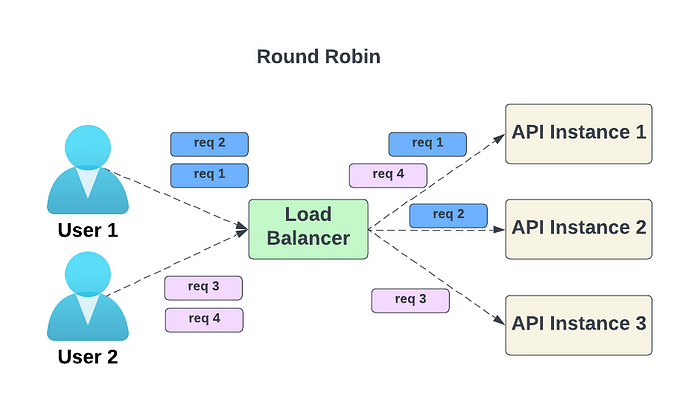

- Round robin — Client requests are sequentially sent to different service instances. Typically, statelessness is required for the services.

- Sticky round-robin — An enhanced version of the round-robin algorithm where subsequent requests from a client go to the same service instance that handled the initial request.

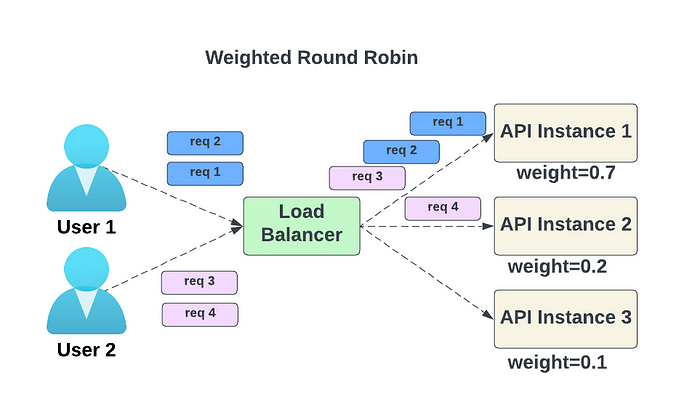

- Weighted round-robin — The administrator can assign weights to each service, determining the proportion of requests each service handles.

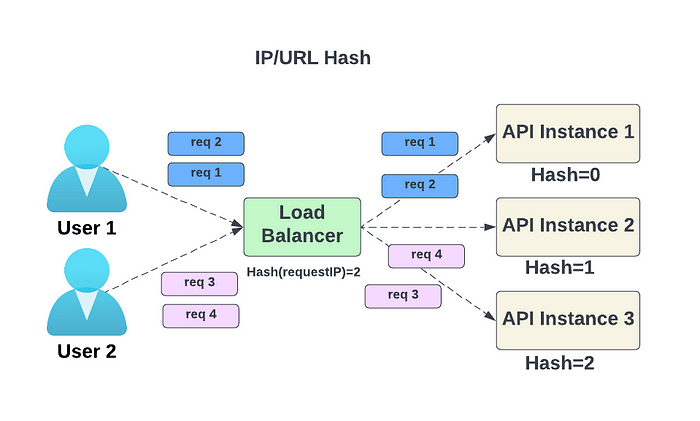

- Hash — This algorithm applies a hash function to the IP or URL of incoming requests. The instances to which requests are routed depend on the hash function’s result.
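The four static algorithms above can be sketched in a few lines of Python. This is a simplified illustration, not how any particular product implements them: the instance names are made up, and the weighted variant simply repeats each instance according to its weight rather than interleaving them smoothly.

```python
import hashlib
import itertools
from typing import Dict, Iterator, List


def round_robin(instances: List[str]) -> Iterator[str]:
    """Yield instances in order, wrapping around forever."""
    return itertools.cycle(instances)


class StickyRoundRobin:
    """Round robin for new clients; repeat clients return to their first instance."""

    def __init__(self, instances: List[str]) -> None:
        self._next = itertools.cycle(instances)
        self._assigned: Dict[str, str] = {}

    def pick(self, client_id: str) -> str:
        if client_id not in self._assigned:
            self._assigned[client_id] = next(self._next)
        return self._assigned[client_id]


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Yield each instance `weight` times per cycle."""
    expanded = [name for name, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(expanded)


def hash_pick(key: str, instances: List[str]) -> str:
    """Map a key (client IP or URL) to an instance with a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return instances[int.from_bytes(digest[:8], "big") % len(instances)]


rr = round_robin(["a", "b", "c"])
print([next(rr) for _ in range(4)])

wrr = weighted_round_robin({"a": 2, "b": 1})
print([next(wrr) for _ in range(6)])

print(hash_pick("198.51.100.7", ["a", "b", "c"]))
```

Note that the hash approach sends the same key to the same instance only while the instance list stays the same; adding or removing an instance remaps most keys unless a scheme such as consistent hashing is used.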

Dynamic Algorithms

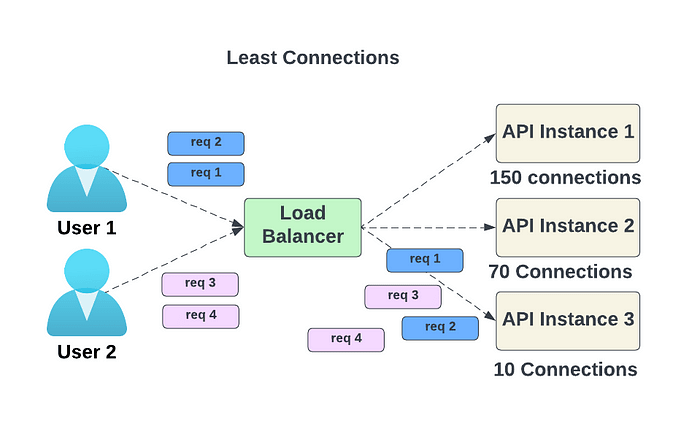

- Least connections — new requests are directed to the service instance with the fewest concurrent connections.

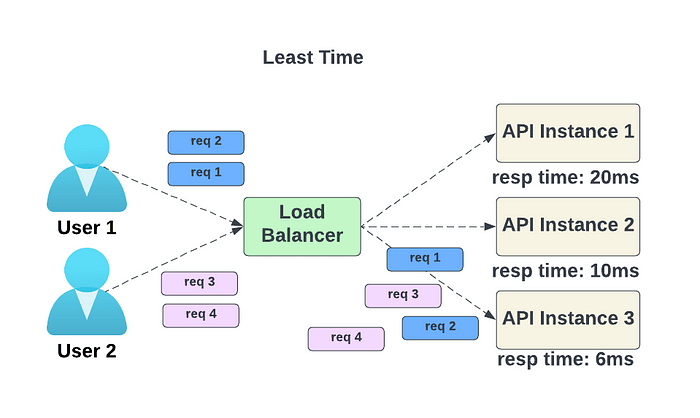

- Least response time — new requests are sent to the service instance with the quickest response time.
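Both dynamic algorithms rely on live measurements. The following sketch assumes the balancer already tracks active connection counts and observed latencies; the class name `LeastResponseTime`, the smoothing factor `alpha`, and the instance names are illustrative choices rather than anything prescribed by the algorithms themselves.

```python
from typing import Dict, List


def least_connections(active: Dict[str, int]) -> str:
    """Pick the instance with the fewest concurrent connections."""
    return min(active, key=lambda name: active[name])


class LeastResponseTime:
    """Picks the instance with the lowest moving-average latency."""

    def __init__(self, instances: List[str], alpha: float = 0.5) -> None:
        self._alpha = alpha
        # Starting at 0.0 means untried instances are preferred at first.
        self._avg_ms: Dict[str, float] = {name: 0.0 for name in instances}

    def record(self, instance: str, latency_ms: float) -> None:
        """Fold a new latency sample into the exponential moving average."""
        previous = self._avg_ms[instance]
        self._avg_ms[instance] = self._alpha * latency_ms + (1 - self._alpha) * previous

    def pick(self) -> str:
        return min(self._avg_ms, key=lambda name: self._avg_ms[name])


print(least_connections({"a": 3, "b": 1, "c": 2}))

lrt = LeastResponseTime(["a", "b"])
lrt.record("a", 100.0)
lrt.record("b", 20.0)
print(lrt.pick())
```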

Benefits of Load Balancer

- Efficiently distributes client requests or network load among multiple servers.

- Enhances response time by alleviating the load on specific servers.

- Ensures high availability and reliability by directing requests only to online servers.

- Facilitates scalability by dynamically adding or removing servers based on network requirements.

- Enables early detection of failures, allowing effective management without impacting other resources.

- Provides SSL termination, reducing the computational load on web servers by handling SSL traffic decryption.

- Enhances security with an additional layer of protection, defending systems against distributed denial-of-service (DDoS) and other attack types.

Issues with Load Balancer

- If deployed without redundancy, the load balancer itself can become a single point of failure.

- Requires careful configuration and monitoring.

Reverse Proxy

A reverse proxy functions as a mediator between clients and servers. Clients interact solely with the reverse proxy to reach backend servers, as the proxy forwards requests to the relevant server. This mechanism conceals the implementation specifics of individual servers within the internal network. As shown below, a reverse proxy sits in front of an origin server and ensures that no client ever communicates directly with that origin server.

A reverse proxy is frequently employed for:

- Load distribution via Load balancing.

- Caching — For repeated requests, it can independently respond, either in part or in full. Frequently accessed content is stored in the proxy cache, reducing the need to fetch data from the backend and providing clients with quicker responses.

- Security measures — It provides the option to implement control systems like antivirus or packet filters. These systems, situated between the Internet and the private network, offer added protection for the backends.

- SSL termination — It can be set up to decrypt incoming requests and encrypt outgoing responses, thereby freeing up valuable backend resources.
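The caching behaviour described above can be sketched as a small time-to-live cache in front of an origin. Everything here is an assumption for illustration: `CachingProxy`, the `fetch_from_origin` callable, and the 30-second TTL are invented names and values, and a real proxy would also honour HTTP cache headers.

```python
import time
from typing import Callable, Dict, Tuple


class CachingProxy:
    """Serves repeated requests from memory until the cached entry expires."""

    def __init__(self, fetch_from_origin: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache: Dict[str, Tuple[float, str]] = {}  # path -> (expiry, body)

    def get(self, path: str) -> str:
        now = self._clock()
        cached = self._cache.get(path)
        if cached is not None and cached[0] > now:
            return cached[1]  # cache hit: the origin is not contacted
        body = self._fetch(path)
        self._cache[path] = (now + self._ttl, body)
        return body


origin_calls = []


def origin(path: str) -> str:
    """Stand-in for a backend server; records each request it receives."""
    origin_calls.append(path)
    return f"content of {path}"


fake_now = [0.0]  # controllable clock so the example is deterministic
proxy = CachingProxy(origin, ttl_seconds=30, clock=lambda: fake_now[0])
proxy.get("/index.html")
proxy.get("/index.html")
print(len(origin_calls))
```

The second request is answered from the cache, so the origin sees only one call until the entry expires.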

Reverse proxies function at Layer 7 (application layer) of the OSI model, where they manage requests and responses at the HTTP level. This allows them to provide advanced features and functionalities. One such capability is URL rewriting, simplifying complex URLs and enhancing SEO.
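As a rough illustration of URL rewriting, the sketch below maps clean public paths onto the internal URLs a backend might expect. The rules and the backend paths (`catalog.php`, `posts.php`) are hypothetical examples, not taken from any specific proxy.

```python
import re
from typing import List, Pattern, Tuple

# Hypothetical rules: public, readable path -> internal backend URL.
REWRITE_RULES: List[Tuple[Pattern[str], str]] = [
    (re.compile(r"^/products/(\d+)$"), r"/catalog.php?item_id=\1"),
    (re.compile(r"^/blog/(\d{4})/([a-z0-9-]+)$"), r"/posts.php?year=\1&slug=\2"),
]


def rewrite(path: str) -> str:
    """Return the internal URL for a public path, or the path unchanged."""
    for pattern, replacement in REWRITE_RULES:
        if pattern.match(path):
            return pattern.sub(replacement, path)
    return path


print(rewrite("/products/42"))
print(rewrite("/blog/2024/hello-world"))
print(rewrite("/about"))
```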

Benefits of Reverse Proxy

By implementing a reverse proxy, a website or service can keep the IP addresses of its origin servers confidential. This adds a layer of protection against targeted attacks, such as DDoS attacks, because attackers can only direct their efforts at the reverse proxy. The reverse proxy is typically hardened and better provisioned to absorb such attacks than the origin servers are. Other benefits include caching and SSL termination.

API Gateway

Consider the API Gateway as an expanded version of a Reverse Proxy. The API Gateway not only forwards requests but also hides from clients how the backend is partitioned. It goes beyond simple request forwarding and may perform orchestration or aggregation. This simplifies client code and reduces the number of API requests and round trips. Instead of interacting with multiple backends, clients communicate solely with the API gateway. Another noteworthy aspect is protocol translation, where the API Gateway converts between protocols and data formats (e.g., XML to JSON, gRPC to JSON) to ease client-server integration. The API Gateway is also a natural place to address cross-cutting concerns such as security, reliability, scalability, observability, and traceability.
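To illustrate aggregation, the sketch below shows a gateway endpoint that calls three backend services and returns a single combined JSON response, so the client makes one request instead of three. The service names, fields, and the `get_order_summary` function are all hypothetical; the backends are modelled as plain callables rather than network calls.

```python
import json
from typing import Any, Callable, Dict

Service = Callable[[str], Dict[str, Any]]


def get_order_summary(order_id: str, services: Dict[str, Service]) -> str:
    """Combine data from several backends into one client-facing response."""
    order = services["orders"](order_id)
    customer = services["customers"](order["customer_id"])
    shipment = services["shipping"](order_id)
    return json.dumps({
        "order_id": order_id,
        "total": order["total"],
        "customer_name": customer["name"],
        "shipping_status": shipment["status"],
    }, sort_keys=True)


# Fake backends standing in for real microservices.
fake_services: Dict[str, Service] = {
    "orders": lambda order_id: {"customer_id": "c-9", "total": 59.5},
    "customers": lambda customer_id: {"name": "Ada"},
    "shipping": lambda order_id: {"status": "in transit"},
}

print(get_order_summary("o-1", fake_services))
```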

Key Features of API Gateway

- Request Routing — Guides incoming requests to the relevant service.

- API Composition — Combines multiple services into a unified API.

- Rate Limiting — Manages the volume of requests a user can make to an API within a defined time.

- Security — Incorporates features such as authentication and authorization.

- Centralized Management — Offers a unified platform for managing all aspects within our ecosystem.

- Analytics and Monitoring — Enables comprehensive analysis and tracking capabilities.

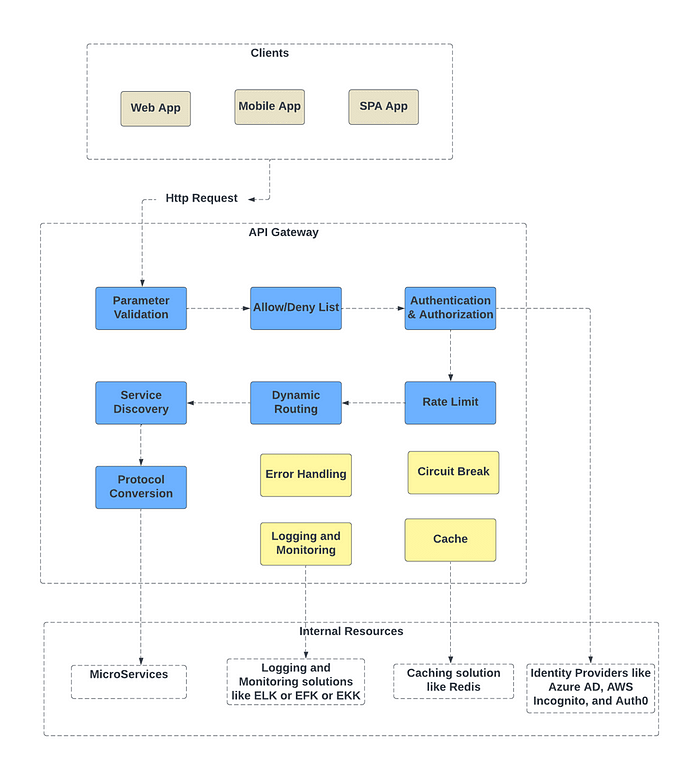
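Of the features above, rate limiting is the easiest to show in code. The sketch below uses a fixed-window counter, which is only one of several common strategies (token bucket and sliding window are others); the class name, limit, and window size are illustrative assumptions.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowRateLimiter:
    """Allows at most `limit` requests per user in each time window."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._limit = limit
        self._window = window_seconds
        self._clock = clock
        self._counters: Dict[str, Tuple[int, int]] = {}  # user -> (window index, count)

    def allow(self, user_id: str) -> bool:
        window_index = int(self._clock() // self._window)
        index, count = self._counters.get(user_id, (window_index, 0))
        if index != window_index:
            # A new window has started, so the count resets.
            index, count = window_index, 0
        if count >= self._limit:
            self._counters[user_id] = (index, count)
            return False
        self._counters[user_id] = (index, count + 1)
        return True


now = [0.0]  # controllable clock so the example is deterministic
limiter = FixedWindowRateLimiter(limit=2, window_seconds=60, clock=lambda: now[0])
print([limiter.allow("alice") for _ in range(3)])
```

A gateway would typically respond with HTTP status 429 (Too Many Requests) when `allow` returns `False`.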

API Gateway Internal Steps

In the diagram below, the blue blocks are the steps an HTTP request passes through. The yellow blocks are other functions the API Gateway provides while processing HTTP requests.

- Parameter Validation — The API gateway examines and validates the attributes within the HTTP request.

- Allow/Deny List — API gateway assesses allow/deny lists for request validation.

- Authentication & Authorization — The API gateway authenticates the caller and checks that they are authorized, typically by consulting an identity provider.

- Rate Limit — Rate-limiting rules are enforced, and requests exceeding the limit are declined.

- Dynamic Routing and Service Discovery — The API gateway directs the request to the relevant backend service using path matching.

- Protocol Conversion — API gateway converts the request into the suitable protocol and relays it to backend microservices.

- Error Handling — The API gateway manages any encountered errors during request processing to ensure graceful service degradation.

- Circuit Breaker — The API gateway incorporates resiliency patterns such as circuit breakers, which detect failing services and stop sending them requests for a while, preventing overload of interconnected services and mitigating cascading failures.

- Logging and Monitoring — Using observability tools such as the ELK stack (Elasticsearch, Logstash, Kibana), the API gateway facilitates logging, monitoring, tracing, and debugging.

- Cache — The API gateway may choose to cache responses for recurring requests, enhancing overall responsiveness.
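The request-path steps above can be pictured as a chain of checks, each of which either rejects the request or passes it on. The sketch below covers validation, the deny list, authentication, and routing only; the `Request` type, the deny list, the token, and the route table are hypothetical values chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Response = Tuple[int, str]  # (HTTP status code, body)


@dataclass
class Request:
    client_ip: str
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


# Hypothetical configuration for the example.
DENY_LIST = {"203.0.113.9"}
VALID_TOKENS = {"secret-token"}
ROUTES: Dict[str, Callable[[Request], Response]] = {
    "/orders": lambda req: (200, "orders service"),
    "/users": lambda req: (200, "users service"),
}


def validate(req: Request) -> Optional[Response]:
    return None if req.path.startswith("/") else (400, "bad request")


def check_deny_list(req: Request) -> Optional[Response]:
    return (403, "forbidden") if req.client_ip in DENY_LIST else None


def authenticate(req: Request) -> Optional[Response]:
    header = req.headers.get("Authorization", "")
    prefix = "Bearer "
    if not header.startswith(prefix) or header[len(prefix):] not in VALID_TOKENS:
        return (401, "unauthorized")
    return None


def route(req: Request) -> Optional[Response]:
    """Path-prefix matching; the first matching prefix wins."""
    for path_prefix, handler in ROUTES.items():
        if req.path.startswith(path_prefix):
            return handler(req)
    return (404, "no route")


PIPELINE: List[Callable[[Request], Optional[Response]]] = [
    validate, check_deny_list, authenticate, route,
]


def handle(req: Request) -> Response:
    """Run each step in order; the first step that returns a response ends the chain."""
    for step in PIPELINE:
        response = step(req)
        if response is not None:
            return response
    return (500, "pipeline produced no response")


auth = {"Authorization": "Bearer secret-token"}
print(handle(Request("198.51.100.1", "/orders/7", auth)))
print(handle(Request("203.0.113.9", "/orders/7", auth)))
```

Ordering the cheap checks first means a denied or unauthenticated request never reaches a backend service.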

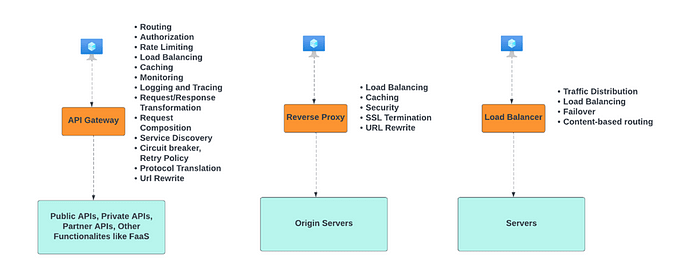
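The circuit-breaking step listed above deserves its own example, since it is less familiar than the others. This is a minimal sketch of the pattern with invented names and thresholds: after a number of consecutive failures the breaker "opens" and rejects calls immediately, then allows a single trial call once a cool-down period has passed.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int, reset_after_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._threshold = failure_threshold
        self._reset_after = reset_after_seconds
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable[..., Any], *args: Any) -> Any:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after:
                raise CircuitOpenError("backend temporarily disabled")
            # Cool-down elapsed: allow one trial call. A single failure
            # will re-open the circuit straight away.
            self._opened_at = None
            self._failures = self._threshold - 1
        try:
            result = func(*args)
        except Exception:
            self._failures += 1
            if self._failures >= self._threshold:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # a success closes the circuit fully
        return result


now = [0.0]  # controllable clock so the example is deterministic
breaker = CircuitBreaker(failure_threshold=2, reset_after_seconds=10, clock=lambda: now[0])


def failing_backend() -> str:
    raise RuntimeError("backend error")


for _ in range(3):
    try:
        breaker.call(failing_backend)
    except CircuitOpenError:
        print("rejected without calling the backend")
    except RuntimeError:
        print("backend call failed")
```

While the circuit is open the struggling backend receives no traffic, which gives it room to recover and keeps callers from waiting on requests that are likely to fail.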

Key differences between the three

- API Gateways specialize in API management, Load Balancers distribute network traffic, and Reverse Proxies ensure secure request forwarding.

- While both API Gateways and Reverse Proxies can manage request routing, their core objectives vary.

- Load Balancers primarily function at the transport layer (although Layer 7 load balancers also exist, as covered earlier), whereas API Gateways and Reverse Proxies operate at the application layer.

More differences are shown below.

When to use which?

- Load Balancers excel in distributing network traffic to enhance availability, scalability, and even load distribution across multiple servers, commonly applied in web applications and services.

- API Gateways focus on the management, security, and optimization of APIs within microservices architectures. Their pivotal role involves exposing, securing, and controlling access to APIs.

- Reverse Proxies find application in security, performance optimization, and load balancing. They are frequently utilized in web servers, caching solutions, and as integral components of application delivery networks.

Navigating the intricate landscape of load balancers, reverse proxies, and API gateways might seem challenging, but armed with the right knowledge, you’re now equipped to make informed decisions and select the ideal components for your web application. Keep in mind that load balancers distribute traffic across multiple backend servers, reverse proxies provide additional application-level features, and API gateways offer centralized management and security for microservices-based applications.

Moreover, feel free to explore a mix-and-match approach with these components for optimal results. Combining load balancers, reverse proxies, and API gateways allows you to construct a web architecture that is not only efficient but also secure and scalable. Embrace the power of these components to unlock the full potential of your application.
