Do you plan to scrape websites using JavaScript? With the help of the Node.js platform and its associated libraries, you can use JavaScript to develop web scrapers that can scrape data from any website you like.

We are in an era where businesses depend heavily on data, and the Internet is a huge source of it, much of it textual. Social and business researchers want to collect data from websites that hold information relevant to them. Unfortunately, most websites do not make it easy to collect that data in bulk. For this reason, researchers have to collect it themselves by automated means. Using automated programs, called web scrapers, to collect publicly accessible data from web pages is known as web scraping.


Web scrapers can be developed in any general-purpose programming language that can make network requests. Java, PHP, Python, JavaScript, C/C++, and C#, among others, have all been used to write web scrapers. That said, some languages are far more popular than others for this task. JavaScript has not traditionally been a popular choice, but its popularity for web scraping has been rising, thanks to the availability of web scraping libraries. In this article, I will show you how to develop web scrapers using JavaScript.

Node.js – The Game Changer

JavaScript was originally developed for frontend web development, to add interactivity and responsiveness to web pages. For a long time, it could only run inside a browser, so it could not be used for backend development the way Python, Java, and C++ can. As a result, a developer needed to be proficient in two languages to do both frontend and backend development. However, many developers felt that JavaScript was a complete programming language and should not be confined to the browser. This led Ryan Dahl to develop Node.js, a JavaScript runtime environment built on Chrome's V8 JavaScript engine. With Node.js, you can write code and run it on PCs and servers, just like PHP, Java, and Python. This led many developers to take JavaScript seriously as a complete language, and many libraries and frameworks were developed to make backend programming in JavaScript easier. With Node.js, you can now use one language to write code for both the frontend and the backend.


As a JavaScript developer, you can develop a complete web scraper using JavaScript, and you will use Node.js to run it. I will show you how to code a web scraper using JavaScript and some Node.js libraries.

Installations and Setup

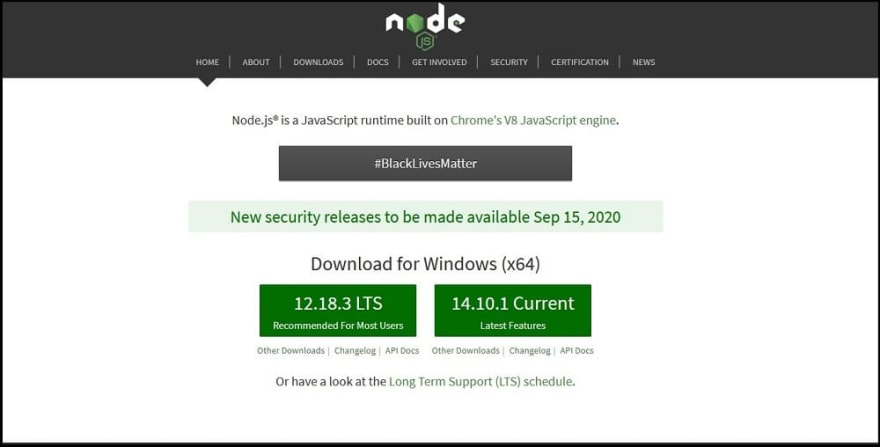

Unlike the JavaScript runtime that comes built into every modern browser, Node.js has to be installed before you can use it for development. You can download it from the official Node.js website; at the time of writing, the installer was less than 20MB for Windows users. The installer also includes npm, the package manager used below to install libraries. After installing Node.js, enter the command below in your command line to check whether it has been installed successfully.


node --version

If the command prints a version number instead of an error message, Node.js has been installed successfully. You can also confirm by looking for Node.js in your list of installed programs. The next step is to install the libraries (modules) needed for web scraping. For this tutorial, I advise you to create a new folder on your desktop and name it web scraper. Then launch Command Prompt (or a terminal on macOS or Linux) and navigate to the folder using the command below.

cd "Desktop/web scraper"

Now, let's install the Node.js packages needed for web scraping. Don't close Command Prompt yet.


Axios

Axios is a promise-based HTTP client for Node.js and the browser, and it is one of the most important libraries for web scraping in JavaScript. Like a browser, it sends web requests and gives you back the responses. You will use it to download the pages you want to scrape data from. To install Axios, use the command below.

npm install axios


Cheerio

Cheerio is a lightweight library for parsing HTML and traversing the DOM of pages downloaded with Axios, so you can extract the data you need. Its syntax is similar to jQuery's, so if you already know jQuery, you will have no problem using it. Use the command below to install it.

npm install cheerio


Puppeteer

If you are scraping static pages, the libraries above will do the job. However, if the pages you want to scrape generate their content dynamically with JavaScript, Axios is not enough, because it only downloads what the server sends back in response to its request; it does not run the page's JavaScript. For dynamic websites like these, you need a browser automation tool that controls a real browser, so that all the content loads before you scrape it. Puppeteer is a Node.js library that controls Chrome (or Chromium), and you can install it with the command below. Installing it also downloads a compatible version of Chrome, so expect a larger download than for the other packages.

npm install puppeteer
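Before writing any scraper, it can help to confirm that the three packages are actually visible to Node.js. A minimal sketch, assuming you save it as a file (for example, the hypothetical `check-setup.js`) inside the web scraper folder and run it with `node check-setup.js`; `require.resolve` is used because it locates a package without loading it.

```javascript
// Hypothetical check-setup.js: report the Node.js version and whether each
// package installed in this tutorial can be found from this file's folder.
const packages = ["axios", "cheerio", "puppeteer"];

function checkSetup() {
  const report = { node: process.version, packages: {} };
  for (const name of packages) {
    try {
      // require.resolve finds a package without loading it; it throws if missing
      require.resolve(name);
      report.packages[name] = true;
    } catch {
      report.packages[name] = false;
    }
  }
  return report;
}

console.log(checkSetup());
```

Any package reported as `false` has not been installed in (or above) the folder where the script lives.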

Scraping from Static Websites

Scraping static web pages is the easiest case, at least when anti-scraping systems are left out of consideration. For a static page, all you need to do is use an HTTP client (Axios) to request the page; the website's server sends back the page as HTML. You can then use Cheerio to traverse the DOM and extract the required data. In the example below, I use JavaScript to scrape the text inside the h1 tag of https://example.com/. Axios downloads the whole page, and Cheerio traverses the DOM and extracts the text within the h1 tag.

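const axios = require("axios");
const cheerio = require("cheerio");

// Download a page with Axios and load its HTML into Cheerio
async function fetchHTML(url) {
  const { data } = await axios.get(url);
  return cheerio.load(data);
}

// Wrap the calls in an async function: top-level await is not allowed in CommonJS scripts
async function main() {
  const $ = await fetchHTML("https://example.com");

  // Print the full HTML
  console.log(`Site HTML: ${$.html()}\n\n`);

  // Print some specific page content
  console.log(`First h1 tag: ${$("h1").first().text()}`);
}

main().catch(console.error);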

Scraping Dynamic Websites

Dynamic websites pose a serious challenge to web scrapers. Websites on the Internet were initially built as static sites with little or no interactivity. On such sites, when you request a page, all of its content is loaded at once, which is the easiest model for web scrapers. With the advent of dynamic websites, however, many pages no longer load all their content at once; some of it is loaded by JavaScript after the page arrives, or only in response to events such as scrolling or clicking. If you need to scrape a website like this, you will need Puppeteer.

Puppeteer controls Chrome: it visits the website, lets the page's JavaScript run and load the content, and once the content has loaded, you can scrape the required data. In the example below, the code scrapes the title and plot summary of the movie Fast Five from its IMDb page. The CSS selectors match IMDb's page layout at the time of writing; IMDb has since redesigned its title pages, so they may no longer match. There is much more you can do with Puppeteer; read the Puppeteer documentation to learn more about its API and usage.

const puppeteer = require("puppeteer");

async function collectData() {
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    await page.goto("https://www.imdb.com/title/tt1013752/");

    // page.evaluate runs this function inside the page (the browser context)
    const data = await page.evaluate(() => {
      // Selectors reflect IMDb's older layout; querySelector returns null if they no longer match
      const title = document.querySelector(
        "#title-overview-widget > div.vital > div.title_block > div > div.titleBar > div.title_wrapper > h1"
      )?.innerText ?? null;

      const summary = document.querySelector(
        "#title-overview-widget > div.plot_summary_wrapper > div.plot_summary > div.summary_text"
      )?.innerText ?? null;

      // This object will be stored in the data variable
      return { title, summary };
    });

    console.log(data);
  } finally {
    // Close the browser even if navigation or scraping fails
    await browser.close();
  }
}

collectData().catch(console.error);

A Note About Anti-Scraping Techniques

You may have noticed that the code above does not include any techniques to get past anti-bot systems. That is because this tutorial is minimal and meant as a proof of concept. In practice, if you build your own web scrapers without taking anti-scraping systems into account, your bot is likely to be blocked, often after only a few hundred requests. Many websites do not want to be scraped, because scraping usually adds no value for them and instead adds to their running costs. For this reason, they put anti-scraping systems in place to discourage web scraping and other forms of automated access.
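One simple way to reduce the load a scraper puts on a site (and to look less like a bot) is to slow down. The sketch below visits URLs one at a time with a random pause between requests; the 2 to 5 second range is an arbitrary assumption, and `politeDelayMs` and `scrapeSequentially` are hypothetical names. The download step is passed in as a function, so it could be the `fetchHTML` function from the static example.

```javascript
// Hypothetical helper: a random pause between minMs and maxMs (inclusive).
// `random` is injectable so the behaviour can be tested deterministically.
function politeDelayMs(minMs = 2000, maxMs = 5000, random = Math.random) {
  return minMs + Math.floor(random() * (maxMs - minMs + 1));
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Visit URLs one at a time, pausing between requests instead of firing them all at once
async function scrapeSequentially(urls, fetchPage, delay = politeDelayMs) {
  const results = [];
  for (const [i, url] of urls.entries()) {
    if (i > 0) await sleep(delay()); // no pause before the first request
    results.push(await fetchPage(url));
  }
  return results;
}

module.exports = { politeDelayMs, scrapeSequentially };
```

Randomizing the pause avoids the perfectly regular request timing that is easy to spot in server logs.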

The most popular anti-scraping techniques used by websites are IP tracking and blocking, and CAPTCHA systems. Some websites also use cookies, local storage, and browser fingerprinting to detect bot traffic. I therefore advise you to read the Axios documentation to learn how to use proxies and set the User-Agent string and other headers, and then how to rotate them between requests. For a more comprehensive guide on avoiding blocks, read the article How to Scrape Websites and Never Get Blocked.
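As a starting point for rotating headers and proxies with Axios, here is a sketch that builds a request config for the n-th request using the `headers`, `proxy`, and `timeout` options of an Axios request config. The user-agent strings and proxy addresses are placeholders (the IPs come from a range reserved for documentation), and `requestConfig` is a hypothetical helper.

```javascript
// Placeholder pools: replace with real user-agent strings and proxies you can use.
const USER_AGENTS = [
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
  "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15",
];
const PROXIES = [
  { protocol: "http", host: "203.0.113.10", port: 8080 },
  { protocol: "http", host: "203.0.113.11", port: 8080 },
];

// Hypothetical helper: build the Axios request config for the n-th request,
// cycling round-robin through the user agents and proxies.
function requestConfig(n) {
  return {
    headers: {
      "User-Agent": USER_AGENTS[n % USER_AGENTS.length],
      "Accept-Language": "en-US,en;q=0.9",
    },
    proxy: PROXIES[n % PROXIES.length],
    timeout: 10000,
  };
}

module.exports = { requestConfig };
```

Each request would then pass its own config, e.g. `axios.get(url, requestConfig(i))`. Round-robin rotation is the simplest scheme; random selection or retiring proxies that get blocked are common refinements.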

Conclusion

With the development of Node.js, anyone who has looked down on JavaScript should recognize that it is a general-purpose language like any other. Unlike in the past, you can now write web scrapers in JavaScript whose code does not need a browser to run: they run on a server or your local PC with the help of Node.js. Now go and scrape any website you like with JavaScript, Node.js, proxies, and anti-CAPTCHA services.
