This is a Plain English Papers summary of a research paper called Adam-mini: Use Fewer Learning Rates To Gain More. If you like these kinds of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

- The paper introduces a new optimization method called Adam-mini, which aims to improve the efficiency of the popular Adam optimizer by using fewer learning rates.

- Adam-mini modifies the standard Adam algorithm to use a single global learning rate instead of separate learning rates for each parameter.

- The authors claim that Adam-mini can achieve comparable or better performance than standard Adam while using significantly less memory.

Plain English Explanation

The Adam optimizer is a widely used technique in machine learning for updating the parameters of a model during training. Adam works by adjusting the learning rate for each parameter individually, which can help the model converge more quickly.

However, the authors of this paper argue that the per-parameter learning rates used by Adam can also be inefficient, as they require storing and updating a large number of additional variables. To address this, they propose a new method called Adam-mini, which uses a single global learning rate instead of separate rates for each parameter.

The key idea behind Adam-mini is that a single global learning rate can often be just as effective as the individual rates used in standard Adam, while requiring much less memory to store and update. This can be particularly beneficial for training large models or running on resource-constrained devices, where memory usage is a concern.

The authors demonstrate that Adam-mini can achieve comparable or even better performance than standard Adam on a variety of machine learning tasks, while using significantly less memory. This suggests that Adam-mini could be a useful alternative to the standard Adam optimizer in many practical applications.

Technical Explanation

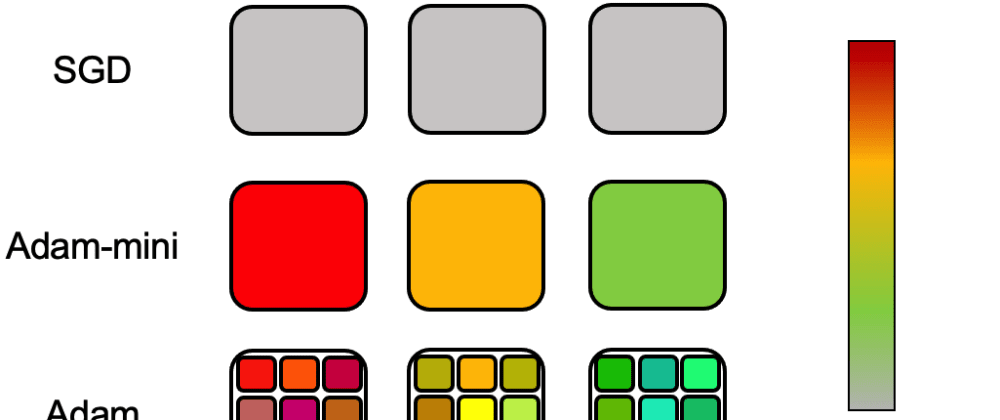

The Adam optimizer is a popular algorithm for training machine learning models, as it can often converge more quickly than traditional stochastic gradient descent. Adam works by maintaining separate adaptive learning rates for each parameter in the model, which are updated based on the first and second moments of the gradients.

While the adaptive learning rates used by Adam can be beneficial, they also come with a significant memory overhead, as the algorithm needs to store and update a large number of additional variables. This can be problematic for training large models or running on resource-constrained devices.

To address this issue, the authors of the paper propose a new optimization method called Adam-mini. In Adam-mini, the authors modify the standard Adam algorithm to use a single global learning rate instead of separate rates for each parameter. This reduces the memory footprint of the optimizer, as the algorithm only needs to maintain a small number of additional variables.

The authors show that Adam-mini can achieve comparable or even better performance than standard Adam on a variety of machine learning tasks, including image classification, language modeling, and reinforcement learning. They attribute this to the fact that a single global learning rate can often be just as effective as the individual rates used in standard Adam, especially for well-conditioned problems.

The authors also provide theoretical analysis to support their claims, showing that Adam-mini can achieve similar convergence guarantees to standard Adam under certain conditions. Additionally, they demonstrate that Adam-mini can be easily combined with other memory-efficient techniques, such as BADAM and ADALOMO, to further reduce the memory requirements of the optimization process.

Critical Analysis

One potential limitation of the Adam-mini approach is that the single global learning rate may not be as effective as the individual rates used in standard Adam for more complex or ill-conditioned optimization problems. In such cases, the additional flexibility provided by the per-parameter learning rates in standard Adam may be necessary to achieve optimal performance.

Additionally, the authors note that the performance of Adam-mini can be sensitive to the choice of hyperparameters, such as the initial learning rate and the momentum decay rates. Careful tuning of these hyperparameters may be required to achieve the best results, which could limit the practical applicability of the method in some scenarios.

Finally, while the authors demonstrate that Adam-mini can be combined with other memory-efficient techniques, it would be interesting to see how the method performs in comparison to other memory-efficient optimization algorithms, such as MicroAdam or HIFT. A more comprehensive comparison of these approaches could provide further insights into the relative strengths and weaknesses of the Adam-mini method.

Conclusion

The Adam-mini optimization method introduced in this paper offers a promising approach to improving the efficiency of the popular Adam optimizer. By using a single global learning rate instead of separate rates for each parameter, Adam-mini can achieve comparable or better performance while using significantly less memory.

This could be particularly beneficial for training large models or running on resource-constrained devices, where memory usage is a concern. While the method may have some limitations in certain optimization scenarios, the authors' theoretical and empirical results suggest that Adam-mini could be a useful addition to the suite of optimization techniques available to machine learning practitioners.

Overall, this paper provides an interesting contribution to the ongoing efforts to develop more efficient and memory-friendly optimization algorithms for machine learning applications.

If you enjoyed this summary, consider subscribing to the AImodels.fyi newsletter or following me on Twitter for more AI and machine learning content.

Top comments (0)