This is a Plain English Papers summary of a research paper called AI System Uses Multiple Data Types to Better Understand Human Emotions via Reinforcement Learning. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

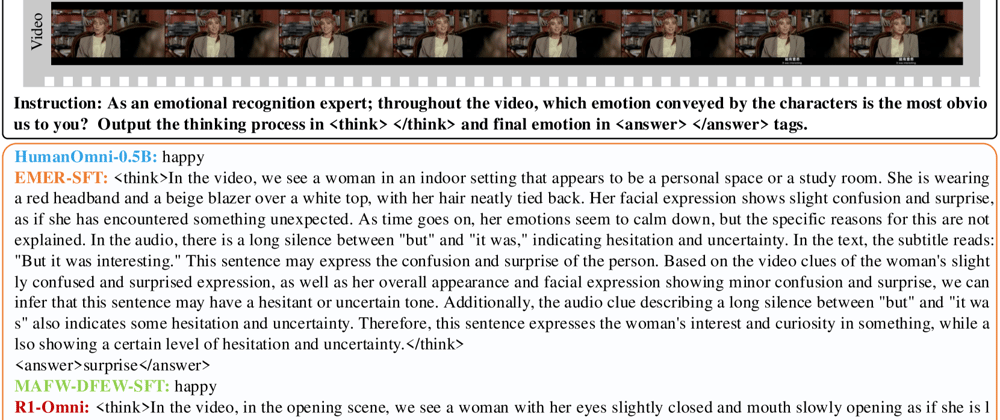

- R1-Omni is a new approach to emotion recognition using multiple modalities

- Combines reinforcement learning with emotion recognition

- Provides explainable results by showing which modalities influenced decisions

- Achieves state-of-the-art performance across multiple datasets

- Can handle any combination of audio, video, text, and biometric signals

- Uses a modality selection strategy to focus on the most relevant inputs
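
To make the last few bullets concrete, here is a minimal sketch of what selecting modalities and reporting their influence could look like. It is not the paper's method: the function `select_and_fuse`, the `relevance` scores, the `top_k` cutoff, and the softmax weighting are all assumptions made for illustration, and the reinforcement learning component is left out entirely. The sketch only shows the general idea of keeping the most relevant of whatever modalities are present, fusing their predictions, and returning the weights so a reader can see which inputs drove the decision.

```python
import math


def select_and_fuse(scores, relevance, top_k=2):
    """Pick the most relevant available modalities and fuse their predictions.

    scores:    {modality: {emotion: probability}} for the modalities present
    relevance: {modality: float}, a hypothetical relevance score per modality
    Returns (predicted_emotion, {modality: weight}).
    """
    # Only modalities that are actually present can be selected,
    # so any subset of audio, video, text, or biometrics is accepted.
    available = [m for m in scores if m in relevance]
    if not available:
        raise ValueError("no usable modality was provided")

    # Keep the top_k modalities by relevance (assumed selection rule).
    chosen = sorted(available, key=lambda m: relevance[m], reverse=True)[:top_k]

    # Softmax over the chosen relevance scores gives weights that sum to 1.
    peak = max(relevance[m] for m in chosen)
    exps = {m: math.exp(relevance[m] - peak) for m in chosen}
    total = sum(exps.values())
    weights = {m: exps[m] / total for m in chosen}

    # Weighted average of the per-modality emotion probabilities.
    fused = {}
    for m in chosen:
        for emotion, prob in scores[m].items():
            fused[emotion] = fused.get(emotion, 0.0) + weights[m] * prob

    # The weights double as a simple explanation of which inputs mattered.
    return max(fused, key=fused.get), weights


if __name__ == "__main__":
    example_scores = {
        "audio": {"happy": 0.2, "sad": 0.8},
        "video": {"happy": 0.9, "sad": 0.1},
        "text": {"happy": 0.6, "sad": 0.4},
    }
    example_relevance = {"audio": 0.1, "video": 2.0, "text": 1.0}
    print(select_and_fuse(example_scores, example_relevance))
```

Returning the weights alongside the label is the design choice that matters here: it is the simplest way to expose which modalities influenced a given prediction.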

Plain English Explanation

Emotions are complex. Detecting them accurately requires more than just looking at someone's face or listening to their voice. The R1-Omni system tackles this challenge by combining different types of information: facial expressions, voice tone, words spoken, and even biological...
