This is a Plain English Papers summary of a research paper called Chain-of-Thought Unfaithfulness as Disguised Accuracy. If you like this kind of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

- This paper examines the phenomenon of "chain-of-thought unfaithfulness" in large language models, where the models produce reasoning that appears accurate but is actually disconnected from their true understanding.

- The authors propose a new technique called "chain-of-thought faithfulness testing" to evaluate the alignment between the models' reasoning outputs and their underlying knowledge.

- The paper also discusses related work on measuring the faithfulness and self-consistency of language models, as well as the inherent challenges in this area.

Plain English Explanation

Large language models, like those used in chatbots and virtual assistants, are remarkably good at generating human-like text. However, earlier research has shown that these models can sometimes produce "chain-of-thought" reasoning that appears correct but is actually disconnected from their true understanding.

Imagine a student who can recite facts and formulas but doesn't really understand the underlying concepts. They may be able to solve math problems step-by-step, but their reasoning is not grounded in a deeper comprehension of the material. Similarly, large language models can sometimes generate convincing-sounding explanations without truly grasping the meaning behind them.

This paper introduces a new approach called "chain-of-thought faithfulness testing" to better evaluate the alignment between a model's reasoning outputs and its actual knowledge. The authors draw inspiration from related work on measuring the faithfulness and self-consistency of language models, as well as the inherent challenges in this area.

By developing more rigorous testing methods, the researchers aim to gain a better understanding of when and why large language models exhibit "unfaithful" reasoning, and how to potentially address this issue. This is an important step in ensuring that these powerful AI systems are truly aligned with human knowledge and values, rather than just producing plausible-sounding output.

Technical Explanation

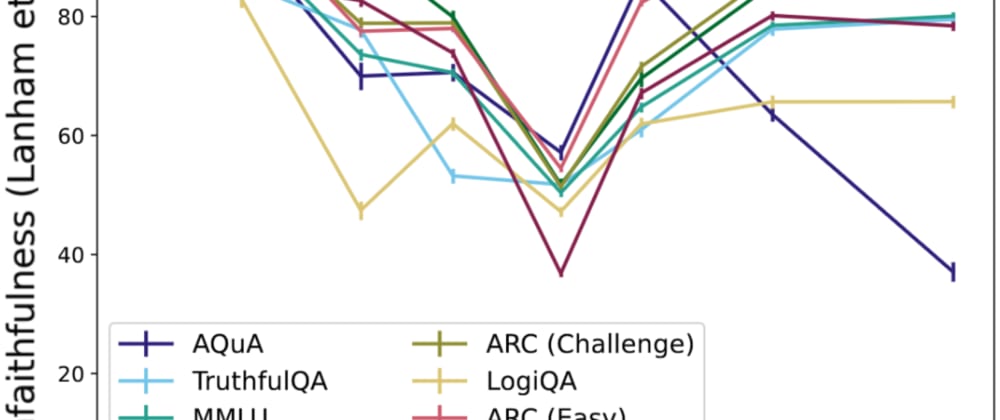

The paper introduces a new technique called "chain-of-thought faithfulness testing" to evaluate the alignment between the reasoning outputs of large language models and their underlying knowledge. This builds on previous research that has identified the phenomenon of "chain-of-thought unfaithfulness," where models can generate logically coherent reasoning that does not reflect how they actually arrived at their answer.

The approach builds on related work on measuring the faithfulness and self-consistency of language models, and on the known difficulties of doing so. The authors propose using a combination of automated and human-evaluated tests to assess the degree to which a model's stated reasoning aligns with its true understanding.
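The summary does not spell out what the automated tests look like. As a minimal sketch of one possible automated check, assume the model can be wrapped in a hypothetical function `answer_fn(question, steps)` that returns a final answer given a (possibly truncated) list of reasoning steps. The code below cuts the reasoning off at each step and measures how often the final answer changes. The function name `truncation_sensitivity` and the two toy models are illustrative assumptions, not the authors' implementation.

```python
from typing import Callable, List

# Hypothetical model interface (an assumption for this sketch):
# takes a question and a list of reasoning steps, returns a final answer.
AnswerFn = Callable[[str, List[str]], str]


def truncation_sensitivity(answer_fn: AnswerFn, question: str, steps: List[str]) -> float:
    """Fraction of truncation points where the answer differs from the
    answer given the full chain of thought.

    A score near 0 suggests the answer does not depend on the stated
    reasoning; a score near 1 suggests it does.
    """
    if not steps:
        return 0.0
    full_answer = answer_fn(question, steps)
    changed = 0
    # Keep only the first k steps, for k = 0 .. len(steps) - 1.
    for k in range(len(steps)):
        if answer_fn(question, steps[:k]) != full_answer:
            changed += 1
    return changed / len(steps)


# Toy stand-ins for a real model, used only to exercise the metric.
def toy_ignores_reasoning(question: str, steps: List[str]) -> str:
    # Always gives the same answer, whatever reasoning it is shown.
    return "42"


def toy_uses_reasoning(question: str, steps: List[str]) -> str:
    # Answer depends on how much reasoning it has seen.
    return str(len(steps))


steps = ["step one", "step two", "step three"]
print(truncation_sensitivity(toy_ignores_reasoning, "q", steps))
print(truncation_sensitivity(toy_uses_reasoning, "q", steps))
```

With a real model, `answer_fn` would prompt the model with the question plus the truncated reasoning and parse its final answer. A low score is only a signal worth investigating: a model might simply know the answer without needing the reasoning.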

The paper also discusses the direct evaluation of chain-of-thought reasoning and the potential for dissociation between faithful and unfaithful reasoning in large language models. These insights help inform the development of the proposed faithfulness testing approach.

Critical Analysis

The paper raises important concerns about the potential disconnect between the reasoning outputs of large language models and their actual understanding. While the authors' proposed "chain-of-thought faithfulness testing" approach is a valuable contribution, it also highlights the inherent challenges in accurately measuring the faithfulness of these models.

One potential limitation is the subjective nature of the human-evaluated tests, which may be influenced by individual biases and interpretations. Additionally, the paper does not address the potential for models to adapt their reasoning in response to specific testing scenarios, which could undermine the validity of the results.

Furthermore, the paper does not delve into the underlying causes of "chain-of-thought unfaithfulness," nor does it propose concrete solutions to address this issue. Exploring the cognitive and architectural factors that lead to this phenomenon could be an important area for future research.

Overall, this paper raises important questions about the need for more rigorous and transparent evaluation of large language models, to ensure that their outputs are truly aligned with human knowledge and values. As these models become more ubiquitous, it is crucial to develop robust testing methodologies that can reliably assess their faithfulness and self-consistency.

Conclusion

This paper explores the concept of "chain-of-thought unfaithfulness" in large language models, where the models' reasoning outputs appear accurate but are actually disconnected from their true understanding. The authors introduce a new technique called "chain-of-thought faithfulness testing" to better evaluate the alignment between the models' reasoning and their underlying knowledge.

The work builds on earlier efforts to measure the faithfulness and self-consistency of language models. More rigorous testing should help clarify when and why these models reason "unfaithfully," and how the problem might be addressed.

Ensuring the faithfulness of large language models is a crucial step in aligning these powerful AI systems with human knowledge and values, rather than just producing plausible-sounding output. The insights and approaches presented in this paper represent an important contribution to this ongoing effort.
