This is a Plain English Papers summary of a research paper called Improving Text Embeddings with Large Language Models. If you like this kind of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

- The paper explores techniques for improving text embeddings, which are numerical representations of text used in natural language processing tasks such as search, clustering, and classification.

- The researchers propose using large language models, which are powerful AI systems trained on vast amounts of text data, to enhance the quality of text embeddings.

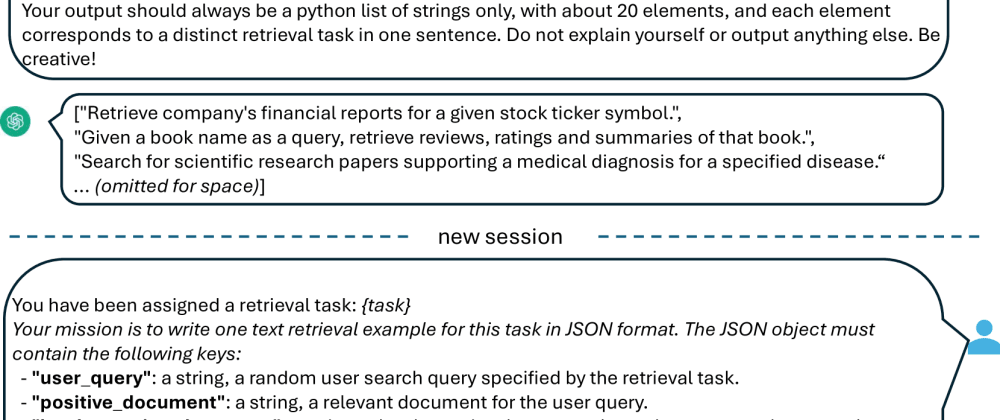

- The paper presents a method that uses large language models to generate synthetic training data, then fine-tunes a large language model on that data to improve its text embedding capabilities.

- The researchers also discuss related work in the field of text embedding enhancement and the potential benefits of their approach.

Plain English Explanation

The paper is about a way to make text embeddings better. Text embeddings are lists of numbers that represent the meaning of a piece of text, from a short phrase to a whole document, and they're used in all kinds of language AI tasks, such as search. The researchers found that big, powerful language models, the kind that can write whole essays, can help improve these text embeddings.

In their method, a large language model generates synthetic (artificially created) training examples, which are then used to fine-tune a language model. This helps the model learn better ways to turn text into useful numbers. The researchers explain how this builds on previous work in this area, and they discuss the potential benefits of their approach.

Technical Explanation

The paper presents a method for improving text embeddings using large language models. Text embeddings are numerical representations of text that capture semantic and syntactic information, and they are a crucial component in many natural language processing tasks.
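To make "numerical representation" concrete: once texts are turned into vectors, closeness in meaning is commonly measured with cosine similarity. The minimal sketch below uses small hand-picked vectors as a stand-in for real model output; the texts and numbers are illustrative assumptions, not values from the paper.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means orthogonal."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Hypothetical 4-dimensional embeddings chosen by hand for illustration;
# real embedding models output hundreds or thousands of dimensions.
embeddings = {
    "How do I reset my password?": [0.9, 0.1, 0.0, 0.2],
    "Steps to change a forgotten password": [0.8, 0.2, 0.1, 0.3],
    "Best hiking trails near Denver": [0.0, 0.1, 0.9, 0.4],
}

query = "How do I reset my password?"
for text, vector in embeddings.items():
    if text != query:
        score = cosine_similarity(embeddings[query], vector)
        print(f"{score:.3f}  {text}")
```

With these toy vectors, the related password question scores roughly 0.98 and the hiking query roughly 0.10; a better embedding model is one whose vectors produce this kind of separation on real text.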

The researchers use proprietary large language models, such as GPT-4, to generate diverse synthetic training data covering hundreds of thousands of text embedding tasks in nearly 100 languages. They then fine-tune an open-source decoder-only language model, Mistral-7B, on this data using a standard contrastive loss, which takes fewer than 1,000 training steps. The fine-tuned model learns better representations of text and can then be used directly to generate high-quality text embeddings.
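The paper fine-tunes its embedding model with a standard contrastive loss: each query should be more similar to its matching document than to the other documents it is compared against. Below is a minimal pure-Python sketch of an InfoNCE-style version of that loss operating on precomputed vectors. It is an illustration, not the authors' training code; the temperature of 0.05, the vector sizes, and the random vectors standing in for model outputs are all assumptions.

```python
import math
import random


def normalize(v: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def info_nce_loss(queries, positives, hard_negatives, temperature=0.05):
    """Mean InfoNCE loss over a batch of embedding vectors.

    For query i, positives[i] is its matching document. All other positives in the
    batch (in-batch negatives) and every hard negative act as negatives.
    """
    candidates = positives + hard_negatives  # index i is query i's positive
    total = 0.0
    for i, q in enumerate(queries):
        # Cosine similarity scaled by 1 / temperature, one logit per candidate.
        logits = [cosine(q, d) / temperature for d in candidates]
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denominator - logits[i]  # -log softmax probability of the match
    return total / len(queries)


rng = random.Random(0)


def rand_vec(dim: int = 8) -> list[float]:
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


queries = [rand_vec() for _ in range(4)]
good_positives = [[x + 0.1 * rng.gauss(0.0, 1.0) for x in q] for q in queries]
unrelated_positives = [rand_vec() for _ in range(4)]
hard_negatives = [rand_vec() for _ in range(4)]

aligned_loss = info_nce_loss(queries, good_positives, hard_negatives)
random_loss = info_nce_loss(queries, unrelated_positives, hard_negatives)
print(f"aligned: {aligned_loss:.4f}  unrelated: {random_loss:.4f}")
```

Putting the positives and hard negatives into one candidate list scores each query against other queries' positives as well as explicit hard negatives in a single softmax, so the loss is low when matching pairs are close and non-matching pairs are far apart.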

The researchers evaluate their approach on the BEIR and MTEB text embedding benchmarks. Trained on synthetic data alone, without any labeled data, the model already performs strongly; when fine-tuned on a mixture of synthetic and labeled data, it sets new state-of-the-art results on both benchmarks.
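The paper's evaluations use the BEIR and MTEB benchmarks, and for retrieval tasks both report nDCG@10, which rewards placing relevant documents near the top of a ranking. A minimal single-query sketch follows; it uses linear gains (one common convention), and the document ids and judgments are hypothetical. A benchmark score averages this value over all queries.

```python
import math


def ndcg_at_k(ranked_doc_ids: list[str], relevance: dict[str, int], k: int = 10) -> float:
    """nDCG@k for a single query, using linear gains.

    ranked_doc_ids: document ids sorted by the model's similarity score, best first.
    relevance: graded relevance judgments; ids not listed count as 0 (not relevant).
    """
    def dcg(gains: list[float]) -> float:
        # Rank 1 is discounted by log2(2) = 1, rank 2 by log2(3), and so on.
        return sum(g / math.log2(rank + 2) for rank, g in enumerate(gains))

    gains = [relevance.get(doc_id, 0) for doc_id in ranked_doc_ids[:k]]
    ideal_gains = sorted(relevance.values(), reverse=True)[:k]
    ideal = dcg(ideal_gains)
    return dcg(gains) / ideal if ideal > 0 else 0.0


# Hypothetical judgments and ranking: d1 and d2 are relevant; the model ranked d1 second
# and missed d2 entirely.
judgments = {"d1": 1, "d2": 1}
ranking = ["d3", "d1", "d7"]
print(f"nDCG@10 = {ndcg_at_k(ranking, judgments):.4f}")
```

Here the score is about 0.39: finding only one of two relevant documents, and at rank 2 rather than rank 1, is penalized on both counts.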

Critical Analysis

The paper presents a promising approach for improving text embeddings, but it also acknowledges several limitations and areas for further research. One limitation is that the method relies on the availability of large, high-quality language models, which may not be accessible to all researchers and developers.

Additionally, the researchers note that the performance of their approach may be sensitive to the quality and diversity of the synthetic data used for fine-tuning. Generating high-quality synthetic data that is representative of real-world text can be challenging, and this could impact the effectiveness of the method.

Furthermore, the paper does not explore the potential biases or fairness implications of using large language models, which are known to exhibit biases present in their training data. This is an important consideration that should be addressed in future research on this topic.

Conclusion

Overall, the paper presents a novel approach for enhancing text embeddings using large language models and synthetic data generation. The researchers demonstrate promising results and highlight the potential benefits of their method, which could have wide-ranging applications in natural language processing and beyond.

However, the work also raises important questions about the limitations and potential pitfalls of this approach, which should be carefully considered by researchers and practitioners in the field. As with any emerging technology, it is crucial to think critically about the implications and to continue exploring ways to improve the robustness and fairness of text embedding systems.
