This is a Plain English Papers summary of a research paper called "New AI Model Merging Technique Makes Language Models Forget Unwanted Information More Efficiently". If you like this kind of analysis, join AImodels.fyi or follow us on Twitter.

Overview

- Team ZJUKLAB tackled model unlearning at SemEval-2025 Task 4

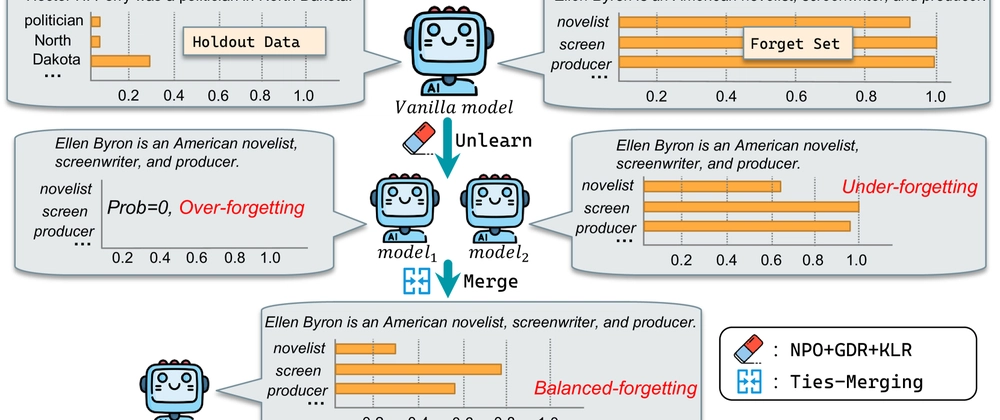

- Developed an innovative model merging approach for efficient unlearning

- Combined a base model with a model fine-tuned on retain data

- Used weight interpolation with task-specific parameter selection

- Achieved competitive performance while maintaining utility

- Demonstrated better unlearning efficiency than gradient-based methods
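To make the weight interpolation idea in the list above concrete, here is a minimal sketch in plain Python. It is not the team's implementation: the function name `merge_weights`, the mixing coefficient `alpha`, the toy parameter names, and the choice to select parameters by name are all assumptions made for illustration, and the paper's actual selection rule may differ.

```python
from typing import Dict, List, Set

# A toy "model": parameter name -> flat list of weights (assumption for illustration).
Params = Dict[str, List[float]]


def merge_weights(
    base: Params,
    retain_tuned: Params,
    alpha: float,
    selected: Set[str],
) -> Params:
    """Linearly interpolate the selected parameters; copy the rest from base.

    alpha = 0.0 keeps the base weights, alpha = 1.0 takes the retain-tuned weights.
    """
    merged: Params = {}
    for name, base_values in base.items():
        if name in selected:
            tuned_values = retain_tuned[name]
            merged[name] = [
                (1.0 - alpha) * b + alpha * t
                for b, t in zip(base_values, tuned_values)
            ]
        else:
            # Parameters outside the selection are left untouched.
            merged[name] = list(base_values)
    return merged


# Hypothetical two-parameter models, used only to show the arithmetic.
base = {"attn.weight": [1.0, 2.0], "mlp.weight": [0.0, 4.0]}
retain_tuned = {"attn.weight": [3.0, 2.0], "mlp.weight": [2.0, 0.0]}

merged = merge_weights(base, retain_tuned, alpha=0.5, selected={"attn.weight"})
print(merged)
```

In this toy run only `attn.weight` is interpolated, so it moves halfway toward the retain-tuned values while `mlp.weight` stays at its base values. With real models the same loop would run over framework tensors rather than Python lists.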

Plain English Explanation

When we teach AI models, they learn everything we show them. But sometimes we need them to forget specific information - like private data or harmful content. This is called "machine unlearning."

Team ZJUKLAB created a clever way to make AI models forget certain things without...
